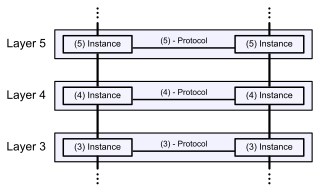

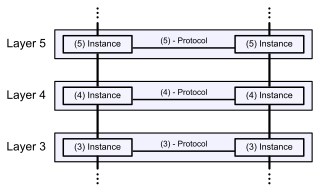

The Open Systems Interconnection (OSI) model is a reference model from the International Organization for Standardization (ISO) that "provides a common basis for the coordination of standards development for the purpose of systems interconnection." In the OSI reference model, the communications between systems are split into seven different abstraction layers: Physical, Data Link, Network, Transport, Session, Presentation, and Application.
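The seven layers can be written down as an ordered enumeration, which makes the "stack" relationship between them explicit. This is only an illustrative sketch: the names `OSILayer` and `layers_below` are made up for this example and are not part of any standard or library.

```python
from enum import IntEnum


class OSILayer(IntEnum):
    """The seven OSI layers, numbered from the lowest (1) to the highest (7)."""
    PHYSICAL = 1
    DATA_LINK = 2
    NETWORK = 3
    TRANSPORT = 4
    SESSION = 5
    PRESENTATION = 6
    APPLICATION = 7


def layers_below(layer: OSILayer) -> list[OSILayer]:
    """Return the layers underneath the given layer, lowest first."""
    return [candidate for candidate in OSILayer if candidate < layer]


if __name__ == "__main__":
    # List the whole stack from top to bottom.
    for layer in sorted(OSILayer, reverse=True):
        print(f"Layer {layer.value}: {layer.name.replace('_', ' ').title()}")
```

Using an `IntEnum` keeps the conventional layer numbers attached to the names, so comparisons such as "is this layer below the Transport layer" become plain integer comparisons.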

A quality management system (QMS) is a collection of business processes focused on consistently meeting customer requirements and enhancing customer satisfaction. It is aligned with an organization's purpose and strategic direction, and is expressed as the organizational goals and aspirations, policies, processes, documented information, and resources needed to implement and maintain it. Early quality management systems emphasized predictable outcomes of an industrial production line, using simple statistics and random sampling. By the 20th century, labor inputs were typically the most costly inputs in most industrialized societies, so the focus shifted to team cooperation and dynamics, especially the early signaling of problems via a continual improvement cycle. In the 21st century, QMS has tended to converge with sustainability and transparency initiatives, as both investor and customer satisfaction and perceived quality are increasingly tied to these factors. Of QMS regimes, the ISO 9000 family of standards is probably the most widely implemented worldwide. The ISO 19011 audit regime covers both quality and sustainability, as well as their integration.

Interoperability is a characteristic of a product or system to work with other products or systems. While the term was initially defined for information technology or systems engineering services to allow for information exchange, a broader definition takes into account social, political, and organizational factors that impact system-to-system performance.

The Organization for the Advancement of Structured Information Standards (OASIS) is a nonprofit consortium that works on the development, convergence, and adoption of projects, both open standards and open source, for computer security, blockchain, Internet of things (IoT), emergency management, cloud computing, legal data exchange, energy, content technologies, and other areas.

An open standard is a standard that is openly accessible and usable by anyone. A common prerequisite is that open standards use an open license that provides for extensibility. Typically, anybody can participate in their development because of their inherently open nature. There is no single definition, and interpretations vary with usage. Examples of open standards include the GSM, 4G, and 5G standards that allow most modern mobile phones to work worldwide.

In industry, product lifecycle management (PLM) is the process of managing the entire lifecycle of a product from its inception through the engineering, design and manufacture, as well as the service and disposal of manufactured products. PLM integrates people, data, processes, and business systems and provides a product information backbone for companies and their extended enterprises.

Business process modeling (BPM), mainly used in business process management, software development, or systems engineering, is the action of capturing and representing the processes of an enterprise so that the current business processes may be analyzed, applied securely and consistently, improved, and automated. BPM is typically orchestrated by business analysts, who bring expertise in modeling practices. Subject matter experts with specialized knowledge of the processes being modeled often collaborate within these teams. Alternatively, process models can be derived directly from digital traces within IT systems, such as event logs, using process mining tools.

ISO 10303 is an ISO standard for the computer-interpretable representation and exchange of product manufacturing information. It is an ASCII-based format. Its official title is: Automation systems and integration — Product data representation and exchange. It is known informally as "STEP", which stands for "Standard for the Exchange of Product model data". ISO 10303 can represent 3D objects in Computer-aided design (CAD) and related information.
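The ASCII form mentioned above is the clear-text encoding defined in Part 21 of the standard: a file opens with `ISO-10303-21;`, contains a `HEADER;` section and a `DATA;` section (each closed by `ENDSEC;`), and ends with `END-ISO-10303-21;`. The following minimal sketch reads entity instances from the data section. The sample text is invented and deliberately simplified (a real header needs further entries), and the function name `read_entities` is hypothetical. It assumes one instance per line, which real files do not guarantee.

```python
import re

# Invented, simplified sample; not a complete or valid exchange file.
SAMPLE = """ISO-10303-21;
HEADER;
FILE_DESCRIPTION(('example'),'2;1');
ENDSEC;
DATA;
#1=CARTESIAN_POINT('origin',(0.,0.,0.));
#2=DIRECTION('z',(0.,0.,1.));
ENDSEC;
END-ISO-10303-21;
"""

# Matches lines such as: #12=ENTITY_NAME(parameters);
ENTITY_RE = re.compile(r"#(\d+)\s*=\s*([A-Z0-9_]+)\s*\((.*)\)\s*;")


def read_entities(text: str) -> dict[int, tuple[str, str]]:
    """Map instance id -> (entity name, raw parameter string) for the DATA section."""
    entities: dict[int, tuple[str, str]] = {}
    in_data = False
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line == "DATA;":
            in_data = True
        elif line == "ENDSEC;":
            in_data = False
        elif in_data:
            match = ENTITY_RE.fullmatch(line)
            if match:
                entities[int(match.group(1))] = (match.group(2), match.group(3))
    return entities


if __name__ == "__main__":
    for instance_id, (name, params) in read_entities(SAMPLE).items():
        print(instance_id, name, params)
```

The parameters are kept as raw strings on purpose. Interpreting them properly requires the EXPRESS schema the file refers to, which is beyond what a line-based sketch like this can handle.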

Quality management ensures that an organization, product, or service consistently functions well. It has four main components: quality planning, quality assurance, quality control, and quality improvement. Quality management is focused both on product and service quality and on the means to achieve it. It therefore uses quality assurance and control of processes as well as products to achieve more consistent quality. Quality is determined by what a customer wants and is willing to pay for. It is a written or unwritten commitment to a known or unknown consumer in the market. Quality can be defined as how well the product performs its intended function.

JT is an openly published, ISO-standardized 3D CAD data exchange format used for product visualization, collaboration, digital mockups, and other purposes. It was developed by Siemens.

ISO 15926 is a standard for data integration, sharing, exchange, and hand-over between computer systems.

International standards in the ISO/IEC 19770 family of standards for IT asset management address both the processes and technology for managing software assets and related IT assets. Broadly speaking, the standard family belongs to the set of Software Asset Management standards and is integrated with other Management System Standards.

Knowledge Discovery Metamodel (KDM) is a publicly available specification from the Object Management Group (OMG). KDM is a common intermediate representation for existing software systems and their operating environments, that defines common metadata required for deep semantic integration of Application Lifecycle Management tools. KDM was designed as the OMG's foundation for software modernization, IT portfolio management and software assurance. KDM uses OMG's Meta-Object Facility to define an XMI interchange format between tools that work with existing software as well as an abstract interface (API) for the next-generation assurance and modernization tools. KDM standardizes existing approaches to knowledge discovery in software engineering artifacts, also known as software mining.

An independent test organization is an organization, person, or company that tests products, materials, software, etc. according to agreed requirements. The test organization can be affiliated with the government or universities, or can be an independent testing laboratory. Such organizations are independent because they are affiliated with neither the producer nor the user of the item being tested, so no commercial bias is present. These "contract testing" facilities are sometimes called "third party" testing or evaluation facilities.

A specification often refers to a set of documented requirements to be satisfied by a material, design, product, or service. A specification is often a type of technical standard.

ISO 8000 is the global standard for Data Quality and Enterprise Master Data. It describes the features and defines the requirements for standard exchange of Master Data among business partners. It establishes the concept of Portability as a requirement for Enterprise Master Data, and the concept that true Enterprise Master Data is unique to each organization.

Energistics is a global, non-profit, industry consortium that facilitates an inclusive user community for the development, adoption and maintenance of collaborative, open standards for the energy industry in general and specifically for oil and gas exploration and production.

POSC Caesar Association (PCA) is an international, open, not-for-profit member organization that promotes the development of open specifications to be used as standards for enabling the interoperability of data, software, and related matters.

Open Automated Demand Response (OpenADR) is a research and standards development effort for energy management led by North American research labs and companies. The typical use is to send information and signals to cause electrical power-using devices to be turned off during periods of high demand.

The Seabed Survey Data Model (SSDM) is an industry standard data model for how seabed survey data is stored and managed by oil and gas companies. The International Association of Oil & Gas Producers (IOGP) developed and published this standard in October 2011. Many surveys have been successfully delivered in SSDM.