Information quality (InfoQ) is the potential of a data set to achieve a specific (scientific or practical) goal using a given empirical analysis method.

Formally, the definition is InfoQ = U(X,f|g) where X is the data, f the analysis method, g the goal and U the utility function. InfoQ is different from data quality and analysis quality, but is dependent on these components and on the relationship between them.

InfoQ has been applied in a wide range of domains, including healthcare, customer surveys, data science programs, advanced manufacturing and Bayesian network applications.

Kenett and Shmueli (2014) proposed eight dimensions to help assess InfoQ, together with various methods for increasing it. The eight dimensions are: data resolution, data structure, data integration, temporal relevance, chronology of data and goal, generalization, operationalization, and communication. [1] [2] [3]
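
As an illustration of how ratings on the eight dimensions might be combined into a single number, the sketch below maps each rating to a value between 0 and 1 and takes the geometric mean. The 1 to 5 rating scale, the linear mapping, and the function names are assumptions of this example, not details given in the text above.

```python
from math import prod

# The eight InfoQ dimensions listed by Kenett and Shmueli (2014).
DIMENSIONS = [
    "Data resolution",
    "Data structure",
    "Data integration",
    "Temporal relevance",
    "Chronology of data and goal",
    "Generalization",
    "Operationalization",
    "Communication",
]


def desirability(rating: int) -> float:
    """Map a rating on an assumed 1-5 scale linearly onto [0, 1]."""
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    return (rating - 1) / 4


def infoq_score(ratings: dict[str, int]) -> float:
    """Geometric mean of the per-dimension desirabilities (illustrative)."""
    missing = set(DIMENSIONS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    values = [desirability(ratings[d]) for d in DIMENSIONS]
    return prod(values) ** (1 / len(values))
```

A geometric mean is used here so that a very poor rating on any single dimension pulls the overall score towards zero, which an arithmetic mean would not do.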

In biology, phylogenetics is the study of the evolutionary history and relationships among or within groups of organisms. These relationships are determined by phylogenetic inference methods that focus on observed heritable traits, such as DNA sequences, protein amino acid sequences, or morphology. The result of such an analysis is a phylogenetic tree—a diagram containing a hypothesis of relationships that reflects the evolutionary history of a group of organisms.

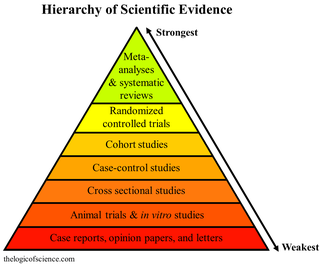

A meta-analysis is a statistical analysis that combines the results of multiple scientific studies. Meta-analyses can be performed when there are multiple scientific studies addressing the same question, with each individual study reporting measurements that are expected to have some degree of error. The aim then is to use approaches from statistics to derive a pooled estimate closest to the unknown common truth based on how this error is perceived. It is thus a basic methodology of metascience. Meta-analytic results are considered the most trustworthy source of evidence by the evidence-based medicine literature.
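
One common way to derive such a pooled estimate is fixed-effect inverse-variance weighting, in which each study is weighted by the reciprocal of its squared standard error. The sketch below is a minimal illustration under that assumption; the function name and inputs are hypothetical, and real meta-analyses often use random-effects models instead.

```python
from math import sqrt


def pooled_estimate(effects, std_errors):
    """Fixed-effect inverse-variance pooling of study effect estimates.

    Returns the pooled estimate and its standard error.
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total
    return estimate, sqrt(1.0 / total)
```

More precise studies (smaller standard errors) contribute more to the result, and the pooled standard error is never larger than that of the most precise study.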

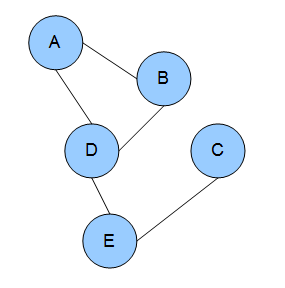

A Bayesian network is a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG). It is one of several forms of causal notation. Bayesian networks are ideal for taking an event that occurred and predicting the likelihood that any one of several possible known causes was the contributing factor. For example, a Bayesian network could represent the probabilistic relationships between diseases and symptoms. Given symptoms, the network can be used to compute the probabilities of the presence of various diseases.
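
The disease and symptom example can be sketched with the smallest possible network, a single edge Disease → Symptom. All probabilities and names below are made up for illustration; the posterior is obtained by enumerating the joint distribution.

```python
# Hypothetical prior over the parent node (Disease).
PRIOR = {"flu": 0.10, "cold": 0.20, "none": 0.70}

# Hypothetical conditional probability table: P(fever = True | Disease).
P_FEVER_GIVEN = {"flu": 0.90, "cold": 0.20, "none": 0.05}


def posterior_given_fever(prior, p_fever_given):
    """Return P(Disease | fever = True) by enumeration over the parent node."""
    joint = {d: prior[d] * p_fever_given[d] for d in prior}
    evidence = sum(joint.values())  # P(fever = True)
    return {d: joint[d] / evidence for d in joint}


posterior = posterior_given_fever(PRIOR, P_FEVER_GIVEN)
```

With these numbers, observing a fever raises the probability of flu from its prior of 0.10 to a little over one half.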

In probability theory and statistics, the gamma distribution is a two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-squared distribution are special cases of the gamma distribution. There are two equivalent parameterizations in common use: one with a shape parameter k and a scale parameter θ, and one with a shape parameter α = k and a rate parameter β = 1/θ.
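
As a numerical check, the sketch below writes the gamma density in a shape-scale form (k, θ) and in a shape-rate form (α, β), where β = 1/θ. The function names are illustrative; only the standard-library `math.gamma` and `math.exp` are used.

```python
from math import exp, gamma


def gamma_pdf_shape_scale(x, k, theta):
    """Gamma density with shape k and scale theta, for x > 0."""
    return x ** (k - 1) * exp(-x / theta) / (gamma(k) * theta ** k)


def gamma_pdf_shape_rate(x, alpha, beta):
    """Gamma density with shape alpha and rate beta, for x > 0."""
    return beta ** alpha * x ** (alpha - 1) * exp(-beta * x) / gamma(alpha)
```

Setting the shape to 1 in either form gives the exponential distribution, one of the special cases mentioned above.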

The posterior probability is a type of conditional probability that results from updating the prior probability with information summarized by the likelihood via an application of Bayes' rule. From an epistemological perspective, the posterior probability contains everything there is to know about an uncertain proposition, given prior knowledge and a mathematical model describing the observations available at a particular time. After the arrival of new information, the current posterior probability may serve as the prior in another round of Bayesian updating.
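
The idea that a posterior can serve as the next prior is easy to see in a conjugate model. The sketch below assumes a Beta prior on a success probability with binomial data, a standard textbook case chosen here for illustration; the function name is hypothetical.

```python
def update_beta(alpha, beta, successes, failures):
    """Beta(alpha, beta) prior + binomial data -> Beta posterior parameters."""
    return alpha + successes, beta + failures


prior = (1, 1)  # uniform prior on the success probability

# Two rounds of updating: the first posterior is the second round's prior.
after_first = update_beta(*prior, successes=3, failures=1)
after_second = update_beta(*after_first, successes=2, failures=4)

# A single update with all the data pooled gives the same posterior.
all_at_once = update_beta(*prior, successes=5, failures=5)
```

Updating in two rounds or in one batch yields identical parameters, which is what makes sequential Bayesian updating coherent.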

The Akaike information criterion (AIC) is an estimator of prediction error and thereby relative quality of statistical models for a given set of data. Given a collection of models for the data, AIC estimates the quality of each model, relative to each of the other models. Thus, AIC provides a means for model selection.
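
AIC is computed as 2k − 2 ln(L̂), where k is the number of estimated parameters and L̂ is the maximized likelihood; lower values are preferred. The sketch below applies this formula to a set of candidate models. The model names and log-likelihood values are invented for illustration.

```python
def aic(log_likelihood, n_params):
    """Akaike information criterion: 2k - 2 ln(L-hat)."""
    return 2 * n_params - 2 * log_likelihood


def select_by_aic(candidates):
    """Return the name of the candidate with the lowest AIC.

    candidates maps a model name to (maximized log-likelihood, n_params).
    """
    return min(candidates, key=lambda name: aic(*candidates[name]))


# Hypothetical fits: the larger model fits slightly better but uses more parameters.
models = {"linear": (-102.0, 2), "cubic": (-101.5, 4)}
best = select_by_aic(models)
```

Here the cubic model's small gain in log-likelihood does not offset the penalty for its two extra parameters, so the linear model is selected.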

Multiple-criteria decision-making (MCDM) or multiple-criteria decision analysis (MCDA) is a sub-discipline of operations research that explicitly evaluates multiple conflicting criteria in decision making. Conflicting criteria are typical in evaluating options: cost or price is usually one of the main criteria, and some measure of quality is typically another criterion, easily in conflict with the cost. In purchasing a car, cost, comfort, safety, and fuel economy may be some of the main criteria we consider – it is unusual that the cheapest car is the most comfortable and the safest one. In portfolio management, managers are interested in getting high returns while simultaneously reducing risks; however, the stocks that have the potential of bringing high returns typically carry high risk of losing money. In a service industry, customer satisfaction and the cost of providing service are fundamental conflicting criteria.
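
One basic tool for conflicting criteria is to discard dominated options, leaving the Pareto-optimal set for the decision maker to choose from. The sketch below applies this to the car example; the cars, prices, and safety scores are hypothetical, and every criterion is encoded so that higher is better (cost is negated).

```python
def dominates(a, b):
    """True if a is at least as good as b on every criterion and better on one.

    All criteria are assumed to be 'higher is better'.
    """
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(options):
    """Names of the options that no other option dominates."""
    return {
        name
        for name, score in options.items()
        if not any(dominates(other, score) for other in options.values())
    }


# (negated price, safety rating) for three hypothetical cars.
cars = {
    "budget": (-15000, 3.0),
    "family": (-25000, 4.5),
    "overpriced": (-30000, 4.0),
}
front = pareto_front(cars)
```

The "overpriced" car is both more expensive and less safe than the "family" car, so it drops out; choosing between the two remaining cars requires a trade-off between cost and safety.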

In the domain of physics and probability, a Markov random field (MRF), Markov network or undirected graphical model is a set of random variables having a Markov property described by an undirected graph. In other words, a random field is said to be a Markov random field if it satisfies Markov properties. The concept originates from the Sherrington–Kirkpatrick model.

Sensor fusion is the process of combining sensor data, or data derived from disparate sources, so that the resulting information has less uncertainty than would be possible if these sources were used individually. For instance, one could potentially obtain a more accurate location estimate of an indoor object by combining multiple data sources such as video cameras and WiFi localization signals. The term "uncertainty reduction" in this case can mean more accurate, more complete, or more dependable, or it can refer to the result of an emerging view, such as stereoscopic vision.

Model selection is the task of choosing the best model from among various candidates on the basis of a performance criterion. In the context of machine learning and, more generally, statistical analysis, this may be the selection of a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected are well suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice.

Info-gap decision theory seeks to optimize robustness to failure under severe uncertainty, in particular applying sensitivity analysis of the stability radius type to perturbations in the value of a given estimate of the parameter of interest. It has some connections with Wald's maximin model; some authors distinguish them, others consider them instances of the same principle.

Ancestral reconstruction is the extrapolation back in time from measured characteristics of individuals to their common ancestors. It is an important application of phylogenetics, the reconstruction and study of the evolutionary relationships among individuals, populations or species to their ancestors. In the context of evolutionary biology, ancestral reconstruction can be used to recover different kinds of ancestral character states of organisms that lived millions of years ago. These states include the genetic sequence, the amino acid sequence of a protein, the composition of a genome, a measurable characteristic of an organism (phenotype), and the geographic range of an ancestral population or species. This is desirable because it allows us to examine parts of phylogenetic trees corresponding to the distant past, clarifying the evolutionary history of the species in the tree. Since modern genetic sequences are essentially a variation of ancient ones, access to ancient sequences may identify other variations and organisms which could have arisen from those sequences. In addition to genetic sequences, one might attempt to track the changing of one character trait to another, such as fins turning to legs.

Approximate Bayesian computation (ABC) constitutes a class of computational methods rooted in Bayesian statistics that can be used to estimate the posterior distributions of model parameters.
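
The simplest member of this class is rejection ABC: draw a parameter from the prior, simulate data, and keep the draw only if the simulated data are close enough to the observed data. The sketch below assumes a coin-flip model with a uniform prior on the heads probability; the function name, the distance measure, and the tolerance are choices made for this example.

```python
import random


def abc_rejection(observed_heads, n_flips, n_draws, tolerance, seed=0):
    """Rejection ABC for a coin's heads probability under a Uniform(0, 1) prior."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(n_draws):
        p = rng.random()  # draw a candidate parameter from the prior
        # Simulate n_flips coin flips with heads probability p.
        simulated = sum(rng.random() < p for _ in range(n_flips))
        # Keep the candidate if the simulated count is close to the observation.
        if abs(simulated - observed_heads) <= tolerance:
            accepted.append(p)
    return accepted


samples = abc_rejection(observed_heads=7, n_flips=10, n_draws=5000, tolerance=0)
posterior_mean = sum(samples) / len(samples)
```

The accepted draws approximate the posterior without ever evaluating the likelihood. With a tolerance of zero and this simple model they are samples from the exact posterior, which here is Beta(8, 4) with mean 2/3.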

In applied mathematics, topological data analysis (TDA) is an approach to the analysis of datasets using techniques from topology. Extraction of information from datasets that are high-dimensional, incomplete and noisy is generally challenging. TDA provides a general framework to analyze such data in a manner that is insensitive to the particular metric chosen and provides dimensionality reduction and robustness to noise. Beyond this, it inherits functoriality, a fundamental concept of modern mathematics, from its topological nature, which allows it to adapt to new mathematical tools.

Information is an abstract concept that refers to that which has the power to inform. At the most fundamental level, information pertains to the interpretation of that which may be sensed, or their abstractions. Any natural process that is not completely random and any observable pattern in any medium can be said to convey some amount of information. Whereas digital signals and other data use discrete signs to convey information, other phenomena and artifacts such as analogue signals, poems, pictures, music or other sounds, and currents convey information in a more continuous form. Information is not knowledge itself, but the meaning that may be derived from a representation through interpretation.

In statistics and machine learning, ensemble methods use multiple learning algorithms to obtain better predictive performance than could be obtained from any of the constituent learning algorithms alone. Unlike a statistical ensemble in statistical mechanics, which is usually infinite, a machine learning ensemble consists of only a concrete finite set of alternative models, but typically allows for much more flexible structure to exist among those alternatives.
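
A minimal example of an ensemble is majority voting over the predictions of several classifiers. In the sketch below the three sets of predictions and the true labels are invented; each hypothetical model makes one mistake on a different example.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-model label lists (all the same length) by majority vote."""
    return [Counter(column).most_common(1)[0][0] for column in zip(*predictions)]


def accuracy(predicted, truth):
    """Fraction of positions where the predicted label matches the truth."""
    return sum(p == t for p, t in zip(predicted, truth)) / len(truth)


truth = [1, 0, 1, 1]
model_predictions = [
    [1, 0, 1, 0],  # wrong on the fourth example
    [1, 1, 1, 1],  # wrong on the second example
    [0, 0, 1, 1],  # wrong on the first example
]
ensemble = majority_vote(model_predictions)
```

Because the individual errors do not coincide, the vote corrects all of them: each model scores 0.75 on its own while the ensemble matches every true label.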

The free energy principle is a theoretical framework suggesting that the brain reduces surprise or uncertainty by making predictions based on internal models and updating them using sensory input. It highlights the brain's objective of aligning its internal model with the external world to enhance prediction accuracy. This principle integrates Bayesian inference with active inference, where actions are guided by predictions and sensory feedback refines them. It has wide-ranging implications for comprehending brain function, perception, and action.

In statistics, econometrics, epidemiology, genetics and related disciplines, causal graphs are probabilistic graphical models used to encode assumptions about the data-generating process.

Galit Shmueli is a data scientist who works in Taiwan as Tsing Hua Distinguished Professor at the Institute of Service Science, National Tsing Hua University. She is the author of many textbooks in business statistics and is known for her work on information quality, and on clarifying the difference between explanations and predictions in statistical analyses.

Probabilistic numerics is an active field of study at the intersection of applied mathematics, statistics, and machine learning centering on the concept of uncertainty in computation. In probabilistic numerics, tasks in numerical analysis such as finding numerical solutions for integration, linear algebra, optimization and simulation and differential equations are seen as problems of statistical, probabilistic, or Bayesian inference.