In mathematics, Jensen's inequality, named after the Danish mathematician Johan Jensen, relates the value of a convex function of an integral to the integral of the convex function. It was proved by Jensen in 1906, building on an earlier proof of the same inequality for twice-differentiable functions by Otto Hölder in 1889. Given its generality, the inequality appears in many forms depending on the context, some of which are presented below. In its simplest form, the inequality states that the convex transformation of a mean is less than or equal to the mean of the convex transformation; as a simple corollary, the opposite holds for concave transformations.
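
The simplest form of the inequality can be checked numerically on a finite distribution. The following is a minimal sketch; the helper names `weighted_mean` and `jensen_gap` and the sample values are illustrative assumptions, not part of any library.

```python
import math

def weighted_mean(values, weights):
    """Weighted mean; weights are assumed non-negative and summing to 1."""
    return sum(w * v for v, w in zip(values, weights))

def jensen_gap(f, values, weights):
    """Return E[f(X)] - f(E[X]); non-negative whenever f is convex."""
    mean_of_f = weighted_mean([f(v) for v in values], weights)
    f_of_mean = f(weighted_mean(values, weights))
    return mean_of_f - f_of_mean

xs = [0.5, 1.0, 2.0, 4.0]   # hypothetical sample points
ws = [0.1, 0.4, 0.3, 0.2]   # hypothetical probabilities

gap_square = jensen_gap(lambda x: x * x, xs, ws)  # convex function: gap >= 0
gap_exp = jensen_gap(math.exp, xs, ws)            # convex function: gap >= 0
gap_log = jensen_gap(math.log, xs, ws)            # concave function: gap <= 0
```

For the square function the gap equals the variance of the distribution, which is one way to see why it cannot be negative.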

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual dependence between the two variables. More specifically, it quantifies the "amount of information" obtained about one random variable by observing the other random variable. The concept of mutual information is intimately linked to that of entropy of a random variable, a fundamental notion in information theory that quantifies the expected "amount of information" held in a random variable.
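
For discrete variables, the quantity can be computed directly from a joint probability table. The sketch below assumes the table is given as a list of rows that sum to 1 overall; the function name and the two example tables are illustrative.

```python
import math

def mutual_information(joint):
    """Mutual information in bits of a joint table joint[i][j] = P(X=i, Y=j)."""
    px = [sum(row) for row in joint]            # marginal of X
    py = [sum(col) for col in zip(*joint)]      # marginal of Y
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:                         # terms with zero mass contribute 0
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi

independent = [[0.25, 0.25], [0.25, 0.25]]  # X and Y independent fair bits
identical = [[0.5, 0.0], [0.0, 0.5]]        # Y is an exact copy of X

mi_independent = mutual_information(independent)
mi_identical = mutual_information(identical)
```

Independent variables share no information, while observing an exact copy of a fair bit reveals one full bit.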

In probability theory, the total variation distance is a distance measure for probability distributions. It is an example of a statistical distance metric, and is sometimes called the statistical distance, statistical difference or variational distance.
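
On a finite sample space, the total variation distance equals half the L1 distance between the two probability vectors. A minimal sketch, assuming both distributions are lists over the same outcomes (the function name is illustrative):

```python
def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    return 0.5 * sum(abs(pi - qi) for pi, qi in zip(p, q))

tv_close = total_variation([0.5, 0.5], [0.9, 0.1])
tv_same = total_variation([0.2, 0.8], [0.2, 0.8])
tv_disjoint = total_variation([1.0, 0.0], [0.0, 1.0])
```

The value always lies between 0 (identical distributions) and 1 (distributions with disjoint support).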

In mathematical statistics, the Kullback–Leibler divergence, denoted $D_{\text{KL}}(P \parallel Q)$, is a type of statistical distance: a measure of how one probability distribution P is different from a second, reference probability distribution Q. A simple interpretation of the KL divergence of P from Q is the expected excess surprise from using Q as a model when the actual distribution is P. While it is a measure of how different two distributions are, and in some sense is thus a "distance", it is not actually a metric, which is the most familiar and formal type of distance. In particular, it is not symmetric in the two distributions, and does not satisfy the triangle inequality. Instead, in terms of information geometry, it is a type of divergence, a generalization of squared distance, and for certain classes of distributions, it satisfies a generalized Pythagorean theorem.
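
The lack of symmetry is easy to see numerically. The sketch below computes the divergence in nats for discrete distributions; the function name and the example vectors are illustrative assumptions.

```python
import math

def kl_divergence(p, q):
    """KL divergence of p from q in nats, for discrete distributions."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue              # 0 * log(0 / q) is taken to be 0
        if qi == 0:
            return math.inf       # p puts mass where q has none
        total += pi * math.log(pi / qi)
    return total

p = [0.5, 0.5]
q = [0.9, 0.1]

forward = kl_divergence(p, q)   # expected excess surprise when q models p
reverse = kl_divergence(q, p)   # generally a different number
```

Swapping the arguments changes the result, which is why the divergence is not a metric.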

In information theory, the Rényi entropy is a quantity that generalizes various notions of entropy, including Hartley entropy, Shannon entropy, collision entropy, and min-entropy. The Rényi entropy is named after Alfréd Rényi, who looked for the most general way to quantify information while preserving additivity for independent events. In the context of fractal dimension estimation, the Rényi entropy forms the basis of the concept of generalized dimensions.
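
The special cases named above correspond to particular orders: order 0 gives Hartley entropy, the limit at order 1 gives Shannon entropy, order 2 gives collision entropy, and the limit at infinity gives min-entropy. A minimal sketch in bits, with an illustrative function name:

```python
import math

def renyi_entropy(p, alpha):
    """Rényi entropy of order alpha (alpha >= 0) in bits."""
    support = [pi for pi in p if pi > 0]
    if alpha == 0:
        return math.log2(len(support))                       # Hartley entropy
    if alpha == 1:
        return -sum(pi * math.log2(pi) for pi in support)    # Shannon entropy (limit)
    if alpha == math.inf:
        return -math.log2(max(support))                      # min-entropy (limit)
    return math.log2(sum(pi ** alpha for pi in support)) / (1 - alpha)

p = [0.5, 0.25, 0.125, 0.125]
orders = [0, 0.5, 1, 2, math.inf]
values = [renyi_entropy(p, a) for a in orders]
```

For a fixed distribution the entropy does not increase as the order grows, and for a uniform distribution every order gives the same value.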

In information theory, the cross-entropy between two probability distributions p and q over the same underlying set of events measures the average number of bits needed to identify an event drawn from the set if the coding scheme used for the set is optimized for an estimated probability distribution q, rather than the true distribution p.
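
The coding interpretation can be made concrete with a small example. In this sketch the true distribution and the estimate are hypothetical, and the estimate is assumed to be positive wherever the true distribution is.

```python
import math

def entropy(p):
    """Shannon entropy in bits."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Average bits per event when events follow p but the code is built for q."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

true_dist = [0.5, 0.25, 0.25]   # hypothetical true distribution
estimate = [0.25, 0.25, 0.5]    # hypothetical estimated distribution

optimal_bits = entropy(true_dist)                  # 1.5 bits per event
actual_bits = cross_entropy(true_dist, estimate)   # 1.75 bits per event
overhead = actual_bits - optimal_bits              # 0.25 bits per event
```

The overhead is the Kullback–Leibler divergence of the true distribution from the estimate, measured in bits.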

In information theory, Gibbs' inequality is a statement about the information entropy of a discrete probability distribution. Several other bounds on the entropy of probability distributions are derived from Gibbs' inequality, including Fano's inequality. It was first presented by J. Willard Gibbs in the 19th century.

Differential entropy is a concept in information theory that began as an attempt by Claude Shannon to extend the idea of (Shannon) entropy, a measure of the average surprisal of a random variable, to continuous probability distributions. Shannon did not derive this formula; he assumed it was the correct continuous analogue of discrete entropy, but it is not. The actual continuous version of discrete entropy is the limiting density of discrete points (LDDP). Differential entropy is commonly encountered in the literature, but it is a limiting case of the LDDP, and one that loses its fundamental association with discrete entropy.

In mathematics, specifically statistics and information geometry, a Bregman divergence or Bregman distance is a measure of difference between two points, defined in terms of a strictly convex function; they form an important class of divergences. When the points are interpreted as probability distributions – notably as either values of the parameter of a parametric model or as a data set of observed values – the resulting distance is a statistical distance. The most basic Bregman divergence is the squared Euclidean distance.
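
Concretely, the divergence generated by a convex function F is F(p) − F(q) − ⟨∇F(q), p − q⟩. The sketch below takes F and its gradient as plain Python callables; all names are illustrative assumptions.

```python
import math

def bregman_divergence(F, grad_F, p, q):
    """Bregman divergence F(p) - F(q) - <grad F(q), p - q>."""
    inner = sum(g * (pi - qi) for g, pi, qi in zip(grad_F(q), p, q))
    return F(p) - F(q) - inner

# Generator 1: squared Euclidean norm, which yields the squared Euclidean distance.
def sq_norm(x):
    return sum(xi * xi for xi in x)

def sq_norm_grad(x):
    return [2 * xi for xi in x]

# Generator 2: negative entropy, which yields the KL divergence on probability vectors.
def neg_entropy(x):
    return sum(xi * math.log(xi) for xi in x)

def neg_entropy_grad(x):
    return [math.log(xi) + 1 for xi in x]

d_euclid = bregman_divergence(sq_norm, sq_norm_grad, [1.0, 2.0], [3.0, 5.0])
d_kl = bregman_divergence(neg_entropy, neg_entropy_grad, [0.5, 0.5], [0.9, 0.1])
```

The first call returns 13, the squared distance between the two points; the second agrees with the KL divergence in nats because both arguments sum to 1.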

This article discusses how information theory is related to measure theory.

In quantum information theory, quantum relative entropy is a measure of distinguishability between two quantum states. It is the quantum mechanical analog of relative entropy.

The mathematical theory of information is based on probability theory and statistics, and measures information with several quantities of information. The choice of logarithmic base in the following formulae determines the unit of information entropy that is used. The most common unit of information is the bit, or more correctly the shannon, based on the binary logarithm. Although "bit" is more frequently used in place of "shannon", its name is not distinguished from the bit as used in data-processing to refer to a binary value or stream regardless of its entropy. Other units include the nat, based on the natural logarithm, and the hartley, based on the base 10 or common logarithm.
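
Since the unit depends only on the logarithm base, converting between units is a multiplication by a constant. A minimal sketch using a fair coin as the example distribution (the function name is illustrative):

```python
import math

def entropy(p, base=2.0):
    """Shannon entropy of a discrete distribution in the unit set by `base`."""
    return -sum(pi * math.log(pi, base) for pi in p if pi > 0)

fair_coin = [0.5, 0.5]

in_bits = entropy(fair_coin, 2.0)         # shannons (bits)
in_nats = entropy(fair_coin, math.e)      # nats
in_hartleys = entropy(fair_coin, 10.0)    # hartleys

# Changing base rescales the value: 1 bit = ln(2) nats = log10(2) hartleys.
nats_from_bits = in_bits * math.log(2)
hartleys_from_bits = in_bits * math.log10(2)
```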

Inequalities are very important in the study of information theory. There are a number of different contexts in which these inequalities appear.

In information theory, Pinsker's inequality, named after its inventor Mark Semenovich Pinsker, is an inequality that bounds the total variation distance in terms of the Kullback–Leibler divergence. The inequality is tight up to constant factors.
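
In its usual form, with the divergence measured in nats, the inequality states that the total variation distance is at most the square root of half the Kullback–Leibler divergence. The sketch below checks this on a hypothetical pair of discrete distributions; the helper names are illustrative.

```python
import math

def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    return 0.5 * sum(abs(pi - qi) for pi, qi in zip(p, q))

def kl_divergence(p, q):
    """KL divergence in nats; assumes q > 0 wherever p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def pinsker_bound(p, q):
    """Upper bound on total variation: sqrt(KL(p || q) / 2)."""
    return math.sqrt(kl_divergence(p, q) / 2)

p = [0.5, 0.5]
q = [0.9, 0.1]

tv = total_variation(p, q)
bound = pinsker_bound(p, q)
```

Here the distance is 0.4 and the bound is slightly above 0.5, so the inequality holds with some slack.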

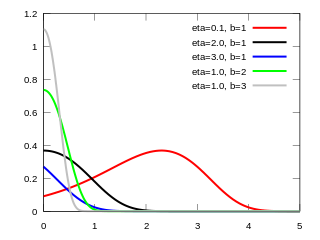

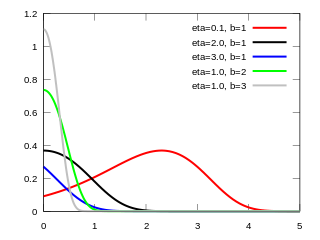

In probability and statistics, the Gompertz distribution is a continuous probability distribution, named after Benjamin Gompertz. The Gompertz distribution is often applied to describe the distribution of adult lifespans by demographers and actuaries. Related fields of science such as biology and gerontology have also considered the Gompertz distribution for the analysis of survival. More recently, computer scientists have also started to model the failure rates of computer code by the Gompertz distribution. In marketing science, it has been used as an individual-level simulation for customer lifetime value modeling. In network theory, particularly the Erdős–Rényi model, the walk length of a random self-avoiding walk (SAW) is distributed according to the Gompertz distribution.

In information theory and statistics, Kullback's inequality is a lower bound on the Kullback–Leibler divergence expressed in terms of the large deviations rate function. If P and Q are probability distributions on the real line, such that P is absolutely continuous with respect to Q, i.e. P << Q, and whose first moments exist, then

In probability theory and statistics, the Poisson distribution is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time or space if these events occur with a known constant mean rate and independently of the time since the last event. It is named after French mathematician Siméon Denis Poisson. The Poisson distribution can also be used for the number of events in other specified interval types such as distance, area, or volume. It plays an important role for discrete-stable distributions.
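
The probability of observing k events when the mean rate is λ is λ^k e^(−λ) / k!. A minimal sketch, with an illustrative function name and a hypothetical rate of 3 events per interval:

```python
import math

def poisson_pmf(k, rate):
    """Probability of exactly k events when the mean number of events is `rate`."""
    return rate ** k * math.exp(-rate) / math.factorial(k)

rate = 3.0                                   # hypothetical mean events per interval
probs = [poisson_pmf(k, rate) for k in range(50)]

total_mass = sum(probs)                      # close to 1; the tail beyond 50 is negligible
mean = sum(k * pk for k, pk in enumerate(probs))
p_no_events = probs[0]                       # equals exp(-rate)
```

The computed mean recovers the rate, as expected for this distribution.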

In information geometry, a divergence is a kind of statistical distance: a binary function which establishes the separation from one probability distribution to another on a statistical manifold.

In variational Bayesian methods, the evidence lower bound is a useful lower bound on the log-likelihood of some observed data.
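
For a discrete latent variable z and a fixed observation x, the bound for a variational distribution q is the expectation under q of log p(x, z) − log q(z). The sketch below uses made-up joint probabilities purely for illustration; all names are assumptions.

```python
import math

# Hypothetical values of p(x, z) for one fixed observation x and z in {0, 1, 2}.
joint = [0.10, 0.05, 0.05]

evidence = sum(joint)                          # p(x), marginalizing out z
log_evidence = math.log(evidence)
posterior = [pz / evidence for pz in joint]    # exact p(z | x)

def elbo(q, joint):
    """Evidence lower bound: sum over z of q(z) * (log p(x, z) - log q(z))."""
    return sum(qz * (math.log(pz) - math.log(qz))
               for qz, pz in zip(q, joint) if qz > 0)

elbo_uniform = elbo([1 / 3, 1 / 3, 1 / 3], joint)   # a crude choice of q
elbo_posterior = elbo(posterior, joint)             # q equal to the exact posterior
```

The bound is strictly below the log evidence for the uniform choice and matches it when q is the exact posterior; the gap is the Kullback–Leibler divergence of q from the posterior.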

In information theory, the Bretagnolle–Huber inequality bounds the total variation distance between two probability distributions P and Q by a concave and bounded function of the Kullback–Leibler divergence $D_{\text{KL}}(P \parallel Q)$. The bound can be viewed as an alternative to the well-known Pinsker's inequality: when $D_{\text{KL}}(P \parallel Q)$ is large, Pinsker's inequality is vacuous, while Bretagnolle–Huber remains bounded and hence non-vacuous. It is used in statistics and machine learning to prove information-theoretic lower bounds relying on hypothesis testing.
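
The contrast with Pinsker's inequality shows up when the two distributions are far apart. The sketch below uses the form of the bound in which the total variation distance is at most sqrt(1 − exp(−D)), where D is the Kullback–Leibler divergence in nats; the helper names and the example distributions are illustrative assumptions.

```python
import math

def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    return 0.5 * sum(abs(pi - qi) for pi, qi in zip(p, q))

def kl_divergence(p, q):
    """KL divergence in nats; assumes q > 0 wherever p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def pinsker_bound(kl):
    return math.sqrt(kl / 2)

def bretagnolle_huber_bound(kl):
    return math.sqrt(1 - math.exp(-kl))

p = [0.99, 0.01]   # two nearly disjoint hypothetical distributions
q = [0.01, 0.99]

tv = total_variation(p, q)
kl = kl_divergence(p, q)
pinsker = pinsker_bound(kl)               # exceeds 1, so it says nothing here
bh = bretagnolle_huber_bound(kl)          # stays below 1
```

Because the total variation distance never exceeds 1, a bound above 1 carries no information, whereas the Bretagnolle–Huber value remains a meaningful constraint.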