In computer science, the clique problem is the computational problem of finding cliques in a graph. It has several different formulations depending on which cliques, and what information about the cliques, should be found. Common formulations of the clique problem include finding a maximum clique, finding a maximum weight clique in a weighted graph, listing all maximal cliques, and solving the decision problem of testing whether a graph contains a clique larger than a given size.

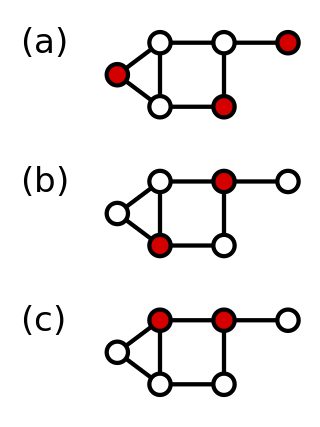

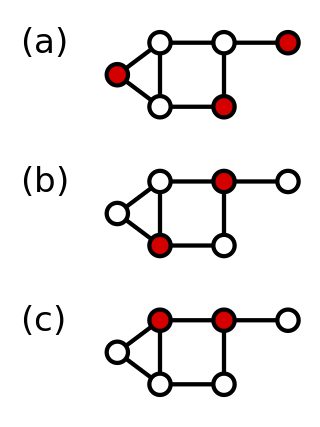

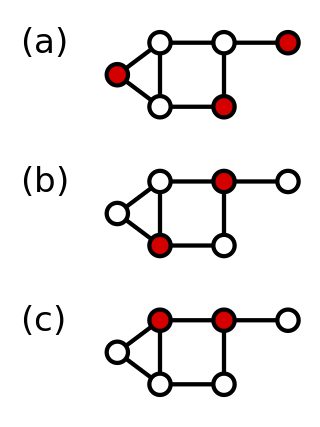

In graph theory, a vertex cover of a graph is a set of vertices that includes at least one endpoint of every edge of the graph.

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In an overwhelming majority of the cases, the guarantee of such algorithms is a multiplicative one expressed as an approximation ratio or approximation factor i.e., the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there are also many approximation algorithms that provide an additive guarantee on the quality of the returned solution. A notable example of an approximation algorithm that provides both is the classic approximation algorithm of Lenstra, Shmoys and Tardos for scheduling on unrelated parallel machines.

In computer science, parameterized complexity is a branch of computational complexity theory that focuses on classifying computational problems according to their inherent difficulty with respect to multiple parameters of the input or output. The complexity of a problem is then measured as a function of those parameters. This allows the classification of NP-hard problems on a finer scale than in the classical setting, where the complexity of a problem is only measured as a function of the number of bits in the input. This appears to have been first demonstrated in Gurevich, Stockmeyer & Vishkin (1984). The first systematic work on parameterized complexity was done by Downey & Fellows (1999).

In graph theory, the metric dimension of a graph G is the minimum cardinality of a subset S of vertices such that all other vertices are uniquely determined by their distances to the vertices in S. Finding the metric dimension of a graph is an NP-hard problem; the decision version, determining whether the metric dimension is less than a given value, is NP-complete.

In graph theory, a dominating set for a graph G is a subset D of its vertices, such that any vertex of G is either in D, or has a neighbor in D. The domination numberγ(G) is the number of vertices in a smallest dominating set for G.

In graph theory, a connected dominating set and a maximum leaf spanning tree are two closely related structures defined on an undirected graph.

In computer science, a kernelization is a technique for designing efficient algorithms that achieve their efficiency by a preprocessing stage in which inputs to the algorithm are replaced by a smaller input, called a "kernel". The result of solving the problem on the kernel should either be the same as on the original input, or it should be easy to transform the output on the kernel to the desired output for the original problem.

For a graph, a maximum cut is a cut whose size is at least the size of any other cut. That is, it is a partition of the graph's vertices into two complementary sets S and T, such that the number of edges between S and T is as large as possible. Finding such a cut is known as the max-cut problem.

In computational complexity theory, the exponential time hypothesis is an unproven computational hardness assumption that was formulated by Impagliazzo & Paturi (1999). It states that satisfiability of 3-CNF Boolean formulas cannot be solved in subexponential time, . More precisely, the usual form of the hypothesis asserts the existence of a number such that all algorithms that correctly solve this problem require time at least . The exponential time hypothesis, if true, would imply that P ≠ NP, but it is a stronger statement. It implies that many computational problems are equivalent in complexity, in the sense that if one of them has a subexponential time algorithm then they all do, and that many known algorithms for these problems have optimal or near-optimal time complexity.

Bidimensionality theory characterizes a broad range of graph problems (bidimensional) that admit efficient approximate, fixed-parameter or kernel solutions in a broad range of graphs. These graph classes include planar graphs, map graphs, bounded-genus graphs and graphs excluding any fixed minor. In particular, bidimensionality theory builds on the graph minor theory of Robertson and Seymour by extending the mathematical results and building new algorithmic tools. The theory was introduced in the work of Demaine, Fomin, Hajiaghayi, and Thilikos, for which the authors received the Nerode Prize in 2015.

In the study of graph algorithms, Courcelle's theorem is the statement that every graph property definable in the monadic second-order logic of graphs can be decided in linear time on graphs of bounded treewidth. The result was first proved by Bruno Courcelle in 1990 and independently rediscovered by Borie, Parker & Tovey (1992). It is considered the archetype of algorithmic meta-theorems.

In computer science, iterative compression is an algorithmic technique for the design of fixed-parameter tractable algorithms, in which one element is added to the problem in each step, and a small solution for the problem prior to the addition is used to help find a small solution to the problem after the step.

In graph theory, a nonblocker is a subset of vertices in an undirected graph, all of which are adjacent to vertices outside of the subset. Equivalently, a nonblocker is the complement of a dominating set.

In graph theory, an odd cycle transversal of an undirected graph is a set of vertices of the graph that has a nonempty intersection with every odd cycle in the graph. Removing the vertices of an odd cycle transversal from a graph leaves a bipartite graph as the remaining induced subgraph.

The vertex k-center problem is a classical NP-hard problem in computer science. It has application in facility location and clustering. Basically, the vertex k-center problem models the following real problem: given a city with facilities, find the best facilities where to build fire stations. Since firemen must attend any emergency as quickly as possible, the distance from the farthest facility to its nearest fire station has to be as small as possible. In other words, the position of the fire stations must be such that every possible fire is attended as quickly as possible.

In graph theory, the cutwidth of an undirected graph is the smallest integer with the following property: there is an ordering of the vertices of the graph, such that every cut obtained by partitioning the vertices into earlier and later subsets of the ordering is crossed by at most edges. That is, if the vertices are numbered , then for every , the number of edges with and is at most .

The twin-width of an undirected graph is a natural number associated with the graph, used to study the parameterized complexity of graph algorithms. Intuitively, it measures how similar the graph is to a cograph, a type of graph that can be reduced to a single vertex by repeatedly merging together twins, vertices that have the same neighbors. The twin-width is defined from a sequence of repeated mergers where the vertices are not required to be twins, but have nearly equal sets of neighbors.

A parameterized approximation algorithm is a type of algorithm that aims to find approximate solutions to NP-hard optimization problems in polynomial time in the input size and a function of a specific parameter. These algorithms are designed to combine the best aspects of both traditional approximation algorithms and fixed-parameter tractability.

The highway dimension is a graph parameter modelling transportation networks, such as road networks or public transportation networks. It was first formally defined by Abraham et al. based on the observation by Bast et al. that any road network has a sparse set of "transit nodes", such that driving from a point A to a sufficiently far away point B along the shortest route will always pass through one of these transit nodes. It has also been proposed that the highway dimension captures the properties of public transportation networks well, given that longer routes using busses, trains, or airplanes will typically be serviced by larger transit hubs. This relates to the spoke–hub distribution paradigm in transport topology optimization.