Related Research Articles

A cognitive bias is a systematic pattern of deviation from norm or rationality in judgment. Individuals create their own "subjective reality" from their perception of the input. An individual's construction of reality, not the objective input, may dictate their behavior in the world. Thus, cognitive biases may sometimes lead to perceptual distortion, inaccurate judgment, illogical interpretation, and irrationality.

Confirmation bias is the tendency to search for, interpret, favor, and recall information in a way that confirms or supports one's prior beliefs or values. People display this bias when they select information that supports their views, ignoring contrary information, or when they interpret ambiguous evidence as supporting their existing attitudes. The effect is strongest for desired outcomes, for emotionally charged issues, and for deeply entrenched beliefs. Confirmation bias is insuperable for most people, but they can manage it, for example, by education and training in critical thinking skills.

In psychology, cognitivism is a theoretical framework for understanding the mind that gained credence in the 1950s. The movement was a response to behaviorism, which cognitivists said neglected to explain cognition. Cognitive psychology derived its name from the Latin cognoscere, referring to knowing and information, thus cognitive psychology is an information-processing psychology derived in part from earlier traditions of the investigation of thought and problem solving.

Hindsight bias, also known as the knew-it-all-along phenomenon or creeping determinism, is the common tendency for people to perceive past events as having been more predictable than they were.

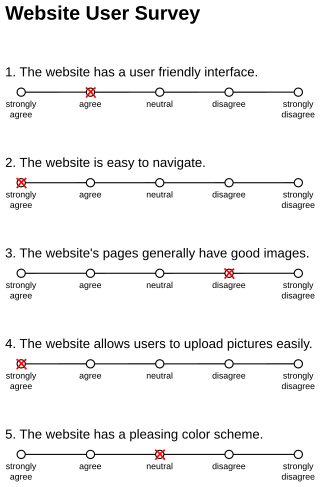

Response bias is a general term for a wide range of tendencies for participants to respond inaccurately or falsely to questions. These biases are prevalent in research involving participant self-report, such as structured interviews or surveys. Response biases can have a large impact on the validity of questionnaires or surveys.

In psychology and cognitive science, a schema describes a pattern of thought or behavior that organizes categories of information and the relationships among them. It can also be described as a mental structure of preconceived ideas, a framework representing some aspect of the world, or a system of organizing and perceiving new information, such as a mental schema or conceptual model. Schemata influence attention and the absorption of new knowledge: people are more likely to notice things that fit into their schema, while re-interpreting contradictions to the schema as exceptions or distorting them to fit. Schemata have a tendency to remain unchanged, even in the face of contradictory information. Schemata can help in understanding the world and the rapidly changing environment. People can organize new perceptions into schemata quickly, because most situations do not require complex thought when using schemata; automatic thought is all that is required.

Dual-coding theory is a theory of cognition that suggests that the mind processes information along two different channels: verbal and nonverbal. It was hypothesized by Allan Paivio of the University of Western Ontario in 1971. In developing this theory, Paivio used the idea that the formation of mental imagery aids learning through the picture superiority effect.

Inattentional blindness or perceptual blindness occurs when an individual fails to perceive an unexpected stimulus in plain sight, purely as a result of a lack of attention rather than any vision defects or deficits. When it becomes impossible to attend to all the stimuli in a given situation, a temporary "blindness" effect can occur, as individuals fail to see unexpected but often salient objects or stimuli.

Belief bias is the tendency to judge the strength of arguments based on the plausibility of their conclusion rather than how strongly they justify that conclusion. A person is more likely to accept an argument that supports a conclusion that aligns with their values, beliefs and prior knowledge, while rejecting counter arguments to the conclusion. Belief bias is an extremely common and therefore significant form of error; we can easily be blinded by our beliefs and reach the wrong conclusion. Belief bias has been found to influence various reasoning tasks, including conditional reasoning, relation reasoning and transitive reasoning.

Choice-supportive bias or post-purchase rationalization is the tendency to retroactively ascribe positive attributes to an option one has selected and/or to demote the forgone options. It is part of cognitive science, and is a distinct cognitive bias that occurs once a decision is made. For example, if a person chooses option A instead of option B, they are likely to ignore or downplay the faults of option A while amplifying or ascribing new negative faults to option B. Conversely, they are also likely to notice and amplify the advantages of option A and not notice or de-emphasize those of option B.

The overconfidence effect is a well-established bias in which a person's subjective confidence in their judgments is reliably greater than the objective accuracy of those judgments, especially when confidence is relatively high. Overconfidence is one example of a miscalibration of subjective probabilities. Throughout the research literature, overconfidence has been defined in three distinct ways: (1) overestimation of one's actual performance; (2) overplacement of one's performance relative to others; and (3) overprecision in expressing unwarranted certainty in the accuracy of one's beliefs.
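
The gap between subjective confidence and objective accuracy described above can be illustrated with a small calculation. The following is a minimal sketch, not a standard instrument: the function name `overconfidence_score` and the sample data are hypothetical, and the score is simply mean stated confidence minus the proportion of correct answers.

```python
from statistics import fmean


def overconfidence_score(confidences, correct):
    """Mean stated confidence minus observed accuracy.

    confidences: stated probabilities of being right (0.0 to 1.0).
    correct: matching 0/1 flags, 1 if the judgment was actually right.
    A positive result indicates overconfidence, a negative one underconfidence.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    return fmean(confidences) - fmean(correct)


# Hypothetical data: five judgments with high stated confidence,
# of which only three turned out to be right.
stated = [0.9, 0.8, 0.9, 0.7, 0.95]
was_right = [1, 0, 1, 0, 1]

print(f"{overconfidence_score(stated, was_right):.2f}")
```

Here the mean confidence is 0.85 while the accuracy is 0.60, so the score is positive. This captures only the calibration sense of the term; overplacement and overprecision would need different measures.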

Lie detection is the assessment of a verbal statement with the goal of revealing possible intentional deceit. Lie detection may refer to a cognitive process of detecting deception by evaluating message content as well as non-verbal cues. It may also refer to questioning techniques used along with technology that records physiological functions to ascertain truth and falsehood in responses. The latter is commonly used by law enforcement in the United States, but rarely in other countries because it is based on pseudoscience.

In psychology, a dual process theory provides an account of how thought can arise in two different ways, or as a result of two different processes. Often, the two processes consist of an implicit (automatic), unconscious process and an explicit (controlled), conscious process. Verbalized explicit processes or attitudes and actions may change with persuasion or education, whereas implicit processes or attitudes usually take a long time to change, through the forming of new habits. Dual process theories can be found in social, personality, cognitive, and clinical psychology. They have also been linked with economics via prospect theory and behavioral economics, and increasingly with sociology through cultural analysis.

The wisdom of the crowd is the collective opinion of a diverse independent group of individuals rather than that of a single expert. This process, while not new to the Information Age, has been pushed into the mainstream spotlight by social information sites such as Quora, Reddit, Stack Exchange, Wikipedia, Yahoo! Answers, and other web resources which rely on collective human knowledge. An explanation for this phenomenon is that there is idiosyncratic noise associated with each individual judgment, and taking the average over a large number of responses will go some way toward canceling the effect of this noise.
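
The noise-cancelling explanation above can be checked with a small simulation. This is only a sketch under simplifying assumptions that are not part of the original text: every individual estimate is the true value plus independent, unbiased Gaussian noise, and the function name `crowd_vs_individual` and all parameter values are hypothetical.

```python
import random
from statistics import fmean


def crowd_vs_individual(true_value, noise_sd, n_people, seed=0):
    """Compare the error of the averaged estimate with the typical individual error.

    Assumes each person's estimate is true_value plus independent Gaussian
    noise with standard deviation noise_sd (an idealization).
    Returns (crowd_error, mean_individual_error), both absolute errors.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    estimates = [rng.gauss(true_value, noise_sd) for _ in range(n_people)]
    crowd_error = abs(fmean(estimates) - true_value)
    mean_individual_error = fmean([abs(e - true_value) for e in estimates])
    return crowd_error, mean_individual_error


crowd, individual = crowd_vs_individual(true_value=100.0, noise_sd=20.0, n_people=1000)
print(f"error of the averaged estimate: {crowd:.2f}")
print(f"average individual error:       {individual:.2f}")
```

Under these assumptions the error of the average shrinks roughly with the square root of the number of respondents. The benefit weakens when judgments share a common bias or are not independent, which is why the definition stresses a diverse, independent group.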

The introspection illusion is a cognitive bias in which people wrongly think they have direct insight into the origins of their mental states, while treating others' introspections as unreliable. The illusion has been examined in psychological experiments, and suggested as a basis for biases in how people compare themselves to others. These experiments have been interpreted as suggesting that, rather than offering direct access to the processes underlying mental states, introspection is a process of construction and inference, much as people indirectly infer others' mental states from their behaviour.

Processing fluency is the ease with which information is processed. Perceptual fluency is the ease of processing stimuli based on manipulations to perceptual quality. Retrieval fluency is the ease with which information can be retrieved from memory.

The rhyme-as-reason effect, or Eaton–Rosen phenomenon, is a cognitive bias in which a saying or aphorism is judged as more accurate or truthful when it is rewritten to rhyme.

The illusory truth effect is the tendency to believe false information to be correct after repeated exposure. This phenomenon was first identified in a 1977 study at Villanova University and Temple University. When truth is assessed, people rely on whether the information is in line with their understanding or if it feels familiar. The first condition is logical, as people compare new information with what they already know to be true. Repetition makes statements easier to process relative to new, unrepeated statements, leading people to believe that the repeated conclusion is more truthful. The illusory truth effect has also been linked to hindsight bias, in which the recollection of confidence is skewed after the truth has been received.

Truth-default theory (TDT) is a communication theory which predicts and explains the use of veracity and deception detection in humans. It was developed upon the discovery of the veracity effect, whereby the proportion of truths versus lies presented in a judgement study on deception will drive accuracy rates. The theory gets its name from its central idea, the truth-default state. This idea suggests that people presume others to be honest either because they don't think of deception as a possibility while communicating or because there is insufficient evidence that they are being deceived. Emotions, arousal, strategic self-presentation, and cognitive effort are nonverbal behaviors that one might find in deception detection. Ultimately, this theory predicts that speakers and listeners will default to using the truth to achieve their communicative goals. However, if the truth presents a problem, then deception will surface as a viable option for goal attainment.
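
The veracity effect can be made concrete with a little arithmetic. The sketch below assumes a truth-biased judge whose hit rates are invented for illustration (80% of truths judged truthful, 30% of lies judged deceptive). The function name `expected_accuracy` and these rates are hypothetical, not figures from the theory's literature.

```python
def expected_accuracy(truth_base_rate, p_truth_judged_true, p_lie_judged_lie):
    """Overall accuracy as a weighted mix of accuracy on truths and on lies.

    truth_base_rate: proportion of the presented messages that are truthful.
    p_truth_judged_true: chance that a truthful message is judged truthful.
    p_lie_judged_lie: chance that a lie is judged deceptive.
    """
    return (truth_base_rate * p_truth_judged_true
            + (1 - truth_base_rate) * p_lie_judged_lie)


# Hypothetical truth-biased judge: good on truths, poor on lies.
for base_rate in (0.1, 0.5, 0.9):
    accuracy = expected_accuracy(base_rate, p_truth_judged_true=0.8, p_lie_judged_lie=0.3)
    print(f"share of truths = {base_rate:.1f} -> accuracy = {accuracy:.2f}")
```

With these assumed rates, accuracy rises from 0.35 to 0.55 to 0.75 as the share of truthful messages goes from 10% to 50% to 90%, even though the judge's behavior is the same in every case.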

References

- Bredart, Serge; Modolo, Karin (1988-05-01). "Moses strikes again: Focalization effect on a semantic illusion". Acta Psychologica. 67 (2): 135–144. doi:10.1016/0001-6918(88)90009-1.

- Rapp, D., & Braasch, J. L. (n.d.). Processing inaccurate information: Theoretical and applied perspectives from cognitive science and the educational sciences.

- Bottoms, H. C., Eslick, A. N., & Marsh, E. J. (2010). Memory and the Moses illusion: Failures to detect contradictions with stored knowledge yield negative memorial consequences. Memory, 18(6), 670-678

- Park, H., & Reder, L. M. (2004). Moses illusion: Implications for human cognition. In R. F. Pohl (Ed.), Cognitive illusions: A handbook on fallacies and biases in thinking, judgment, and memory (pp. 275–292). Hove, UK: Psychology Press.

- Knowledge does not protect against illusory truth. Fazio, Lisa K.; Brashier, Nadia M.; Payne, B. Keith; Marsh, Elizabeth J. Journal of Experimental Psychology: General, Vol 144(5), Oct 2015, 993-1002.

- Allison D. Cantor & Elizabeth J. Marsh (2016): Expertise effects in the Moses illusion: detecting contradictions with stored knowledge, Memory, doi:10.1080/09658211.2016.1152377

- Gilbert, D. T. (1991). How mental systems believe. American Psychologist, 46(2), 107.

- Marsh, E. J., & Umanath, S. (2014). Knowledge Neglect: Failures to Notice Contradictions with Stored Knowledge. Chapter in D. N. Rapp and J. Braasch (Eds.) Processing Inaccurate Information: Theoretical and Applied Perspectives from Cognitive Science and the Educational Sciences. MIT Press.