Unicode, formally The Unicode Standard, is an information technology standard for the consistent encoding, representation, and handling of text expressed in most of the world's writing systems. The standard, which is maintained by the Unicode Consortium, defines as of the current version (15.0) 149,186 characters covering 161 modern and historic scripts, as well as symbols, emoji, and non-visual control and formatting codes.

UTF-8 is a variable-length character encoding used for electronic communication. Defined by the Unicode Standard, the name is derived from UnicodeTransformation Format – 8-bit.

UTF-16 (16-bit Unicode Transformation Format) is a character encoding capable of encoding all 1,112,064 valid code points of Unicode (in fact this number of code points is dictated by the design of UTF-16). The encoding is variable-length, as code points are encoded with one or two 16-bit code units. UTF-16 arose from an earlier obsolete fixed-width 16-bit encoding, now known as UCS-2 (for 2-byte Universal Character Set), once it became clear that more than 216 (65,536) code points were needed.

The Unicode Consortium is a 501(c)(3) non-profit organization incorporated and based in Mountain View, California. Its primary purpose is to maintain and publish the Unicode Standard which was developed with the intention of replacing existing character encoding schemes which are limited in size and scope, and are incompatible with multilingual environments. The consortium describes its overall purpose as:

...enabl[ing] people around the world to use computers in any language, by providing freely-available specifications and data to form the foundation for software internationalization in all major operating systems, search engines, applications, and the World Wide Web. An essential part of this purpose is to standardize, maintain, educate and engage academic and scientific communities, and the general public about, make publicly available, promote, and disseminate to the public a standard character encoding that provides for an allocation for more than a million characters.

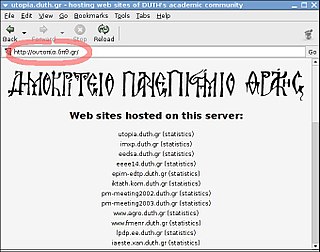

An internationalized domain name (IDN) is an Internet domain name that contains at least one label displayed in software applications, in whole or in part, in non-latin script or alphabet, such as Arabic, Bengali, Chinese, Cyrillic, Devanagari, Greek, Hebrew, Hindi, Tamil or Thai or in the Latin alphabet-based characters with diacritics or ligatures, such as French, German, Italian, Polish, Portuguese or Spanish. These writing systems are encoded by computers in multibyte Unicode. Internationalized domain names are stored in the Domain Name System (DNS) as ASCII strings using Punycode transcription.

Mojikyō, also known by its full name Konjaku Mojikyō, is a character encoding scheme. The Mojikyō Institute, which published the character set, also published computer software and TrueType fonts to accompany it. The Mojikyō Institute, chaired by Tadahisa Ishikawa (石川忠久), originally had its character set and related software and data redistributed on CD-ROM's sold in Kinokuniya stores.

In character encoding terminology, a code point, codepoint or code position is a numerical value that maps to a specific character. Code points usually represent a single grapheme—usually a letter, digit, punctuation mark, or whitespace—but sometimes represent symbols, control characters, or formatting. The set of all possible code points within a given encoding/character set make up that encoding's codespace.

The Compatibility Encoding Scheme for UTF-16: 8-Bit (CESU-8) is a variant of UTF-8 that is described in Unicode Technical Report #26. A Unicode code point from the Basic Multilingual Plane (BMP), i.e. a code point in the range U+0000 to U+FFFF, is encoded in the same way as in UTF-8. A Unicode supplementary character, i.e. a code point in the range U+10000 to U+10FFFF, is first represented as a surrogate pair, like in UTF-16, and then each surrogate code point is encoded in UTF-8. Therefore, CESU-8 needs six bytes for each Unicode supplementary character while UTF-8 needs only four. Though not specified in the technical report, unpaired surrogates are also encoded as 3 bytes each, and CESU-8 is exactly the same as applying an older UCS-2 to UTF-8 converter to UTF-16 data.

In Unicode, a Private Use Area (PUA) is a range of code points that, by definition, will not be assigned characters by the Unicode Consortium. Three private use areas are defined: one in the Basic Multilingual Plane, and one each in, and nearly covering, planes 15 and 16. The code points in these areas cannot be considered as standardized characters in Unicode itself. They are intentionally left undefined so that third parties may define their own characters without conflicting with Unicode Consortium assignments. Under the Unicode Stability Policy, the Private Use Areas will remain allocated for that purpose in all future Unicode versions.

In computer programming, whitespace is any character or series of characters that represent horizontal or vertical space in typography. When rendered, a whitespace character does not correspond to a visible mark, but typically does occupy an area on a page. For example, the common whitespace symbol U+0020 SPACE represents a blank space punctuation character in text, used as a word divider in Western scripts.

Letterlike Symbols is a Unicode block containing 80 characters which are constructed mainly from the glyphs of one or more letters. In addition to this block, Unicode includes full styled mathematical alphabets, although Unicode does not explicitly categorise these characters as being "letterlike".

In Unicode, the Sumero-Akkadian Cuneiform script is covered in three blocks in the Supplementary Multilingual Plane (SMP):

Joseph D. Becker is an American computer scientist and one of the co-founders of the Unicode project, and a Technical Vice President Emeritus of the Unicode Consortium. He has worked on artificial intelligence at BBN and multilingual workstation software at Xerox.

The regional indicator symbols are a set of 26 alphabetic Unicode characters (A–Z) intended to be used to encode ISO 3166-1 alpha-2 two-letter country codes in a way that allows optional special treatment.

Halfwidth and Fullwidth Forms is the name of a Unicode block U+FF00–FFEF, provided so that older encodings containing both halfwidth and fullwidth characters can have lossless translation to/from Unicode. It is the second-to-last block of the Basic Multilingual Plane, followed only by the short Specials block at U+FFF0–FFFF. Its block name in Unicode 1.0 was Halfwidth and Fullwidth Variants.

Robert L. Belleville is an American computer engineer who was an early head of engineering at Apple from 1982 until 1985.

The Xerox Character Code Standard (XCCS) is a historical 16-bit character encoding that was created by Xerox in 1980 for the exchange of information between elements of the Xerox Network Systems Architecture. It encodes the characters required for languages using the Latin, Arabic, Hebrew, Greek and Cyrillic scripts, the Chinese, Japanese and Korean writing systems, and technical symbols.

The Lotus Multi-Byte Character Set (LMBCS) is a proprietary multi-byte character encoding originally conceived in 1988 at Lotus Development Corporation with input from Bob Balaban and others. Created around the same time and addressing some of the same problems, LMBCS could be viewed as parallel development and possible alternative to Unicode. For maximum compatibility, later issues of LMBCS incorporate UTF-16 as a subset.

David G. Opstad is a retired American computer scientist specializing during his career in computer typography and information processing, leading to several breakthroughs. Opstad was a contributor to Unicode 1.0, together with Joe Becker, Lee Collins, Huan-mei Liao, and Nelson Ng.