Related Research Articles

Latency, from a general point of view, is a time delay between the cause and the effect of some physical change in the system being observed. Lag, as it is known in gaming circles, refers to the latency between the input to a simulation and the visual or auditory response, often occurring because of network delay in online games.

Pinchas Zukerman is an Israeli-American violinist, violist and conductor.

DirectSound is a deprecated software component of the Microsoft DirectX library for the Windows operating system, superseded by XAudio2. It provides a low-latency interface to sound card drivers written for Windows 95 through Windows XP and can handle the mixing and recording of multiple audio streams. DirectSound was originally written for Microsoft by John Miles.

Audio Stream Input/Output (ASIO) is a computer sound card driver protocol for digital audio specified by Steinberg, providing a low-latency, high-fidelity interface between a software application and a computer's sound card. Whereas Microsoft's DirectSound is commonly used as an intermediary signal path for non-professional users, ASIO allows musicians and sound engineers to access external hardware directly.

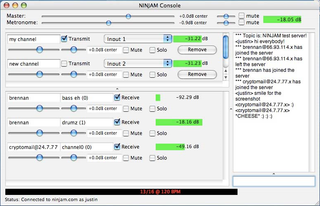

NINJAM stands for Novel Intervallic Network Jamming Architecture for Music. The software and systems comprising NINJAM provide a non-realtime mechanism for exchanging audio data across the internet, with a synchronisation mechanism based on musical form. It provides a way for musicians to "jam" (improvise) together over the Internet; it pioneered the concept of "virtual-time" jamming. It was originally developed by Brennan Underwood, Justin Frankel, and Tom Pepper.
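As a rough illustration of what synchronising on musical form can look like, the sketch below computes the length of one musical interval from a tempo and a beat count; in an interval-based scheme each player hears the others delayed by one such interval rather than in real time. The function name and the example values are illustrative assumptions, not taken from the NINJAM source code.

```python
def interval_seconds(bpm: float, beats_per_interval: int) -> float:
    """Return the duration of one musical interval in seconds.

    bpm: tempo in beats per minute (assumed constant for the session).
    beats_per_interval: number of beats grouped into one interval.
    """
    if bpm <= 0 or beats_per_interval <= 0:
        raise ValueError("bpm and beats_per_interval must be positive")
    # One beat lasts 60 / bpm seconds.
    return beats_per_interval * 60.0 / bpm


if __name__ == "__main__":
    # Hypothetical session: 16 beats per interval at 120 beats per minute.
    delay = interval_seconds(120, 16)
    print(f"Each player hears the others {delay:.1f} s late")
```

Because the delay is a whole number of beats, the delayed audio still lines up rhythmically with what each musician is currently playing, which is why the approach tolerates ordinary Internet latency.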

VJing is a broad designation for realtime visual performance. It is characterized by the creation or manipulation of imagery in realtime through technological mediation, for an audience, and in synchronization to music. VJing often takes place at events such as concerts, nightclubs, and music festivals, sometimes in combination with other performative arts. The result is a live multimedia performance that can include music, actors and dancers. The term VJing became popular through its association with MTV's Video Jockey, but its origins date back to the New York club scene of the 1970s. In both situations VJing is the manipulation or selection of visuals, the same way DJing is a selection and manipulation of audio.

PulseAudio is a network-capable sound server program distributed via the freedesktop.org project. It runs mainly on Linux, various BSD distributions such as FreeBSD and OpenBSD, macOS, as well as Illumos distributions and the Solaris operating system.

Ubuntu Studio is a recognized flavor of the Ubuntu Linux distribution geared toward general multimedia production. The original version, based on Ubuntu 7.04, was released on 10 May 2007.

A software effect processor is a computer program which is able to modify the signal coming from a digital audio source in real time.

aptX is a family of proprietary audio codec compression algorithms owned by Qualcomm, with a heavy emphasis on wireless audio applications.

A networked music performance or network musical performance is a real-time interaction over a computer network that enables musicians in different locations to perform as if they were in the same room. These interactions can include performances, rehearsals, improvisation or jamming sessions, and situations for learning such as master classes. Participants may be connected by "high fidelity multichannel audio and video links" as well as MIDI data connections and specialized collaborative software tools. While not intended to be a replacement for traditional live stage performance, networked music performance supports musical interaction when co-presence is not possible and allows for novel forms of music expression. Remote audience members and possibly a conductor may also participate.

Opus is a lossy audio coding format developed by the Xiph.Org Foundation and standardized by the Internet Engineering Task Force, designed to efficiently code speech and general audio in a single format, while remaining low-latency enough for real-time interactive communication and low-complexity enough for low-end embedded processors. Opus replaces both Vorbis and Speex for new applications, and several blind listening tests have ranked it higher-quality than any other standard audio format at any given bitrate until transparency is reached, including MP3, AAC, and HE-AAC.

RTP-MIDI is a protocol to transport MIDI messages within RTP packets over Ethernet and WiFi networks. It is completely open and free, and is compatible both with LAN and WAN application fields. Compared to MIDI 1.0, RTP-MIDI includes new features like session management, device synchronization and detection of lost packets, with automatic regeneration of lost data. RTP-MIDI is compatible with real-time applications, and supports sample-accurate synchronization for each MIDI message.

The second decade of the 21st century has continued to usher in new technologies and devices built on the technological foundation established in the previous decade. Technologically speaking, our personal devices and lives have evolved symbiotically, with the personal computer at the center of our daily communications, entertainment, and education. What has changed is accessibility and versatility; users can now perform the same functions and activities of their personal computer on a wide range of devices: smartphones, tablets, and even more recently, smart watches. The increase in personal computing capacity has a profound impact on the way people listen to, promote, and create music.

Visual Cloud is the implementation of visual computing applications that rely on cloud computing architectures, cloud scale processing and storage, and ubiquitous broadband connectivity between connected devices, network edge devices and cloud data centers. It is a model for providing visual computing services to consumers and business users, while allowing service providers to realize the general benefits of cloud computing, such as low cost, elastic scalability, and high availability while providing optimized infrastructure for visual computing application requirements.

SMPTE 2110 is a suite of standards from the Society of Motion Picture and Television Engineers (SMPTE) that describes how to send digital media over an IP network.

Audio Video Bridging (AVB) is a common name for a set of technical standards that provide improved synchronization, low latency, and reliability for switched Ethernet networks.

Jamulus is open-source (GPL) networked music performance software that enables live rehearsing, jamming and performing with musicians located anywhere on the Internet. Jamulus is written in C++ by Volker Fischer and contributors. The software is based on the Qt framework and uses the Opus audio codec. It was known as "llcon" until 2013.

When playing music remotely, musicians must reduce or eliminate audio latency in order to play in time together. While standard web conferencing software is designed to facilitate remote audio and video communication, it has too much latency for live musical performance. Connection-oriented Internet protocols subject audio signals to delays and other interference, which makes it difficult to keep latency low enough for musicians to play together remotely.
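To give a sense of where this latency comes from, the following sketch adds up two unavoidable contributions to one-way delay: signal propagation over the network path and audio buffering at each end. It is a lower-bound estimate under stated assumptions (signals travelling at roughly two thirds of the speed of light in optical fibre, one buffer at the sender and one at the receiver); real connections add routing, queuing, codec and driver overhead on top. All names and example values are hypothetical.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000.0  # assumption: about 2/3 of the speed of light
SPEED_OF_SOUND_M_PER_S = 343.0       # in air at roughly 20 degrees Celsius


def buffer_delay_ms(frames: int, sample_rate_hz: int) -> float:
    """Time needed to fill one audio buffer, in milliseconds."""
    return 1000.0 * frames / sample_rate_hz


def propagation_delay_ms(distance_km: float) -> float:
    """Idealised one-way propagation delay over fibre, in milliseconds."""
    return 1000.0 * distance_km / SPEED_IN_FIBER_KM_PER_S


def one_way_latency_ms(distance_km: float, frames: int,
                       sample_rate_hz: int, n_buffers: int = 2) -> float:
    """Lower bound on one-way latency: propagation plus buffering."""
    return (propagation_delay_ms(distance_km)
            + n_buffers * buffer_delay_ms(frames, sample_rate_hz))


def equivalent_distance_m(latency_ms: float) -> float:
    """Distance sound travels in air during the given latency."""
    return SPEED_OF_SOUND_M_PER_S * latency_ms / 1000.0


if __name__ == "__main__":
    # Hypothetical link: 1000 km apart, 128-frame buffers at 48 kHz.
    total = one_way_latency_ms(1000, 128, 48_000)
    print(f"one-way latency: {total:.2f} ms")
    print(f"like standing {equivalent_distance_m(total):.1f} m apart in a room")
```

Converting the delay into an acoustic distance is a convenient way to judge it: musicians already cope with the delay of sound crossing a stage, so a network delay equivalent to a few metres is far easier to play through than one equivalent to a large hall.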

JamKazam is proprietary networked music performance software that enables real-time rehearsing, jamming and performing with musicians at remote locations. It aims to overcome latency, the time lapse that occurs while (compressed) audio streams travel to and from each musician.

References

- "LoLa suggested hardware" (PDF). lola.conts.it. Retrieved 6 January 2021.

- "LoLa, Low Latency Audio Visual Streaming System Installation & User's Manual, Version 2.0.0 (rev.001)" (PDF). lola.conts.it. Conservatorio di musica G. Tartini – Trieste, Italy. Retrieved 6 January 2021.

- "LOLA INSTALLATIONS". Google My Maps. Retrieved 6 January 2021.

- Siglin, Tim (14 June 2018). "Come Together: Streaming in Professional Music Production". Streaming Media Magazine. Retrieved 6 January 2021.

- Vnukowski, Daniel (22 December 2020). "As a concert pianist, the stage is my life. But the pandemic taught me to love the livestream". Los Angeles Times. Retrieved 6 January 2021.

- Pooran, Neil (26 October 2018). "Poppies will be projected on campus for Armistice concert". edinburghlive. Retrieved 6 January 2021.

- "Pinchas Zukerman's Secret — Staying Curious". San Francisco Classical Voice. 14 February 2017. Retrieved 6 January 2021.