A^+ B is close to 0

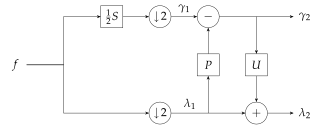

Shuang-ren Zhao defined a local inverse [2] to solve the above problem. First consider the simplest solution

$$f = A x + B y$$

or

$$A x = f - B y = f_0.$$

Here $f_0 = f - B y$ is the correct data, in which there is no influence of the outside object function. From this data it is easy to get the correct solution,

$$x_0 = A^{-1} f_0.$$

Here $x_0$ is a correct (or exact) solution for the unknown $x$, which means $x_0 = x$. In case $A$ is not a square matrix or has no inverse, the generalized inverse can be applied,

$$x_0 = A^+ (f - B y) = A^+ f_0.$$

Since $y$ is unknown, if it is set to $0$, an approximate solution is obtained,

$$x_1 = A^+ f.$$

In the above solution the result $x_1$ depends on the unknown vector $y$. Since $y$ can have any value, the result $x_1$ has very strong artifacts, namely

$$\mathrm{error}_1 = |x_1 - x_0| = |A^+ B y|.$$
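The dependence of the naive solution on the unknown outside object can be illustrated numerically. The sketch below is only an illustration under stated assumptions: `a`, `b`, `x_true` and the helper names are hypothetical, both $A$ and $B$ are taken to be single nonzero columns (so that the Moore-Penrose pseudoinverse of a column $v$ is simply $v^T / (v^T v)$), and the generalized inverse is assumed to be the Moore-Penrose one.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


def pinv_column(v):
    """Moore-Penrose pseudoinverse of a nonzero column v, returned as a row: v^T / (v^T v)."""
    norm_sq = dot(v, v)
    return [vi / norm_sq for vi in v]


# Illustrative (assumed) single-column blocks A and B, and the exact inside unknown x.
a = [1.0, 1.0, 0.0]
b = [0.0, 1.0, 1.0]
x_true = 2.0


def naive_solution(y):
    """x_1 = A^+ f, where f = A x + B y is measured and y is silently treated as 0."""
    f = [ai * x_true + bi * y for ai, bi in zip(a, b)]
    return dot(pinv_column(a), f)


# error_1 = |x_1 - x_0| for a few values of the unknown outside object y.
errors = {y: abs(naive_solution(y) - x_true) for y in (0.0, 1.0, 10.0)}
for y, err in errors.items():
    print(f"y = {y:5.1f}   error_1 = {err:.3f}")
```

For these particular values $A^+ B = 0.5$, so the error is $0.5\,|y|$ and grows without bound as the outside object grows.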

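These kinds of artifacts are referred to as truncation artifacts in the field of CT image reconstruction. In order to minimize the above artifacts in the solution, a special matrix $Q$ is considered, which satisfies

$$Q B = 0$$

and thus satisfies

$$Q A x = Q f - Q B y = Q f.$$

Solving the above equation with the generalized inverse gives

$$x_2 = [Q A]^+ Q f = A^+ Q^+ Q f.$$

The second equality takes $[QA]^+ = A^+ Q^+$, a reverse-order rule that does not hold for arbitrary matrices and is assumed here.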

Here $Q^+$ is the generalized inverse of $Q$, and $x_2$ is a solution for $x$. It is easy to find a matrix $Q$ which satisfies $Q B = 0$; specifically, $Q$ can be written as follows:

$$Q = I - B B^+.$$

This matrix $Q$ is referred to as the transverse projection of $B$, and $B^+$ is the generalized inverse of $B$. The matrix $B^+$ satisfies

$$B B^+ B = B,$$

from which it follows that

$$Q B = [I - B B^+] B = B - B B^+ B = B - B = 0.$$

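It is easy to prove that $Q Q = Q$:

$$Q Q = [I - B B^+][I - B B^+] = I - 2 B B^+ + B B^+ B B^+ = I - 2 B B^+ + B B^+ = I - B B^+ = Q,$$

and hence

$$Q Q Q = (Q Q) Q = Q Q = Q.$$

Hence $Q$ is also a generalized inverse of $Q$. That means

$$Q^+ = Q.$$

Hence,

$$x_2 = A^+ Q^+ Q f = A^+ Q Q f = A^+ Q f$$

or

$$x_2 = A^+ [I - B B^+] f.$$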
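As a concrete check of the projector properties used in this derivation, consider a small worked example. The single-column $B$ below is an illustrative assumption chosen for easy arithmetic, not a value from the source, and $B^+$ is taken to be the Moore-Penrose pseudoinverse:

$$B = \begin{bmatrix} 0 \\ 1 \\ 1 \end{bmatrix}, \qquad B^+ = \frac{B^T}{B^T B} = \tfrac{1}{2}\begin{bmatrix} 0 & 1 & 1 \end{bmatrix}, \qquad B B^+ = \tfrac{1}{2}\begin{bmatrix} 0 & 0 & 0 \\ 0 & 1 & 1 \\ 0 & 1 & 1 \end{bmatrix}$$

$$Q = I - B B^+ = \begin{bmatrix} 1 & 0 & 0 \\ 0 & \tfrac{1}{2} & -\tfrac{1}{2} \\ 0 & -\tfrac{1}{2} & \tfrac{1}{2} \end{bmatrix}, \qquad Q B = \begin{bmatrix} 0 \\ \tfrac{1}{2} - \tfrac{1}{2} \\ -\tfrac{1}{2} + \tfrac{1}{2} \end{bmatrix} = 0$$

$$Q Q = \begin{bmatrix} 1 & 0 & 0 \\ 0 & \tfrac{1}{4} + \tfrac{1}{4} & -\tfrac{1}{4} - \tfrac{1}{4} \\ 0 & -\tfrac{1}{4} - \tfrac{1}{4} & \tfrac{1}{4} + \tfrac{1}{4} \end{bmatrix} = Q$$

In this example $Q$ is symmetric as well as idempotent, so it is an orthogonal projector onto the complement of the range of $B$.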

The matrix

$$A^L = A^+ Q = A^+ [I - B B^+]$$

is referred to as the local inverse of the matrix $\begin{bmatrix} A & B \\ C & D \end{bmatrix}$. Using the local inverse instead of the generalized inverse or the inverse can avoid artifacts from unknown input data. Considering

$$A^+ [I - B B^+] f = A^+ [I - B B^+] (f_0 + B y) = A^+ [I - B B^+] f_0,$$

there is

$$x_2 = A^+ [I - B B^+] f_0.$$

Hence $x_2$ is only related to the correct data $f_0$. This kind of error can be calculated as

$$\mathrm{error}_2 = |x_2 - x_0| = |A^+ B B^+ f_0|.$$

This kind of error is called the bowl effect. The bowl effect is not related to the unknown object $y$; it is only related to the correct data $f_0$.
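The independence of the local inverse solution from the outside object can be checked with a small numerical sketch. As before, this is only an illustration under assumptions: `a`, `b`, `x_true` and all helper names are hypothetical, $A$ and $B$ are single nonzero columns, and the generalized inverse is taken to be the Moore-Penrose pseudoinverse.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


def pinv_column(v):
    """Moore-Penrose pseudoinverse of a nonzero column v, returned as a row: v^T / (v^T v)."""
    norm_sq = dot(v, v)
    return [vi / norm_sq for vi in v]


def apply_q(b, f):
    """Apply Q = I - B B^+ to f for a single-column B: Q f = f - b (B^+ f)."""
    coeff = dot(pinv_column(b), f)
    return [fi - bi * coeff for fi, bi in zip(f, b)]


def local_inverse_solution(a, b, f):
    """x_2 = A^+ Q f = A^+ [I - B B^+] f for single-column A and B."""
    return dot(pinv_column(a), apply_q(b, f))


# Illustrative (assumed) blocks and exact inside unknown.
a = [1.0, 1.0, 0.0]
b = [0.0, 1.0, 1.0]
x_true = 2.0

results = {}
for y in (0.0, 1.0, 10.0):
    f = [ai * x_true + bi * y for ai, bi in zip(a, b)]   # measured data f = A x + B y
    x1 = dot(pinv_column(a), f)                          # naive solution A^+ f
    x2 = local_inverse_solution(a, b, f)                 # local inverse solution
    results[y] = (abs(x1 - x_true), abs(x2 - x_true))
    print(f"y = {y:5.1f}   error_1 = {results[y][0]:.3f}   error_2 = {results[y][1]:.3f}")
```

With these values error_1 equals $0.5\,|y|$ while error_2 stays at $0.5$ for every $y$. The local inverse is therefore the better choice once $|y| > 1$, and the worse one when the outside object is actually absent.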

In case the contribution of $A^+ B B^+ f_0$ to $x$ is smaller than that of $A^+ B y$, or

$$|A^+ B B^+ f_0| \ll |A^+ B y|,$$

the local inverse solution $x_2$ is better than $x_1$ for this kind of inverse problem. Using $x_2$ instead of $x_1$, the truncation artifacts are replaced by the bowl effect. This result is the same as in local tomography; hence the local inverse is a direct extension of the concept of local tomography.

It is well known that the generalized inverse gives a minimal L2 norm solution. From the above derivation it is clear that the local inverse gives a minimal L2 norm solution subject to the condition that the influence of the unknown object $y$ is $0$. Hence the local inverse is also a direct extension of the concept of the generalized inverse.