In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable , which takes values in the alphabet and is distributed according to :

In theoretical computer science, communication complexity studies the amount of communication required to solve a problem when the input to the problem is distributed among two or more parties. The study of communication complexity was first introduced by Andrew Yao in 1979, while studying the problem of computation distributed among several machines. The problem is usually stated as follows: two parties each receive a -bit string and . The goal is for Alice to compute the value of a certain function, , that depends on both and , with the least amount of communication between them.

A binary symmetric channel is a common communications channel model used in coding theory and information theory. In this model, a transmitter wishes to send a bit, and the receiver will receive a bit. The bit will be "flipped" with a "crossover probability" of p, and otherwise is received correctly. This model can be applied to varied communication channels such as telephone lines or disk drive storage.

In probability and statistics, a Bernoulli process is a finite or infinite sequence of binary random variables, so it is a discrete-time stochastic process that takes only two values, canonically 0 and 1. The component Bernoulli variablesXi are identically distributed and independent. Prosaically, a Bernoulli process is a repeated coin flipping, possibly with an unfair coin. Every variable Xi in the sequence is associated with a Bernoulli trial or experiment. They all have the same Bernoulli distribution. Much of what can be said about the Bernoulli process can also be generalized to more than two outcomes ; this generalization is known as the Bernoulli scheme.

Golomb coding is a lossless data compression method using a family of data compression codes invented by Solomon W. Golomb in the 1960s. Alphabets following a geometric distribution will have a Golomb code as an optimal prefix code, making Golomb coding highly suitable for situations in which the occurrence of small values in the input stream is significantly more likely than large values.

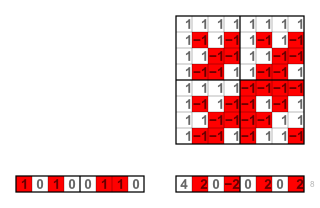

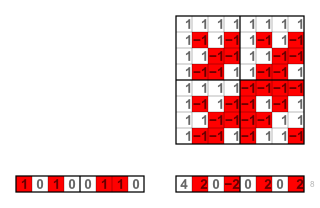

In mathematics, more specifically in harmonic analysis, Walsh functions form a complete orthogonal set of functions that can be used to represent any discrete function—just like trigonometric functions can be used to represent any continuous function in Fourier analysis. They can thus be viewed as a discrete, digital counterpart of the continuous, analog system of trigonometric functions on the unit interval. But unlike the sine and cosine functions, which are continuous, Walsh functions are piecewise constant. They take the values −1 and +1 only, on sub-intervals defined by dyadic fractions.

The Hadamard transform is an example of a generalized class of Fourier transforms. It performs an orthogonal, symmetric, involutive, linear operation on 2m real numbers.

In coding theory, block codes are a large and important family of error-correcting codes that encode data in blocks. There is a vast number of examples for block codes, many of which have a wide range of practical applications. The abstract definition of block codes is conceptually useful because it allows coding theorists, mathematicians, and computer scientists to study the limitations of all block codes in a unified way. Such limitations often take the form of bounds that relate different parameters of the block code to each other, such as its rate and its ability to detect and correct errors.

The dyadic transformation is the mapping

In mathematics, the jet is an operation that takes a differentiable function f and produces a polynomial, the truncated Taylor polynomial of f, at each point of its domain. Although this is the definition of a jet, the theory of jets regards these polynomials as being abstract polynomials rather than polynomial functions.

Reed–Muller codes are error-correcting codes that are used in wireless communications applications, particularly in deep-space communication. Moreover, the proposed 5G standard relies on the closely related polar codes for error correction in the control channel. Due to their favorable theoretical and mathematical properties, Reed–Muller codes have also been extensively studied in theoretical computer science.

The Hadamard code is an error-correcting code named after Jacques Hadamard that is used for error detection and correction when transmitting messages over very noisy or unreliable channels. In 1971, the code was used to transmit photos of Mars back to Earth from the NASA space probe Mariner 9. Because of its unique mathematical properties, the Hadamard code is not only used by engineers, but also intensely studied in coding theory, mathematics, and theoretical computer science. The Hadamard code is also known under the names Walsh code, Walsh family, and Walsh–Hadamard code in recognition of the American mathematician Joseph Leonard Walsh.

In mathematical physics, the primon gas or Riemann gas discovered by Bernard Julia is a model illustrating correspondences between number theory and methods in quantum field theory, statistical mechanics and dynamical systems such as the Lee-Yang theorem. It is a quantum field theory of a set of non-interacting particles, the primons; it is called a gas or a free model because the particles are non-interacting. The idea of the primon gas was independently discovered by Donald Spector. Later works by Ioannis Bakas and Mark Bowick, and Spector explored the connection of such systems to string theory.

In theoretical computer science, circuit complexity is a branch of computational complexity theory in which Boolean functions are classified according to the size or depth of the Boolean circuits that compute them. A related notion is the circuit complexity of a recursive language that is decided by a uniform family of circuits .

In theoretical computer science, a small-bias sample space is a probability distribution that fools parity functions. In other words, no parity function can distinguish between a small-bias sample space and the uniform distribution with high probability, and hence, small-bias sample spaces naturally give rise to pseudorandom generators for parity functions.

A randomness extractor, often simply called an "extractor", is a function, which being applied to output from a weak entropy source, together with a short, uniformly random seed, generates a highly random output that appears independent from the source and uniformly distributed. Examples of weakly random sources include radioactive decay or thermal noise; the only restriction on possible sources is that there is no way they can be fully controlled, calculated or predicted, and that a lower bound on their entropy rate can be established. For a given source, a randomness extractor can even be considered to be a true random number generator (TRNG); but there is no single extractor that has been proven to produce truly random output from any type of weakly random source.

A locally testable code is a type of error-correcting code for which it can be determined if a string is a word in that code by looking at a small number of bits of the string. In some situations, it is useful to know if the data is corrupted without decoding all of it so that appropriate action can be taken in response. For example, in communication, if the receiver encounters a corrupted code, it can request the data be re-sent, which could increase the accuracy of said data. Similarly, in data storage, these codes can allow for damaged data to be recovered and rewritten properly.

A locally decodable code (LDC) is an error-correcting code that allows a single bit of the original message to be decoded with high probability by only examining a small number of bits of a possibly corrupted codeword. This property could be useful, say, in a context where information is being transmitted over a noisy channel, and only a small subset of the data is required at a particular time and there is no need to decode the entire message at once. Note that locally decodable codes are not a subset of locally testable codes, though there is some overlap between the two.

In coding theory, Zemor's algorithm, designed and developed by Gilles Zemor, is a recursive low-complexity approach to code construction. It is an improvement over the algorithm of Sipser and Spielman.

The Bernstein–Vazirani algorithm, which solves the Bernstein–Vazirani problem, is a quantum algorithm invented by Ethan Bernstein and Umesh Vazirani in 1997. It is a restricted version of the Deutsch–Jozsa algorithm where instead of distinguishing between two different classes of functions, it tries to learn a string encoded in a function. The Bernstein–Vazirani algorithm was designed to prove an oracle separation between complexity classes BQP and BPP.