In algebra, a division ring, also called a skew field, is a nontrivial ring in which division by nonzero elements is defined. Specifically, it is a nontrivial ring in which every nonzero element a has a multiplicative inverse, that is, an element usually denoted a–1, such that a a–1 = a–1 a = 1. So, (right) division may be defined as a / b = a b–1, but this notation is avoided, as one may have a b–1 ≠ b–1 a.

Linear algebra is the branch of mathematics concerning linear equations such as:

In mathematics, the quaternion number system extends the complex numbers. Quaternions were first described by the Irish mathematician William Rowan Hamilton in 1843 and applied to mechanics in three-dimensional space. The algebra of quaternions is often denoted by H, or in blackboard bold by Quaternions are not a field, because multiplication of quaternions is not, in general, commutative. Quaternions provide a definition of the quotient of two vectors in a three-dimensional space. Quaternions are generally represented in the form

A computer algebra system (CAS) or symbolic algebra system (SAS) is any mathematical software with the ability to manipulate mathematical expressions in a way similar to the traditional manual computations of mathematicians and scientists. The development of the computer algebra systems in the second half of the 20th century is part of the discipline of "computer algebra" or "symbolic computation", which has spurred work in algorithms over mathematical objects such as polynomials.

In abstract algebra, a branch of mathematics, a simple ring is a non-zero ring that has no two-sided ideal besides the zero ideal and itself. In particular, a commutative ring is a simple ring if and only if it is a field.

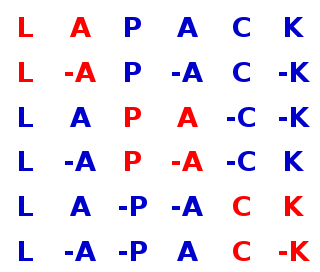

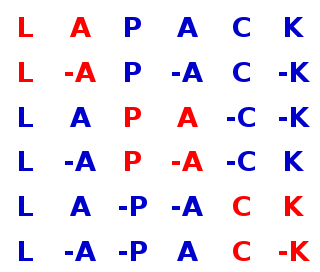

LAPACK is a standard software library for numerical linear algebra. It provides routines for solving systems of linear equations and linear least squares, eigenvalue problems, and singular value decomposition. It also includes routines to implement the associated matrix factorizations such as LU, QR, Cholesky and Schur decomposition. LAPACK was originally written in FORTRAN 77, but moved to Fortran 90 in version 3.2 (2008). The routines handle both real and complex matrices in both single and double precision. LAPACK relies on an underlying BLAS implementation to provide efficient and portable computational building blocks for its routines.

Basic Linear Algebra Subprograms (BLAS) is a specification that prescribes a set of low-level routines for performing common linear algebra operations such as vector addition, scalar multiplication, dot products, linear combinations, and matrix multiplication. They are the de facto standard low-level routines for linear algebra libraries; the routines have bindings for both C and Fortran. Although the BLAS specification is general, BLAS implementations are often optimized for speed on a particular machine, so using them can bring substantial performance benefits. BLAS implementations will take advantage of special floating point hardware such as vector registers or SIMD instructions.

Mathematical software is software used to model, analyze or calculate numeric, symbolic or geometric data.

The Template Numerical Toolkit is a software library for manipulating vectors and matrices in C++ created by the U.S. National Institute of Standards and Technology. TNT provides the fundamental linear algebra operations. TNT is analogous to the BLAS library used by LAPACK. Higher level algorithms, such as LU decomposition and singular value decomposition, are provided by JAMA, also developed at NIST, which uses TNT.

Numerical linear algebra, sometimes called applied linear algebra, is the study of how matrix operations can be used to create computer algorithms which efficiently and accurately provide approximate answers to questions in continuous mathematics. It is a subfield of numerical analysis, and a type of linear algebra. Computers use floating-point arithmetic and cannot exactly represent irrational data, so when a computer algorithm is applied to a matrix of data, it can sometimes increase the difference between a number stored in the computer and the true number that it is an approximation of. Numerical linear algebra uses properties of vectors and matrices to develop computer algorithms that minimize the error introduced by the computer, and is also concerned with ensuring that the algorithm is as efficient as possible.

The FEniCS Project is a collection of free and open-source software components with the common goal to enable automated solution of differential equations. The components provide scientific computing tools for working with computational meshes, finite-element variational formulations of ordinary and partial differential equations, and numerical linear algebra.

In mathematics, a matrix is a rectangular array or table of numbers, symbols, or expressions, with elements or entries arranged in rows and columns, which is used to represent a mathematical object or property of such an object.

In computer science, array is a data type that represents a collection of elements, each selected by one or more indices that can be computed at run time during program execution. Such a collection is usually called an array variable or array value. By analogy with the mathematical concepts vector and matrix, array types with one and two indices are often called vector type and matrix type, respectively. More generally, a multidimensional array type can be called a tensor type, by analogy with the physical concept, tensor.

Locally Optimal Block Preconditioned Conjugate Gradient (LOBPCG) is a matrix-free method for finding the largest eigenvalues and the corresponding eigenvectors of a symmetric generalized eigenvalue problem

In computer science, a 4D vector is a 4-component vector data type. Uses include homogeneous coordinates for 3-dimensional space in computer graphics, and red green blue alpha (RGBA) values for bitmap images with a color and alpha channel. They may also represent quaternions although the algebra they define is different.

GraphBLAS is an API specification that defines standard building blocks for graph algorithms in the language of linear algebra. GraphBLAS is built upon the notion that a sparse matrix can be used to represent graphs as either an adjacency matrix or an incidence matrix. The GraphBLAS specification describes how graph operations can be efficiently implemented via linear algebraic methods over different semirings.