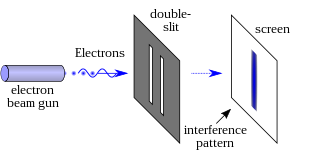

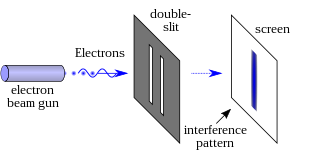

In modern physics, the double-slit experiment demonstrates that light and matter can exhibit behavior of both classical particles and classical waves. This type of experiment was first performed by Thomas Young in 1801, as a demonstration of the wave behavior of visible light. In 1927, Davisson and Germer and, independently, George Paget Thomson and his research student Alexander Reid demonstrated that electrons show the same behavior, a result that was later extended to atoms and molecules. Thomas Young's experiment with light was part of classical physics long before the development of quantum mechanics and the concept of wave–particle duality. He believed it demonstrated that Christiaan Huygens' wave theory of light was correct, and his experiment is sometimes referred to as Young's experiment or Young's slits.

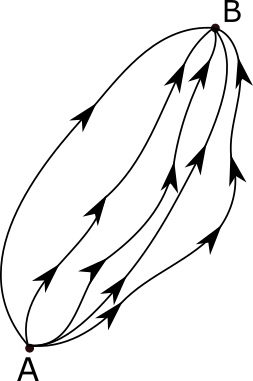

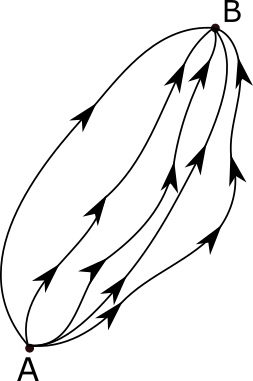

In theoretical physics, a Feynman diagram is a pictorial representation of the mathematical expressions describing the behavior and interaction of subatomic particles. The scheme is named after American physicist Richard Feynman, who introduced the diagrams in 1948. The interaction of subatomic particles can be complex and difficult to understand; Feynman diagrams give a simple visualization of what would otherwise be an arcane and abstract formula. According to David Kaiser, "Since the middle of the 20th century, theoretical physicists have increasingly turned to this tool to help them undertake critical calculations. Feynman diagrams have revolutionized nearly every aspect of theoretical physics." While the diagrams are applied primarily to quantum field theory, they can also be used in other areas of physics, such as solid-state theory. Frank Wilczek wrote that the calculations that won him the 2004 Nobel Prize in Physics "would have been literally unthinkable without Feynman diagrams, as would [Wilczek's] calculations that established a route to production and observation of the Higgs particle."

The Huygens–Fresnel principle states that every point on a wavefront is itself the source of spherical wavelets, and the secondary wavelets emanating from different points mutually interfere. The sum of these spherical wavelets forms a new wavefront. As such, the Huygens–Fresnel principle is a method of analysis applied to problems of luminous wave propagation both in the far-field limit and in near-field diffraction, as well as in reflection.
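The superposition of wavelets described above can be illustrated with a minimal Python sketch. It assumes two point-like sources (a hypothetical pair of slits) and sums one spherical wavelet `exp(i k r) / r` per source at a point on a distant screen. The function name `screen_intensity` and all numerical values are illustrative assumptions, not part of the principle's formal statement.

```python
import cmath
import math

def screen_intensity(y, sources, wavelength, distance):
    """Sum one spherical wavelet exp(i*k*r)/r per source at screen height y."""
    k = 2 * math.pi / wavelength          # wavenumber
    total = 0j
    for y_src in sources:
        r = math.hypot(distance, y - y_src)   # source-to-screen distance
        total += cmath.exp(1j * k * r) / r
    return abs(total) ** 2                # intensity is |amplitude|^2

# Hypothetical setup: two point sources 50 micrometres apart, screen 1 m away.
wavelength = 500e-9
slit_separation = 50e-6
screen_distance = 1.0
sources = [-slit_separation / 2, slit_separation / 2]

# Bright centre: both wavelets travel the same distance and add in phase.
centre = screen_intensity(0.0, sources, wavelength, screen_distance)

# Small-angle estimate of the first dark fringe: path difference = wavelength / 2.
y_dark = wavelength * screen_distance / (2 * slit_separation)
dark = screen_intensity(y_dark, sources, wavelength, screen_distance)

print(f"centre intensity: {centre:.6f}")
print(f"dark-fringe intensity relative to centre: {dark / centre:.3e}")
```

Modelling a slit of finite width would require many more source points per slit; two sources are enough to show constructive and destructive interference.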

In mathematics, an integral is the continuous analog of a sum, which is used to calculate areas, volumes, and their generalizations. Integration, the process of computing an integral, is one of the two fundamental operations of calculus, the other being differentiation. Integration was initially used to solve problems in mathematics and physics, such as finding the area under a curve, or determining displacement from velocity. Usage of integration expanded to a wide variety of scientific fields thereafter.
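As a small illustration of "determining displacement from velocity", the following sketch approximates an integral with the composite trapezoidal rule. The velocity profile `velocity(t) = 3 t^2` is a made-up example, chosen because its exact integral over `[0, 2]` is known to be 8.

```python
def trapezoid(f, a, b, n=1000):
    """Approximate the integral of f over [a, b] with n trapezoids."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))       # endpoints carry half weight
    for i in range(1, n):
        total += f(a + i * h)         # interior points carry full weight
    return total * h

def velocity(t):
    """Hypothetical velocity in m/s at time t (seconds)."""
    return 3.0 * t ** 2

# Displacement is the integral of velocity over the time interval.
displacement = trapezoid(velocity, 0.0, 2.0)
print(displacement)
```

Increasing `n` shrinks the error, which for this rule scales with the square of the step size.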

The Novikov self-consistency principle, also known as the Novikov self-consistency conjecture and Larry Niven's law of conservation of history, is a principle developed by Russian physicist Igor Dmitriyevich Novikov in the mid-1980s. Novikov intended it to solve the problem of paradoxes in time travel, which is theoretically permitted in certain solutions of general relativity that contain what are known as closed timelike curves. The principle asserts that if an event exists that would cause a paradox or any "change" to the past whatsoever, then the probability of that event is zero. It would thus be impossible to create time paradoxes.

In physics, specifically statistical mechanics, an ensemble is an idealization consisting of a large number of virtual copies of a system, considered all at once, each of which represents a possible state that the real system might be in. In other words, a statistical ensemble is a set of systems of particles used in statistical mechanics to describe a single system. The concept of an ensemble was introduced by J. Willard Gibbs in 1902.

In quantum physics, a wave function is a mathematical description of the quantum state of an isolated quantum system. The most common symbols for a wave function are the Greek letters ψ and Ψ. Wave functions are complex-valued. For example, a wave function might assign a complex number to each point in a region of space. The Born rule provides the means to turn these complex probability amplitudes into actual probabilities. In one common form, it says that the squared modulus of a wave function that depends upon position is the probability density of measuring a particle as being at a given place. The integral of a wave function's squared modulus over all the system's degrees of freedom must be equal to 1, a condition called normalization. Since the wave function is complex-valued, only its relative phase and relative magnitude can be measured; its value does not, in isolation, tell us anything about the magnitudes or directions of measurable observables. One has to apply quantum operators, whose eigenvalues correspond to sets of possible results of measurements, to the wave function ψ and calculate the statistical distributions for measurable quantities.
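The Born rule and the normalization condition can be checked numerically. The sketch below assumes a one-dimensional Gaussian wave packet as a stand-in for a generic wave function; the names `psi` and `probability` and the parameters `sigma` and `k0` are illustrative choices. It integrates the squared modulus with the midpoint rule.

```python
import cmath
import math

def psi(x, sigma=1.0, k0=2.0):
    """Hypothetical Gaussian wave packet: real envelope times a complex phase."""
    norm = (math.pi * sigma ** 2) ** -0.25
    return norm * math.exp(-x ** 2 / (2 * sigma ** 2)) * cmath.exp(1j * k0 * x)

def probability(a, b, n=20000):
    """Born rule: integrate |psi|^2 over [a, b] using the midpoint rule."""
    h = (b - a) / n
    return sum(abs(psi(a + (i + 0.5) * h)) ** 2 for i in range(n)) * h

# Normalization: the total probability should be very close to 1.
total = probability(-10.0, 10.0)

# Probability of finding the particle within one sigma of the origin.
inside = probability(-1.0, 1.0)

print(f"total probability: {total:.8f}")
print(f"probability in [-1, 1]: {inside:.8f}")
```

The phase factor `exp(i k0 x)` drops out of `|psi|^2`, which reflects the statement that the value of the wave function alone does not fix measurable quantities.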

The phase space of a physical system is the set of all possible physical states of the system when described by a given parameterization. Each possible state corresponds uniquely to a point in the phase space. For mechanical systems, the phase space usually consists of all possible values of the position and momentum parameters. It is the direct product of direct space and reciprocal space. The concept of phase space was developed in the late 19th century by Ludwig Boltzmann, Henri Poincaré, and Josiah Willard Gibbs.

Functional integration is a collection of results in mathematics and physics where the domain of an integral is no longer a region of space, but a space of functions. Functional integrals arise in probability, in the study of partial differential equations, and in the path integral approach to the quantum mechanics of particles and fields.

In physics, action is a scalar quantity that describes how the balance of kinetic versus potential energy of a physical system changes with trajectory. Action is significant because it is an input to the principle of stationary action, an approach to classical mechanics that is simpler to apply to systems of multiple objects. Action and the variational principle are used in Feynman's formulation of quantum mechanics and in general relativity. For systems whose action is small, comparable to the Planck constant, quantum effects are significant.
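A rough numerical illustration of the principle of stationary action follows. It assumes a unit-mass particle in uniform gravity with both endpoints at height zero, and discretizes the action as a sum of (kinetic minus potential energy) times the time step. The function `action`, the helper `perturbed`, and the parameter values are hypothetical choices made for this sketch.

```python
import math

def action(path, dt, mass=1.0, potential=lambda x: 0.0):
    """Discretized action: sum of (kinetic - potential) * dt over each segment."""
    s = 0.0
    for x0, x1 in zip(path, path[1:]):
        kinetic = 0.5 * mass * ((x1 - x0) / dt) ** 2
        s += (kinetic - potential(0.5 * (x0 + x1))) * dt   # potential at midpoint
    return s

g = 9.81            # gravitational acceleration (m/s^2)
T = 1.0             # total travel time (s)
n = 200             # number of time steps
dt = T / n
times = [i * dt for i in range(n + 1)]

def gravity(x):
    """Potential energy of a unit mass at height x."""
    return g * x

# Classical trajectory: thrown up at t = 0, back at height 0 at t = T.
classical = [0.5 * g * t * (T - t) for t in times]

def perturbed(eps):
    """Classical path plus a bump that vanishes at both endpoints (up to rounding)."""
    return [x + eps * math.sin(math.pi * t / T) for x, t in zip(classical, times)]

s_classical = action(classical, dt, potential=gravity)
s_perturbed = [action(perturbed(eps), dt, potential=gravity) for eps in (-0.1, 0.1)]

print(f"classical action: {s_classical:.5f}")
print(f"perturbed actions: {[round(s, 5) for s in s_perturbed]}")
```

In this particular setup the classical path gives a smaller action than either perturbed path. In general the principle only requires the action to be stationary, not minimal.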

In quantum physics, an anomaly or quantum anomaly is the failure of a symmetry of a theory's classical action to be a symmetry of any regularization of the full quantum theory. In classical physics, a classical anomaly is the failure of a symmetry to be restored in the limit in which the symmetry-breaking parameter goes to zero. Perhaps the first known anomaly was the dissipative anomaly in turbulence: time-reversibility remains broken in the limit of vanishing viscosity.

In mathematics, an integral transform is a type of transform that maps a function from its original function space into another function space via integration, where some of the properties of the original function might be more easily characterized and manipulated than in the original function space. The transformed function can generally be mapped back to the original function space using the inverse transform.
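In symbols, an integral transform $T$ is commonly written in the following general form, where the kernel $K$ and the limits $t_1$, $t_2$ are chosen per transform (the symbol names here are a conventional notation, not fixed by the text above):

$$
(Tf)(u) = \int_{t_1}^{t_2} f(t)\, K(t, u)\, dt
$$

For example, the Laplace transform uses the kernel $K(t, u) = e^{-ut}$ on $[0, \infty)$, and one common convention for the Fourier transform uses $K(t, u) = e^{-2\pi i u t}$ on $(-\infty, \infty)$.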

The path integral formulation is a description in quantum mechanics that generalizes the stationary action principle of classical mechanics. It replaces the classical notion of a single, unique classical trajectory for a system with a sum, or functional integral, over an infinity of quantum-mechanically possible trajectories to compute a quantum amplitude.
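For a single particle, the sum over trajectories is usually written schematically as follows, with each path $x(t)$ from $(x_a, t_a)$ to $(x_b, t_b)$ weighted by a phase set by its classical action $S$ (the symbols $K$, $\mathcal{D}$, and $L$ follow a common textbook notation and are assumptions here, not notation taken from the text above):

$$
K(x_b, t_b; x_a, t_a) = \int \mathcal{D}[x(t)]\, \exp\!\left(\frac{i}{\hbar} S[x(t)]\right),
\qquad
S[x(t)] = \int_{t_a}^{t_b} L(x, \dot{x}, t)\, dt
$$

Paths near the one that makes $S$ stationary contribute with nearly equal phases and add constructively, which is how the classical trajectory emerges when $S$ is large compared with $\hbar$.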

In physics, the topological structure of spinfoam or spin foam consists of two-dimensional faces representing a configuration required by functional integration to obtain a Feynman path integral description of quantum gravity. These structures are employed in loop quantum gravity as a version of quantum foam.

In physics, Faddeev–Popov ghosts are extraneous fields which are introduced into gauge quantum field theories to maintain the consistency of the path integral formulation. They are named after Ludvig Faddeev and Victor Popov.

In theoretical physics, Euclidean quantum gravity is a version of quantum gravity. It seeks to use the Wick rotation to describe the force of gravity according to the principles of quantum mechanics.

The Feynman checkerboard, or relativistic chessboard model, was Richard Feynman's sum-over-paths formulation of the kernel for a free spin-1/2 particle moving in one spatial dimension. It provides a representation of solutions of the Dirac equation in (1+1)-dimensional spacetime as discrete sums.

In mathematics, a line integral is an integral where the function to be integrated is evaluated along a curve. The terms path integral, curve integral, and curvilinear integral are also used; contour integral is used as well, although that is typically reserved for line integrals in the complex plane.
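As a minimal sketch of a scalar line integral, the code below approximates the curve by short straight segments and sums the function value at each segment midpoint times the segment length. The function name `line_integral` and the example (integrating `f(x, y) = y` along the upper half of the unit circle, whose exact value is 2) are illustrative choices.

```python
import math

def line_integral(f, curve, t0, t1, n=2000):
    """Approximate the integral of f along curve(t), t in [t0, t1], by a polyline."""
    total = 0.0
    x_prev, y_prev = curve(t0)
    for i in range(1, n + 1):
        x, y = curve(t0 + (t1 - t0) * i / n)
        segment_length = math.hypot(x - x_prev, y - y_prev)
        # Evaluate f at the midpoint of the straight segment.
        total += f(0.5 * (x + x_prev), 0.5 * (y + y_prev)) * segment_length
        x_prev, y_prev = x, y
    return total

def upper_half_circle(t):
    """Unit circle parameterized by angle t."""
    return math.cos(t), math.sin(t)

result = line_integral(lambda x, y: y, upper_half_circle, 0.0, math.pi)
print(result)
```

Passing `lambda x, y: 1.0` as the integrand returns an approximation of the arc length of the curve.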

In quantum physics, a quantum state is a mathematical entity that embodies the knowledge of a quantum system. Quantum mechanics specifies the construction, evolution, and measurement of a quantum state. The result is a prediction for the system represented by the state. Knowledge of the quantum state, and the rules for the system's evolution in time, exhausts all that can be known about a quantum system.

In potential theory, a branch of mathematics, the Laplacian of the indicator of the domain D is a generalisation of the derivative of the Dirac delta function to higher dimensions, and is non-zero only on the surface of D. It can be viewed as the surface delta prime function. It is analogous to the second derivative of the Heaviside step function in one dimension. It can be obtained by letting the Laplace operator act on the indicator function of some domain D.