Related Research Articles

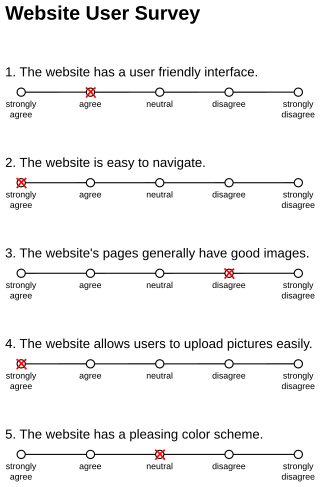

Questionnaire construction refers to the design of a questionnaire to gather statistically useful information about a given topic. When properly constructed and responsibly administered, questionnaires can provide valuable data about any given subject.

Survey methodology is "the study of survey methods". As a field of applied statistics concentrating on human-research surveys, survey methodology studies the sampling of individual units from a population and associated techniques of survey data collection, such as questionnaire construction and methods for improving the number and accuracy of responses to surveys. Survey methodology targets instruments or procedures that ask one or more questions that may or may not be answered.

An opinion poll, often simply referred to as a survey or a poll, is a human research survey of public opinion from a particular sample. Opinion polls are usually designed to represent the opinions of a population by asking a series of questions and then extrapolating generalities in ratio or within confidence intervals. A person who conducts polls is referred to as a pollster.
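As an illustration of extrapolating within confidence intervals, the sketch below computes a margin of error for a polled proportion. It assumes simple random sampling and uses the normal approximation at the 95% level (z = 1.96); the function name `margin_of_error` and its parameters are hypothetical and chosen for this example only. Real polls often use weighting and design effects that this sketch ignores.

```python
import math


def margin_of_error(proportion: float, sample_size: int, z: float = 1.96) -> float:
    """Approximate margin of error for a sample proportion.

    Assumes simple random sampling and the normal approximation;
    z = 1.96 corresponds to a 95% confidence level.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must be between 0 and 1")
    # Standard error of a proportion, scaled by the z-value.
    return z * math.sqrt(proportion * (1.0 - proportion) / sample_size)


# Example: 50% support in a sample of 1,000 respondents.
moe = margin_of_error(0.5, 1000)
low, high = 0.5 - moe, 0.5 + moe
print(f"Estimate: 50% +/- {moe * 100:.1f} points ({low:.3f} to {high:.3f})")
```

Under these assumptions, a sample of about 1,000 respondents gives a margin of roughly three percentage points for a proportion near 50%.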

A questionnaire is a research instrument that consists of a set of questions for the purpose of gathering information from respondents through a survey or statistical study. A research questionnaire is typically a mix of close-ended questions and open-ended questions. Open-ended, long-form questions offer the respondent the ability to elaborate on their thoughts. The research questionnaire was developed by the Statistical Society of London in 1838.

Response bias is a general term for a wide range of tendencies for participants to respond inaccurately or falsely to questions. These biases are prevalent in research involving participant self-report, such as structured interviews or surveys. Response biases can have a large impact on the validity of questionnaires or surveys.

A structured interview is a quantitative research method commonly employed in survey research. The aim of this approach is to ensure that each interviewee is presented with exactly the same questions in the same order. This ensures that answers can be reliably aggregated and that comparisons can be made with confidence between sample subgroups or between different survey periods.

In social science research, social-desirability bias is a type of response bias: the tendency of survey respondents to answer questions in a manner that will be viewed favorably by others. It can take the form of over-reporting "good" behavior or under-reporting "bad" or undesirable behavior. The tendency poses a serious problem for research that relies on self-reports. This bias interferes with the interpretation of average tendencies as well as individual differences.

In survey research, response rate, also known as completion rate or return rate, is the number of people who answered the survey divided by the number of people in the sample. It is usually expressed in the form of a percentage. The term is also used in direct marketing to refer to the number of people who responded to an offer.
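The definition above can be written as a short calculation. This is a minimal sketch; the function name `response_rate` and the example figures are illustrative assumptions, not taken from any particular survey.

```python
def response_rate(num_respondents: int, sample_size: int) -> float:
    """Return the response rate as a percentage.

    Computed as the number of people who answered the survey
    divided by the number of people in the sample, times 100.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0 <= num_respondents <= sample_size:
        raise ValueError("num_respondents must be between 0 and sample_size")
    return 100.0 * num_respondents / sample_size


# Example: 250 completed surveys out of a sample of 1,000 people.
print(f"{response_rate(250, 1000):.1f}%")
```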

A self-report study is a type of survey, questionnaire, or poll in which respondents read the question and select a response by themselves without any outside interference. A self-report is any method which involves asking a participant about their feelings, attitudes, beliefs and so on. Examples of self-reports are questionnaires and interviews; self-reports are often used as a way of gaining participants' responses in observational studies and experiments.

Cognitive pretesting, or cognitive interviewing, is a field research method where data is collected on how the subject answers interview questions. It is the evaluation of a test or questionnaire before it's administered. It allows survey researchers to collect feedback regarding survey responses and is used in evaluating whether the question is measuring the construct the researcher intends. The data collected is then used to adjust problematic questions in the questionnaire before fielding the survey to the full sample of people.

The IQ Controversy, the Media and Public Policy is a book published by Smith College professor emeritus Stanley Rothman and Harvard researcher Mark Snyderman in 1988. Claiming to document liberal bias in media coverage of scientific findings regarding intelligence quotient (IQ), the book builds on a survey of the opinions of hundreds of North American psychologists, sociologists and educationalists conducted by the authors in 1984. The book also includes an analysis of the reporting on intelligence testing by the press and television in the US for the period 1969–1983, as well as an opinion poll of 207 journalists and 86 science editors about IQ testing.

Computer-assisted web interviewing (CAWI) is an Internet surveying technique in which the interviewee follows a script provided on a website. The questionnaires are made in a program for creating web interviews. The program allows the questionnaire to contain pictures, audio and video clips, links to different web pages, etc. The website is able to customize the flow of the questionnaire based on the answers provided, as well as information already known about the participant. It is considered a cheaper way of surveying since, unlike computer-assisted telephone interviewing, it does not require interviewers to conduct the survey. With the increasing use of the Internet, online questionnaires have become a popular way of collecting information. The design of an online questionnaire has a dramatic effect on the quality of data gathered. There are many factors in designing an online questionnaire; guidelines, available question formats, administration, quality and ethical issues should be reviewed. Online questionnaires should be seen as a subset of a wider range of online research methods.
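Customizing the flow of a questionnaire based on earlier answers is often called skip logic or branching. The sketch below shows one simple way it could be modeled; the `QUESTIONS` table, the question identifiers, and the `route` function are all hypothetical and do not correspond to any specific CAWI product.

```python
# Hypothetical questionnaire: each question maps possible answers
# to the identifier of the next question to show.
QUESTIONS = {
    "owns_car": {
        "text": "Do you own a car?",
        "next": {"yes": "car_age", "no": "transit"},
    },
    "car_age": {"text": "How old is your car, in years?", "next": {}},
    "transit": {"text": "How often do you use public transit?", "next": {}},
}


def route(answers: dict, start: str = "owns_car") -> list:
    """Return the ordered list of question ids a respondent would see.

    Routing stops when a question has no follow-up for the given
    answer, or when the respondent has not answered it.
    """
    path = []
    current = start
    while current is not None:
        path.append(current)
        answer = answers.get(current)
        # Look up the follow-up question; None ends the interview.
        current = QUESTIONS[current]["next"].get(answer)
    return path


# A car owner is asked about the car; a non-owner is asked about transit.
print(route({"owns_car": "yes", "car_age": "5"}))
print(route({"owns_car": "no", "transit": "daily"}))
```

Keeping the routing rules in a data table rather than in code paths is one possible design choice; it lets the questionnaire be changed without rewriting the routing function.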

A closed-ended question refers to any question for which a researcher provides research participants with options from which to choose a response. Closed-ended questions are sometimes phrased as a statement which requires a response.

In research on human subjects, a survey is a list of questions aimed at extracting specific data from a particular group of people. Surveys may be conducted by phone, by mail, via the internet, and also at street corners or in malls. Surveys are used to gather or gain knowledge in fields such as social research and demography.

Participation bias or non-response bias is a phenomenon in which the results of elections, studies, polls, etc. become non-representative because the participants disproportionately possess certain traits which affect the outcome. These traits mean the sample is systematically different from the target population, potentially resulting in biased estimates.

Mode effect is a broad term referring to a phenomenon where a particular survey administration mode causes different data to be collected. For example, when asking a question using two different modes, responses to one mode may be significantly and substantially different from responses given in the other mode. Mode effects are a methodological artifact, limiting the ability to compare results from different modes of collection.

With the application of probability sampling in the 1930s, surveys became a standard tool for empirical research in social sciences, marketing, and official statistics. The methods involved in survey data collection are any of a number of ways in which data can be collected for a statistical survey. These are methods that are used to collect information from a sample of individuals in a systematic way. First there was the change from traditional paper-and-pencil interviewing (PAPI) to computer-assisted interviewing (CAI). Now, face-to-face surveys (CAPI), telephone surveys (CATI), and mail surveys are increasingly replaced by web surveys. In addition, remote interviewers could possibly keep the respondent engaged while reducing cost as compared to in-person interviewers.

Jon Alexander Krosnick is a professor of Political Science, Communication, and Psychology, and director of the Political Psychology Research Group (PPRG) at Stanford University. Additionally, he is the Frederic O. Glover Professor in Humanities and Social Sciences and an affiliate of the Woods Institute for the Environment. Krosnick has served as a consultant for government agencies, universities, and businesses, has testified as an expert in court proceedings, and has been an on-air television commentator on election night.

The interviewer effect is the distortion of responses to a personal or telephone interview that results from differential reactions to the social style and personality of interviewers or to their presentation of particular questions. More broadly, it is any effect on data gathered from interviewing people that is caused by the behavior or characteristics of the interviewer. The use of fixed-wording questions is one method of reducing interviewer bias. Anthropological research and case studies are also affected by the problem, which is exacerbated by the self-fulfilling prophecy when the researcher is also the interviewer.

Stanley Presser, a social scientist, is a Distinguished University Professor at the University of Maryland, where he teaches in the Sociology Department and the Joint Program in Survey Methodology (JPSM). He co-founded JPSM with colleagues at the University of Michigan and Westat, Inc., and served as its first director. He has also been editor of Public Opinion Quarterly and president of the American Association for Public Opinion Research.
