Definition

Let $\mu$ be a locally finite measure on $S$ and let $X$ be a random variable with $X \geq 0$ almost surely.

Then a random measure $\xi$ on $S$ is called a mixed Poisson process based on $\mu$ and $X$ if and only if, conditionally on $X = x$, $\xi$ is a Poisson process on $S$ with intensity measure $x\mu$.

In probability theory, a mixed Poisson process is a special point process that generalizes the Poisson process. Mixed Poisson processes are simple examples of Cox processes.

Mixed Poisson processes are doubly stochastic in the sense that, in a first step, the value of the random variable $X$ is determined. This value then determines the "second order stochasticity" by scaling the original intensity measure $\mu$ up or down.
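The two-step construction can be sketched in code. The following is a minimal illustration, not a reference implementation: it assumes the space is the interval $[0, t_{\max}]$, that the base intensity measure is `base_rate` times Lebesgue measure, and that the mixing variable is gamma-distributed. The function name and all parameters are hypothetical choices made for this example.

```python
import random

def sample_mixed_poisson(rng, base_rate, shape, scale, t_max=1.0):
    """Sample one realization of a mixed Poisson process on [0, t_max].

    Assumptions (illustrative only): the base measure is
    base_rate * Lebesgue, and the mixing variable is Gamma(shape, scale).
    """
    # Step 1: draw the nonnegative mixing variable.
    x = rng.gammavariate(shape, scale)
    rate = x * base_rate
    points = []
    if rate <= 0.0:
        return x, points
    # Step 2: conditionally on the drawn value, simulate a homogeneous
    # Poisson process with the scaled rate via exponential gaps.
    t = rng.expovariate(rate)
    while t <= t_max:
        points.append(t)
        t += rng.expovariate(rate)
    return x, points

rng = random.Random(0)
x, points = sample_mixed_poisson(rng, base_rate=5.0, shape=2.0, scale=0.5)
```

Repeating the call many times and comparing the sample variance of `len(points)` with its sample mean is one way to observe the extra variability that the random scaling introduces.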

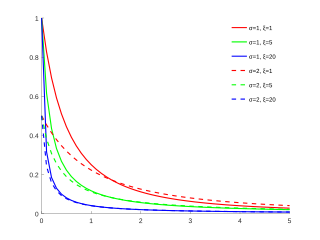

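Conditional on $X = x$, mixed Poisson processes have the intensity measure $x\mu$ and the Laplace transform

$$\mathcal{L}(f) = \exp\left(-\int \left(1 - e^{-f(y)}\right)\,(x\mu)(\mathrm{d}y)\right).$$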
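Conditioning on the mixing variable also gives the first two moments of the counts. The following short derivation is a sketch under added assumptions: $\xi$ denotes the mixed Poisson process, $X$ the mixing variable, $\mu$ the base measure, $A$ a measurable set with $\mu(A) < \infty$, and $\operatorname{E}[X^2] < \infty$. Given $X$, the count $\xi(A)$ is Poisson with mean $X\mu(A)$, so the tower property and the law of total variance yield

$$
\begin{aligned}
\operatorname{E}[\xi(A)] &= \operatorname{E}\bigl[\operatorname{E}[\xi(A) \mid X]\bigr] = \operatorname{E}[X]\,\mu(A), \\
\operatorname{Var}(\xi(A)) &= \operatorname{E}\bigl[\operatorname{Var}(\xi(A) \mid X)\bigr] + \operatorname{Var}\bigl(\operatorname{E}[\xi(A) \mid X]\bigr) \\
&= \operatorname{E}[X]\,\mu(A) + \operatorname{Var}(X)\,\mu(A)^2 .
\end{aligned}
$$

The variance exceeds the mean whenever $\operatorname{Var}(X) > 0$ and $\mu(A) > 0$, so the counts are overdispersed relative to an ordinary Poisson process.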

In probability theory and related fields, a stochastic or random process is a mathematical object usually defined as a family of random variables. Many stochastic processes can be represented by time series. However, a stochastic process is by nature continuous while a time series is a set of observations indexed by integers. A stochastic process may involve several related random variables.

In probability theory and statistics, a Gaussian process is a stochastic process, such that every finite collection of those random variables has a multivariate normal distribution, i.e. every finite linear combination of them is normally distributed. The distribution of a Gaussian process is the joint distribution of all those random variables, and as such, it is a distribution over functions with a continuous domain, e.g. time or space.

In probability theory, independent increments are a property of stochastic processes and random measures. Most of the time, a process or random measure has independent increments by definition, which underlines their importance. Some of the stochastic processes that by definition possess independent increments are the Wiener process, all Lévy processes, all additive processes and the Poisson point process.

In probability theory, a Lévy process, named after the French mathematician Paul Lévy, is a stochastic process with independent, stationary increments: it represents the motion of a point whose successive displacements are random, in which displacements in pairwise disjoint time intervals are independent, and displacements in different time intervals of the same length have identical probability distributions. A Lévy process may thus be viewed as the continuous-time analog of a random walk.

In mathematics, particularly in functional analysis, a projection-valued measure (PVM) is a function defined on certain subsets of a fixed set and whose values are self-adjoint projections on a fixed Hilbert space. Projection-valued measures are formally similar to real-valued measures, except that their values are self-adjoint projections rather than real numbers. As in the case of ordinary measures, it is possible to integrate complex-valued functions with respect to a PVM; the result of such an integration is a linear operator on the given Hilbert space.

In probability theory and statistics, the generalized extreme value (GEV) distribution is a family of continuous probability distributions developed within extreme value theory to combine the Gumbel, Fréchet and Weibull families also known as type I, II and III extreme value distributions. By the extreme value theorem the GEV distribution is the only possible limit distribution of properly normalized maxima of a sequence of independent and identically distributed random variables. Note that a limit distribution needs to exist, which requires regularity conditions on the tail of the distribution. Despite this, the GEV distribution is often used as an approximation to model the maxima of long (finite) sequences of random variables.

In statistics and probability theory, a point process or point field is a collection of mathematical points randomly located on a mathematical space such as the real line or Euclidean space. Point processes can be used as mathematical models of phenomena or objects representable as points in some type of space.

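Let $(E, \mathcal{A}, \mu)$ be some measure space with $\sigma$-finite measure $\mu$. The Poisson random measure with intensity measure $\mu$ is a family of random variables $\{N_A\}_{A \in \mathcal{A}}$ defined on some probability space $(\Omega, \mathcal{F}, \mathrm{P})$ such that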

In probability theory, a Cox process, also known as a doubly stochastic Poisson process, is a point process that generalizes the Poisson process: the intensity, which varies across the underlying mathematical space, is itself a stochastic process. The process is named after the statistician David Cox, who first published the model in 1955.

Expected shortfall (ES) is a risk measure, a concept used in the field of financial risk measurement to evaluate the market risk or credit risk of a portfolio. The "expected shortfall at q% level" is the expected return on the portfolio in the worst q% of cases. ES is an alternative to value at risk that is more sensitive to the shape of the tail of the loss distribution.

In statistics, the generalized Pareto distribution (GPD) is a family of continuous probability distributions. It is often used to model the tails of another distribution. It is specified by three parameters: location $\mu$, scale $\sigma$, and shape $\xi$. Sometimes it is specified by only scale and shape, and sometimes only by its shape parameter. Some references give the shape parameter as $\kappa = -\xi$.

In probability theory, a random measure is a measure-valued random element. Random measures are for example used in the theory of random processes, where they form many important point processes such as Poisson point processes and Cox processes.

Tail value at risk (TVaR), also known as tail conditional expectation (TCE) or conditional tail expectation (CTE), is a risk measure associated with the more general value at risk. It quantifies the expected value of the loss given that an event outside a given probability level has occurred.

In actuarial science and applied probability, ruin theory uses mathematical models to describe an insurer's vulnerability to insolvency or ruin. In such models, the key quantities of interest are the probability of ruin, the distribution of the surplus immediately prior to ruin, and the deficit at the time of ruin.

The generalized functional linear model (GFLM) is an extension of the generalized linear model (GLM) that allows one to regress univariate responses of various types on functional predictors, which are mostly random trajectories generated by square-integrable stochastic processes. As in the GLM, a link function relates the expected value of the response variable to a linear predictor, which in the case of the GFLM is obtained by forming the scalar product of the random predictor function $X$ with a smooth parameter function $\beta$. Functional Linear Regression, Functional Poisson Regression and Functional Binomial Regression, with the important Functional Logistic Regression included, are special cases of GFLM. Applications of GFLM include classification and discrimination of stochastic processes and functional data.

In mathematics, the Poisson boundary is a measure space associated to a random walk. It is an object designed to encode the asymptotic behaviour of the random walk, i.e. how trajectories diverge when the number of steps goes to infinity. Despite being called a boundary it is in general a purely measure-theoretical object and not a boundary in the topological sense. However, in the case where the random walk is on a topological space the Poisson boundary can be related to the Martin boundary which is an analytic construction yielding a genuine topological boundary. Both boundaries are related to harmonic functions on the space via generalisations of the Poisson formula.

A binomial process is a special point process in probability theory.

A mixed binomial process is a special point process in probability theory. Mixed binomial processes arise naturally from restricting (mixed) Poisson processes to bounded intervals.

In the theory of stochastic processes, a ν-transform is an operation that transforms a measure or a point process into a different point process. Intuitively the ν-transform randomly relocates the points of the point process, with the type of relocation being dependent on the position of each point.

The mapping theorem is a theorem in the theory of point processes, a sub-discipline of probability theory. It describes how a Poisson point process is altered under measurable transformations. This makes it possible to construct more complex Poisson point processes out of homogeneous Poisson point processes and can, for example, be used to simulate these more complex processes in a manner similar to inverse transform sampling.
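As a small illustration of this simulation idea, the sketch below maps a unit-rate homogeneous Poisson process through the inverse of a cumulative intensity function. It assumes, purely for the example, the intensity $\lambda(t) = 2t$ on $[0, t_{\max}]$, whose cumulative intensity is $\Lambda(t) = t^2$ with inverse $\Lambda^{-1}(s) = \sqrt{s}$. The function names are hypothetical.

```python
import math
import random

def homogeneous_poisson(rng, total):
    """Points of a unit-rate Poisson process on [0, total]."""
    points = []
    t = rng.expovariate(1.0)
    while t <= total:
        points.append(t)
        t += rng.expovariate(1.0)
    return points

def mapped_poisson(rng, t_max):
    """Poisson process on [0, t_max] with assumed intensity 2t.

    Unit-rate points on [0, t_max**2] are pushed through the
    inverse cumulative intensity sqrt(s).
    """
    unit_points = homogeneous_poisson(rng, t_max ** 2)
    return [math.sqrt(s) for s in unit_points]

rng = random.Random(1)
points = mapped_poisson(rng, t_max=3.0)
```

Because the square root is increasing, the mapped points stay ordered, and they cluster toward the right end of the interval, where the assumed intensity is largest.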