Slashdot is a social news website that originally billed itself as "News for Nerds. Stuff that Matters". It features news stories on science, technology, and politics that are submitted and evaluated by site users and editors. Each story has a comments section where users can post online comments. The website was founded in 1997 by Hope College students Rob Malda ("CmdrTaco") and his classmate Jeff Bates ("Hemos"). In 2012, it was sold to DHI Group, Inc. In January 2016, BIZX acquired both slashdot.org and SourceForge, and in December 2019, BIZX rebranded as Slashdot Media.

A website is a collection of web pages and related content that is identified by a common domain name and published on at least one web server. Examples of notable websites are Google, Facebook, Amazon, and Wikipedia.

Meta-moderation is a second level of comment moderation in which a user is invited to rate a moderator's decision. The user is shown a post that was moderated up or down and marks whether the moderator acted fairly. These judgements are used to improve the quality of moderation.
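One way to picture how meta-moderation judgements could feed back into moderation quality is to tally, per moderator, the share of reviewed decisions that meta-moderators marked as fair. The sketch below is a minimal illustration under assumptions: the `(moderator_id, is_fair)` input format and the `moderator_fairness` function are hypothetical, and Slashdot's actual weighting is not described here.

```python
from collections import defaultdict


def moderator_fairness(verdicts):
    """Return each moderator's share of meta-moderation verdicts marked fair.

    verdicts: iterable of (moderator_id, is_fair) pairs, one per
    meta-moderator judgement of a single moderation decision
    (a hypothetical input format).
    """
    fair = defaultdict(int)
    total = defaultdict(int)
    for moderator, is_fair in verdicts:
        total[moderator] += 1
        if is_fair:
            fair[moderator] += 1
    # Ratio of "fair" judgements to all judgements for each moderator.
    return {m: fair[m] / total[m] for m in total}


verdicts = [("alice", True), ("alice", True), ("alice", False), ("bob", False)]
scores = moderator_fairness(verdicts)
print(scores)
```

A site could then, for example, give moderators with a low fairness ratio fewer future moderation opportunities; that policy is likewise an assumption, not a documented rule.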

Social software, also known as social apps, includes communication and interactive tools that are often based on the Internet. Communication tools typically handle the capture, storage, and presentation of communication, usually written but increasingly including audio and video as well. Interactive tools handle mediated interactions between a pair or group of users; they focus on establishing and maintaining connections among users and on facilitating the mechanics of conversation. Social software generally refers to software that makes collaborative behaviour, the organisation and moulding of communities, self-expression, social interaction, and feedback possible for individuals. Another element of the existing definition is that social software allows for the structured mediation of opinion between people, in either a centralized or a self-regulating manner. The area of greatest improvement is Web 2.0, whose applications can promote cooperation between people and the creation of online communities more than ever before. The opportunities offered by social software include instant connection and the opportunity to learn. An additional defining feature is that, apart from enabling interaction and collaboration, social software aggregates the collective behaviour of its users, so that not only can crowds learn from an individual, but individuals can also learn from the crowd. Hence, the interactions enabled by social software can be one-on-one, one-to-many, or many-to-many.

An Internet forum, or message board, is an online discussion site where people can hold conversations in the form of posted messages. Forums differ from chat rooms in that messages are often longer than one line of text and are at least temporarily archived. Also, depending on a user's access level or the forum's configuration, a posted message might need to be approved by a moderator before it becomes publicly visible.
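The approval step described above amounts to a pre-moderation queue. The following is a minimal sketch, assuming a single numeric access level per user and a hypothetical `trusted_level` cutoff; real forum software exposes many more permission settings.

```python
from dataclasses import dataclass, field


@dataclass
class Forum:
    # Hypothetical rule: posts from users below this access level wait for approval.
    trusted_level: int = 1
    visible: list = field(default_factory=list)
    pending: list = field(default_factory=list)

    def submit(self, author_level: int, text: str) -> bool:
        """Publish immediately if the author is trusted, otherwise queue it.

        Returns True if the post became publicly visible right away.
        """
        if author_level >= self.trusted_level:
            self.visible.append(text)
            return True
        self.pending.append(text)
        return False

    def approve(self, index: int) -> None:
        """A moderator approves a queued post, moving it to the public list."""
        self.visible.append(self.pending.pop(index))


forum = Forum()
forum.submit(author_level=2, text="Hello from a regular")
forum.submit(author_level=0, text="First post from a newcomer")
forum.approve(0)
print(forum.visible, forum.pending)
```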

MetaFilter, known as MeFi to its members, is a general-interest community weblog, founded in 1999 and based in the United States, featuring links to content that users have discovered on the web. Since 2003, it has included the popular question-and-answer subsite Ask MetaFilter. The site had eight paid staff members as of December 2021, including the owner. MetaFilter had about 12,000 active members as of early 2011.

Plastic.com (2001–2011) was a general-interest internet forum running under the motto 'Recycling the Web in Real Time'.

nofollow is a setting on a web page hyperlink that directs search engines not to use the link for page ranking calculations. It is specified in the page as a type of link relation, for example <a rel="nofollow" ...>. Because search engines often calculate a site's importance according to the number of hyperlinks from other sites, the nofollow setting allows website authors to indicate that the presence of a link is not an endorsement of the target site's importance.
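To illustrate how a crawler might honour this hint, the sketch below uses Python's standard `html.parser` to separate links that could count toward ranking from those marked nofollow. It is a simplified illustration, not how any particular search engine processes links; the `LinkCollector` class and the example URLs are hypothetical.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Split <a href> links into ranking-eligible and nofollow lists."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)  # attribute names arrive lowercased
        href = attrs.get("href")
        if href is None:
            return
        # rel can hold several space-separated tokens, e.g. rel="nofollow noopener";
        # an attribute with no value is reported as None, hence the `or ""`.
        rel_tokens = (attrs.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollow.append(href)
        else:
            self.followed.append(href)


page = ('<p><a href="https://example.com/a">A</a> '
        '<a rel="nofollow" href="https://example.com/b">B</a></p>')
collector = LinkCollector()
collector.feed(page)
collector.close()
print(collector.followed, collector.nofollow)
```

Only the `followed` list would then be passed to a link-counting step; how heavily each link is weighted is outside the scope of this sketch.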

Reddit is an American social news aggregation, content rating, and discussion website. Registered users submit content to the site such as links, text posts, images, and videos, which are then voted up or down by other members. Posts are organized by subject into user-created boards called "communities" or "subreddits". Submissions with more upvotes appear towards the top of their subreddit and, if they receive enough upvotes, ultimately on the site's front page. Reddit administrators moderate the communities. Moderation is also conducted by community-specific moderators, who are not Reddit employees.
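The ordering described above can be approximated by sorting on net votes. The sketch below is a deliberate simplification: it ranks by upvotes minus downvotes only, whereas Reddit's production ranking also accounts for factors such as post age. The `Submission` type, `rank_subreddit`, `front_page`, and the fixed `threshold` are hypothetical names and assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    title: str
    subreddit: str
    upvotes: int
    downvotes: int

    @property
    def score(self) -> int:
        # Net score: a simplification that ignores post age.
        return self.upvotes - self.downvotes


def rank_subreddit(posts, subreddit):
    """Posts from one subreddit, highest net score first."""
    return sorted((p for p in posts if p.subreddit == subreddit),
                  key=lambda p: p.score, reverse=True)


def front_page(posts, threshold):
    """Posts whose net score reaches a hypothetical front-page threshold."""
    return sorted((p for p in posts if p.score >= threshold),
                  key=lambda p: p.score, reverse=True)


posts = [
    Submission("Cat GIF", "aww", 120, 10),
    Submission("New telescope images", "space", 900, 50),
    Submission("Dog photo", "aww", 300, 20),
]
print([p.title for p in rank_subreddit(posts, "aww")])
print([p.title for p in front_page(posts, threshold=250)])
```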

User-generated content (UGC), alternatively known as user-created content (UCC), is any form of content, such as images, videos, text, and audio, that has been posted by users on online platforms such as social media, discussion forums, and wikis. In marketing, UGC is often described as content that consumers create to disseminate information about online products or the firms that market them.

A social news website is a website that features user-posted stories. Such stories are ranked by popularity, as voted on by other users of the site or by website administrators. Users typically comment on the news posts, and these comments may also be ranked by popularity. Since their emergence with the birth of Web 2.0, social news sites have been used to link many types of information, including news, humor, support, and discussion. All such websites allow users to submit content, but they differ in how that content is moderated. On the Slashdot and Fark websites, administrators decide which articles are selected for the front page. On Reddit and Digg, the articles that receive the most votes from the user community reach the front page. Many social news websites also feature an online comment system, where users discuss the issues raised in an article. Some of these sites also apply their voting system to comments, so that the most popular comments are displayed first. Some social news websites also include a social networking service, in that users can set up a user profile and follow other users' activity on the website.
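The two front-page policies contrasted above can be sketched side by side. This is a minimal illustration under assumptions: the `Story` type, the set of editor-picked IDs, and the fixed number of front-page `slots` are hypothetical, and none of the named sites necessarily works this simply.

```python
from dataclasses import dataclass


@dataclass
class Story:
    id: str
    votes: int


def editor_curated(stories, picked_ids):
    """Slashdot/Fark style: administrators choose which stories appear."""
    return [s for s in stories if s.id in picked_ids]


def vote_driven(stories, slots):
    """Reddit/Digg style: the most-voted stories fill the front page."""
    return sorted(stories, key=lambda s: s.votes, reverse=True)[:slots]


stories = [Story("a", 5), Story("b", 42), Story("c", 17)]
print([s.id for s in editor_curated(stories, {"a", "c"})])
print([s.id for s in vote_driven(stories, slots=2)])
```

The design difference is where the decision lives: in the first function it is an external input (the editors' picks), while in the second it is derived entirely from user votes.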

On the Internet, a block or ban is a technical measure intended to restrict access to information or resources. Blocking and its inverse, unblocking, may be implemented in software by the owners of computers. Some countries, notably China and Singapore, block access to certain news information. In the United States, the Children's Internet Protection Act requires schools receiving federally funded discounts for Internet access to install filtering software that blocks obscene content, pornography, and, where applicable, content "harmful to minors".
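Blocking software can work in many ways (DNS filtering, IP blocking, keyword or content analysis). As one simple illustration, the sketch below checks a URL against a domain blocklist, treating subdomains of a blocked domain as blocked too. The `BLOCKED_DOMAINS` set and the `is_blocked` function are hypothetical; this is not how any specific national filter or CIPA-compliant product is implemented.

```python
from urllib.parse import urlsplit

# Hypothetical blocklist maintained by the computer's owner.
BLOCKED_DOMAINS = {"blocked.example", "ads.example.net"}


def is_blocked(url: str, blocked=BLOCKED_DOMAINS) -> bool:
    """True if the URL's host is a blocked domain or a subdomain of one."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    labels = host.split(".")
    # Check the host and each parent domain: a.b.c -> "a.b.c", "b.c", "c".
    return any(".".join(labels[i:]) in blocked for i in range(len(labels)))


print(is_blocked("https://www.blocked.example/page"))
print(is_blocked("https://notblocked.example/"))
```

Matching whole domain labels, rather than checking whether the host merely ends with a blocked string, avoids accidentally blocking unrelated hosts such as `notblocked.example`.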

Everything2 (E2) is a collaborative Web-based community consisting of a database of interlinked user-submitted written material. E2 is moderated for quality but has no formal policy on subject matter. Writing on E2 covers a wide range of topics and genres, including encyclopedic articles, diary entries, poetry, humor, and fiction.

AbsolutePunk was a website, online community, and alternative music news source founded by Jason Tate. The website mainly focused on artists who were relatively unknown to mainstream audiences, but it was also known for featuring artists who later achieved crossover success, including Blink-182, Fall Out Boy, My Chemical Romance, New Found Glory, Brand New, Taking Back Sunday, The Gaslight Anthem, Anberlin, Thrice, All Time Low, Jack's Mannequin, Yellowcard, Paramore, Relient K, and A Day to Remember. The primary musical genres of focus were emo and pop punk, but other genres were also covered.

Online participation describes the interaction between users and online communities on the web. Online communities often rely on members to provide content to the website or to contribute in some other way. Examples include wikis, blogs, online multiplayer games, and other types of social platforms. Online participation is currently a heavily researched field, and it provides insight into areas such as web design, online marketing, crowdsourcing, and many branches of psychology. Subcategories of online participation include commitment to online communities, coordination and interaction, and member recruitment.

Quora is a social question-and-answer website based in Mountain View, California. It was founded on June 25, 2009, and made available to the public on June 21, 2010. Users can collaborate by editing questions and commenting on answers that have been submitted by other users. As of 2020, the website was visited by 300 million users a month.

Reblogging is the mechanism in blogging that allows users to repost the content of another user's post with an indication that the post originally came from that other user.
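Because each reblog keeps a pointer to the post it reposts, attribution can be traced back through a chain of reblogs. The sketch below shows one possible data model under assumptions: the `Post` class, its `reblog_of` field, and the `original_author` method are hypothetical and do not correspond to any particular blogging platform's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Post:
    author: str
    content: str
    reblog_of: Optional["Post"] = None  # the post this one reposts, if any

    def reblog(self, user: str) -> "Post":
        """Repost this content under another user, keeping a link to the source."""
        return Post(author=user, content=self.content, reblog_of=self)

    def original_author(self) -> str:
        """Follow the reblog chain back to the user who first posted it."""
        post = self
        while post.reblog_of is not None:
            post = post.reblog_of
        return post.author


original = Post("ana", "A photo of a cat")
reposted = original.reblog("ben").reblog("cy")
print(reposted.author, reposted.reblog_of.author, reposted.original_author())
```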

A like button, like option, or recommend button is a feature in communication software such as social networking services, Internet forums, news websites, and blogs that lets users express that they like, enjoy, or support certain content. Internet services that feature like buttons usually display the number of users who liked each piece of content, and may show a full or partial list of them. This is a quantitative alternative to other ways of reacting to content, such as writing a reply. Some websites also include a dislike button, so that users can vote in favor, against, or neutrally. Other websites use more complex content voting systems, such as five-star ratings or reaction buttons that express a wider range of emotions about the content.
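The counts shown next to like and dislike buttons, and the averages behind star ratings, reduce to simple aggregations. The sketch below assumes a hypothetical representation in which each user holds at most one reaction per item (+1 like, -1 dislike, 0 neutral) and star ratings are integers from 1 to 5; the function names are illustrative only.

```python
from collections import Counter


def tally_reactions(reactions):
    """Summarize likes and dislikes for one piece of content.

    reactions: mapping of user -> +1 (like), -1 (dislike), or 0 (neutral).
    Keying by user means each user counts at most once.
    """
    counts = Counter(reactions.values())
    return {
        "likes": counts[1],
        "dislikes": counts[-1],
        # The (possibly partial) list of users shown next to the like count.
        "liked_by": sorted(u for u, r in reactions.items() if r == 1),
    }


def average_stars(ratings):
    """Mean of 1-5 star ratings, or None if nobody has rated yet."""
    return sum(ratings) / len(ratings) if ratings else None


summary = tally_reactions({"ana": 1, "ben": -1, "cy": 1, "dee": 0})
print(summary, average_stars([5, 4, 3]))
```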

Minds is a blockchain-based social network. Users can earn money or cryptocurrency for using Minds, and tokens can be used to boost their posts or crowdfund other users. Minds has been described as more privacy-focused than mainstream social media networks. Writers in The New York Times, Engadget, and Vice have noted the volume of far-right users and content on the platform. Minds describes itself as focused on free speech, and minimally moderates the content on its platform. Its founders have said that they do not remove extremist content from the site out of a desire to deradicalize those who post it through civil discourse.

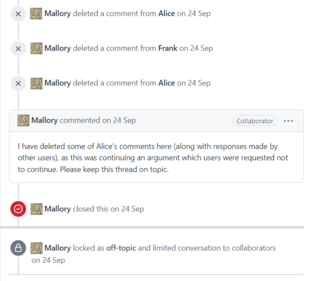

The comments section is a feature of most online blogs, news websites, and other websites in which publishers invite the audience to comment on the published content. It continues the older practice of publishing letters to the editor, but unlike those letters, comments sections also allow readers to discuss the content with one another.