Related Research Articles

Meta elements are tags used in HTML and XHTML documents to provide structured metadata about a Web page. They are part of a web page's head section. Multiple Meta elements with different attributes can be used on the same page. Meta elements can be used to specify page description, keywords and any other metadata not provided through the other head elements and attributes.
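As a rough illustration of how software might read this metadata, the sketch below collects the `name`/`content` pairs from the meta elements of a page using Python's standard `html.parser` module. The class name `MetaCollector`, the helper `extract_meta`, and the sample page are hypothetical and introduced only for this example.

```python
from html.parser import HTMLParser


class MetaCollector(HTMLParser):
    """Collect name/content pairs from <meta> elements (illustrative helper)."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names before calling this.
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = attributes.get("name")
        content = attributes.get("content")
        # Skip meta elements that do not carry a name/content pair
        # (for example <meta charset="utf-8">).
        if name is not None and content is not None:
            self.meta[name.lower()] = content


def extract_meta(html_text):
    """Return a dict mapping meta names to their content values."""
    collector = MetaCollector()
    collector.feed(html_text)
    return collector.meta


# Hypothetical sample page, not taken from any real site.
SAMPLE_PAGE = """<html><head>
<title>Example</title>
<meta charset="utf-8">
<meta name="description" content="A sample page">
<meta name="keywords" content="sample, metadata">
</head><body></body></html>"""

meta = extract_meta(SAMPLE_PAGE)
print(meta)
```

If a page repeats the same meta name, this sketch keeps only the last value; a real tool might prefer to keep a list per name.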

PHP-Nuke is a web-based automated news publishing and content management system based on PHP and MySQL originally written by Francisco Burzi. The system is controlled using a web-based user interface. PHP-Nuke was originally a fork of the Thatware news portal system by David Norman.

A wiki is a form of hypertext publication on the internet which is collaboratively edited and managed by its audience directly through a web browser. A typical wiki contains multiple pages that can either be edited by the public or limited to use within an organization for maintaining its internal knowledge base.

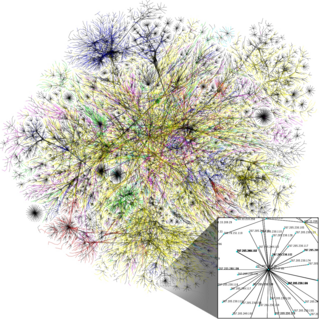

A Web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an Internet bot that systematically browses the World Wide Web and that is typically operated by search engines for the purpose of Web indexing.

Spamdexing is the deliberate manipulation of search engine indexes. It involves a number of methods, such as link building and repeating related and/or unrelated phrases, to manipulate the relevance or prominence of resources indexed in a manner inconsistent with the purpose of the indexing system.

Search engine optimization (SEO) is the process of improving the quality and quantity of traffic to a website or a web page from search engines. SEO targets unpaid search traffic rather than direct traffic, referral traffic, social media traffic, or paid traffic.

In the context of the World Wide Web, deep linking is the use of a hyperlink that links to a specific, generally searchable or indexed, piece of web content on a website, rather than the website's home page. The URL contains all the information needed to point to a particular item. Deep linking is different from mobile deep linking, which refers to directly linking to in-app content using a non-HTTP URI.

Social software, also known as social apps or social platforms, includes communication and interactive tools that are often based on the Internet. Communication tools typically handle capturing, storing and presenting communication, usually written but increasingly including audio and video as well. Interactive tools handle mediated interactions between a pair or group of users. They focus on establishing and maintaining a connection among users, facilitating the mechanics of conversation and talk. Social software generally refers to software that makes collaborative behaviour, the organisation and moulding of communities, self-expression, social interaction and feedback possible for individuals. Another element of the existing definition of social software is that it allows for the structured mediation of opinion between people, in a centralized or self-regulating manner. A notable development is that Web 2.0 applications can promote co-operation between people and the creation of online communities more than ever before. Social software offers instant connections and opportunities to learn. An additional defining feature is that, apart from interaction and collaboration, it aggregates the collective behaviour of its users, allowing not only crowds to learn from an individual but also individuals to learn from the crowd. Hence, the interactions enabled by social software can be one-to-one, one-to-many, or many-to-many.

The deep web, invisible web, or hidden web are parts of the World Wide Web whose contents are not indexed by standard web search-engine programs. This is in contrast to the "surface web", which is accessible to anyone using the Internet. Computer scientist Michael K. Bergman is credited with inventing the term in 2001 as a search-indexing term.

Microformats (μF) are predefined HTML markup created to serve as descriptive and consistent metadata about elements, designating them as representing a certain type of data. They allow software to process the information reliably by having set classes refer to a specific type of data rather than being arbitrary.

Sitemaps is a protocol in XML format meant for a webmaster to inform search engines about URLs on a website that are available for web crawling. It allows webmasters to include additional information about each URL: when it was last updated, how often it changes, and how important it is in relation to other URLs of the site. This allows search engines to crawl the site more efficiently and to find URLs that may be isolated from the rest of the site's content. The Sitemaps protocol is a URL inclusion protocol and complements robots.txt, a URL exclusion protocol.
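A minimal sketch of what such a file might look like when generated programmatically is shown below, using Python's standard `xml.etree.ElementTree`. The element names (`urlset`, `url`, `loc`, `lastmod`, `changefreq`, `priority`) and the namespace come from the Sitemaps protocol; the function `build_sitemap`, the entry dictionaries, and the example.com URLs are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemaps protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a sitemap XML string from a list of dicts (illustrative helper).

    Each entry needs a "loc" key; "lastmod", "changefreq" and "priority"
    are optional, mirroring the optional per-URL information in the protocol.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        for field in ("lastmod", "changefreq", "priority"):
            if field in entry:
                ET.SubElement(url, field).text = str(entry[field])
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical URLs used only for this example.
sitemap_xml = build_sitemap([
    {
        "loc": "https://example.com/",
        "lastmod": "2024-01-15",
        "changefreq": "daily",
        "priority": 1.0,
    },
    {"loc": "https://example.com/archive/2004/"},
])
print(sitemap_xml)
```

The second entry carries only a `loc`, which shows that the update date, change frequency, and priority are optional hints rather than required fields.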

This is a list of blogging terms. Blogging, like any hobby, has developed something of a specialized vocabulary. The following is an attempt to explain a few of the more common phrases and words, including etymologies when not obvious.

nofollow is a setting on a web page hyperlink that directs search engines not to use the link for page ranking calculations. It is specified in the page as a type of link relation; that is: <a rel="nofollow" ...>. Because search engines often calculate a site's importance according to the number of hyperlinks from other sites, the nofollow setting allows website authors to indicate that the presence of a link is not an endorsement of the target site's importance.
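To illustrate how a crawler or ranking tool could honour this setting, the sketch below separates the links on a page into those marked `nofollow` and the rest. It relies on Python's standard `html.parser`; the class name `LinkClassifier` and the sample markup are hypothetical, and real search engines may treat the hint differently.

```python
from html.parser import HTMLParser


class LinkClassifier(HTMLParser):
    """Sort <a href> targets by whether rel contains "nofollow" (illustrative)."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:
            return
        # rel holds a space-separated list of link types, so split it
        # instead of comparing the whole attribute value.
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollow.append(href)
        else:
            self.followed.append(href)


# Hypothetical markup used only for this example.
SAMPLE_LINKS = (
    '<p><a href="https://example.com/endorsed">endorsed</a> '
    '<a rel="external nofollow" href="https://example.com/unvetted">unvetted</a></p>'
)

classifier = LinkClassifier()
classifier.feed(SAMPLE_LINKS)
print("followed:", classifier.followed)
print("nofollow:", classifier.nofollow)
```

Splitting the `rel` value into tokens matters because authors often combine `nofollow` with other link types in the same attribute.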

A search engine is a software system that provides hyperlinks to web pages and other relevant information on the Web in response to a user's query. The user inputs a query within a web browser or a mobile app, and the search results are often a list of hyperlinks, accompanied by textual summaries and images. Users also have the option of limiting the search to a specific type of results, such as images, videos, or news.

Petar Kajevski is a Macedonian IT and management expert. He is best known as the creator of the Macedonian search engine "Najdi!", and has also achieved prominence as an expert on software outsourcing.

Automated Content Access Protocol ("ACAP") was proposed in 2006 as a method of providing machine-readable permissions information for content, in the hope that it would allow automated processes to comply with publishers' policies without the need for human interpretation of legal terms. ACAP was developed by organisations that claimed to represent sections of the publishing industry. It was intended to provide support for more sophisticated online publishing business models, but was criticised for being biased towards the fears of publishers who see search and aggregation as a threat rather than as a source of traffic and new readers.

Internet censorship is the legal control or suppression of what can be accessed, published, or viewed on the Internet. Censorship is most often applied to specific internet domains but exceptionally may extend to all Internet resources located outside the jurisdiction of the censoring state. Internet censorship may also put restrictions on what information can be made internet accessible. Organizations providing internet access – such as schools and libraries – may choose to preclude access to material that they consider undesirable, offensive, age-inappropriate or even illegal, and regard this as ethical behavior rather than censorship. Individuals and organizations may engage in self-censorship of material they publish, for moral, religious, or business reasons, to conform to societal norms, political views, due to intimidation, or out of fear of legal or other consequences.

DMOZ was a multilingual open-content directory of World Wide Web links. The site and the community that maintained it were also known as the Open Directory Project (ODP). It was owned by AOL but constructed and maintained by a community of volunteer editors.

The Wayback Machine is a digital archive of the World Wide Web founded by the Internet Archive, an American nonprofit organization based in San Francisco, California. Created in 1996 and launched to the public in 2001, it allows users to go "back in time" to see how websites looked in the past. Its founders, Brewster Kahle and Bruce Gilliat, developed the Wayback Machine to provide "universal access to all knowledge" by preserving archived copies of defunct web pages.

Skweezer was a mobile browser. It reformatted and compressed web content in order to reduce a web page's file size and make the downloaded content easier to view on a small screen. It was developed by Skweezer, Inc. and initially released in 2003. Skweezer's technology was used to mobilize Web content services by search engines, Web portals, and wireless carriers such as IAC/InterActiveCorp, Bloglines, and Orange SA. Skweezer was available in English, French, German, Italian, Portuguese, Spanish, and Japanese, and served customers in over 175 countries worldwide.

References

- Не барај, Најди! (Do not search, find!). Archived 2007-09-27 at the Wayback Machine. Vreme daily. 30 November 2004. URL accessed 6 June 2006.

- Interview with Petar Kajevski, Creator of Macedonian Search Engine Najdi!. Metamorphosis. 27 June 2005. URL accessed 6 June 2006.

- Najdi! Macedonian Blogs at Najdi!. Metamorphosis. 27 June 2005. URL accessed 6 June 2006.

- Najdi! Book Internet Service Promoted. Metamorphosis. 3 March 2006. URL accessed 6 June 2006.

- World Summit Award Winners Selected!. Metamorphosis. 18 September 2005. URL accessed 6 June 2006.