Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech synthesizer, and can be implemented in software or hardware products. A text-to-speech (TTS) system converts normal language text into speech; other systems render symbolic linguistic representations like phonetic transcriptions into speech. The reverse process is speech recognition.

A screen reader is a form of assistive technology (AT) that renders text and image content as speech or braille output. Screen readers are essential to people who are blind, and are useful to people who are visually impaired, illiterate, or have a learning disability. Screen readers are software applications that attempt to convey what people with normal eyesight see on a display to their users via non-visual means, like text-to-speech, sound icons, or a braille device. They do this by applying a wide variety of techniques that include, for example, interacting with dedicated accessibility APIs, using various operating system features, and employing hooking techniques.

PlainTalk is the collective name for several speech synthesis (MacinTalk) and speech recognition technologies developed by Apple Inc. In 1990, Apple invested heavily in speech recognition technology, hiring many researchers in the field. The result was "PlainTalk", released in 1993 with the AV models of the Macintosh Quadra series. It was made a standard system component in System 7.1.2, and has since been shipped on all PowerPC and some 68k Macintoshes.

Speech Synthesis Markup Language (SSML) is an XML-based markup language for speech synthesis applications. It is a recommendation of the W3C's Voice Browser Working Group. SSML is often embedded in VoiceXML scripts to drive interactive telephony systems, but it may also be used alone, for example to create audio books. For desktop applications, other markup languages are popular, including Apple's embedded speech commands and Microsoft's SAPI text-to-speech (TTS) markup, which is also an XML language. SSML is also used to produce sounds via Azure Cognitive Services' Text to Speech API and when writing third-party skills for Google Assistant or Amazon Alexa.
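
As a rough illustration of what such markup looks like, the following Python sketch builds a small SSML document with the standard library and serializes it. The `speak`, `break`, and `emphasis` elements and the namespace URI come from the W3C recommendation; the helper names `q` and `build_ssml` and the sample text are hypothetical, and individual synthesizers may support only a subset of SSML.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the W3C SSML recommendation.
SSML_NS = "http://www.w3.org/2001/10/synthesis"
# ElementTree serializes this reserved namespace with the "xml" prefix.
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"


def q(tag: str) -> str:
    """Qualify a tag name with the SSML namespace (hypothetical helper)."""
    return f"{{{SSML_NS}}}{tag}"


def build_ssml(sentence: str, emphasized: str, pause_ms: int = 300) -> str:
    """Return an SSML string: a sentence, a pause, then an emphasized word."""
    ET.register_namespace("", SSML_NS)  # emit SSML as the default namespace
    speak = ET.Element(q("speak"), {"version": "1.0", XML_LANG: "en-US"})
    speak.text = sentence + " "
    pause = ET.SubElement(speak, q("break"), {"time": f"{pause_ms}ms"})
    pause.tail = " "
    emphasis = ET.SubElement(speak, q("emphasis"), {"level": "strong"})
    emphasis.text = emphasized
    return ET.tostring(speak, encoding="unicode")


if __name__ == "__main__":
    print(build_ssml("Your order will arrive", "today"))
```

The resulting string would typically be passed to a synthesizer or embedded in a VoiceXML prompt; how it is submitted depends on the product and is not shown here.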

Emacspeak is a free computer application, a speech interface, and an audio desktop. It employs Emacs, Emacs Lisp, and Tcl. Developed principally by T. V. Raman, it was first released in April 1995. It is portable to all POSIX-compatible OSs. It is tightly integrated with Emacs, allowing it to render intelligible and useful content rather than parsing the graphical display; its default voice synthesizer can be replaced with other software synthesizers when a server module is installed. Emacspeak is one of the most popular speech interfaces for Linux and is bundled with most major distributions. In 2014, Raman wrote an article describing how the software's design had been shaped by shifts in computer technology and its general usage over 20 years.

The Speech Application Programming Interface or SAPI is an API developed by Microsoft to allow the use of speech recognition and speech synthesis within Windows applications. To date, a number of versions of the API have been released, which have shipped either as part of a Speech SDK or as part of the Windows OS itself. Applications that use SAPI include Microsoft Office, Microsoft Agent and Microsoft Speech Server.

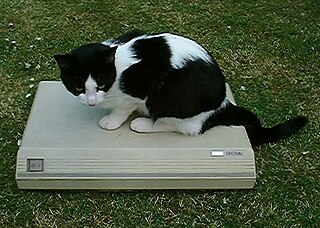

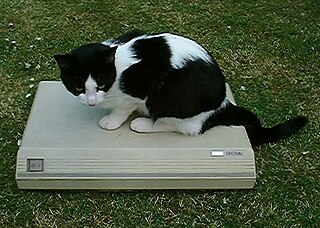

DECtalk was a speech synthesizer and text-to-speech technology developed by Digital Equipment Corporation in 1983, based largely on the work of Dennis Klatt at MIT, whose source-filter algorithm was variously known as KlattTalk or MITalk.

Hoya Corporation is a Japanese company manufacturing optical products such as photomasks, photomask blanks and hard disk drive platters, contact lenses and eyeglass lenses for the health-care market, medical photonics, lasers, photographic filters, medical flexible endoscopy equipment, and software. Hoya Corporation is listed among the Forbes Global 2000 Leading Companies and the Industry Week 1000 companies.

Chinese speech synthesis is the application of speech synthesis to the Chinese language. It poses additional difficulties for three reasons: Chinese characters frequently have different pronunciations in different contexts; the language has a complex prosody that is essential to conveying the meaning of words; and native speakers sometimes disagree about the correct pronunciation of certain phonemes.
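
The context dependence of character readings can be sketched with a toy word lexicon, shown below in Python. This is only an illustrative assumption about one common approach (longest-match lookup of whole words before falling back to a per-character default); the two small dictionaries and the function `to_pinyin` are hypothetical, and real systems use far larger lexicons and statistical disambiguation. Readings are written in numbered pinyin.

```python
# Toy lexicon: a multi-character word supplies the context that fixes a reading.
WORD_READINGS = {
    "银行": ["yin2", "hang2"],    # "bank": 行 is read hang2
    "行走": ["xing2", "zou3"],    # "to walk": 行 is read xing2
    "长城": ["chang2", "cheng2"],  # "Great Wall": 长 is read chang2
    "长大": ["zhang3", "da4"],    # "to grow up": 长 is read zhang3
}

# Fallback reading used when no word in the lexicon covers the character.
CHAR_DEFAULTS = {
    "行": "xing2", "长": "chang2", "银": "yin2", "走": "zou3",
    "城": "cheng2", "大": "da4", "我": "wo3", "去": "qu4",
}

MAX_WORD_LEN = max(len(word) for word in WORD_READINGS)


def to_pinyin(text: str) -> list[str]:
    """Greedy longest-match lookup, falling back to per-character defaults."""
    readings = []
    i = 0
    while i < len(text):
        # Try the longest candidate word first, down to two characters.
        for n in range(min(MAX_WORD_LEN, len(text) - i), 1, -1):
            word = text[i:i + n]
            if word in WORD_READINGS:
                readings.extend(WORD_READINGS[word])
                i += n
                break
        else:
            # No word matched: use the default reading, or keep the character.
            readings.append(CHAR_DEFAULTS.get(text[i], text[i]))
            i += 1
    return readings


if __name__ == "__main__":
    print(to_pinyin("我去银行"))
    print(to_pinyin("行走"))
```

The same character 行 receives different readings in the two inputs because the surrounding word, not the character alone, determines the pronunciation.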

Speech-generating devices (SGDs), also known as voice output communication aids, are electronic augmentative and alternative communication (AAC) systems used to supplement or replace speech or writing for individuals with severe speech impairments, enabling them to communicate verbally. SGDs are important for people who have limited means of interacting verbally, as they allow individuals to become active participants in communication interactions. They are particularly helpful for patients with amyotrophic lateral sclerosis (ALS), and have more recently been used for children with predicted speech deficiencies.

eSpeak is a free and open-source, cross-platform, compact, software speech synthesizer. It uses a formant synthesis method, providing many languages in a relatively small file size. eSpeakNG is a continuation of the original developer's project with more feedback from native speakers.
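
To give a feel for what formant synthesis means in general, the Python sketch below passes an impulse train (a crude stand-in for the glottal source) through a cascade of two-pole resonators, one per formant. It is not eSpeak's implementation. The function names are hypothetical, the formant frequencies are rough textbook values for an adult male /a/, and the bandwidths are illustrative assumptions.

```python
import math


def resonator_coeffs(freq_hz, bandwidth_hz, sample_rate):
    """Coefficients of a two-pole digital resonator modelling one formant."""
    t = 1.0 / sample_rate
    c = -math.exp(-2.0 * math.pi * bandwidth_hz * t)
    b = (2.0 * math.exp(-math.pi * bandwidth_hz * t)
         * math.cos(2.0 * math.pi * freq_hz * t))
    a = 1.0 - b - c  # normalizes the gain to 1 at 0 Hz
    return a, b, c


def resonate(signal, freq_hz, bandwidth_hz, sample_rate):
    """Apply y[n] = a*x[n] + b*y[n-1] + c*y[n-2] to the signal."""
    a, b, c = resonator_coeffs(freq_hz, bandwidth_hz, sample_rate)
    y1 = y2 = 0.0
    out = []
    for x in signal:
        y = a * x + b * y1 + c * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def impulse_train(f0_hz, duration_s, sample_rate):
    """One unit impulse per pitch period: a very crude voicing source."""
    period = round(sample_rate / f0_hz)
    n = int(duration_s * sample_rate)
    return [1.0 if i % period == 0 else 0.0 for i in range(n)]


def synthesize_vowel(formants, f0_hz=120.0, duration_s=0.25, sample_rate=16000):
    """Filter the source through each formant resonator in cascade."""
    signal = impulse_train(f0_hz, duration_s, sample_rate)
    for freq_hz, bandwidth_hz in formants:
        signal = resonate(signal, freq_hz, bandwidth_hz, sample_rate)
    peak = max(abs(s) for s in signal) or 1.0
    return [s / peak for s in signal]  # normalize to the range [-1, 1]


# (frequency, bandwidth) pairs in Hz; illustrative values for /a/.
VOWEL_A = [(730.0, 90.0), (1090.0, 110.0), (2440.0, 170.0)]

if __name__ == "__main__":
    samples = synthesize_vowel(VOWEL_A)
    print(len(samples), "samples generated")
```

Because a vowel is described by a handful of numbers rather than by recorded audio, a synthesizer of this family can cover many languages with little data, which is consistent with the small file size noted above.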

Gnuspeech is an extensible text-to-speech computer software package that produces artificial speech output based on real-time articulatory speech synthesis by rules. That is, it converts text strings into phonetic descriptions, aided by a pronouncing dictionary, letter-to-sound rules, and rhythm and intonation models; transforms the phonetic descriptions into parameters for a low-level articulatory speech synthesizer; uses these to drive an articulatory model of the human vocal tract, producing an output suitable for the normal sound output devices used by various computer operating systems; and does all of this at or faster than the rate at which adult speech is spoken.
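
The first stage of such a pipeline, a dictionary lookup with a letter-to-sound fallback, can be sketched as follows in Python. This is a toy under stated assumptions and not Gnuspeech's code: the two-entry dictionary, the one-letter-to-one-phoneme rules, the ARPAbet-style symbols, and the function `text_to_phonemes` are all hypothetical placeholders, and the rhythm, intonation, and articulatory stages are omitted.

```python
import re

# Tiny pronouncing dictionary with ARPAbet-style symbols (illustrative only).
PRONOUNCING_DICT = {
    "hello": ["HH", "AH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
}

# Deliberately crude fallback: exactly one phoneme per letter.
LETTER_TO_SOUND = dict(zip(
    "abcdefghijklmnopqrstuvwxyz",
    "AE B K D EH F G HH IH JH K L M N AA P K R S T AH V W K Y Z".split(),
))


def text_to_phonemes(text: str) -> list[list[str]]:
    """Return one phoneme list per word, preferring the dictionary."""
    result = []
    for word in re.findall(r"[a-z]+", text.lower()):
        if word in PRONOUNCING_DICT:
            result.append(list(PRONOUNCING_DICT[word]))
        else:
            # Unknown word: fall back to the letter-to-sound rules.
            result.append([LETTER_TO_SOUND[letter] for letter in word])
    return result


if __name__ == "__main__":
    print(text_to_phonemes("Hello, zap!"))
```

In a full system the phoneme lists would then be annotated with timing and pitch and converted into control parameters for the vocal tract model.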

The Echo II is a plug-in speech synthesizer expansion card for the Apple II and Apple IIe personal computers that allows applications to use speech synthesis. The Echo II provides an on-board speaker/headphone jack with a physical volume control.

The Microsoft text-to-speech voices are speech synthesizers provided for use with applications that use the Microsoft Speech API (SAPI) or the Microsoft Speech Server Platform. There are client, server, and mobile versions of Microsoft text-to-speech voices. Client voices are shipped with Windows operating systems; server voices are available for download for use with server applications such as Speech Server and Lync, on both Windows client and server platforms; and mobile voices are often shipped with more recent versions.

Cepstral is a provider of speech synthesis technology and services. It was founded in June 2000 by scientists from Carnegie Mellon University including the computer scientists Kevin Lenzo and Alan W. Black. It is a privately held corporation with headquarters in Pittsburgh, Pennsylvania.

Loquendo was an Italian multinational computer software technology corporation, headquartered in Torino, Italy, that provided speech recognition, speech synthesis, speaker verification and identification applications. Loquendo, which was founded in 2001 under the Telecom Italia Lab, also had offices in the United Kingdom, Spain, Germany, France, and the United States.

CereProc is a speech synthesis company based in Edinburgh, Scotland, founded in 2005. The company specialises in creating natural and expressive-sounding text-to-speech voices, synthesis voices with regional accents, and voice cloning.

Sensory, Inc. is an American company which develops software AI technologies for speech, sound and vision. It is based in Santa Clara, California.

Lessac Technologies, Inc. (LTI) is an American firm which develops voice synthesis software, licenses technology and sells synthesized novels as MP3 files. The firm currently has seven patents granted and three more pending for its automated methods of converting digital text into human-sounding speech, more accurately recognizing human speech and outputting the text representing the words and phrases of said speech, along with recognizing the speaker's emotional state.

WaveNet is a deep neural network for generating raw audio. It was created by researchers at London-based AI firm DeepMind. The technique, outlined in a paper in September 2016, is able to generate relatively realistic-sounding human-like voices by directly modelling waveforms using a neural network method trained with recordings of real speech. Tests with US English and Mandarin reportedly showed that the system outperforms Google's best existing text-to-speech (TTS) systems, although as of 2016 its text-to-speech synthesis still was less convincing than actual human speech. WaveNet's ability to generate raw waveforms means that it can model any kind of audio, including music.
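
The published WaveNet architecture is built from stacks of dilated causal convolutions, in which each output sample depends only on current and past input samples. The Python sketch below illustrates that one idea with fixed weights and no gating, nonlinearity, or training, so it is a toy rather than WaveNet itself; the function names and the weights of 0.5 are assumptions made for illustration.

```python
def causal_dilated_conv(x, w_past, w_now, dilation):
    """Kernel-size-2 causal convolution: y[t] = w_past*x[t-d] + w_now*x[t].

    Samples before the start of the signal are treated as zero.
    """
    return [
        w_past * (x[t - dilation] if t >= dilation else 0.0) + w_now * x[t]
        for t in range(len(x))
    ]


def receptive_field(dilations, kernel_size=2):
    """Number of input samples that can influence one output sample."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


def stack(x, dilations, w_past=0.5, w_now=0.5):
    """Apply one causal layer per dilation, feeding each into the next."""
    for d in dilations:
        x = causal_dilated_conv(x, w_past, w_now, d)
    return x


if __name__ == "__main__":
    dilations = [1, 2, 4, 8]
    impulse = [1.0] + [0.0] * 31
    response = stack(impulse, dilations)
    print("receptive field:", receptive_field(dilations))
    print("non-zero outputs:", sum(1 for v in response if v != 0.0))
```

Doubling the dilation at each layer makes the receptive field grow exponentially with depth, which is what lets a model of this kind cover a useful span of raw audio samples with relatively few layers.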