Principles

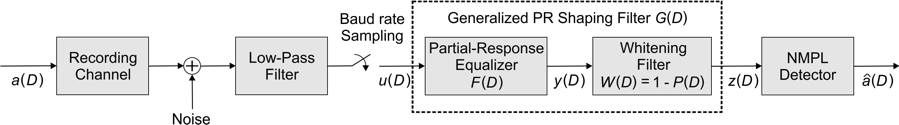

The NPML family of sequence-estimation data detectors arises by embedding a noise prediction/whitening process [2] [3] [4] into the branch metric computation of the Viterbi algorithm. The latter is a data detection technique for communication channels that exhibit intersymbol interference (ISI) with finite memory.

Reliable operation of the process is achieved by using hypothesized decisions associated with the branches of the trellis on which the Viterbi algorithm operates as well as tentative decisions corresponding to the path memory associated with each trellis state. NPML detectors can thus be viewed as reduced-state sequence-estimation detectors offering a range of implementation complexities. The complexity is governed by the number of detector states, which is equal to $2^K$, $0 \le K \le M$, with $M$ denoting the maximum number of controlled ISI terms introduced by the combination of a partial-response shaping equalizer and the noise predictor. By judiciously choosing $K$, practical NPML detectors can be devised that improve performance over PRML and EPRML detectors in terms of error rate and/or linear recording density. [2] [3] [4]

In the absence of noise enhancement or noise correlation, the PRML sequence detector performs maximum-likelihood sequence estimation. As the operating point moves to higher linear recording densities, optimality declines with linear partial-response (PR) equalization, which enhances noise and renders it correlated. A close match between the desired target polynomial and the physical channel can minimize losses. An effective way to achieve near-optimal performance independently of the operating point (in terms of linear recording density) and the noise conditions is via noise prediction. In particular, the power of a stationary noise sequence $n(D)$, where the operator $D$ corresponds to a delay of one bit interval, at the output of a PR equalizer can be minimized by using an infinitely long predictor. A linear predictor with coefficients $p_l$, $l = 1, 2, \ldots$, operating on the noise sequence $n(D)$ produces the estimated noise sequence $\bar{n}(D)$. Then, the prediction-error sequence given by

$$e(D) = n(D) - \bar{n}(D) = n(D)\,(1 - P(D))$$

is white with minimum power. The optimum predictor

$$P(D) = p_1 D + p_2 D^2 + \cdots$$

or the optimum noise-whitening filter

$$W(D) = 1 - P(D),$$

is the one that minimizes the prediction error sequence $e(D)$ in a mean-square sense. [2] [3] [4] [5] [6]

An infinitely long predictor filter would lead to a sequence detector structure that requires an unbounded number of states. Therefore, finite-length predictors that render the noise at the input of the sequence detector approximately white are of interest.
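To illustrate how a finite-length predictor might be obtained, the following minimal sketch solves the mean-square prediction problem for a given noise autocorrelation using the Levinson-Durbin recursion. The function names and the example autocorrelation values are assumptions made for illustration; they are not taken from the cited references, and a practical read channel would typically adapt the coefficients rather than compute them in closed form.

```python
def levinson_durbin(r, order):
    """Solve the normal equations for a linear predictor of the given order.

    r[0..order] is the autocorrelation of the (stationary) noise.
    Returns (p, err): p[l-1] is the coefficient p_l in
    n_hat[k] = sum_l p_l * n[k-l], and err is the prediction-error power.
    """
    p = []
    err = r[0]
    for m in range(1, order + 1):
        # Correlation not yet explained by the order-(m-1) predictor.
        acc = r[m] - sum(p[j] * r[m - 1 - j] for j in range(m - 1))
        k = acc / err  # reflection coefficient
        p = [p[j] - k * p[m - 2 - j] for j in range(m - 1)] + [k]
        err *= 1.0 - k * k
    return p, err


def whitening_filter(p):
    """Coefficients of 1 - P(D), indexed by the power of D."""
    return [1.0] + [-c for c in p]


# Hypothetical autocorrelation of first-order autoregressive noise.
r_example = [1.0, 0.5, 0.25]
p_example, err_example = levinson_durbin(r_example, order=2)
w_example = whitening_filter(p_example)
```

For this particular autocorrelation a single tap already whitens the noise, so the second coefficient comes out as zero and the error power does not decrease further when the order is raised from 1 to 2.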

Generalized PR shaping polynomials of the form

$$G(D) = F(D) \times W(D),$$

where $F(D)$ is a polynomial of order $S$ and the noise-whitening filter $W(D)$ has a finite order of $L$, give rise to NPML systems when combined with sequence detection. [2] [3] [4] [5] [6] In this case, the effective memory of the system is limited to

$$M = L + S,$$

requiring a $2^{L+S}$-state NPML detector if no reduced-state detection is employed.

As an example, if

$$F(D) = 1 - D^2,$$

then this corresponds to the classical PR4 signal shaping. Using a whitening filter $W(D)$, the generalized PR target becomes

$$G(D) = (1 - D^2) \times W(D),$$

and the effective ISI memory of the system is limited to

$$M = L + 2$$

symbols. In this case, the full-state NPML detector performs maximum likelihood sequence estimation (MLSE) using the $2^{L+2}$-state trellis corresponding to $G(D)$.
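The PR4 example can be checked numerically. The sketch below multiplies the standard PR4 polynomial $1 - D^2$ by a whitening filter of the form one minus the predictor polynomial, then reads off the memory and the full-state trellis size. The predictor taps are hypothetical values chosen only so that the arithmetic is easy to follow.

```python
def poly_mul(f, w):
    """Multiply two polynomials in D given as coefficient lists (index = power of D)."""
    g = [0.0] * (len(f) + len(w) - 1)
    for i, fi in enumerate(f):
        for j, wj in enumerate(w):
            g[i + j] += fi * wj
    return g


F_PR4 = [1.0, 0.0, -1.0]        # PR4 shaping polynomial 1 - D^2 (order S = 2)
p = [0.5, 0.25]                 # hypothetical predictor taps p_1, p_2 (L = 2)
W = [1.0] + [-c for c in p]     # whitening filter 1 - P(D)
G = poly_mul(F_PR4, W)          # generalized PR target
M = len(G) - 1                  # effective ISI memory, L + S
num_states = 2 ** M             # full-state trellis size for binary data

print(G, M, num_states)
# prints: [1.0, -0.5, -1.25, 0.5, 0.25] 4 16
```

With two predictor taps the memory grows from 2 to 4 symbols, so the full-state detector needs 16 states instead of the 4 states of a plain PR4 detector.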

The NPML detector is efficiently implemented via the Viterbi algorithm, which recursively computes the estimated data sequence [2] [3] [4] [5] [6]

$$\hat{a}(D) = \arg\min_{a(D)} \left\| z(D) - a(D)\,G(D) \right\|^2,$$

where $a(D)$ denotes the binary sequence of recorded data bits and $z(D)$ the signal sequence at the output of the noise-whitening filter $W(D)$.
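The following is a minimal full-state Viterbi sketch for this kind of detection. It selects the data sequence whose noiseless target output is closest, in squared Euclidean distance, to the whitened samples. Several choices here are assumptions rather than details from the cited references: data symbols are taken as ±1, the symbols before the start of the block are assumed known, each survivor is stored as a full list instead of a fixed-depth path memory, and the names `viterbi_detect` and `channel_output` are hypothetical.

```python
def channel_output(a, g, init=-1):
    """Noiseless samples sum_i g[i] * a[k-i], with a[k] = init for k < 0."""
    M = len(g) - 1
    padded = [init] * M + list(a)
    return [sum(g[i] * padded[k + M - i] for i in range(M + 1))
            for k in range(len(a))]


def viterbi_detect(z, g, symbols=(-1, 1)):
    """Minimize sum_k (z[k] - sum_i g[i] * a[k-i])**2 over symbol sequences a.

    Assumes a[k] = symbols[0] for k < 0 (known initial state).
    """
    M = len(g) - 1
    start = (symbols[0],) * M
    # survivors: state -> (accumulated metric, symbols decided so far)
    survivors = {start: (0.0, [])}
    for zk in z:
        new = {}
        for state, (metric, path) in survivors.items():
            for a in symbols:
                # state holds (a[k-1], ..., a[k-M]); a is the hypothesized a[k]
                ideal = g[0] * a + sum(g[i + 1] * s for i, s in enumerate(state))
                cand = metric + (zk - ideal) ** 2
                nxt = ((a,) + state)[:M]
                # keep only the best path entering each next state
                if nxt not in new or cand < new[nxt][0]:
                    new[nxt] = (cand, path + [a])
        survivors = new
    return min(survivors.values(), key=lambda t: t[0])[1]
```

Calling `viterbi_detect(channel_output(a, g), g)` on a short ±1 sequence `a` returns `a` itself, since the true path then has zero accumulated metric. The number of states visited is at most two to the power of the target memory, which is why long predictors motivate the reduced-state variants.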

Reduced-state sequence-detection schemes [7] [8] [9] have been studied for application in the magnetic-recording channel (see [2] [4] and the references therein). For example, the NPML detectors with generalized PR target polynomials

$$G(D) = F(D) \times W(D)$$

can be viewed as a family of reduced-state detectors with embedded feedback. These detectors exist in a form in which the decision-feedback path can be realized by simple table look-up operations, whereby the contents of these tables can be updated as a function of the operating conditions. [2] Analytical and experimental studies have shown that a judicious tradeoff between performance and state complexity leads to practical schemes with considerable performance gains. Thus, reduced-state approaches are promising for increasing linear density.
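As a worked illustration of the embedded feedback, consider the PR4 case and a predictor with $L$ coefficients $p_l$. The notation below is an assumption introduced for this sketch: $y_k$ is the PR-equalized sample, $a_k$ the symbol hypothesized on the branch, and $\hat{a}_{k-i}(s_j)$ a symbol taken from the state $s_j$ or, beyond the state's span, from its path memory. Under these assumptions the branch metric for a transition from state $s_j$ to state $s_i$ can be written as the squared prediction error:

```latex
\begin{aligned}
y_k &= a_k - a_{k-2} + n_k, \\
\hat{n}_{k-l}(s_j) &= y_{k-l} - \hat{a}_{k-l}(s_j) + \hat{a}_{k-l-2}(s_j),
  \qquad l = 1, \dots, L, \\
\lambda_k(s_j, s_i) &= \Bigl[\, y_k - \bigl(a_k - \hat{a}_{k-2}(s_j)\bigr)
  - \sum_{l=1}^{L} p_l\, \hat{n}_{k-l}(s_j) \Bigr]^2 .
\end{aligned}
```

When the state spans all $L + 2$ past symbols, every $\hat{a}$ is determined by the state and the detector is full-state. With fewer states, the older symbols come from the survivor path, which is the decision-feedback mechanism described above.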

Depending on the surface roughness and particle size, particulate media might exhibit nonstationary data-dependent transition or medium noise rather than colored stationary medium noise. Improvements in the quality of the readback head as well as the incorporation of low-noise preamplifiers may render the data-dependent medium noise a significant component of the total noise affecting performance. Because medium noise is correlated and data-dependent, information about noise and data patterns in past samples can provide information about noise in other samples. Thus, the concept of noise prediction for stationary Gaussian noise sources developed in [2] [6] can be naturally extended to the case where noise characteristics depend highly on local data patterns. [1] [10] [11] [12]

By modeling the data-dependent noise as a finite-order Markov process, the optimum MLSE for channels with ISI has been derived. [11] In particular, when the data-dependent noise is conditionally Gauss–Markov, the branch metrics can be computed from the conditional second-order statistics of the noise process. In other words, the optimum MLSE can be implemented efficiently by using the Viterbi algorithm, in which the branch-metric computation involves data-dependent noise prediction. [11] Because the predictor coefficients and prediction error both depend on the local data pattern, the resulting structure has been called a data-dependent NPML detector. [1] [12] [10] Reduced-state sequence detection schemes can be applied to data-dependent NPML, reducing implementation complexity.
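A data-dependent branch metric of this kind might be sketched as follows. The form used is the negative log-likelihood of a conditionally Gaussian prediction error with constant terms dropped, namely the log of the error variance plus the squared error divided by that variance. The table contents, the two-symbol pattern length, and the function name are hypothetical values chosen for illustration and are not taken from the cited references.

```python
import math


def dd_branch_metric(noise_now, noise_past, pattern, table):
    """Branch metric for one trellis branch under conditionally Gaussian noise.

    table maps a local data pattern to (taps, var): the predictor taps and the
    prediction-error variance for that pattern. noise_past[l-1] is the noise
    sample l bit intervals in the past, as implied by the hypothesized and
    tentative decisions for this branch.
    """
    taps, var = table[pattern]
    predicted = sum(p * n for p, n in zip(taps, noise_past))
    err = noise_now - predicted
    # Gaussian negative log-likelihood, scaled by 2 and without constants.
    return math.log(var) + err * err / var


# Hypothetical table: patterns containing a transition get a stronger
# predictor tap and a larger error variance than patterns without one.
TABLE = {
    (-1, -1): ([0.2], 0.5),
    (-1, 1): ([0.6], 1.0),
    (1, -1): ([0.6], 1.0),
    (1, 1): ([0.2], 0.5),
}

metric = dd_branch_metric(1.0, [1.0], (-1, 1), TABLE)
```

The logarithmic term matters only because the variance changes from branch to branch. With a single pattern-independent variance it is a common offset, and the metric reduces to the squared prediction error used in stationary NPML.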

NPML and its various forms represent the core read-channel and detection technology used in recording systems employing advanced error-correcting codes that lend themselves to soft decoding, such as low-density parity-check (LDPC) codes. For example, if noise-predictive detection is performed in conjunction with a maximum a posteriori (MAP) detection algorithm such as the BCJR algorithm, [13] then NPML and NPML-like detection allow the computation of soft reliability information on individual code symbols, while retaining all the performance advantages associated with noise-predictive techniques. The soft information generated in this manner is used for soft decoding of the error-correcting code. Moreover, the soft information computed by the decoder can be fed back to the soft detector to improve detection performance. In this way, the error-rate performance at the decoder output can be improved iteratively over successive soft detection/decoding rounds.