Related Research Articles

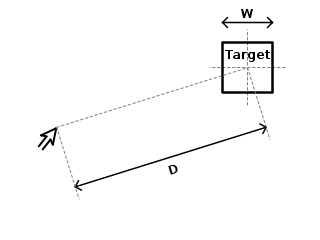

Fitts's law is a predictive model of human movement primarily used in human–computer interaction and ergonomics. The law predicts that the time required to rapidly move to a target area is a function of the ratio between the distance to the target and the width of the target. Fitts's law is used to model the act of pointing, either by physically touching an object with a hand or finger, or virtually, by pointing to an object on a computer monitor using a pointing device. It was initially developed by Paul Fitts.
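Fitts's law is usually written as MT = a + b · ID, where MT is movement time and the index of difficulty ID grows with the logarithm of the distance-to-width ratio. Fitts's original formulation used ID = log2(2D/W). The minimal sketch below uses the Shannon formulation, ID = log2(D/W + 1), which is common in HCI work. The function names and the values of the constants a and b are hypothetical and chosen for illustration only. In practice, a and b are fitted by linear regression to measured pointing times for a particular device and user group.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of the index of difficulty, in bits: log2(D/W + 1)."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1)


def movement_time(distance: float, width: float, a: float, b: float) -> float:
    """Predicted movement time MT = a + b * ID.

    a (seconds) and b (seconds per bit) are empirical constants that must be
    fitted per device and user population; none are implied by the law itself.
    """
    return a + b * index_of_difficulty(distance, width)


# Hypothetical constants, for illustration only.
A, B = 0.1, 0.15

# Each step doubles D/W + 1, so ID rises by exactly one bit: 6, 7, then 8 bits.
for d in (63, 127, 255):
    print(d, index_of_difficulty(d, 1), round(movement_time(d, 1, A, B), 3))
```

Because the dependence is logarithmic, doubling the effective distance adds only a constant b seconds to the prediction. Shrinking the target width has the mirror-image effect.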

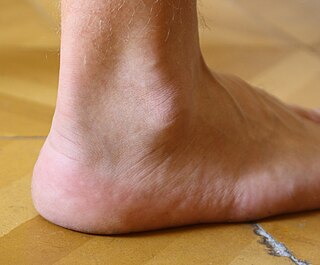

The ankle, also called the talocrural region or, informally, the jumping bone, is the area where the foot and the leg meet. The ankle includes three joints: the ankle joint proper or talocrural joint, the subtalar joint, and the inferior tibiofibular joint. The movements produced at the talocrural joint are dorsiflexion and plantarflexion of the foot. In common usage, the term ankle refers exclusively to the ankle region. In medical terminology, "ankle" can refer broadly to the region or specifically to the talocrural joint.

Athetosis is a symptom characterized by slow, involuntary, convoluted, writhing movements of the fingers, hands, toes, and feet and, in some cases, the arms, legs, neck, and tongue. Movements typical of athetosis are sometimes called athetoid movements. Brain lesions, particularly of the corpus striatum, are most often the direct cause. The symptom does not occur alone. It is often accompanied by the symptoms of cerebral palsy, since it frequently results from that condition. Treatments for athetosis are not very effective and in most cases aim only to manage the uncontrollable movement rather than its cause.

Gesture recognition is an area of research and development in computer science and language technology concerned with the recognition and interpretation of human gestures. A subdiscipline of computer vision, it employs mathematical algorithms to interpret gestures.

Psychomotor agitation is a symptom in various disorders and health conditions. It is characterized by unintentional and purposeless motions and restlessness, often but not always accompanied by emotional distress. Typical manifestations include pacing, hand-wringing, uncontrolled tongue movement, pulling off clothing and putting it back on, and similar actions. In more severe cases, the motions may become harmful to the individual. Examples include ripping, tearing, or chewing at the skin around the fingernails, lips, or other body parts to the point of bleeding. Psychomotor agitation is typically found in various mental disorders, especially psychotic and mood disorders. It can also result from drug intoxication or withdrawal, or from severe hyponatremia. People with existing psychiatric disorders and men under the age of 40 are at a higher risk of developing psychomotor agitation.

Periodic limb movement disorder (PLMD) is a sleep disorder in which the patient moves the limbs involuntarily and periodically during sleep and has symptoms or problems related to these movements. PLMD should not be confused with restless legs syndrome (RLS), which is characterized by a voluntary response to an urge to move the legs due to discomfort. PLMD, on the other hand, is involuntary, and the patient is often unaware of the movements altogether. Periodic limb movements (PLMs) that occur during the daytime can be found, but they are considered a symptom of RLS. Only PLMs during sleep can suggest a diagnosis of PLMD.

Actroid is a type of android with strong visual human-likeness developed by Osaka University and manufactured by Kokoro Company Ltd. It was first unveiled at the 2003 International Robot Exhibition in Tokyo, Japan. Several different versions of the product have been produced since then. In most cases, the robot's appearance has been modeled after an average young woman of Japanese descent.

In artificial intelligence, an embodied agent, also sometimes referred to as an interface agent, is an intelligent agent that interacts with the environment through a physical body within that environment. Agents that are represented graphically with a body, for example a human or a cartoon animal, are also called embodied agents, although they have only virtual, not physical, embodiment. A branch of artificial intelligence focuses on empowering such agents to interact autonomously with human beings and the environment. Mobile robots are one example of physically embodied agents; Ananova and Microsoft Agent are examples of graphically embodied agents. Embodied conversational agents are embodied agents that are capable of engaging in conversation with one another and with humans employing the same verbal and nonverbal means that humans do.

In physiology, motor coordination is the orchestrated movement of multiple body parts as required to accomplish intended actions, like walking. This coordination is achieved by adjusting kinematic and kinetic parameters associated with each body part involved in the intended movement. The modifications of these parameters typically rely on sensory feedback from one or more sensory modalities, such as proprioception and vision.

The sensorimotor mu rhythm, also known as the mu wave, the comb or wicket rhythm, or the arciform rhythm, consists of synchronized patterns of electrical activity involving large numbers of neurons, probably of the pyramidal type, in the part of the brain that controls voluntary movement. These patterns can be measured by electroencephalography (EEG), magnetoencephalography (MEG), or electrocorticography (ECoG). They repeat at a frequency of 7.5–12.5 Hz and are most prominent when the body is physically at rest. Unlike the alpha wave, which occurs at a similar frequency over the resting visual cortex at the back of the scalp, the mu rhythm is found over the motor cortex, in a band running approximately from ear to ear. People suppress mu rhythms when they perform motor actions or, with practice, when they visualize performing them. This suppression is called desynchronization of the wave because EEG waveforms arise from large numbers of neurons firing in synchrony. The mu rhythm is suppressed even when one observes another person performing a motor action or an abstract motion with biological characteristics. Researchers such as V. S. Ramachandran and colleagues have suggested that this is a sign that the mirror neuron system is involved in mu rhythm suppression, although others disagree.
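Suppression of the mu rhythm is commonly quantified as a drop in signal power within its frequency band, expressed relative to a resting baseline. The minimal sketch below illustrates that idea on synthetic data. Several things are assumptions made for illustration:

* the 128 Hz sampling rate;
* the made-up signals;
* the function names;
* the use of a naive discrete Fourier transform, chosen only so that no third-party library is needed.

The band edges come from the 7.5 to 12.5 Hz range given above. A real EEG pipeline would add filtering, artifact rejection, and an FFT or Welch estimate.

```python
import cmath
import math


def band_power(signal, fs, f_lo=7.5, f_hi=12.5):
    """Sum of squared DFT magnitudes over bins whose frequency lies in [f_lo, f_hi].

    Uses a naive DFT restricted to in-band bins (standard library only).
    """
    n = len(signal)
    power = 0.0
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            x_k = sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n)
                      for t in range(n))
            power += abs(x_k) ** 2
    return power


def relative_change_percent(rest_power, task_power):
    """Band-power change relative to rest; negative values indicate suppression."""
    return (task_power - rest_power) / rest_power * 100.0


FS = 128      # sampling rate in Hz (assumed)
N = 2 * FS    # two seconds of samples


def synth(mu_amplitude):
    """Hypothetical signal: a 10 Hz 'mu' sine plus a 40 Hz sine outside the band."""
    return [mu_amplitude * math.sin(2 * math.pi * 10 * t / FS)
            + math.sin(2 * math.pi * 40 * t / FS) for t in range(N)]


rest = band_power(synth(2.0), FS)   # strong mu component at rest
move = band_power(synth(0.5), FS)   # weaker mu component during movement
print(f"mu band power change: {relative_change_percent(rest, move):.2f}%")
```

Quartering the mu amplitude cuts its band power to one sixteenth, a change of about -94%. The 40 Hz component falls outside the band and does not affect the result.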

The supplementary motor area (SMA) is a part of the motor cortex of primates that contributes to the control of movement. It is located on the midline surface of the hemisphere just in front of the primary motor cortex leg representation. In monkeys, the SMA contains a rough map of the body. In humans, the body map is not apparent. Neurons in the SMA project directly to the spinal cord and may play a role in the direct control of movement. Possible functions attributed to the SMA include:

* postural stabilization of the body;
* coordination of both sides of the body, such as during bimanual action;
* control of movements that are internally generated rather than triggered by sensory events;
* control of sequences of movements.

All of these proposed functions remain hypotheses. The precise role or roles of the SMA are not yet known.

Mind-wandering is broadly defined as thoughts unrelated to the task at hand, that is, thoughts that are both task-unrelated and stimulus-independent. It can take the form of three subtypes: positive constructive daydreaming, guilty fear of failure, and poor attentional control.

Eye–hand coordination is the coordinated motor control of eye movement with hand movement. Visual input is processed to guide reaching and grasping, and proprioception of the hands is used to guide the eyes, a form of multisensory integration. Eye–hand coordination has been studied in activities as diverse as moving solid objects such as wooden blocks, archery, sporting performance, music reading, computer gaming, copy-typing, and even tea-making. It is part of the mechanism for performing everyday tasks. Without it, most people would be unable to carry out even the simplest actions, such as picking up a book from a table.

Hiroshi Ishiguro is a Japanese roboticist and engineer. He is the director of the Intelligent Robotics Laboratory, part of the Department of Systems Innovation in the Graduate School of Engineering Science at Osaka University, Japan. A notable development of the laboratory is the Actroid, a humanoid robot with lifelike appearance and visible behaviour such as facial movements.

Fidgeting is the act of moving about restlessly in a way that is not essential to ongoing tasks or events. Fidgeting may involve playing with one's fingers, hair, or personal objects. In this sense, it may be considered twiddling or fiddling. Fidgeting is commonly used as a label for unexplained or subconscious activities and postural movements that people perform while seated or standing idle.

Social presence theory explores how the "sense of being with another" is influenced by digital interfaces in human–computer interactions. Developed from the foundations of interpersonal communication and symbolic interactionism, social presence theory was first formally introduced by John Short, Ederyn Williams, and Bruce Christie in The Social Psychology of Telecommunications. Research on social presence theory has recently expanded to examine the efficacy of telecommunications media, including communication on social networking services (SNS). The theory holds that computer-based communication is lower in social presence than face-to-face communication. However, different forms of computer-based communication can produce different levels of social presence between communicators and receivers.

In computing, 3D interaction is a form of human-machine interaction where users are able to move and perform interaction in 3D space. Both human and machine process information where the physical position of elements in the 3D space is relevant.

Human–computer interaction (HCI) is research in the design and use of computer technology, focusing on the interfaces between people (users) and computers. HCI researchers observe the ways humans interact with computers and design technologies that allow humans to interact with computers in novel ways. A device that allows interaction between a human being and a computer is known as a "human–computer interface".

Non-verbal leakage is a form of non-verbal behavior that occurs when a person verbalizes one thing but their body language indicates another. Common forms include facial movements and hand-to-face gestures. The term "non-verbal leakage" first appeared in the literature in 1968. It prompted many subsequent studies on the topic throughout the 1970s, and related research continues today.

The psychology of dance is the set of mental states associated with dancing and watching others dance. The term also names the interdisciplinary academic field that studies people who dance or watch dance. Areas of research include interventions to improve the health of older adults, programs for stimulating children’s creativity, dance movement therapy, mate selection, and emotional responses.