Related Research Articles

In computer programming, assembly language, often referred to simply as Assembly and commonly abbreviated as ASM or asm, is any low-level programming language with a very strong correspondence between the instructions in the language and the architecture's machine code instructions. Assembly language usually has one statement per machine instruction (1:1), but constants, comments, assembler directives, symbolic labels of, e.g., memory locations, registers, and macros are generally also supported.

In processor design, microcode (μcode) is a technique that interposes a layer of computer organization between the central processing unit (CPU) hardware and the programmer-visible instruction set architecture of a computer. Microcode is a layer of hardware-level instructions that implement higher-level machine code instructions or internal finite-state machine sequencing in many digital processing elements. Microcode is used in general-purpose central processing units, although in current desktop CPUs, it is only a fallback path for cases that the faster hardwired control unit cannot handle.

The PDP-8 is a 12-bit minicomputer that was produced by Digital Equipment Corporation (DEC). It was the first commercially successful minicomputer, with over 50,000 units being sold over the model's lifetime. Its basic design follows the pioneering LINC but has a smaller instruction set, which is an expanded version of the PDP-5 instruction set. Similar machines from DEC are the PDP-12 which is a modernized version of the PDP-8 and LINC concepts, and the PDP-14 industrial controller system.

In computing, booting is the process of starting a computer as initiated via hardware such as a button or by a software command. After it is switched on, a computer's central processing unit (CPU) has no software in its main memory, so some process must load software into memory before it can be executed. This may be done by hardware or firmware in the CPU, or by a separate processor in the computer system.

In computing, endianness is the order or sequence of bytes of a word of digital data in computer memory. Endianness is primarily expressed as big-endian (BE) or little-endian (LE). A big-endian system stores the most significant byte of a word at the smallest memory address and the least significant byte at the largest. A little-endian system, in contrast, stores the least-significant byte at the smallest address. Bi-endianness is a feature supported by numerous computer architectures that feature switchable endianness in data fetches and stores or for instruction fetches. Other orderings are generically called middle-endian or mixed-endian.

In computer science, an instruction set architecture (ISA), also called computer architecture, is an abstract model of a computer. A device that executes instructions described by that ISA, such as a central processing unit (CPU), is called an implementation.

The program counter (PC), commonly called the instruction pointer (IP) in Intel x86 and Itanium microprocessors, and sometimes called the instruction address register (IAR), the instruction counter, or just part of the instruction sequencer, is a processor register that indicates where a computer is in its program sequence.

The IBM 1620 was announced by IBM on October 21, 1959, and marketed as an inexpensive scientific computer. After a total production of about two thousand machines, it was withdrawn on November 19, 1970. Modified versions of the 1620 were used as the CPU of the IBM 1710 and IBM 1720 Industrial Process Control Systems.

The IBM 1401 is a variable-wordlength decimal computer that was announced by IBM on October 5, 1959. The first member of the highly successful IBM 1400 series, it was aimed at replacing unit record equipment for processing data stored on punched cards and at providing peripheral services for larger computers. The 1401 is considered to be the Ford Model-T of the computer industry, because it was mass-produced and because of its sales volume. Over 12,000 units were produced and many were leased or resold after they were replaced with newer technology. The 1401 was withdrawn on February 8, 1971.

The IBM 650 Magnetic Drum Data-Processing Machine is an early digital computer produced by IBM in the mid-1950s. It was the first mass produced computer in the world. Almost 2,000 systems were produced, the last in 1962, and it was the first computer to make a meaningful profit. The first one was installed in late 1954 and it was the most-popular computer of the 1950s.

Drum memory was a magnetic data storage device invented by Gustav Tauschek in 1932 in Austria. Drums were widely used in the 1950s and into the 1960s as computer memory.

The IBM 700/7000 series is a series of large-scale (mainframe) computer systems that were made by IBM through the 1950s and early 1960s. The series includes several different, incompatible processor architectures. The 700s use vacuum-tube logic and were made obsolete by the introduction of the transistorized 7000s. The 7000s, in turn, were eventually replaced with System/360, which was announced in 1964. However the 360/65, the first 360 powerful enough to replace 7000s, did not become available until November 1965. Early problems with OS/360 and the high cost of converting software kept many 7000s in service for years afterward.

The IBM 1130 Computing System, introduced in 1965, was IBM's least expensive computer at that time. A binary 16-bit machine, it was marketed to price-sensitive, computing-intensive technical markets, like education and engineering, succeeding the decimal IBM 1620 in that market segment. Typical installations included a 1 megabyte disk drive that stored the operating system, compilers and object programs, with program source generated and maintained on punched cards. Fortran was the most common programming language used, but several others, including APL, were available.

Addressing modes are an aspect of the instruction set architecture in most central processing unit (CPU) designs. The various addressing modes that are defined in a given instruction set architecture define how the machine language instructions in that architecture identify the operand(s) of each instruction. An addressing mode specifies how to calculate the effective memory address of an operand by using information held in registers and/or constants contained within a machine instruction or elsewhere.

The DEUCE was one of the earliest British commercially available computers, built by English Electric from 1955. It was the production version of the Pilot ACE, itself a cut-down version of Alan Turing's ACE.

A branch is an instruction in a computer program that can cause a computer to begin executing a different instruction sequence and thus deviate from its default behavior of executing instructions in order. Branch may also refer to the act of switching execution to a different instruction sequence as a result of executing a branch instruction. Branch instructions are used to implement control flow in program loops and conditionals.

The Bendix G-15 is a computer introduced in 1956 by the Bendix Corporation, Computer Division, Los Angeles, California. It is about 5 by 3 by 3 feet and weighs about 966 pounds (438 kg). The G-15 has a drum memory of 2,160 29-bit words, along with 20 words used for special purposes and rapid-access storage. The base system, without peripherals, cost $49,500. A working model cost around $60,000. It could also be rented for $1,485 per month. It was meant for scientific and industrial markets. The series was gradually discontinued when Control Data Corporation took over the Bendix computer division in 1963.

A front panel was used on early electronic computers to display and allow the alteration of the state of the machine's internal registers and memory. The front panel usually consisted of arrays of indicator lamps, digit and symbol displays, toggle switches, dials, and push buttons mounted on a sheet metal face plate. In early machines, CRTs might also be present. Prior to the development of CRT system consoles, many computers such as the IBM 1620 had console typewriters.

In computing, an emulator is hardware or software that enables one computer system to behave like another computer system. An emulator typically enables the host system to run software or use peripheral devices designed for the guest system. Emulation refers to the ability of a computer program in an electronic device to emulate another program or device.

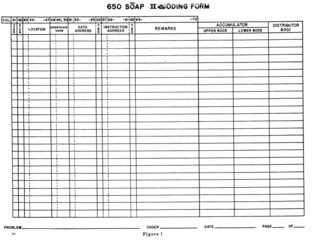

The Symbolic Optimal Assembly Program (SOAP) is an assembler for the IBM 650 Magnetic Drum Data-Processing Machine, an early computer first used in 1954. It was developed by Stan Poley at the IBM Thomas J. Watson Research Center. SOAP is called Optimal because it attempts to store generated instructions on the storage drum to minimize the access time from one instruction to the next. SOAP is a multi-pass assembler, that is, it processes the source program more than once in order to generate the object program.

References

- ↑ IBM SOAP Manual.

- ↑ Kugel, Herb (October 22, 2001). "The IBM 650". Dr. Dobb's.