Related Research Articles

Distributed artificial intelligence (DAI), also called decentralized artificial intelligence, is a subfield of artificial intelligence research dedicated to the development of distributed solutions for problems. DAI is closely related to, and a predecessor of, the field of multi-agent systems.

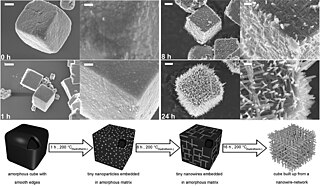

Self-organization, also called spontaneous order in the social sciences, is a process where some form of overall order arises from local interactions between parts of an initially disordered system. The process can be spontaneous when sufficient energy is available, not needing control by any external agent. It is often triggered by seemingly random fluctuations, amplified by positive feedback. The resulting organization is wholly decentralized, distributed over all the components of the system. As such, the organization is typically robust and able to survive or self-repair substantial perturbation. Chaos theory discusses self-organization in terms of islands of predictability in a sea of chaotic unpredictability.

Context awareness refers, in information and communication technologies, to the capability to take into account the situation of entities, which may be users or devices but are not limited to those. Location is only the most obvious element of this situation. Narrowly defined for mobile devices, context awareness thus generalizes location awareness. Whereas location may determine how certain processes around a contributing device operate, context may be applied more flexibly with mobile users, especially with users of smartphones. The term originated in ubiquitous computing, also called pervasive computing, which sought to link changes in the environment with computer systems that are otherwise static. The term has also been applied to business theory in relation to contextual application design and business process management issues.

Bio-inspired computing, short for biologically inspired computing, is a field of study which seeks to solve computer science problems using models of biology. It relates to connectionism, social behavior, and emergence. Within computer science, bio-inspired computing relates to artificial intelligence and machine learning. Bio-inspired computing is a major subset of natural computation.

In computer science, a software agent is a computer program that acts for a user or another program in a relationship of agency.

Autonomic computing (AC) refers to distributed computing resources with self-managing characteristics, which adapt to unpredictable changes while hiding intrinsic complexity from operators and users. Initiated by IBM in 2001, this initiative ultimately aimed to develop computer systems capable of self-management, to overcome the rapidly growing complexity of computing systems management, and to reduce the barrier that complexity poses to further growth.

In systems engineering, dependability is a measure of a system's availability, reliability, maintainability, and in some cases, other characteristics such as durability, safety and security. In real-time computing, dependability is the ability to provide services that can be trusted within a time period. The service guarantees must hold even when the system is subject to attacks or natural failures.

A multi-agent system is a computerized system composed of multiple interacting intelligent agents. Multi-agent systems can solve problems that are difficult or impossible for an individual agent or a monolithic system to solve. Intelligence may include methodic, functional, procedural approaches, algorithmic search or reinforcement learning. With advancements in large language models (LLMs), LLM-based multi-agent systems have emerged as a new area of research, enabling more sophisticated interactions and coordination among agents.

Swarm robotics is the study of how to design independent systems of robots without centralized control. The emerging swarming behavior of robotic swarms is created through the interactions between individual robots and the environment. This idea emerged from the field of artificial swarm intelligence, as well as from studies of insects, ants and other fields in nature where swarm behavior occurs.

Özalp Babaoğlu is a Turkish computer scientist. He is currently professor of computer science at the University of Bologna, Italy. He received a Ph.D. in 1981 from the University of California at Berkeley. He is the recipient of the 1982 Sakrison Memorial Award, the 1989 UNIX International Recognition Award and the 1993 USENIX Association Lifetime Achievement Award for his contributions to the UNIX system community and to Open Industry Standards. Before moving to Bologna in 1988, Babaoğlu was an associate professor in the Department of Computer Science at Cornell University. He has participated in several European research projects in distributed computing and complex systems. Babaoğlu is an ACM Fellow and has served as a resident fellow of the Institute of Advanced Studies at the University of Bologna and on the editorial boards for ACM Transactions on Computer Systems, ACM Transactions on Autonomous and Adaptive Systems and Springer-Verlag Distributed Computing.

Gender HCI is a subfield of human-computer interaction that focuses on the design and evaluation of interactive systems for humans. The specific emphasis in gender HCI is on variations in how people of different genders interact with computers.

A network-centric organization is a network governance pattern which empowers knowledge workers to create and leverage information to increase competitive advantage through the collaboration of small and agile self-directed teams. It is emerging in many progressive 21st century enterprises. This implies new ways of working, with consequences for the enterprise’s infrastructure, processes, people and culture.

Autonomous logistics describes systems that provide unmanned, autonomous transfer of equipment, baggage, people, information or resources from point to point with minimal human intervention. Autonomous logistics is a new area of research; there are currently few papers on the topic, and even fewer systems developed or deployed. With web-enabled cloud software, there are companies focused on developing and deploying such systems, which will begin coming online in 2018.

Lateral computing is a lateral thinking approach to solving computing problems. Lateral thinking has been made popular by Edward de Bono. This thinking technique is applied to generate creative ideas and solve problems. Similarly, by applying lateral-computing techniques to a problem, it can become much easier to arrive at a computationally inexpensive, easy to implement, efficient, innovative or unconventional solution.

In software engineering, a microservice architecture is an architectural pattern that arranges an application as a collection of loosely coupled, fine-grained services, communicating through lightweight protocols. A microservice-based architecture enables teams to develop and deploy their services independently, reduce code interdependency and increase readability and modularity within a codebase. This is achieved by reducing several dependencies in the codebase, allowing developers to evolve their services with limited restrictions, and reducing additional complexity. Consequently, organizations can develop software with rapid growth and scalability, as well as implement off-the-shelf services more easily. These benefits come with the cost of needing to maintain a decoupled structure within the codebase, which means its initial implementation is more complex than that of a monolithic codebase. Interfaces need to be designed carefully and treated as APIs.

Cloud robotics is a field of robotics that attempts to invoke cloud technologies such as cloud computing, cloud storage, and other Internet technologies centered on the benefits of converged infrastructure and shared services for robotics. When connected to the cloud, robots can benefit from the powerful computation, storage, and communication resources of modern data centers in the cloud, which can process and share information from various robots or agents. Humans can also delegate tasks to robots remotely through networks. Cloud computing technologies enable robot systems to be endowed with powerful capabilities whilst reducing costs. Thus, it is possible to build lightweight, low-cost, smarter robots with an intelligent "brain" in the cloud. The "brain" consists of a data center, knowledge base, task planners, deep learning, information processing, environment models, communication support, etc.

An autonomous aircraft is an aircraft which flies under the control of on-board autonomous robotic systems and needs no intervention from a human pilot or remote control. Most contemporary autonomous aircraft are unmanned aerial vehicles (drones) with pre-programmed algorithms to perform designated tasks, but advancements in artificial intelligence technologies mean that autonomous control systems are reaching a point where several air taxis and associated regulatory regimes are being developed.

Martin Wirsing is a German computer scientist, and Professor at the Ludwig-Maximilians-Universität München, Germany.

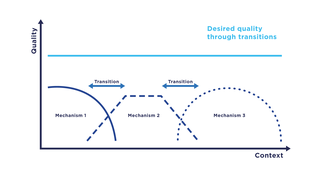

Transition refers to a computer science paradigm in the context of communication systems which describes the change of communication mechanisms, i.e., functions of a communication system, in particular, service and protocol components. In a transition, communication mechanisms within a system are replaced by functionally comparable mechanisms with the aim to ensure the highest possible quality, e.g., as captured by the quality of service.

The Internet of Military Things (IoMT) is a class of Internet of things for combat operations and warfare. It is a complex network of interconnected entities, or "things", in the military domain that continually communicate with each other to coordinate, learn, and interact with the physical environment to accomplish a broad range of activities in a more efficient and informed manner. The concept of IoMT is largely driven by the idea that future military battles will be dominated by machine intelligence and cyber warfare and will likely take place in urban environments. By creating a miniature ecosystem of smart technology capable of distilling sensory information and autonomously governing multiple tasks at once, the IoMT is conceptually designed to offload much of the physical and mental burden that warfighters encounter in a combat setting.
