Related Research Articles

In 3D computer graphics, normal mapping, or Dot3 bump mapping, is a texture mapping technique used for faking the lighting of bumps and dents – an implementation of bump mapping. It is used to add details without using more polygons. A common use of this technique is to greatly enhance the appearance and details of a low polygon model by generating a normal map from a high polygon model or height map.

Shadow volume is a technique used in 3D computer graphics to add shadows to a rendered scene. They were first proposed by Frank Crow in 1977 as the geometry describing the 3D shape of the region occluded from a light source. A shadow volume divides the virtual world in two: areas that are in shadow and areas that are not.

CryEngine is a game engine designed by the German game developer Crytek. It has been used in all of their titles with the initial version being used in Far Cry, and continues to be updated to support new consoles and hardware for their games. It has also been used for many third-party games under Crytek's licensing scheme, including Sniper: Ghost Warrior 2 and SNOW. Warhorse Studios uses a modified version of the engine for their medieval RPG Kingdom Come: Deliverance. Ubisoft maintains an in-house, heavily modified version of CryEngine from the original Far Cry called the Dunia Engine, which is used in their later iterations of the Far Cry series. The Dunia engine would in turn be further modified and used in games such as The Crew 2.

Parallax mapping is an enhancement of the bump mapping or normal mapping techniques applied to textures in 3D rendering applications such as video games. To the end user, this means that textures such as stone walls will have more apparent depth and thus greater realism with less of an influence on the performance of the simulation. Parallax mapping was introduced by Tomomichi Kaneko et al., in 2001.

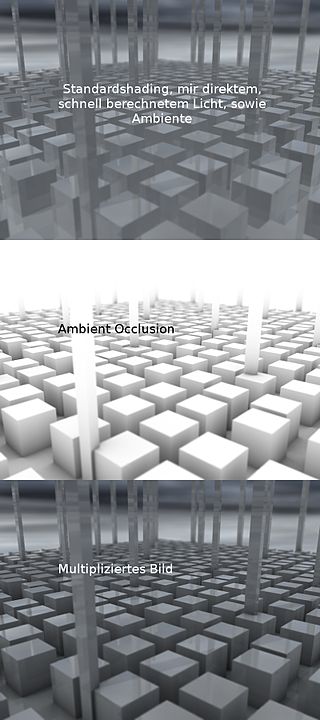

In 3D computer graphics, modeling, and animation, ambient occlusion is a shading and rendering technique used to calculate how exposed each point in a scene is to ambient lighting. For example, the interior of a tube is typically more occluded than the exposed outer surfaces, and becomes darker the deeper inside the tube one goes.

High-dynamic-range rendering, also known as high-dynamic-range lighting, is the rendering of computer graphics scenes by using lighting calculations done in high dynamic range (HDR). This allows preservation of details that may be lost due to limiting contrast ratios. Video games and computer-generated movies and special effects benefit from this as it creates more realistic scenes than with more simplistic lighting models.

In computer graphics, per-pixel lighting refers to any technique for lighting an image or scene that calculates illumination for each pixel on a rendered image. This is in contrast to other popular methods of lighting such as vertex lighting, which calculates illumination at each vertex of a 3D model and then interpolates the resulting values over the model's faces to calculate the final per-pixel color values.

UNIGINE is a proprietary cross-platform game engine developed by UNIGINE Company used in simulators, virtual reality systems, serious games and visualization. It supports OpenGL 4, Vulkan and DirectX 12.

In computer graphics, relief mapping is a texture mapping technique first introduced in 2000 used to render the surface details of three-dimensional objects accurately and efficiently. It can produce accurate depictions of self-occlusion, self-shadowing, and parallax. It is a form of short-distance ray tracing done in a pixel shader. Relief mapping is highly comparable in both function and approach to another displacement texture mapping technique, Parallax occlusion mapping, considering that they both rely on ray tracing, though the two are not to be confused with each other, as parallax occlusion mapping uses reverse heightmap tracing.

3D rendering is the 3D computer graphics process of converting 3D models into 2D images on a computer. 3D renders may include photorealistic effects or non-photorealistic styles.

In computer graphics, a texture mapping unit (TMU) is a component in modern graphics processing units (GPUs). They are able to rotate, resize, and distort a bitmap image to be placed onto an arbitrary plane of a given 3D model as a texture, in a process called texture mapping. In modern graphics cards it is implemented as a discrete stage in a graphics pipeline, whereas when first introduced it was implemented as a separate processor, e.g. as seen on the Voodoo2 graphics card.

Self-Shadowing is a computer graphics lighting effect, used in 3D rendering applications such as computer animation and video games. Self-shadowing allows non-static objects in the environment, such as game characters and interactive objects, to cast shadows on themselves and each other. For example, without self-shadowing, if a character puts their right arm over the left, the right arm will not cast a shadow over the left arm. If that same character places a hand over a ball, that hand will cast a shadow over the ball.

In the field of 3D computer graphics, deferred shading is a screen-space shading technique that is performed on a second rendering pass, after the vertex and pixel shaders are rendered. It was first suggested by Michael Deering in 1988.

Screen space ambient occlusion (SSAO) is a computer graphics technique for efficiently approximating the ambient occlusion effect in real time. It was developed by Vladimir Kajalin while working at Crytek and was used for the first time in 2007 by the video game Crysis, also developed by Crytek.

Computer graphics deals with generating images and art with the aid of computers. Computer graphics is a core technology in digital photography, film, video games, digital art, cell phone and computer displays, and many specialized applications. A great deal of specialized hardware and software has been developed, with the displays of most devices being driven by computer graphics hardware. It is a vast and recently developed area of computer science. The phrase was coined in 1960 by computer graphics researchers Verne Hudson and William Fetter of Boeing. It is often abbreviated as CG, or typically in the context of film as computer generated imagery (CGI). The non-artistic aspects of computer graphics are the subject of computer science research.

PICA200 is a graphics processing unit (GPU) designed by Digital Media Professionals Inc. (DMP), a Japanese GPU design startup company, for use in embedded devices such as vehicle systems, mobile phones, cameras, and game consoles. The PICA200 is an IP Core which can be licensed to other companies to incorporate into their SOCs. It was most notably licensed for use in the Nintendo 3DS.

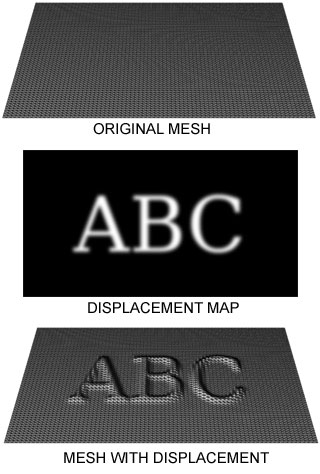

Displacement mapping is an alternative computer graphics technique in contrast to bump, normal, and parallax mapping, using a texture or height map to cause an effect where the actual geometric position of points over the textured surface are displaced, often along the local surface normal, according to the value the texture function evaluates to at each point on the surface. It gives surfaces a great sense of depth and detail, permitting in particular self-occlusion, self-shadowing and silhouettes; on the other hand, it is the most costly of this class of techniques owing to the large amount of additional geometry.

2D to 3D video conversion is the process of transforming 2D ("flat") film to 3D form, which in almost all cases is stereo, so it is the process of creating imagery for each eye from one 2D image.

This is a glossary of terms relating to computer graphics.

Physically based rendering (PBR) is a computer graphics approach that seeks to render images in a way that models the lights and surfaces with optics in the real world. It is often referred to as "Physically Based Lighting" or "Physically Based Shading". Many PBR pipelines aim to achieve photorealism. Feasible and quick approximations of the bidirectional reflectance distribution function and rendering equation are of mathematical importance in this field. Photogrammetry may be used to help discover and encode accurate optical properties of materials. PBR principles may be implemented in real-time applications using Shaders or offline applications using ray tracing or path tracing.

References

- 1 2 Engel, Wolfgang F. (2005). ShaderX3: Advanced Rendering with DirectX and OpenGL. Charles River Media. ISBN 978-1-58450-357-6.

- ↑ "Unknown" (PDF).[ permanent dead link ]

- ↑ "Sketches | Conference: SIGGRAPH 2005". Archived from the original on 2015-02-21. Retrieved 2008-11-29.

- ↑ "Computing - Buying Advice & Guides".

- ↑ "SDK Editions and Pricing | UNIGINE: Real-time 3D engine".

- ↑ "Crytek | CryENGINE2". Archived from the original on 2009-11-30. Retrieved 2009-12-10.

- ↑ "Crytek | MyCryENGINE". Archived from the original on 2009-10-17. Retrieved 2009-12-10.

- ↑ "Generating stereoscopic images with parallax occlusion mapping". Archived from the original on 2012-02-19. Retrieved 2008-11-29.