In mathematics, an equation is a formula that expresses the equality of two expressions, by connecting them with the equals sign =. The word equation and its cognates in other languages may have subtly different meanings; for example, in French an équation is defined as containing one or more variables, while in English, any well-formed formula consisting of two expressions related with an equals sign is an equation.

Quadratic programming (QP) is the process of solving certain mathematical optimization problems involving quadratic functions. Specifically, one seeks to optimize a multivariate quadratic function subject to linear constraints on the variables. Quadratic programming is a type of nonlinear programming.

Mathematical optimization or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems of sorts arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries.

In mathematical optimization and decision theory, a loss function or cost function is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite, in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy.

In mathematics, nonlinear programming (NLP) is the process of solving an optimization problem where some of the constraints or the objective function are nonlinear. An optimization problem is one of calculation of the extrema of an objective function over a set of unknown real variables and conditional to the satisfaction of a system of equalities and inequalities, collectively termed constraints. It is the sub-field of mathematical optimization that deals with problems that are not linear.

Model predictive control (MPC) is an advanced method of process control that is used to control a process while satisfying a set of constraints. It has been in use in the process industries in chemical plants and oil refineries since the 1980s. In recent years it has also been used in power system balancing models and in power electronics. Model predictive controllers rely on dynamic models of the process, most often linear empirical models obtained by system identification. The main advantage of MPC is the fact that it allows the current timeslot to be optimized, while keeping future timeslots in account. This is achieved by optimizing a finite time-horizon, but only implementing the current timeslot and then optimizing again, repeatedly, thus differing from a linear–quadratic regulator (LQR). Also MPC has the ability to anticipate future events and can take control actions accordingly. PID controllers do not have this predictive ability. MPC is nearly universally implemented as a digital control, although there is research into achieving faster response times with specially designed analog circuitry.

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets. Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard.

In Bayesian statistics, a maximum a posteriori probability (MAP) estimate is an estimate of an unknown quantity, that equals the mode of the posterior distribution. The MAP can be used to obtain a point estimate of an unobserved quantity on the basis of empirical data. It is closely related to the method of maximum likelihood (ML) estimation, but employs an augmented optimization objective which incorporates a prior distribution over the quantity one wants to estimate. MAP estimation can therefore be seen as a regularization of maximum likelihood estimation.

In mathematical optimization, constrained optimization is the process of optimizing an objective function with respect to some variables in the presence of constraints on those variables. The objective function is either a cost function or energy function, which is to be minimized, or a reward function or utility function, which is to be maximized. Constraints can be either hard constraints, which set conditions for the variables that are required to be satisfied, or soft constraints, which have some variable values that are penalized in the objective function if, and based on the extent that, the conditions on the variables are not satisfied.

In statistics, M-estimators are a broad class of extremum estimators for which the objective function is a sample average. Both non-linear least squares and maximum likelihood estimation are special cases of M-estimators. The definition of M-estimators was motivated by robust statistics, which contributed new types of M-estimators. The statistical procedure of evaluating an M-estimator on a data set is called M-estimation. 48 samples of robust M-estimators can be found in a recent review study.

The cross-entropy (CE) method is a Monte Carlo method for importance sampling and optimization. It is applicable to both combinatorial and continuous problems, with either a static or noisy objective.

Multi-objective optimization is an area of multiple criteria decision making that is concerned with mathematical optimization problems involving more than one objective function to be optimized simultaneously. Multi-objective optimization has been applied in many fields of science, including engineering, economics and logistics where optimal decisions need to be taken in the presence of trade-offs between two or more conflicting objectives. Minimizing cost while maximizing comfort while buying a car, and maximizing performance whilst minimizing fuel consumption and emission of pollutants of a vehicle are examples of multi-objective optimization problems involving two and three objectives, respectively. In practical problems, there can be more than three objectives.

Advanced process monitor (APMonitor) is a modeling language for differential algebraic (DAE) equations. It is a free web-service or local server for solving representations of physical systems in the form of implicit DAE models. APMonitor is suited for large-scale problems and solves linear programming, integer programming, nonlinear programming, nonlinear mixed integer programming, dynamic simulation, moving horizon estimation, and nonlinear model predictive control. APMonitor does not solve the problems directly, but calls nonlinear programming solvers such as APOPT, BPOPT, IPOPT, MINOS, and SNOPT. The APMonitor API provides exact first and second derivatives of continuous functions to the solvers through automatic differentiation and in sparse matrix form.

In mathematics and its applications, a parametric family or a parameterized family is a family of objects whose differences depend only on the chosen values for a set of parameters.

In applied mathematics, Graver bases enable iterative solutions of linear and various nonlinear integer programming problems in polynomial time. They were introduced by Jack E. Graver. Their connection to the theory of Gröbner bases was discussed by Bernd Sturmfels. The algorithmic theory of Graver bases and its application to integer programming is described by Shmuel Onn.

Efstratios N. (Stratos) Pistikopoulos is a distinguished research professor at the Department of Chemical Engineering at Texas A&M University, as well as the director of the Texas A&M Energy Institute. From 1991-2015, he was a professor for chemical engineering at Imperial College, where he pioneered multi-parametric programming and invented the concept of explicit or multi-parametric model predictive control. He has authored and co/authored more than 350 peer reviewed journal articles, authored and/or edited 9 books and has been an invited speaker to many academic conferences and lectures, including the 21st Professor Roger W. H. Sargent lecture at Imperial College London entitled "Multi-Parametric Programming & Control 25 years later: what is next?". Additionally, Pistikopoulos has been elected a fellow of the Royal Academy of Engineering in 2013.

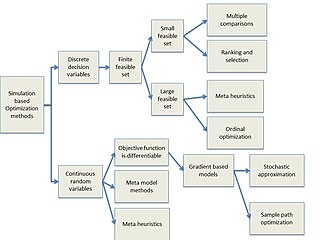

Simulation-based optimization integrates optimization techniques into simulation modeling and analysis. Because of the complexity of the simulation, the objective function may become difficult and expensive to evaluate. Usually, the underlying simulation model is stochastic, so that the objective function must be estimated using statistical estimation techniques.

The GEKKO Python package solves large-scale mixed-integer and differential algebraic equations with nonlinear programming solvers. Modes of operation include machine learning, data reconciliation, real-time optimization, dynamic simulation, and nonlinear model predictive control. In addition, the package solves Linear programming (LP), Quadratic programming (QP), Quadratically constrained quadratic program (QCQP), Nonlinear programming (NLP), Mixed integer programming (MIP), and Mixed integer linear programming (MILP). GEKKO is available in Python and installed with pip from PyPI of the Python Software Foundation.

In dual decomposition a problem is broken into smaller subproblems and a solution to the relaxed problem is found. This method can be employed for MRF optimization. Dual decomposition is applied to markov logic programs as an inference technique.