A microphone, colloquially called a mic, or mike, is a transducer that converts sound into an electrical signal. Microphones are used in many applications such as telephones, hearing aids, public address systems for concert halls and public events, motion picture production, live and recorded audio engineering, sound recording, two-way radios, megaphones, and radio and television broadcasting. They are also used in computers and other electronic devices, such as mobile phones, for recording sounds, speech recognition, VoIP, and other purposes, such as ultrasonic sensors or knock sensors.

Binaural recording is a method of recording sound that uses two microphones, arranged with the intent to create a 3D stereo sound sensation for the listener of actually being in the room with the performers or instruments. This effect is often created using a technique known as dummy head recording, wherein a mannequin head is fitted with a microphone in each ear. Binaural recording is intended for replay using headphones and will not translate properly over stereo speakers. This idea of a three-dimensional or "internal" form of sound has also been applied in other technologies, such as stethoscopes that create "in-head" acoustics and IMAX movies that create a three-dimensional acoustic experience.

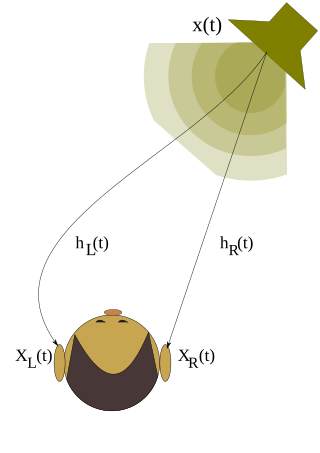

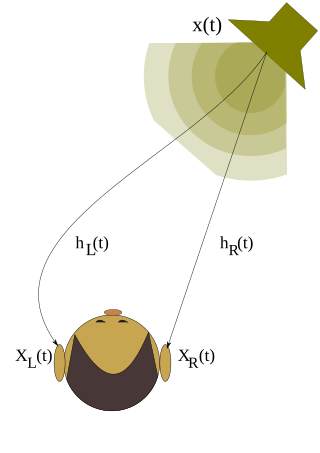

A head-related transfer function (HRTF) is a response that characterizes how an ear receives a sound from a point in space. As sound strikes the listener, the size and shape of the head, ears, and ear canal, the density of the head, and the size and shape of the nasal and oral cavities all transform the sound and affect how it is perceived, boosting some frequencies and attenuating others. Generally speaking, the HRTF boosts frequencies from 2–5 kHz with a primary resonance of +17 dB at 2,700 Hz. However, the response curve is more complex than a single bump, affects a broad frequency spectrum, and varies significantly from person to person.
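As a rough illustration of how an HRTF is applied in practice, the sketch below filters a mono signal with a pair of head-related impulse responses (HRIRs), the time-domain counterparts of the HRTF, to produce a left-ear and a right-ear signal. The function names and the tiny impulse responses are hypothetical placeholders chosen for illustration, not measured data; real HRIRs are typically hundreds of samples long and differ from person to person.

```python
def convolve(signal, impulse_response):
    """Direct-form convolution of two sample sequences (plain lists of floats)."""
    if not signal or not impulse_response:
        return []
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono source with a left-ear and a right-ear impulse response."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Placeholder responses (assumed, not measured): the source is to the left,
# so the right ear hears it two samples later and at half the level.
TOY_HRIR_LEFT = [1.0, 0.5]
TOY_HRIR_RIGHT = [0.0, 0.0, 0.5, 0.25]

left_ear, right_ear = render_binaural([1.0, 0.0, -1.0], TOY_HRIR_LEFT, TOY_HRIR_RIGHT)
```

The interaural delay and level difference built into the two placeholder responses are the kind of cues that a measured HRIR pair encodes, along with the frequency-dependent boosts and cuts described above.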

Headphones are a pair of small loudspeaker drivers worn on or around the head over a user's ears. They are electroacoustic transducers, which convert an electrical signal to a corresponding sound. Headphones let a single user listen to an audio source privately, in contrast to a loudspeaker, which emits sound into the open air for anyone nearby to hear. Headphones are also known as earphones or, colloquially, cans. Circumaural and supra-aural headphones use a band over the top of the head to hold the drivers in place. Another type, known as earbuds or earpieces, consists of individual units that plug into the user's ear canal. A third type is the bone conduction headphone, which typically wraps around the back of the head and rests in front of the ear canal, leaving the ear canal open. In the context of telecommunication, a headset is a combination of a headphone and microphone.

A mixing console or mixing desk is an electronic device for mixing audio signals, used in sound recording and reproduction and sound reinforcement systems. Inputs to the console include microphones, signals from electric or electronic instruments, or recorded sounds. Mixers may control analog or digital signals. The modified signals are summed to produce the combined output signals, which can then be broadcast, amplified through a sound reinforcement system or recorded.

Ambisonics is a full-sphere surround sound format: in addition to the horizontal plane, it covers sound sources above and below the listener.
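To make the full-sphere idea concrete, here is a minimal sketch of encoding a mono sample into first-order Ambisonic B-format (W, X, Y, Z). It assumes the traditional convention in which W carries a 1/√2 weighting, azimuth is measured counter-clockwise from straight ahead, and elevation is measured upward from the horizontal plane; other normalization and channel-ordering conventions exist, and the function name is purely illustrative.

```python
import math


def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into first-order B-format (W, X, Y, Z).

    Assumed convention: W weighted by 1/sqrt(2); azimuth counter-clockwise
    from the front; elevation positive upward.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)                # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)   # front-back component
    y = sample * math.sin(az) * math.cos(el)   # left-right component
    z = sample * math.sin(el)                  # up-down (height) component
    return w, x, y, z


# A source directly overhead contributes only to W and Z.
w, x, y, z = encode_first_order(1.0, azimuth_deg=0.0, elevation_deg=90.0)
```

The Z channel is what distinguishes this from a horizontal-only format: it captures sources above and below the listener.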

Surround sound is a technique for enriching the fidelity and depth of sound reproduction by using multiple audio channels from speakers that surround the listener. Its first application was in movie theaters. Prior to surround sound, theater sound systems commonly had three screen channels of sound that played from three loudspeakers located in front of the audience. Surround sound adds one or more channels from loudspeakers to the side or behind the listener that are able to create the sensation of sound coming from any horizontal direction around the listener.

The low-frequency effects (LFE) channel is a band-limited audio track that is used for reproducing deep and intense low-frequency sounds in the 3–120 Hz frequency range.

Dolby Pro Logic is a surround sound processing technology developed by Dolby Laboratories, designed to decode soundtracks encoded with Dolby Surround. The terms Dolby Stereo and LtRt are also used to describe soundtracks that are encoded using this technique.

Sound localization is a listener's ability to identify the location or origin of a detected sound in direction and distance.

An equal-loudness contour is a measure of sound pressure level, over the frequency spectrum, for which a listener perceives a constant loudness when presented with pure steady tones. The unit of measurement for loudness levels is the phon and is arrived at by reference to equal-loudness contours. By definition, two sine waves of differing frequencies are said to have equal-loudness level measured in phons if they are perceived as equally loud by the average young person without significant hearing impairment.

Stereophonic sound, or more commonly stereo, is a method of sound reproduction that recreates a multi-directional, 3-dimensional audible perspective. This is usually achieved by using two independent audio channels through a configuration of two loudspeakers in such a way as to create the impression of sound heard from various directions, as in natural hearing.

Virtual acoustic space (VAS), also known as virtual auditory space, is a technique in which sounds presented over headphones appear to originate from any desired direction in space. The illusion of a virtual sound source outside the listener's head is created.

Wave field synthesis (WFS) is a spatial audio rendering technique, characterized by creation of virtual acoustic environments. It produces artificial wavefronts synthesized by a large number of individually driven loudspeakers from elementary waves. Such wavefronts seem to originate from a virtual starting point, the virtual sound source. Contrary to traditional phantom sound sources, the localization of WFS-established virtual sound sources does not depend on the listener's position. Like a genuine sound source, the virtual source remains at a fixed starting point.

Panning is the distribution of an audio signal into a new stereo or multi-channel sound field determined by a pan control setting. A typical physical recording console has a pan control for each incoming source channel. A pan control or pan pot is an analog control with a position indicator that can range continuously from the 7 o'clock position when fully left to the 5 o'clock position when fully right. Audio mixing software replaces pan pots with on-screen virtual knobs or sliders which function like their physical counterparts.
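A software pan control can be sketched as a function that maps a knob position to a pair of channel gains. The example below assumes a constant-power (sine/cosine) pan law, which is one common choice among several and is not specified by the text above; the function name and the -1.0 to +1.0 position range are illustrative assumptions.

```python
import math


def constant_power_pan(sample, position):
    """Split a mono sample into (left, right) with a constant-power pan law.

    position runs from -1.0 (fully left) through 0.0 (center) to +1.0 (fully right).
    """
    if not -1.0 <= position <= 1.0:
        raise ValueError("position must be in [-1.0, 1.0]")
    # Map the position onto a quarter circle: 0 rad = left, pi/2 rad = right.
    angle = (position + 1.0) * math.pi / 4.0
    return sample * math.cos(angle), sample * math.sin(angle)


left, right = constant_power_pan(1.0, 0.0)  # centered source
```

With this law a centered source is sent to both channels at about 0.707 of its level (roughly -3 dB), so the summed power of the two channels stays constant as the source moves across the field.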

There are a number of well-developed microphone techniques used for recording musical, film, or voice sources or picking up sounds as part of sound reinforcement systems. The choice of technique depends on a number of factors, including:

A stage monitor system is a set of performer-facing loudspeakers called monitor speakers, stage monitors, floor monitors, wedges, or foldbacks on stage during live music performances in which a sound reinforcement system is used to amplify a performance for the audience. The monitor system allows musicians to hear themselves and fellow band members clearly.

In sound recording and reproduction, audio mixing is the process of optimizing and combining multitrack recordings into a final mono, stereo or surround sound product. In the process of combining the separate tracks, their relative levels are adjusted and balanced and various processes such as equalization and compression are commonly applied to individual tracks, groups of tracks, and the overall mix. In stereo and surround sound mixing, the placement of the tracks within the stereo field is adjusted and balanced. Audio mixing techniques and approaches vary widely and have a significant influence on the final product.
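The level-balancing step can be illustrated with a minimal mono mixdown: each track is scaled by a fader level given in decibels and the results are summed sample by sample. This is only a sketch under simple assumptions (tracks as plain lists of floats, no equalization, compression, or clipping protection), and the function names are hypothetical.

```python
def db_to_gain(level_db):
    """Convert a level in decibels to a linear amplitude gain."""
    return 10.0 ** (level_db / 20.0)


def mix_tracks(tracks, levels_db):
    """Sum several mono tracks into one, applying a per-track level in dB.

    Shorter tracks are treated as silent after their last sample.
    """
    if len(tracks) != len(levels_db):
        raise ValueError("need exactly one level per track")
    length = max((len(track) for track in tracks), default=0)
    mix = [0.0] * length
    for track, level_db in zip(tracks, levels_db):
        gain = db_to_gain(level_db)
        for i, sample in enumerate(track):
            mix[i] += gain * sample
    return mix


# A lead track at unity gain and a backing track pulled down by 20 dB.
mixed = mix_tracks([[1.0, 0.5], [0.5, 0.5, 0.5]], [0.0, -20.0])
```

A level of 0 dB leaves a track unchanged, while -20 dB scales it to one tenth of its amplitude.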

In audio engineering, joint encoding refers to a joining of several channels of similar information during encoding in order to obtain higher quality, a smaller file size, or both.
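One widely used form of joint encoding for two-channel audio is mid/side coding, sketched below as an illustration; the text above does not name a specific scheme, so this choice is an assumption. The left and right channels are replaced by their average (mid) and half their difference (side). When the two channels are similar, the side signal is close to zero and can be stored cheaply by a codec.

```python
def encode_mid_side(left, right):
    """Convert left/right sample lists into mid (average) and side (half difference)."""
    mid = [(l + r) / 2.0 for l, r in zip(left, right)]
    side = [(l - r) / 2.0 for l, r in zip(left, right)]
    return mid, side


def decode_mid_side(mid, side):
    """Recover the left/right sample lists from mid and side."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


left_in = [0.5, 0.25, -0.75]
right_in = [0.5, 0.0, -0.25]
mid, side = encode_mid_side(left_in, right_in)
left_out, right_out = decode_mid_side(mid, side)
```

The transform itself loses no information; any size reduction comes from how a codec then quantizes or compresses the mid and side signals.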

Transaural Stereo is a technology suite of analog circuits and digital signal processing algorithms related to the field of sound playback for audio communication and entertainment. It is based on the concept of crosstalk cancellation but in some versions can embody other processes such as binaural synthesis and equalization.