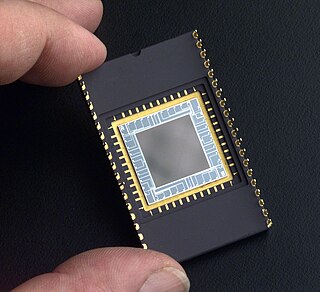

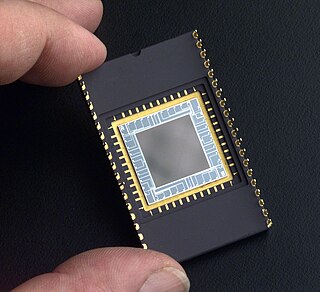

A charge-coupled device (CCD) is an integrated circuit containing an array of linked, or coupled, capacitors. Under the control of an external circuit, each capacitor can transfer its electric charge to a neighboring capacitor. CCD sensors are a major technology used in digital imaging.
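The neighbor-to-neighbor transfer described above can be pictured as a shift register. The following is a minimal sketch, not a model of any real device: the function name `ccd_readout` and the idea of a single output at the end of the row are assumptions made for illustration.

```python
def ccd_readout(charges):
    """Read out a 1-D row of charge packets, bucket-brigade style.

    On every clock cycle the packet in the last well is measured, and
    every other packet moves one well closer to the output.
    """
    wells = list(charges)          # copy so the caller's data is untouched
    samples = []
    for _ in range(len(wells)):
        samples.append(wells[-1])  # last well feeds the (hypothetical) output
        wells = [0] + wells[:-1]   # shift each packet one well toward the output
    return samples


row = [5, 0, 12, 7]
print(ccd_readout(row))  # [7, 12, 0, 5]
```

The packets arrive at the output in reverse order of their position, which is why a readout circuit must know the clocking sequence to reassemble the image.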

A Bayer filter mosaic is a color filter array (CFA) for arranging RGB color filters on a square grid of photosensors. Its particular arrangement of color filters is used in most single-chip digital image sensors found in digital cameras and camcorders to create a color image. The filter pattern is half green, one quarter red, and one quarter blue, and is therefore also called BGGR, RGBG, GRBG, or RGGB.
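The half-green, quarter-red, quarter-blue proportions can be checked by tiling a 2×2 cell over a grid. This is an illustrative sketch only: the helper `bayer_pattern` is hypothetical, and reading the four-letter name as a row-major 2×2 tile is an assumption of this example.

```python
from collections import Counter


def bayer_pattern(rows, cols, tile="RGGB"):
    """Return a rows x cols grid of filter letters by repeating a 2x2 tile.

    `tile` lists the 2x2 cell in row-major order, so "RGGB" means
    R G on even rows and G B on odd rows (an assumption of this sketch).
    """
    return [[tile[2 * (r % 2) + (c % 2)] for c in range(cols)]
            for r in range(rows)]


grid = bayer_pattern(4, 4)
for row in grid:
    print(" ".join(row))

counts = Counter(letter for row in grid for letter in row)
print(counts["G"], counts["R"], counts["B"])  # 8 4 4
```

In the 4×4 example, 8 of the 16 sites are green and 4 each are red and blue, matching the stated proportions.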

A light field, or lightfield, is a vector function that describes the amount of light flowing in every direction through every point in a space. The space of all possible light rays is given by the five-dimensional plenoptic function, and the magnitude of each ray is given by its radiance. Michael Faraday was the first to propose that light should be interpreted as a field, much like the magnetic fields on which he had been working. The term light field was coined by Andrey Gershun in a classic 1936 paper on the radiometric properties of light in three-dimensional space.

Computational photography refers to digital image capture and processing techniques that use digital computation instead of optical processes. Computational photography can improve the capabilities of a camera, introduce features that were not possible at all with film-based photography, or reduce the cost or size of camera elements. Examples of computational photography include in-camera computation of digital panoramas, high-dynamic-range images, and light field cameras. Light field cameras use novel optical elements to capture three-dimensional scene information, which can then be used to produce 3D images, enhanced depth of field, and selective defocusing. Enhanced depth of field reduces the need for mechanical focusing systems. All of these features use computational imaging techniques.

In physics, the wavefront of a time-varying wave field is the set (locus) of all points having the same phase. The term is generally meaningful only for fields that, at each point, vary sinusoidally in time with a single temporal frequency.

Inverse synthetic-aperture radar (ISAR) is a radar technique that uses radar imaging to generate a two-dimensional, high-resolution image of a target. It is analogous to conventional SAR, except that ISAR technology uses the movement of the target rather than the emitter to create the synthetic aperture. ISAR radars have a significant role aboard maritime patrol aircraft, providing radar images of sufficient quality to be used for target recognition. In situations where other radars display only a single unidentifiable bright moving pixel, the ISAR image is often adequate to discriminate between various missiles, military aircraft, and civilian aircraft.

A light field camera, also known as a plenoptic camera, is a camera that captures information about the light field emanating from a scene; that is, the intensity of light in a scene, and also the precise direction that the light rays are traveling in space. This contrasts with conventional cameras, which record only light intensity at various wavelengths.

An image sensor or imager is a sensor that detects and conveys information used to form an image. It does so by converting the variable attenuation of light waves into signals, small bursts of current that convey the information. The waves can be light or other electromagnetic radiation. Image sensors are used in electronic imaging devices of both analog and digital types, which include digital cameras, camera modules, camera phones, optical mouse devices, medical imaging equipment, night vision equipment such as thermal imaging devices, radar, sonar, and others. As technology changes, electronic and digital imaging tends to replace chemical and analog imaging.

The optical transfer function (OTF) of an optical system such as a camera, microscope, human eye, or projector specifies how different spatial frequencies are captured or transmitted. It is used by optical engineers to describe how the optics project light from the object or scene onto a photographic film, detector array, retina, screen, or simply the next item in the optical transmission chain. A variant, the modulation transfer function (MTF), neglects phase effects, but is equivalent to the OTF in many situations.
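The relationship between the OTF and the MTF can be illustrated numerically. The sketch below assumes the standard result that a one-dimensional slice of the OTF is the normalized Fourier transform of a line spread function (LSF), and that the MTF is its magnitude; the function names and the sample LSF values are made up for illustration.

```python
import cmath


def otf_from_lsf(lsf):
    """Discrete Fourier transform of a sampled line spread function,
    normalized so that the zero-frequency value is 1."""
    n = len(lsf)
    total = sum(lsf)
    otf = []
    for k in range(n):
        acc = sum(v * cmath.exp(-2j * cmath.pi * k * i / n)
                  for i, v in enumerate(lsf))
        otf.append(acc / total)
    return otf


def mtf_from_lsf(lsf):
    """MTF is the magnitude of the OTF, so phase information is dropped."""
    return [abs(h) for h in otf_from_lsf(lsf)]


# The same blur profile at two positions (illustrative values only).
lsf = [0.25, 0.5, 0.25, 0.0, 0.0, 0.0, 0.0, 0.0]
shifted = [0.0, 0.0, 0.25, 0.5, 0.25, 0.0, 0.0, 0.0]

print([round(m, 3) for m in mtf_from_lsf(lsf)])
print([round(m, 3) for m in mtf_from_lsf(shifted)])
print([round(cmath.phase(h), 3) for h in otf_from_lsf(shifted)])
```

Shifting the LSF changes only the phase of the OTF, so both profiles give the same MTF. This is the sense in which the MTF neglects phase effects.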

The following are common definitions related to the machine vision field.

An active-pixel sensor (APS) is an image sensor, invented by Peter J.W. Noble in 1968, in which each pixel sensor unit cell has a photodetector and one or more active transistors. In a metal–oxide–semiconductor (MOS) active-pixel sensor, MOS field-effect transistors (MOSFETs) are used as amplifiers. There are different types of APS, including the early NMOS APS and the now much more common complementary MOS (CMOS) APS, also known as the CMOS sensor. CMOS sensors are used in digital camera technologies such as cell phone cameras, web cameras, most modern digital pocket cameras, most digital single-lens reflex cameras (DSLRs), mirrorless interchangeable-lens cameras (MILCs), and lensless imaging for cells.

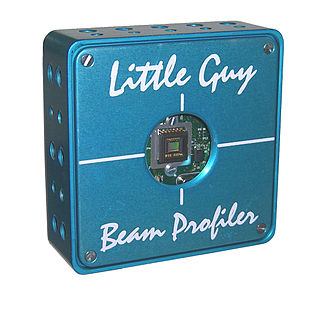

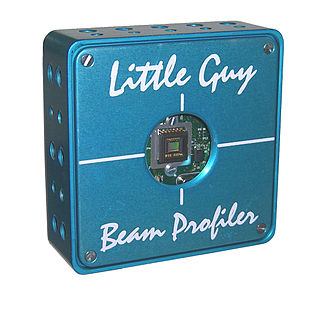

A laser beam profiler captures, displays, and records the spatial intensity profile of a laser beam at a particular plane transverse to the beam propagation path. Since there are many types of lasers—ultraviolet, visible, infrared, continuous wave, pulsed, high-power, low-power—there is an assortment of instrumentation for measuring laser beam profiles. No single laser beam profiler can handle every power level, pulse duration, repetition rate, wavelength, and beam size.

A microlens is a small lens, generally with a diameter less than a millimetre (mm) and often as small as 10 micrometres (μm). The small size of the lenses means that a simple design can give good optical quality, but unwanted effects sometimes arise due to optical diffraction at the small features. A typical microlens may be a single element with one plane surface and one spherical convex surface to refract the light. Because microlenses are so small, the substrate that supports them is usually thicker than the lens, and this has to be taken into account in the design. More sophisticated lenses may use aspherical surfaces, and others may use several layers of optical material to achieve their design performance.

A structured-light 3D scanner is a 3D scanning device for measuring the three-dimensional shape of an object using projected light patterns and a camera system.

Optical heterodyne detection is a method of extracting information encoded as modulation of the phase, frequency or both of electromagnetic radiation in the wavelength band of visible or infrared light. The light signal is compared with standard or reference light from a "local oscillator" (LO) that would have a fixed offset in frequency and phase from the signal if the latter carried null information. "Heterodyne" signifies more than one frequency, in contrast to the single frequency employed in homodyne detection.
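A standard textbook sketch shows where the measurable signal comes from. The symbols below ($E_s$, $E_{LO}$, $\omega_s$, $\omega_{LO}$, $\varphi$) are introduced here for illustration and are not taken from the text above. Assume the signal and local-oscillator fields at the detector are $E_s\cos(\omega_s t + \varphi)$ and $E_{LO}\cos(\omega_{LO} t)$, and that the detector responds to intensity, the square of the total field:

```latex
\begin{aligned}
I(t) &\propto \bigl[E_s\cos(\omega_s t+\varphi) + E_{LO}\cos(\omega_{LO} t)\bigr]^2 \\
     &= E_s^2\cos^2(\omega_s t+\varphi) + E_{LO}^2\cos^2(\omega_{LO} t) \\
     &\quad + E_s E_{LO}\cos\bigl((\omega_s-\omega_{LO})t+\varphi\bigr)
            + E_s E_{LO}\cos\bigl((\omega_s+\omega_{LO})t+\varphi\bigr)
\end{aligned}
```

A photodetector cannot follow optical-frequency oscillations, so the terms at $2\omega_s$, $2\omega_{LO}$, and $\omega_s+\omega_{LO}$ average out, leaving

```latex
I(t) \propto \tfrac{1}{2}E_s^2 + \tfrac{1}{2}E_{LO}^2
      + E_s E_{LO}\cos\bigl((\omega_s-\omega_{LO})t+\varphi\bigr)
```

Under these assumptions, the phase $\varphi$ and any frequency modulation of the signal appear in the beat term at the difference frequency $\omega_s-\omega_{LO}$, which is low enough to be processed electronically.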

The study of image formation encompasses the radiometric and geometric processes by which 2D images of 3D objects are formed. In the case of digital images, the image formation process also includes analog to digital conversion and sampling.
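The geometric and digital steps can be sketched with an ideal pinhole camera. This is a simplified illustration under stated assumptions: a focal length expressed in pixels, camera-frame coordinates, no lens distortion, and hypothetical function names (`project_point`, `to_pixel`, `quantize`).

```python
def project_point(point, focal_length_px):
    """Perspective projection of a 3D point (X, Y, Z), given in camera
    coordinates, onto the image plane of an ideal pinhole camera."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    return (focal_length_px * x / z, focal_length_px * y / z)


def to_pixel(image_xy, principal_point):
    """Spatial sampling: snap continuous image coordinates to the
    nearest integer pixel, offset by the principal point."""
    return (int(round(image_xy[0] + principal_point[0])),
            int(round(image_xy[1] + principal_point[1])))


def quantize(intensity, bits=8):
    """Analog-to-digital conversion of an intensity in [0, 1]."""
    levels = 2 ** bits
    clipped = min(max(intensity, 0.0), 1.0)
    return min(int(clipped * levels), levels - 1)


xy = project_point((0.5, -0.25, 2.0), focal_length_px=800)
print(xy)                          # (200.0, -100.0)
print(to_pixel(xy, (320, 240)))    # (520, 140)
print(quantize(0.5), quantize(1.0))  # 128 255
```

The projection discards depth (points along the same ray map to the same pixel), and sampling and quantization discard sub-pixel position and fine intensity differences.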

An angle-sensitive pixel (ASP) is a CMOS sensor with a sensitivity to incoming light that is sinusoidal in incident angle.

In computer vision, rigid motion segmentation is the process of separating regions, features, or trajectories from a video sequence into coherent subsets of space and time. These subsets correspond to independent rigidly moving objects in the scene. The goal of this segmentation is to differentiate and extract the meaningful rigid motion from the background and analyze it. Image segmentation techniques label each pixel as belonging to a group of pixels that share certain characteristics at a particular time. Here, the pixels are segmented according to their relative movement over a period of time, i.e., the duration of the video sequence.

Light field microscopy (LFM) is a scanning-free three-dimensional (3D) microscopic imaging method based on light field theory. The technique allows sub-second (~10 Hz) imaging of large volumes with ~1 μm spatial resolution under conditions of weak scattering and semi-transparency, a combination that is reported not to have been achieved by other methods. Just as in traditional light field rendering, LFM imaging has two steps: light field capture and processing. In most setups, a microlens array is used to capture the light field. Processing can be based on either of two representations of light propagation: the ray optics picture and the wave optics picture. The Stanford University Computer Graphics Laboratory published its first prototype LFM in 2006 and has continued to develop the technique since then.

A pixel format is the format in which the image data output by a digital camera is represented. Compared with the raw pixel information captured by the image sensor, the output pixels may be formatted differently depending on the active pixel format. On some digital cameras, this format is a user-configurable feature; the pixel formats available on a particular camera depend on the type and model of the camera.
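As a concrete illustration of how one pixel format differs from another, the sketch below packs and unpacks RGB565, a widely used 16-bit layout with 5 bits of red, 6 of green, and 5 of blue. RGB565 is chosen here only as an example; the text above does not name a specific format, and the function names are hypothetical.

```python
def pack_rgb565(r, g, b):
    """Pack 8-bit (r, g, b) components into one 16-bit RGB565 value,
    keeping only the most significant bits of each channel."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def unpack_rgb565(word):
    """Split a 16-bit RGB565 value into 8-bit (r, g, b) components."""
    r5 = (word >> 11) & 0x1F
    g6 = (word >> 5) & 0x3F
    b5 = word & 0x1F
    # Expand to 8 bits by replicating the high bits into the low bits,
    # so that full scale maps to 255 rather than 248 or 252.
    r = (r5 << 3) | (r5 >> 2)
    g = (g6 << 2) | (g6 >> 4)
    b = (b5 << 3) | (b5 >> 2)
    return r, g, b


print(hex(pack_rgb565(255, 0, 0)))   # 0xf800
print(unpack_rgb565(0xF800))         # (255, 0, 0)
print(unpack_rgb565(0x07E0))         # (0, 255, 0)
```

The same scene data therefore takes 16 bits per pixel in this format instead of 24, at the cost of reduced color precision. Trade-offs like this are why the active pixel format matters to downstream software.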