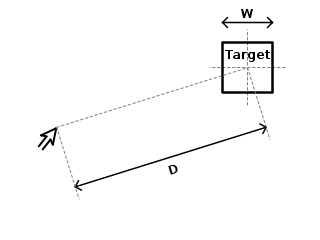

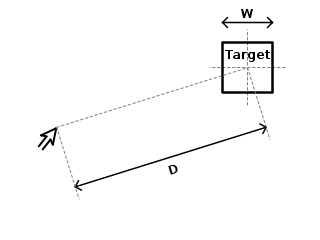

Fitts's law is a predictive model of human movement primarily used in human–computer interaction and ergonomics. The law predicts that the time required to rapidly move to a target area is a function of the ratio between the distance to the target and the width of the target. Fitts's law is used to model the act of pointing, either by physically touching an object with a hand or finger, or virtually, by pointing to an object on a computer monitor using a pointing device. It was initially developed by Paul Fitts.
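
The dependence on the distance-to-width ratio can be illustrated with a short calculation. The sketch below assumes the widely used Shannon formulation, MT = a + b · log2(D/W + 1), which is one of several published variants of the law. The function names and the constants `a` and `b` are hypothetical placeholders; in practice the constants are fitted empirically for a given device and task.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Index of difficulty in bits, using the Shannon formulation (an assumption)."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1)


def movement_time(distance: float, width: float,
                  a: float = 0.1, b: float = 0.15) -> float:
    """Predicted movement time in seconds.

    a (intercept, seconds) and b (slope, seconds per bit) are illustrative
    placeholder values, not measured constants.
    """
    return a + b * index_of_difficulty(distance, width)


if __name__ == "__main__":
    # Same distance, narrower target: the predicted time goes up.
    for width in (100, 50, 25):
        mt = movement_time(distance=300, width=width)
        print(f"D=300, W={width}: predicted MT = {mt:.3f} s")
```

Because only the ratio D/W enters the formula, scaling the distance and the target width by the same factor leaves the prediction unchanged.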

In human–computer interaction, WIMP stands for "windows, icons, menus, pointer", denoting a style of interaction using these elements of the user interface. Other expansions are sometimes used, such as substituting "mouse" and "mice" for menus, or "pull-down menu" and "pointing" for pointer.

A recommender system, or a recommendation system, is a subclass of information filtering system that provides suggestions for items that are most pertinent to a particular user. Recommender systems are particularly useful when an individual needs to choose an item from a potentially overwhelming number of items that a service may offer.

Gesture recognition is an area of research and development in computer science and language technology concerned with the recognition and interpretation of human gestures. A subdiscipline of computer vision, it employs mathematical algorithms to interpret gestures.

A tangible user interface (TUI) is a user interface in which a person interacts with digital information through the physical environment. The initial name was Graspable User Interface, which is no longer used. The purpose of TUI development is to empower collaboration, learning, and design by giving physical forms to digital information, thus taking advantage of the human ability to grasp and manipulate physical objects and materials.

Ben Shneiderman is an American computer scientist and a Distinguished University Professor in the University of Maryland Department of Computer Science, which is part of the University of Maryland College of Computer, Mathematical, and Natural Sciences at the University of Maryland, College Park. He was the founding director (1983–2000) of the University of Maryland Human-Computer Interaction Lab. He conducted fundamental research in the field of human–computer interaction, developing new ideas, methods, and tools such as the direct manipulation interface and his eight rules of design.

Backchannel is the use of networked computers to maintain a real-time online conversation alongside the primary group activity or live spoken remarks. The term is borrowed from linguistics, where it describes listeners' behaviours during verbal communication.

In computing, post-WIMP comprises work on user interfaces, mostly graphical user interfaces, which attempt to go beyond the paradigm of windows, icons, menus and a pointing device, i.e. WIMP interfaces.

A projection augmented model (PA model) is an element sometimes employed in virtual reality systems. It consists of a physical three-dimensional model onto which a computer image is projected to create a realistic-looking object. Importantly, the physical model has the same geometric shape as the object that the PA model depicts.

In computing, 3D interaction is a form of human-machine interaction where users are able to move and perform interaction in 3D space. Both human and machine process information where the physical position of elements in the 3D space is relevant.

In computing, a natural user interface (NUI) or natural interface is a user interface that is effectively invisible, and remains invisible as the user continuously learns increasingly complex interactions. The word "natural" is used because most computer interfaces use artificial control devices whose operation has to be learned. Examples include voice assistants such as Alexa and Siri, touch and multitouch interactions on today's mobile phones and tablets, and touch interfaces invisibly integrated into textiles and furniture.

Human–computer interaction (HCI) is research in the design and the use of computer technology, which focuses on the interfaces between people (users) and computers. HCI researchers observe the ways humans interact with computers and design technologies that allow humans to interact with computers in novel ways. A device that allows interaction between a human being and a computer is known as a "human–computer interface (HCI)".

Urban computing is an interdisciplinary field which pertains to the study and application of computing technology in urban areas. This involves the application of wireless networks, sensors, computational power, and data to improve the quality of densely populated areas. Urban computing is the technological framework for smart cities.

The DiamondTouch table is a multi-touch, interactive PC interface product from Circle Twelve Inc. It is a human interface device that allows multiple people to interact simultaneously while identifying which person is touching where. The technology was originally developed at Mitsubishi Electric Research Laboratories (MERL) in 2001 and later licensed to Circle Twelve Inc. in 2008. The DiamondTouch table is used to facilitate face-to-face collaboration, brainstorming, and decision-making. Its users include the construction management company Parsons Brinckerhoff, the Methodist Hospital, and the US National Geospatial-Intelligence Agency (NGA).

Sonic interaction design is the study and exploitation of sound as one of the principal channels conveying information, meaning, and aesthetic/emotional qualities in interactive contexts. Sonic interaction design is at the intersection of interaction design and sound and music computing. If interaction design is about designing objects people interact with, and such interactions are facilitated by computational means, then in sonic interaction design sound mediates the interaction, either as a display of processes or as an input medium.

Projector-camera systems (pro-cam), also called camera-projector systems, augment a local surface with a projected captured image of a remote surface, creating a shared workspace for remote collaboration and communication. Projector-camera systems may also be used for artistic and entertainment purposes. A pro-cam system consists of a vertical screen that implements an interpersonal space, where front-facing videos are displayed, and a horizontal projected screen on the tabletop that implements a shared workspace, where downward-facing videos are overlaid. An automatically pre-warped image is sent to the projector to ensure that the horizontal screen appears undistorted.

Animal–computer interaction (ACI) is a field of research concerned with the design and use of technology with, for, and by animals. It covers wildlife, zoo animals, and domesticated animals in a variety of roles. It emerged from, and was heavily influenced by, the discipline of human–computer interaction (HCI). As the field has expanded, it has become increasingly multi-disciplinary, incorporating techniques and research from disciplines such as artificial intelligence (AI), requirements engineering (RE), and veterinary science.

Andrew Cockburn is a Professor in the Department of Computer Science and Software Engineering at the University of Canterbury in Christchurch, New Zealand. He is in charge of the Human Computer Interactions Lab, where he conducts research focused on designing and testing user interfaces that integrate with inherent human factors.

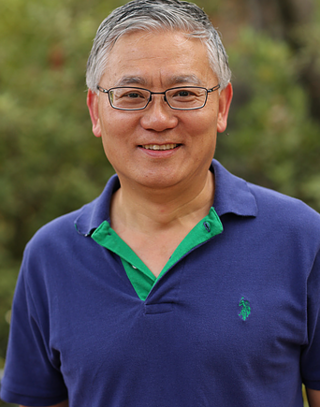

Shumin Zhai is a Chinese-born American Canadian human–computer interaction (HCI) research scientist and inventor. He is known for his research on input devices and interaction methods, swipe-gesture-based touchscreen keyboards, eye-tracking interfaces, and models of human performance in human–computer interaction. His studies have contributed to foundational models and understandings of HCI as well as to practical user interface designs and flagship products. He previously worked at IBM, where he invented the ShapeWriter text entry method for smartphones, which is a predecessor to the modern Swype keyboard. Dr. Zhai's publications have won the ACM UIST Lasting Impact Award and the IEEE Computer Society Best Paper Award, among others. Dr. Zhai is currently a Principal Scientist at Google, where he leads and directs research, design, and development of human-device input methods and haptics systems.

Jofish Kaye is an American and British scientist specializing in human-computer interaction and artificial intelligence. He runs interaction design and user research at anthem.ai, and is an editor of Personal & Ubiquitous Computing.