Distributed computing is a field of computer science that studies distributed systems, defined as computer systems whose inter-communicating components are located on different networked computers.

In mathematics, graph theory is the study of graphs, which are mathematical structures used to model pairwise relations between objects. A graph in this context is made up of vertices which are connected by edges. A distinction is made between undirected graphs, where edges link two vertices symmetrically, and directed graphs, where edges link two vertices asymmetrically. Graphs are one of the principal objects of study in discrete mathematics.
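
The difference between undirected and directed graphs can be illustrated with a minimal sketch. The function name `build_graph`, the adjacency-set representation, and the sample vertices are assumptions chosen for illustration, not part of any particular library.

```python
from collections import defaultdict


def build_graph(edges, directed=False):
    """Return an adjacency mapping {vertex: set of neighbouring vertices}."""
    adjacency = defaultdict(set)
    for u, v in edges:
        adjacency[u].add(v)
        adjacency[v]  # touch v so it is registered as a vertex even without outgoing edges
        if not directed:
            # An undirected edge links both vertices symmetrically.
            adjacency[v].add(u)
    return dict(adjacency)


# Hypothetical sample data: two edges over three vertices.
edges = [("a", "b"), ("b", "c")]
undirected = build_graph(edges)
directed = build_graph(edges, directed=True)
```

In the undirected graph, `b` is adjacent to both `a` and `c`; in the directed graph, `b` points only to `c`, and `c` has no outgoing edges.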

Knowledge representation and reasoning is the field of artificial intelligence (AI) dedicated to representing information about the world in a form that a computer system can use to solve complex tasks such as diagnosing a medical condition or having a dialog in a natural language. Knowledge representation incorporates findings from psychology about how humans solve problems and represent knowledge, in order to design formalisms that will make complex systems easier to design and build. Knowledge representation and reasoning also incorporates findings from logic to automate various kinds of reasoning.

A semantic network, or frame network, is a knowledge base that represents semantic relations between concepts in a network. It is often used as a form of knowledge representation. It is a directed or undirected graph consisting of vertices, which represent concepts, and edges, which represent semantic relations between concepts, mapping or connecting semantic fields. A semantic network may be instantiated as, for example, a graph database or a concept map. Typical standardized semantic networks are expressed as semantic triples.
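
As a rough sketch of how semantic triples can encode such a network, the example below stores (subject, predicate, object) tuples in a set and follows one relation transitively. The concepts, the predicate names `is_a` and `has`, and the helper functions are hypothetical and chosen only for illustration.

```python
# Each triple is (subject, predicate, object): two concepts joined by a relation.
triples = {
    ("cat", "is_a", "mammal"),
    ("mammal", "is_a", "animal"),
    ("cat", "has", "fur"),
}


def objects(subject, predicate, store):
    """Return every object linked to `subject` by `predicate`."""
    return {o for s, p, o in store if s == subject and p == predicate}


def ancestors(concept, store):
    """Collect all concepts reachable from `concept` by following is_a edges."""
    found = set()
    frontier = [concept]
    while frontier:
        current = frontier.pop()
        for parent in objects(current, "is_a", store):
            if parent not in found:
                found.add(parent)
                frontier.append(parent)
    return found
```

Here `ancestors("cat", triples)` walks the `is_a` edges from `cat` through `mammal` to `animal`, which is the kind of simple inference a semantic network supports.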

The Semantic Web, sometimes known as Web 3.0, is an extension of the World Wide Web through standards set by the World Wide Web Consortium (W3C). The goal of the Semantic Web is to make Internet data machine-readable.

Universal Networking Language (UNL) is a declarative formal language specifically designed to represent semantic data extracted from natural language texts. It can be used as a pivot language in interlingual machine translation systems or as a knowledge representation language in information retrieval applications.

Computer simulation is the process of mathematical modelling, performed on a computer, which is designed to predict the behaviour of, or the outcome of, a real-world or physical system. The reliability of some mathematical models can be determined by comparing their results to the real-world outcomes they aim to predict. Computer simulations have become a useful tool for the mathematical modeling of many natural systems in physics, astrophysics, climatology, chemistry, biology and manufacturing, as well as human systems in economics, psychology, social science, health care and engineering. Simulation of a system is represented as the running of the system's model. It can be used to explore and gain new insights into new technology and to estimate the performance of systems too complex for analytical solutions.

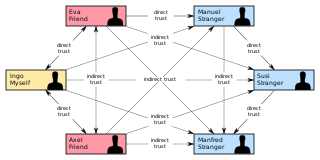

In psychology and sociology, a trust metric is a measurement or metric of the degree to which one social actor trusts another social actor. Trust metrics may be abstracted in a manner that can be implemented on computers, making them of interest for the study and engineering of virtual communities, such as Friendster and LiveJournal.

A multi-agent system is a computerized system composed of multiple interacting intelligent agents. Multi-agent systems can solve problems that are difficult or impossible for an individual agent or a monolithic system to solve. The agents' intelligence may include methodic, functional, or procedural approaches, algorithmic search, or reinforcement learning.

In sociology, social complexity is a conceptual framework used in the analysis of society. In the sciences, contemporary definitions of complexity are found in systems theory, wherein the phenomenon being studied has many parts and many possible arrangements of the parts; simultaneously, what is complex and what is simple are relative and change in time.

CFEngine is a configuration management system, written by Mark Burgess. Its primary function is to provide automated configuration and maintenance of large-scale computer systems, including the unified management of servers, desktops, consumer and industrial devices, embedded network devices, mobile smartphones, and tablet computers.

Mark Burgess is an independent researcher and writer, formerly professor at Oslo University College in Norway and creator of the CFEngine software and company, who is known for work in computer science in the field of policy-based configuration management.

Johannes Aldert "Jan" Bergstra is a Dutch computer scientist. His work has focussed on logic and the theoretical foundations of software engineering, especially on formal methods for system design. He is best known as an expert on algebraic methods for the specification of data and computational processes in general.

In information security, computational trust is the generation of trusted authorities or user trust through cryptography. In centralised systems, security is typically based on the authenticated identity of external parties. Rigid authentication mechanisms, such as public key infrastructures (PKIs) or Kerberos, have allowed this model to be extended to distributed systems within a few closely collaborating domains or within a single administrative domain. In recent years, computer science has moved from centralised systems to distributed computing. This evolution has several implications for the security models, policies and mechanisms needed to protect users’ information and resources in an increasingly interconnected computing infrastructure.

Agent-based social simulation consists of social simulations that are based on agent-based modeling and implemented using artificial agent technologies. Agent-based social simulation is a scientific discipline concerned with the simulation of social phenomena, using computer-based multiagent models. In these simulations, persons or groups of persons are represented by agents. Multi-agent based social simulation (MABSS) is a combination of social science, multiagent simulation and computer simulation.

In Australia, the Australian Artificial Intelligence Institute (Australian AI Institute, AAII, or A2I2) was a government-funded research and development laboratory for investigating and commercializing artificial intelligence (AI), specifically intelligent software agents.

Forest informatics is the combined science of forestry and informatics, with a special emphasis on the collection, management, and processing of data, information and knowledge, and on the incorporation of informatics concepts and theories to enrich forest management and forest science; it has a similar relationship to library science and information science.

A social network is a social structure made up of a set of social actors, sets of dyadic ties, and other social interactions between actors. The social network perspective provides a set of methods for analyzing the structure of whole social entities as well as a variety of theories explaining the patterns observed in these structures. The study of these structures uses social network analysis to identify local and global patterns, locate influential entities, and examine network dynamics.
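
One simple way to locate influential entities is degree centrality, which scores each actor by the share of other actors it is directly tied to. The sketch below is a minimal illustration under stated assumptions: the actor names and ties are invented, ties are treated as undirected, and the input is assumed to contain no duplicate ties or self-loops.

```python
from collections import Counter


def degree_centrality(ties):
    """Map each actor to (number of ties) / (number of other actors)."""
    degree = Counter()
    for a, b in ties:
        # Each dyadic tie counts once for both of its endpoints.
        degree[a] += 1
        degree[b] += 1
    n = len(degree)
    if n < 2:
        return {actor: 0.0 for actor in degree}
    return {actor: count / (n - 1) for actor, count in degree.items()}


# Hypothetical network of four actors.
ties = [("ann", "bob"), ("ann", "cy"), ("ann", "dee"), ("bob", "cy")]
scores = degree_centrality(ties)
most_central = max(scores, key=scores.get)
```

In this made-up network, `ann` is tied to every other actor and therefore receives the highest score. Other measures used in social network analysis, such as betweenness or closeness centrality, capture different notions of influence.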

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.

Semantic spacetime is a theoretical framework for agent-based modelling of spacetime, based on Promise Theory. It is relevant both as a model of computer science and as an alternative network-based formulation of physics in some areas.