Pruning is the practice of removing unwanted portions from a plant.

Pruning may also refer to:

In machine learning, a neural network is a model inspired by the structure and function of biological neural networks in animal brains.

Natural language processing (NLP) is a subfield of computer science and especially artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Typically, data is collected in text corpora and processed using rule-based, statistical or neural approaches from machine learning and deep learning.

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalize to unseen data, and thus perform tasks without explicit instructions. Advances in the field of deep learning have allowed neural networks to surpass many previous approaches in performance.

Computational neuroscience is a branch of neuroscience which employs mathematics, computer science, theoretical analysis and abstractions of the brain to understand the principles that govern the development, structure, physiology and cognitive abilities of the nervous system.

In artificial intelligence, symbolic artificial intelligence is the term for the collection of all methods in artificial intelligence research that are based on high-level symbolic (human-readable) representations of problems, logic and search. Symbolic AI used tools such as logic programming, production rules, semantic nets and frames, and it developed applications such as knowledge-based systems, symbolic mathematics, automated theorem provers, ontologies, the semantic web, and automated planning and scheduling systems. The Symbolic AI paradigm led to seminal ideas in search, symbolic programming languages, agents, multi-agent systems, the semantic web, and the strengths and limitations of formal knowledge and reasoning systems.

NeuroEvolution of Augmenting Topologies (NEAT) is a genetic algorithm (GA) for the generation of evolving artificial neural networks developed by Kenneth Stanley and Risto Miikkulainen in 2002 while at The University of Texas at Austin. It alters both the weighting parameters and structures of networks, attempting to find a balance between the fitness of evolved solutions and their diversity. It is based on applying three key techniques: tracking genes with history markers to allow crossover among topologies, applying speciation to preserve innovations, and developing topologies incrementally from simple initial structures ("complexifying").

Decision tree learning is a supervised learning approach used in statistics, data mining and machine learning. In this formalism, a classification or regression decision tree is used as a predictive model to draw conclusions about a set of observations.
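As a minimal sketch of how a classification tree chooses a single split, the example below scores every candidate threshold by weighted Gini impurity and keeps the best one. Gini impurity is an assumption made for illustration (the text above does not name a splitting criterion), and the names `gini` and `best_split` are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of class labels (0.0 means the list is pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(samples):
    """Find the single split with the lowest weighted Gini impurity.

    samples: list of (feature_vector, label) pairs.
    Returns (feature_index, threshold, weighted_impurity), or None if no
    feature has two distinct values to split between.
    """
    best = None
    n = len(samples)
    for f in range(len(samples[0][0])):
        # Candidate thresholds are midpoints between consecutive distinct values.
        values = sorted({x[f] for x, _ in samples})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for x, y in samples if x[f] <= t]
            right = [y for x, y in samples if x[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (f, t, score)
    return best


# Toy observations: feature 0 separates the two classes, feature 1 does not.
samples = [
    ([1.0, 7.0], 0),
    ([2.0, 3.0], 0),
    ([3.0, 8.0], 1),
    ([4.0, 2.0], 1),
]
feature, threshold, impurity = best_split(samples)
```

A full tree learner would apply such a split recursively to the left and right subsets until a stopping rule is met; this sketch covers only one level.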

Gene expression programming (GEP) in computer programming is an evolutionary algorithm that creates computer programs or models. These computer programs are complex tree structures that learn and adapt by changing their sizes, shapes, and composition, much like a living organism. And like living organisms, the computer programs of GEP are also encoded in simple linear chromosomes of fixed length. Thus, GEP is a genotype–phenotype system, benefiting from a simple genome to keep and transmit the genetic information and a complex phenotype to explore the environment and adapt to it.

Semantic neural network (SNN) is based on John von Neumann's neural network [von Neumann, 1966] and Nikolai Amosov's M-Network. The von Neumann network places limitations on the link topology, whereas an SNN does not impose these limitations. The von Neumann network can process only logical values, whereas an SNN can also process fuzzy values. All neurons in the von Neumann network are synchronized by clock cycles (tacts). To allow the use of self-synchronizing circuit techniques, neurons in an SNN can be either self-running or synchronized.

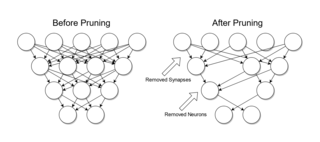

Pruning is a data compression technique in machine learning and search algorithms that reduces the size of decision trees by removing sections of the tree that are non-critical or redundant for classifying instances. Pruning reduces the complexity of the final classifier and can thereby improve predictive accuracy by reducing overfitting.
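The idea of removing tree sections that do not help classification can be sketched with reduced-error pruning, one specific pruning strategy chosen here for illustration (the text above does not single out a method). In this sketch, a subtree is replaced by a leaf whenever the leaf makes no more mistakes than the subtree on held-out validation data. The `Node` class, the helper names and the toy data are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Leaf when `feature` is None; otherwise splits on x[feature] <= threshold."""
    label: int  # majority training class at this node (assumed precomputed)
    feature: Optional[int] = None
    threshold: float = 0.0
    left: Optional["Node"] = None
    right: Optional["Node"] = None

    def is_leaf(self):
        return self.feature is None


def predict(node, x):
    while not node.is_leaf():
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.label


def errors(node, data):
    """Number of misclassified (x, y) pairs in `data`."""
    return sum(predict(node, x) != y for x, y in data)


def count_nodes(node):
    if node.is_leaf():
        return 1
    return 1 + count_nodes(node.left) + count_nodes(node.right)


def prune(node, data):
    """Bottom-up reduced-error pruning; `data` holds the validation pairs reaching `node`."""
    if node.is_leaf():
        return node
    left_data = [(x, y) for x, y in data if x[node.feature] <= node.threshold]
    right_data = [(x, y) for x, y in data if x[node.feature] > node.threshold]
    node.left = prune(node.left, left_data)
    node.right = prune(node.right, right_data)
    leaf = Node(label=node.label)
    # Ties favour the leaf, so the smaller tree wins when accuracy is equal.
    if errors(leaf, data) <= errors(node, data):
        return leaf
    return node


def build_example_tree():
    # The right subtree contains a split that only fits noise in the training data.
    noisy = Node(label=1, feature=1, threshold=2.0,
                 left=Node(label=1), right=Node(label=0))
    return Node(label=0, feature=0, threshold=5.0,
                left=Node(label=0), right=noisy)


VALIDATION = [
    ([1, 0], 0), ([2, 5], 0), ([3, 1], 0),
    ([7, 1], 1), ([8, 3], 1), ([9, 4], 1), ([6, 0], 1),
]
```

Calling `prune(build_example_tree(), VALIDATION)` collapses the noisy right subtree into a single leaf while keeping the root split, because only the root split still reduces validation errors.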

Synaptic pruning, a phase in the development of the nervous system, is the process of synapse elimination that occurs between early childhood and the onset of puberty in many mammals, including humans. Pruning starts near the time of birth and continues into the late 20s. During the pruning of a synapse, both the axon and the dendrite decay and die off. Synaptic pruning was traditionally considered to be complete by the time of sexual maturation, but MRI studies have discounted this idea.

Grafting is the process of adding nodes to inferred decision trees to improve their predictive accuracy. A decision tree is a graphical model that is used as a support tool for decision-making processes.

The fields of marketing and artificial intelligence converge in systems which assist in areas such as market forecasting, and automation of processes and decision making, along with increased efficiency of tasks which would usually be performed by humans. The science behind these systems can be explained through neural networks and expert systems, computer programs that process input and provide valuable output for marketers.

In computer science, Monte Carlo tree search (MCTS) is a heuristic search algorithm for some kinds of decision processes, most notably those employed in software that plays board games. In that context MCTS is used to solve the game tree.

mlpack is a free, open-source and header-only software library for machine learning and artificial intelligence written in C++, built on top of the Armadillo library and the ensmallen numerical optimization library. mlpack has an emphasis on scalability, speed, and ease of use. Its aim is to make machine learning possible for novice users by means of a simple, consistent API, while simultaneously exploiting C++ language features to provide maximum performance and maximum flexibility for expert users. mlpack also has a light deployment infrastructure with minimal dependencies, making it well suited to embedded systems and low-resource devices. Its intended target users are scientists and engineers.

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence (AI), its subdisciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.

In artificial intelligence, a differentiable neural computer (DNC) is a memory-augmented neural network (MANN) architecture, which is typically recurrent in its implementation. The model was published in 2016 by Alex Graves et al. of DeepMind.

In computer science, incremental learning is a method of machine learning in which input data is continuously used to extend the existing model's knowledge, i.e. to further train the model. It represents a dynamic technique of supervised learning and unsupervised learning that can be applied when training data becomes available gradually over time or when its size exceeds system memory limits. Algorithms that can facilitate incremental learning are known as incremental machine learning algorithms.
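To illustrate updating a model one sample at a time without storing past data, here is a minimal sketch of a nearest-centroid classifier whose class centroids are maintained as running means. The classifier choice, the class name `IncrementalCentroidClassifier` and the method name `partial_fit` are assumptions made for this example, not part of any particular library.

```python
from collections import defaultdict


class IncrementalCentroidClassifier:
    """Nearest-centroid classifier trained one labelled sample at a time."""

    def __init__(self):
        self.counts = defaultdict(int)  # samples seen per class
        self.centroids = {}             # running mean vector per class

    def partial_fit(self, x, y):
        """Fold a single sample (x, y) into the model; x is not stored."""
        self.counts[y] += 1
        n = self.counts[y]
        if y not in self.centroids:
            self.centroids[y] = [float(v) for v in x]
            return
        centroid = self.centroids[y]
        for i, value in enumerate(x):
            # Running-mean update: new_mean = old_mean + (value - old_mean) / n
            centroid[i] += (value - centroid[i]) / n

    def predict(self, x):
        """Return the label whose centroid is closest to x (squared Euclidean distance)."""
        def sq_dist(c):
            return sum((a - b) ** 2 for a, b in zip(x, c))
        return min(self.centroids, key=lambda label: sq_dist(self.centroids[label]))


# Samples arrive as a stream; the model is usable after every update.
model = IncrementalCentroidClassifier()
stream = [([0, 0], "a"), ([2, 0], "a"), ([10, 10], "b"), ([12, 10], "b")]
for features, label in stream:
    model.partial_fit(features, label)
```

Because each update touches only a count and a mean vector per class, memory use stays constant regardless of how many samples have been seen, which is the property that matters when the data does not fit in memory.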

The following outline is provided as an overview of, and topical guide to, machine learning:

Automated machine learning (AutoML) is the process of automating the tasks of applying machine learning to real-world problems. It is the combination of automation and ML.