Bash is a Unix shell and command language written by Brian Fox for the GNU Project as a free software replacement for the Bourne shell. The shell's name is an acronym for Bourne-Again SHell, a pun on the name of the Bourne shell that it replaces and the notion of being "born again". First released in 1989, it has been used as the default login shell for most Linux distributions and it was one of the first programs Linus Torvalds ported to Linux, alongside GCC. It is available on nearly all modern operating systems.

The Bourne shell (sh) is a shell command-line interpreter for computer operating systems.

The C shell is a Unix shell created by Bill Joy while he was a graduate student at University of California, Berkeley in the late 1970s. It has been widely distributed, beginning with the 2BSD release of the Berkeley Software Distribution (BSD) which Joy first distributed in 1978. Other early contributors to the ideas or the code were Michael Ubell, Eric Allman, Mike O'Brien and Jim Kulp.

rc is the command line interpreter for Version 10 Unix and Plan 9 from Bell Labs operating systems. It resembles the Bourne shell, but its syntax is somewhat simpler. It was created by Tom Duff, who is better known for an unusual C programming language construct.

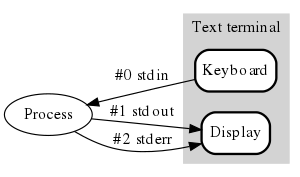

In computer programming, standard streams are preconnected input and output communication channels between a computer program and its environment when it begins execution. The three input/output (I/O) connections are called standard input (stdin), standard output (stdout) and standard error (stderr). Originally I/O happened via a physically connected system console, but standard streams abstract this. When a command is executed via an interactive shell, the streams are typically connected to the text terminal on which the shell is running, but can be changed with redirection or a pipeline. More generally, a child process inherits the standard streams of its parent process.

dd is a command-line utility for Unix, Plan 9, Inferno, and Unix-like operating systems and beyond, the primary purpose of which is to convert and copy files. On Unix, device drivers for hardware and special device files appear in the file system just like normal files; dd can also read and/or write from/to these files, provided that function is implemented in their respective driver. As a result, dd can be used for tasks such as backing up the boot sector of a hard drive, and obtaining a fixed amount of random data. The dd program can also perform conversions on the data as it is copied, including byte order swapping and conversion to and from the ASCII and EBCDIC text encodings.

In Unix and Unix-like computer operating systems, a file descriptor is a process-unique identifier (handle) for a file or other input/output resource, such as a pipe or network socket.

In Unix and Unix-like operating systems, iconv is a command-line program and a standardized application programming interface (API) used to convert between different character encodings. "It can convert from any of these encodings to any other, through Unicode conversion."

The Thompson shell was the first Unix shell, introduced in the first version of Unix in 1971, and was written by Ken Thompson. It was a simple command interpreter, not designed for scripting, but nonetheless introduced several innovative features to the command-line interface and led to the development of the later Unix shells.

Expect is an extension to the Tcl scripting language written by Don Libes. The program automates interactions with programs that expose a text terminal interface. Expect, originally written in 1990 for the Unix platform, has since become available for Microsoft Windows and other systems.

In Unix-like computer operating systems, a pipeline is a mechanism for inter-process communication using message passing. A pipeline is a set of processes chained together by their standard streams, so that the output text of each process (stdout) is passed directly as input (stdin) to the next one. The second process is started as the first process is still executing, and they are executed concurrently. The concept of pipelines was championed by Douglas McIlroy at Unix's ancestral home of Bell Labs, during the development of Unix, shaping its toolbox philosophy. It is named by analogy to a physical pipeline. A key feature of these pipelines is their "hiding of internals". This in turn allows for more clarity and simplicity in the system.

In Unix-like operating systems, find is a command-line utility that locates files based on some user-specified criteria and either prints the pathname of each matched object or, if another action is requested, performs that action on each matched object.

In computing, tee is a command in command-line interpreters (shells) using standard streams which reads standard input and writes it to both standard output and one or more files, effectively duplicating its input. It is primarily used in conjunction with pipes and filters. The command is named after the T-splitter used in plumbing.

test is a command-line utility found in Unix, Plan 9, and Unix-like operating systems that evaluates conditional expressions. test was turned into a shell builtin command in 1981 with UNIX System III and at the same time made available under the alternate name [.

In computing, sort is a standard command line program of Unix and Unix-like operating systems, that prints the lines of its input or concatenation of all files listed in its argument list in sorted order. Sorting is done based on one or more sort keys extracted from each line of input. By default, the entire input is taken as sort key. Blank space is the default field separator. The command supports a number of command-line options that can vary by implementation. For instance the "-r" flag will reverse the sort order.

In computing, exec is a functionality of an operating system that runs an executable file in the context of an already existing process, replacing the previous executable. This act is also referred to as an overlay. It is especially important in Unix-like systems, although it also exists elsewhere. As no new process is created, the process identifier (PID) does not change, but the machine code, data, heap, and stack of the process are replaced by those of the new program.

Toybox is a free and open-source software implementation of over 200 Unix command line utilities such as ls, cp, and mv. The Toybox project was started in 2006, and became a 0BSD licensed BusyBox alternative. Toybox is used for most of Android's command-line tools in all currently supported Android versions, and is also used to build Android on Linux and macOS. All of the tools are tested on Linux, and many of them also work on BSD and macOS.

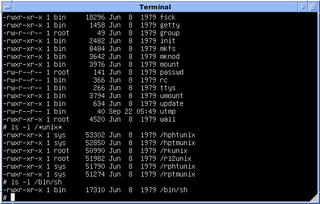

The script command is a Unix utility that records a terminal session. It dates back to the 1979 3.0 Berkeley Software Distribution (BSD).

In computing, process substitution is a form of inter-process communication that allows the input or output of a command to appear as a file. The command is substituted in-line, where a file name would normally occur, by the command shell. This allows programs that normally only accept files to directly read from or write to another program.

cat is a standard Unix utility that reads files sequentially, writing them to standard output. The name is derived from its function to (con)catenate files . It has been ported to a number of operating systems.