Related Research Articles

ext2, or second extended file system, is a file system for the Linux kernel. It was initially designed by French software developer Rémy Card as a replacement for the extended file system (ext). Having been designed according to the same principles as the Berkeley Fast File System from BSD, it was the first commercial-grade filesystem for Linux.

DNIX is a discontinued Unix-like real-time operating system from the Swedish company Dataindustrier AB (DIAB). A version named ABCenix was developed for the ABC 1600 computer from Luxor. Daisy Systems also had a system named Daisy DNIX on some of their computer-aided design (CAD) workstations. It was unrelated to DIAB's product.

Network File System (NFS) is a distributed file system protocol originally developed by Sun Microsystems (Sun) in 1984, allowing a user on a client computer to access files over a computer network much like local storage is accessed. NFS, like many other protocols, builds on the Open Network Computing Remote Procedure Call system. NFS is an open IETF standard defined in a Request for Comments (RFC), allowing anyone to implement the protocol.

The Maildir e-mail format is a common way of storing email messages on a file system, rather than in a database. Each message is assigned a file with a unique name, and each mail folder is a file system directory containing these files. Maildir was designed by Daniel J. Bernstein circa 1995, with a major goal of eliminating the need for program code to handle file locking and unlocking through use of the local filesystem. Maildir design reflects the fact that the only operations valid for an email message is that it be created, deleted or have its status changed in some way.

In computing, a symbolic link is a file whose purpose is to point to a file or directory by specifying a path thereto.

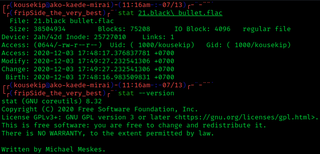

The inode is a data structure in a Unix-style file system that describes a file-system object such as a file or a directory. Each inode stores the attributes and disk block locations of the object's data. File-system object attributes may include metadata, as well as owner and permission data.

In Unix and Unix-like computer operating systems, a file descriptor is a process-unique identifier (handle) for a file or other input/output resource, such as a pipe or network socket.

stat is a Unix system call that returns file attributes about an inode. The semantics of stat vary between operating systems. As an example, Unix command ls uses this system call to retrieve information on files that includes:

In computing, a file system or filesystem governs file organization and access. A local file system is a capability of an operating system that services the applications running on the same computer. A distributed file system is a protocol that provides file access between networked computers.

The Write Anywhere File Layout (WAFL) is a proprietary file system that supports large, high-performance RAID arrays, quick restarts without lengthy consistency checks in the event of a crash or power failure, and growing the filesystems size quickly. It was designed by NetApp for use in its storage appliances like NetApp FAS, AFF, Cloud Volumes ONTAP and ONTAP Select.

File locking is a mechanism that restricts access to a computer file, or to a region of a file, by allowing only one user or process to modify or delete it at a specific time, and preventing reading of the file while it's being modified or deleted.

Unix-like operating systems identify a user by a value called a user identifier, often abbreviated to user ID or UID. The UID, along with the group identifier (GID) and other access control criteria, is used to determine which system resources a user can access. The password file maps textual user names to UIDs. UIDs are stored in the inodes of the Unix file system, running processes, tar archives, and the now-obsolete Network Information Service. In POSIX-compliant environments, the shell command id gives the current user's UID, as well as more information such as the user name, primary user group and group identifier (GID).

Lustre is a type of parallel distributed file system, generally used for large-scale cluster computing. The name Lustre is a portmanteau word derived from Linux and cluster. Lustre file system software is available under the GNU General Public License and provides high performance file systems for computer clusters ranging in size from small workgroup clusters to large-scale, multi-site systems. Since June 2005, Lustre has consistently been used by at least half of the top ten, and more than 60 of the top 100 fastest supercomputers in the world, including the world's No. 1 ranked TOP500 supercomputer in November 2022, Frontier, as well as previous top supercomputers such as Fugaku, Titan and Sequoia.

For most file systems, a program initializes access to a file in a file system using the open system call. This allocates resources associated to the file, and returns a handle that the process will use to refer to that file. In some cases the open is performed by the first access.

The following tables compare general and technical information for a number of file systems.

In computing, Self-certifying File System (SFS) is a global and decentralized, distributed file system for Unix-like operating systems, while also providing transparent encryption of communications as well as authentication. It aims to be the universal distributed file system by providing uniform access to any available server, however, the usefulness of SFS is limited by the low deployment of SFS clients. It was developed in the June 2000 doctoral thesis of David Mazières.

A clustered file system (CFS) is a file system which is shared by being simultaneously mounted on multiple servers. There are several approaches to clustering, most of which do not employ a clustered file system. Clustered file systems can provide features like location-independent addressing and redundancy which improve reliability or reduce the complexity of the other parts of the cluster. Parallel file systems are a type of clustered file system that spread data across multiple storage nodes, usually for redundancy or performance.

An automounter is any program or software facility which automatically mounts filesystems in response to access operations by user programs. An automounter system utility, when notified of file and directory access attempts under selectively monitored subdirectory trees, dynamically and transparently makes local or remote devices accessible.

The Newcastle Connection was a software subsystem from the early 1980s that could be added to each of a set of interconnected UNIX-like systems to build a distributed system. The latter would be functionally indistinguishable, at both user- and system-level, from a conventional UNIX system. It became a forerunner of Sun Microsystems' Network File System (NFS). The name derives from the research group at Newcastle University, under Brian Randell, which developed it.

References

- ↑ Rifkin, Andrew P.; Forbes, Michael P.; Hamilton, Richard L.; Sabrio, Michael; Shah, Suryakanta; Yueh, Kang (1987). "RFS architectural overview". Australian UNIX systems User Group Newsletter. Vol. 7.

- ↑ A. P. Rifkin, M. P. Forbes, R. L. Hamilton, Michail Sabrio, S. Shah, and K. Yueh, RFS Architectural Overview, USENIX Conference Proceedings (June 1986), Atlanta, GA

- ↑ Dennis M. Ritchie, A Stream Input-Output System, Bell Laboratories Technical Journal 63(8) (October 1984)