Related Research Articles

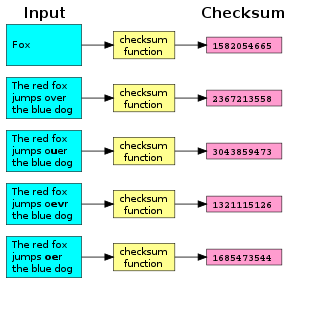

A checksum is a small-sized block of data derived from another block of digital data for the purpose of detecting errors that may have been introduced during its transmission or storage. By themselves, checksums are often used to verify data integrity but are not relied upon to verify data authenticity.
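As a minimal sketch of the idea, the example below uses a deliberately simple additive checksum (the sum of all bytes modulo 256). The function name `additive_checksum` and the sample messages are assumptions made for illustration; practical systems normally use stronger schemes such as CRCs.

```python
def additive_checksum(data: bytes) -> int:
    """Return the sum of all bytes modulo 256 (an illustrative, weak checksum)."""
    return sum(data) % 256


message = b"hello world"
checksum = additive_checksum(message)

# Simulate one corrupted byte in transit ("o" became "p").
corrupted = b"hellp world"

print(additive_checksum(message) == checksum)    # the intact copy verifies
print(additive_checksum(corrupted) == checksum)  # the corrupted copy does not

# Weakness of this particular scheme: reordered bytes give the same sum.
print(additive_checksum(b"ab") == additive_checksum(b"ba"))
```

The last line shows why such a checksum detects accidental errors only to a limited degree and says nothing about authenticity: anyone who alters the data can simply recompute the value.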

In information theory and coding theory with applications in computer science and telecommunication, error detection and correction or error control are techniques that enable reliable delivery of digital data over unreliable communication channels. Many communication channels are subject to channel noise, and thus errors may be introduced during transmission from the source to a receiver. Error detection techniques allow detecting such errors, while error correction enables reconstruction of the original data in many cases.

In communication networks, network throughput is the rate of successful message delivery over a communication channel, such as Ethernet or packet radio. The data these messages belong to may be delivered over a physical or logical link, or it can pass through a certain network node. Throughput is usually measured in bits per second, and sometimes in data packets per second or data packets per time slot.

In telecommunications and signal processing, baseband is the range of frequencies occupied by a signal that has not been modulated to higher frequencies. Baseband signals typically originate from transducers, converting some other variable into an electrical signal. For example, the output of a microphone is a baseband signal that is an analog of the received audio. In conventional analog radio broadcasting the baseband audio signal is used, after processing, to modulate a separate RF carrier signal at a much higher frequency.

In digital transmission, the number of bit errors is the number of received bits of a data stream over a communication channel that have been altered due to noise, interference, distortion or bit synchronization errors.
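Counting bit errors can be sketched by XOR-ing the sent and received sequences and counting the set bits. The helper name `count_bit_errors` and the sample bytes are hypothetical; dividing the count by the total number of bits gives the bit error ratio, which goes slightly beyond what the paragraph above states.

```python
def count_bit_errors(sent: bytes, received: bytes) -> int:
    """Count the bit positions in which two equal-length byte strings differ."""
    if len(sent) != len(received):
        raise ValueError("sequences must have equal length")
    # XOR leaves a 1 in every position where the two bits differ.
    return sum(bin(a ^ b).count("1") for a, b in zip(sent, received))


sent = bytes([0b10110010, 0b01101100])
received = bytes([0b10010010, 0b01101101])  # one flipped bit in each byte

errors = count_bit_errors(sent, received)
bit_error_ratio = errors / (8 * len(sent))
print(errors, bit_error_ratio)
```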

In electronics and telecommunications, jitter is the deviation from true periodicity of a presumably periodic signal, often in relation to a reference clock signal. In clock recovery applications it is called timing jitter. Jitter is a significant, and usually undesired, factor in the design of almost all communications links.

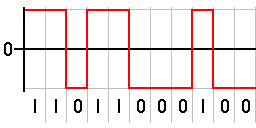

In telecommunication, a line code is a pattern of voltage, current, or photons used to represent digital data transmitted down a communication channel or written to a storage medium. This repertoire of signals is usually called a constrained code in data storage systems.
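Manchester coding is one well-known line code and serves here as an illustration. The sketch assumes the IEEE 802.3 convention (a 1 is a low-to-high transition, a 0 is high-to-low) and represents signal levels as the integers -1 and +1; both choices, and the function names, are assumptions of this example.

```python
def manchester_encode(bits):
    """Map each bit to two half-bit signal levels (IEEE 802.3 convention)."""
    levels = []
    for bit in bits:
        # 1 -> low then high, 0 -> high then low
        levels.extend((-1, +1) if bit else (+1, -1))
    return levels


def manchester_decode(levels):
    """Recover bits from pairs of half-bit levels."""
    if len(levels) % 2 != 0:
        raise ValueError("expected an even number of half-bit levels")
    bits = []
    for first, second in zip(levels[0::2], levels[1::2]):
        if first == second:
            raise ValueError("missing mid-bit transition")
        bits.append(1 if second > first else 0)
    return bits


data = [1, 0, 1, 1, 0]
signal = manchester_encode(data)
print(signal)
print(manchester_decode(signal) == data)
```

Because every bit contains a transition, the receiver can recover the clock from the signal itself, at the cost of doubling the number of signal elements per bit.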

Data transmission and data reception or, more broadly, data communication or digital communications is the transfer and reception of data in the form of a digital bitstream or a digitized analog signal over a point-to-point or point-to-multipoint communication channel. Examples of such channels are copper wires, optical fibers, wireless communication using radio spectrum, storage media and computer buses. The data are represented as an electromagnetic signal, such as an electrical voltage, radiowave, microwave, or infrared signal.

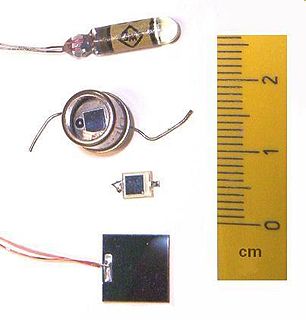

A photodiode is a semiconductor p–n junction device that converts light into an electrical current. The current is generated when photons are absorbed in the photodiode. Photodiodes may contain optical filters and built-in lenses, and may have large or small surface areas. Response time usually becomes slower as the surface area increases. The common, traditional solar cell used to generate electric solar power is a large-area photodiode.

In the seven-layer OSI model of computer networking, the physical layer or layer 1 is the first and lowest layer: the layer most closely associated with the physical connection between devices. This layer may be implemented by a PHY chip.

The data link layer, or layer 2, is the second layer of the seven-layer OSI model of computer networking. This layer is the protocol layer that transfers data between nodes on a network segment across the physical layer. The data link layer provides the functional and procedural means to transfer data between network entities and may also provide the means to detect and possibly correct errors that can occur in the physical layer.

In telecommunications and computing, bit rate is the number of bits that are conveyed or processed per unit of time.

A mixed-signal integrated circuit is any integrated circuit that has both analog circuits and digital circuits on a single semiconductor die.

Peak signal-to-noise ratio (PSNR) is an engineering term for the ratio between the maximum possible power of a signal and the power of corrupting noise that affects the fidelity of its representation. Because many signals have a very wide dynamic range, PSNR is usually expressed as a logarithmic quantity using the decibel scale.
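A common way to compute PSNR for sampled data is 10·log10(MAX² / MSE), where MAX is the largest possible sample value and MSE is the mean squared error between the reference and the degraded signal. The sketch below assumes 8-bit samples (MAX = 255) held in plain Python lists; the function name and sample values are illustrative.

```python
import math


def psnr(reference, degraded, max_value=255):
    """Return the peak signal-to-noise ratio in decibels."""
    if len(reference) != len(degraded) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error plays the role of the noise power.
    mse = sum((r - d) ** 2 for r, d in zip(reference, degraded)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)


reference = [100, 150, 200, 250]
degraded = [101, 149, 202, 248]
print(round(psnr(reference, degraded), 2))  # roughly 44 dB for these samples
```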

Voice activity detection (VAD), also known as speech activity detection or speech detection, is the detection of the presence or absence of human speech, used in speech processing. The main uses of VAD are in speech coding and speech recognition. It can facilitate speech processing, and can also be used to deactivate some processes during non-speech sections of an audio session: it can avoid unnecessary coding and transmission of silence packets in Voice over Internet Protocol (VoIP) applications, saving on computation and on network bandwidth.

Hybrid automatic repeat request (hybrid ARQ or HARQ) is a combination of high-rate forward error correction (FEC) and automatic repeat request (ARQ) error control. In standard ARQ, redundant bits are added to the data to be transmitted using an error-detecting (ED) code such as a cyclic redundancy check (CRC). Receivers detecting a corrupted message will request a new message from the sender. In hybrid ARQ, the original data is encoded with an FEC code, and the parity bits are either sent immediately along with the message or transmitted only upon request when a receiver detects an erroneous message. The ED code may be omitted when a code is used that can perform both forward error correction and error detection, such as a Reed–Solomon code. The FEC code is chosen to correct an expected subset of all errors that may occur, while the ARQ method is used as a fall-back to correct errors that are uncorrectable using only the redundancy sent in the initial transmission. As a result, hybrid ARQ performs better than ordinary ARQ in poor signal conditions, but in its simplest form this comes at the expense of significantly lower throughput in good signal conditions. There is typically a signal-quality cross-over point below which simple hybrid ARQ is better, and above which basic ARQ is better.
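The standard ARQ half of this scheme can be sketched as follows: each frame carries a CRC-32 of its payload, and the sender retransmits until the receiver's check passes. This is only the detect-and-retransmit part, with no FEC stage. The frame layout, the function names, and the toy channel that corrupts the first few frames are all assumptions of this example; `zlib.crc32` is from the Python standard library.

```python
import zlib


def make_frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 of the payload (hypothetical frame layout)."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def check_frame(frame: bytes):
    """Return the payload if the CRC matches, otherwise None."""
    payload, received_crc = frame[:-4], int.from_bytes(frame[-4:], "big")
    return payload if zlib.crc32(payload) == received_crc else None


def flaky_channel(corrupt_first_n: int):
    """Build a toy channel that flips one bit in the first n frames it carries."""
    remaining = corrupt_first_n

    def channel(frame: bytes) -> bytes:
        nonlocal remaining
        if remaining > 0:
            remaining -= 1
            return bytes([frame[0] ^ 0x01]) + frame[1:]
        return frame

    return channel


def send_with_arq(payload: bytes, channel, max_attempts: int = 5):
    """Retransmit until the receiver's CRC check passes; return (payload, attempts)."""
    for attempt in range(1, max_attempts + 1):
        received = check_frame(channel(make_frame(payload)))
        if received is not None:
            return received, attempt
    raise RuntimeError("gave up after too many corrupted frames")


print(send_with_arq(b"data", flaky_channel(2)))
```

A hybrid scheme would additionally encode each frame with an FEC code, so that many corrupted frames could be repaired at the receiver without a retransmission.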

In computing, telecommunication, information theory, and coding theory, an error correction code (ECC), sometimes called an error-correcting code, is used for controlling errors in data over unreliable or noisy communication channels. The central idea is that the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code.
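The Hamming (7,4) code mentioned above can be sketched in a few lines. The example assumes the common layout in which parity bits occupy positions 1, 2 and 4 of the codeword; the function names are illustrative.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits as a 7-bit codeword laid out as p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # The syndrome, read as a binary number, is the 1-based error position.
    error_position = s1 + 2 * s2 + 4 * s3
    if error_position:
        c[error_position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
codeword = hamming74_encode(data)

damaged = list(codeword)
damaged[4] ^= 1  # flip one bit in transit

print(codeword)
print(hamming74_decode(damaged) == data)
```

Three parity bits give eight possible syndromes: one for "no error" and one for each of the seven bit positions, which is why a single error can be located and corrected without retransmission.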

A multi-gigabit transceiver (MGT) is a SerDes capable of operating at serial bit rates above 1 gigabit per second. MGTs are used increasingly for data communications because they can run over longer distances and use fewer wires, and thus cost less than parallel interfaces with equivalent data throughput.

Edholm's law, proposed by and named after Phil Edholm, refers to the observation that the three categories of telecommunication, namely wireless (mobile), nomadic and wired networks (fixed), are in lockstep and gradually converging. Edholm's law also holds that data rates for these telecommunications categories increase on similar exponential curves, with the slower rates trailing the faster ones by a predictable time lag. Edholm's law predicts that the bandwidth and data rates double every 18 months, which has proven to be true since the 1970s. The trend is evident in the cases of Internet, cellular (mobile), wireless LAN and wireless personal area networks.
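The doubling claim can be turned into a small arithmetic sketch: if a rate doubles every 18 months, then after t years it has grown by a factor of 2^(t / 1.5). The function name and the starting rate are assumptions for illustration, and the result is a projection from the stated rule, not measured data.

```python
def projected_rate(initial_rate, years, doubling_period_years=1.5):
    """Project a data rate that doubles once per doubling period."""
    return initial_rate * 2 ** (years / doubling_period_years)


# Fifteen years contain ten 18-month periods, so the rate grows 2**10-fold.
print(projected_rate(1.0, 15))
```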

RF CMOS is a metal–oxide–semiconductor (MOS) integrated circuit (IC) technology that integrates radio-frequency (RF), analog and digital electronics on a mixed-signal CMOS RF circuit chip. It is widely used in modern wireless telecommunications, such as cellular networks, Bluetooth, Wi-Fi, GPS receivers, broadcasting, vehicular communication systems, and the radio transceivers in all modern mobile phones and wireless networking devices. RF CMOS technology was pioneered by Pakistani engineer Asad Ali Abidi at UCLA during the late 1980s to early 1990s, and helped bring about the wireless revolution with the introduction of digital signal processing in wireless communications. The development and design of RF CMOS devices was enabled by van der Ziel's FET RF noise model, which was published in the early 1960s and remained largely forgotten until the 1990s.
