In computing, a hash table is a data structure that implements an associative array abstract data type, a structure that can map keys to values. A hash table uses a hash function to compute an index, also called a hash code, into an array of buckets or slots, from which the desired value can be found. During lookup, the key is hashed and the resulting hash indicates where the corresponding value is stored.
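
The paragraph does not say how two keys that hash to the same bucket are handled. The following minimal sketch assumes separate chaining, where each bucket is a list of (key, value) pairs, and uses Python's built-in `hash()` as the hash function. `ChainedHashTable`, `put` and `get` are illustrative names, not an established API, and the sketch never resizes its bucket array.

```python
from typing import Generic, Hashable, List, Optional, Tuple, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class ChainedHashTable(Generic[K, V]):
    """Illustrative hash table: an array of buckets, each a list of (key, value) pairs."""

    def __init__(self, num_buckets: int = 8) -> None:
        self._buckets: List[List[Tuple[K, V]]] = [[] for _ in range(num_buckets)]

    def _index(self, key: K) -> int:
        # Hash the key, then reduce the hash code to a valid bucket index.
        return hash(key) % len(self._buckets)

    def put(self, key: K, value: V) -> None:
        bucket = self._buckets[self._index(key)]
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)  # key already present: overwrite its value
                return
        bucket.append((key, value))  # new key, possibly sharing the bucket with others

    def get(self, key: K) -> Optional[V]:
        # Lookup: hash the key to find its bucket, then scan only that bucket.
        for existing_key, value in self._buckets[self._index(key)]:
            if existing_key == key:
                return value
        return None  # absent (cannot be distinguished from a stored None)

    def __len__(self) -> int:
        return sum(len(bucket) for bucket in self._buckets)


table: ChainedHashTable[str, int] = ChainedHashTable()
table.put("apple", 3)
table.put("pear", 5)
```

Because a lookup scans only the one bucket the key hashes to, its cost depends on how many keys share that bucket. A production table would also grow its bucket array as it fills.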

Ultraviolet–visible spectroscopy or ultraviolet–visible spectrophotometry refers to absorption spectroscopy or reflectance spectroscopy in part of the ultraviolet and the full, adjacent visible spectral regions. This means it uses light in the visible and adjacent ranges. The absorption or reflectance in the visible range directly affects the perceived color of the chemicals involved. In this region of the electromagnetic spectrum, atoms and molecules undergo electronic transitions. Absorption spectroscopy is complementary to fluorescence spectroscopy, in that fluorescence deals with transitions from the excited state to the ground state, while absorption measures transitions from the ground state to the excited state.

In mathematics, the sieve of Eratosthenes is an ancient algorithm for finding all prime numbers up to any given limit.
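
The sentence above names the algorithm but not its steps. A minimal Python sketch of the usual formulation follows: it crosses off the multiples of each prime and leaves the primes unmarked. Two common optimizations are assumed here. Crossing off starts at p², and the outer loop stops once p² exceeds the limit. The function name is illustrative.

```python
from typing import List


def sieve_of_eratosthenes(limit: int) -> List[int]:
    """Return all primes <= limit."""
    if limit < 2:
        return []
    is_prime = [True] * (limit + 1)
    is_prime[0] = is_prime[1] = False
    p = 2
    while p * p <= limit:
        if is_prime[p]:
            # Cross off multiples of p; smaller multiples were already
            # crossed off while processing smaller primes.
            for multiple in range(p * p, limit + 1, p):
                is_prime[multiple] = False
        p += 1
    return [n for n, prime in enumerate(is_prime) if prime]


print(sieve_of_eratosthenes(30))  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```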

In computer science, an in-place algorithm is an algorithm that transforms its input using no auxiliary data structure. However, a small amount of extra storage space is allowed for auxiliary variables. The input is usually overwritten by the output as the algorithm executes. An in-place algorithm updates the input sequence only by replacing or swapping elements. An algorithm that is not in-place is sometimes called not-in-place or out-of-place.
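
Reversing a list is a small illustration (chosen here as an example, not taken from the text). The in-place version below only swaps elements and keeps two index variables as its constant extra storage. By contrast, Python's slicing expression `items[::-1]` is out-of-place, because it builds a new list.

```python
from typing import List, TypeVar

T = TypeVar("T")


def reverse_in_place(items: List[T]) -> None:
    """Reverse items by swapping; O(1) extra space (two indices)."""
    left, right = 0, len(items) - 1
    while left < right:
        # Swap the outermost unreversed pair, then move inward.
        items[left], items[right] = items[right], items[left]
        left += 1
        right -= 1


data = [1, 2, 3, 4, 5]
reverse_in_place(data)  # data is now [5, 4, 3, 2, 1]; the same list object was modified
```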

Relative humidity (RH) is the ratio of the partial pressure of water vapor to the equilibrium vapor pressure of water at a given temperature. Relative humidity depends on temperature and the pressure of the system of interest. The same amount of water vapor results in higher relative humidity in cool air than warm air. A related parameter is the dew point.
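
The definition above is a ratio, RH = p(H₂O) / p*(H₂O) × 100 %. To see why the same vapor gives a higher RH in cool air, you need a value for the equilibrium vapor pressure p* at each temperature. The sketch below assumes a Magnus-type approximation for p*. That formula is not part of the text, and its coefficients (6.1094 hPa, 17.625 and 243.04 °C) are one commonly used set. The sketch also ignores the small effect of total pressure that the paragraph mentions. Function names are illustrative.

```python
import math


def saturation_vapor_pressure_hpa(temp_c: float) -> float:
    """Assumed Magnus-type approximation of the equilibrium vapor pressure over water, in hPa."""
    return 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))


def relative_humidity_percent(vapor_pressure_hpa: float, temp_c: float) -> float:
    """RH = partial pressure of water vapor / equilibrium vapor pressure, as a percentage."""
    return 100.0 * vapor_pressure_hpa / saturation_vapor_pressure_hpa(temp_c)


# The same partial pressure of water vapor (10 hPa) in cool versus warm air.
cool = relative_humidity_percent(10.0, 10.0)
warm = relative_humidity_percent(10.0, 30.0)
```

With these coefficients, `cool` comes out at roughly 82 % and `warm` at roughly 24 %. Warmer air has a much higher equilibrium vapor pressure, so the same vapor fills a smaller fraction of it.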

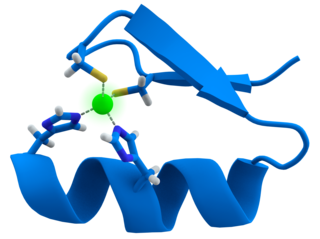

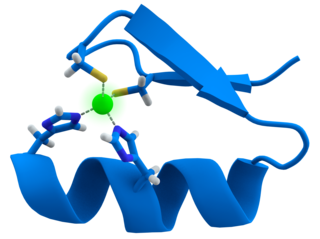

A zinc finger is a small protein structural motif that is characterized by the coordination of one or more zinc ions (Zn²⁺) in order to stabilize the fold. The name was originally coined to describe the finger-like appearance of a hypothesized structure from Xenopus laevis transcription factor IIIA (TFIIIA), but it has since come to encompass a wide variety of differing protein structures. In 1983, Xenopus laevis TFIIIA was demonstrated to contain zinc and to require the metal for function, the first such zinc requirement reported for a gene regulatory protein. Zinc fingers often appear as metal-binding domains in multi-domain proteins.

A saturated fat is a type of fat in which the fatty acid chains have all or predominantly single bonds. A fat is made of two kinds of smaller molecules: glycerol and fatty acids. Fatty acids consist of long chains of carbon (C) atoms. Some carbon atoms are linked by single bonds (-C-C-) and others by double bonds (-C=C-). A double bond can react with hydrogen to form a single bond: the second bond is broken, and each of the two carbon atoms it joined bonds to a hydrogen atom. Such fats are called saturated because their chains are saturated with hydrogen, with few or no double bonds left to take up more.

The French paradox is a catchphrase, first used in the late 1980s, that summarizes the apparently paradoxical epidemiological observation that French people have a relatively low incidence of coronary heart disease (CHD) while having a diet relatively rich in saturated fats, in apparent contradiction to the widely held belief that high consumption of such fats is a risk factor for CHD. The paradox is that if the thesis linking saturated fats to CHD is valid, the French ought to have a higher rate of CHD than comparable countries where the per capita consumption of such fats is lower.

Taguchi methods are statistical methods, sometimes called robust design methods, developed by Genichi Taguchi to improve the quality of manufactured goods, and more recently also applied to engineering, biotechnology, marketing and advertising. Professional statisticians have welcomed the goals and improvements brought about by Taguchi methods, particularly by Taguchi's development of designs for studying variation, but have criticized the inefficiency of some of Taguchi's proposals.

Spectrophotometry is a branch of electromagnetic spectroscopy concerned with the quantitative measurement of the reflection or transmission properties of a material as a function of wavelength. Spectrophotometry uses photometers, known as spectrophotometers, that can measure the intensity of a light beam at different wavelengths. Although spectrophotometry is most commonly applied to ultraviolet, visible, and infrared radiation, modern spectrophotometers can interrogate wide swaths of the electromagnetic spectrum, including X-ray, ultraviolet, visible, infrared, and/or microwave wavelengths.
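
A transmission measurement compares the intensity I that passes through the sample with a reference intensity I₀, such as a blank, at each wavelength. The sketch below assumes that setup and computes the transmittance T = I / I₀. It also computes the absorbance A = −log₁₀ T, the conventional quantity reported in absorption work, which the paragraph itself does not define. Intensities are given as dictionaries keyed by wavelength in nm. The function name and data layout are illustrative assumptions.

```python
import math
from typing import Dict, Tuple


def transmittance_and_absorbance(
    sample: Dict[float, float], reference: Dict[float, float]
) -> Dict[float, Tuple[float, float]]:
    """Return {wavelength_nm: (T, A)} from sample and reference intensities."""
    spectrum: Dict[float, Tuple[float, float]] = {}
    for wavelength, i0 in sorted(reference.items()):
        i = sample[wavelength]
        if i0 <= 0 or i <= 0:
            raise ValueError(f"non-positive intensity at {wavelength} nm")
        t = i / i0                                    # transmittance T = I / I0
        spectrum[wavelength] = (t, -math.log10(t))    # absorbance A = -log10(T)
    return spectrum


spectrum = transmittance_and_absorbance(
    sample={450.0: 50.0, 550.0: 10.0},
    reference={450.0: 100.0, 550.0: 100.0},
)
```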

Combined hyperlipidemia is a commonly occurring form of hypercholesterolemia characterised by increased LDL and triglyceride concentrations, often accompanied by decreased HDL. On lipoprotein electrophoresis it shows as a hyperlipoproteinemia type IIB. It is the most commonly inherited lipid disorder, occurring in around one in 200 persons. In fact, almost one in five individuals who develop coronary heart disease before the age of 60 have this disorder.

The Schilling test is a medical investigation used for patients with vitamin B12 (cobalamin) deficiency. The purpose of the test is to determine how well the patient is able to absorb B12 from their intestinal tract. It is named for Robert F. Schilling.

In statistics, a full factorial experiment is an experiment whose design consists of two or more factors, each with discrete possible values or "levels", and whose experimental units take on all possible combinations of these levels across all such factors. A full factorial design may also be called a fully crossed design. Such an experiment allows the investigator to study the effect of each factor on the response variable, as well as the effects of interactions between factors on the response variable.
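
Enumerating a full factorial design amounts to taking the Cartesian product of every factor's levels. The sketch below uses `itertools.product` for this. The factors and levels (temperature, time, catalyst) are hypothetical and serve only to show that 2 × 3 × 2 levels yield 12 runs.

```python
from itertools import product
from typing import Dict, List, Sequence


def full_factorial(factors: Dict[str, Sequence[object]]) -> List[Dict[str, object]]:
    """Return one run (factor -> level) for every combination of levels."""
    names = list(factors)
    # product(...) yields every combination; the last factor varies fastest.
    return [dict(zip(names, combo)) for combo in product(*(factors[n] for n in names))]


runs = full_factorial({
    "temperature": [150, 200],    # hypothetical factor with 2 levels
    "time": [10, 20, 30],         # hypothetical factor with 3 levels
    "catalyst": ["A", "B"],       # hypothetical factor with 2 levels
})
```

Because every combination appears, each main effect and each interaction can be estimated. The cost is that the run count is the product of the level counts, so it grows quickly as factors are added.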

Hydraulic conductivity, symbolically represented as K, is a property of vascular plants, soils and rocks that describes the ease with which a fluid can move through pore spaces or fractures. It depends on the intrinsic permeability of the material, the degree of saturation, and the density and viscosity of the fluid. Saturated hydraulic conductivity, Ksat, describes water movement through saturated media. By definition, hydraulic conductivity is the ratio of velocity to hydraulic gradient, indicating the permeability of porous media.
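
The "ratio of velocity to hydraulic gradient" is the relation known as Darcy's law (a name not used in the text): K = q / i. Here q is the Darcy velocity, or specific discharge, and i = Δh / L is the head loss over the flow length. The dependence on intrinsic permeability k, fluid density ρ and viscosity μ is usually written K = kρg / μ for a fully saturated medium. Both relations are standard, but the function names and example numbers below are illustrative assumptions, and SI units are assumed throughout.

```python
def hydraulic_conductivity(darcy_velocity: float, head_loss: float, flow_length: float) -> float:
    """K = q / i, with hydraulic gradient i = head_loss / flow_length (SI units, magnitudes)."""
    gradient = head_loss / flow_length  # dimensionless
    if gradient == 0:
        raise ValueError("hydraulic gradient must be non-zero")
    return darcy_velocity / gradient    # m/s


def conductivity_from_permeability(
    permeability_m2: float, density_kg_m3: float, viscosity_pa_s: float, g: float = 9.81
) -> float:
    """K = k * rho * g / mu for a saturated medium (m/s)."""
    return permeability_m2 * density_kg_m3 * g / viscosity_pa_s


# 1e-5 m/s of flow driven by 0.5 m of head loss over 1 m gives K = 2e-5 m/s.
k_measured = hydraulic_conductivity(1e-5, 0.5, 1.0)
# Water (rho ~ 1000 kg/m^3, mu ~ 1e-3 Pa s) in a medium with k = 1e-12 m^2.
k_estimated = conductivity_from_permeability(1e-12, 1000.0, 1e-3)
```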

In computational number theory, a variety of algorithms make it possible to generate prime numbers efficiently. These are used in various applications, for example hashing, public-key cryptography, and the search for prime factors in large numbers.
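
The paragraph does not name specific algorithms. One example, chosen here for illustration, is an incremental variant of the sieve of Eratosthenes. It produces an unbounded stream of primes by recording, for each upcoming composite, the primes that divide it. This is a sketch rather than a tuned generator, and the cryptographic use mentioned above typically relies on different techniques, such as probabilistic primality tests on large random candidates.

```python
from itertools import islice
from typing import Dict, Iterator, List


def primes() -> Iterator[int]:
    """Yield 2, 3, 5, 7, ... indefinitely."""
    # Maps each upcoming composite number to the primes that "reach" it.
    composites: Dict[int, List[int]] = {}
    n = 2
    while True:
        if n not in composites:
            yield n                        # no recorded divisor: n is prime
            composites[n * n] = [n]        # first composite that needs crossing off by n
        else:
            for p in composites.pop(n):    # n is composite; move each divisor to its next multiple
                composites.setdefault(n + p, []).append(p)
        n += 1


first_ten = list(islice(primes(), 10))  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```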

The programming language APL is distinctive in being symbolic rather than lexical: its primitives are denoted by symbols, not words. These symbols were originally devised as a mathematical notation to describe algorithms. APL programmers often assign informal names when discussing functions and operators, but the core functions and operators provided by the language are denoted by non-textual symbols.

Orthogonal array testing is a black box testing technique that is a systematic, statistical way of software testing. It is used when the number of inputs to the system is relatively small, but too large to allow for exhaustive testing of every possible input to the system. It is particularly effective in finding errors associated with faulty logic within computer software systems. Orthogonal arrays can be applied in user interface testing, system testing, regression testing, configuration testing and performance testing. The permutations of factor levels comprising a single treatment are chosen so that their responses are uncorrelated, and therefore each treatment gives a unique piece of information. The net effect of organizing the experiment in such treatments is that the same information is gathered in the minimum number of experiments.
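
As a small, hypothetical configuration-testing example, consider three parameters with two values each. Exhaustive testing needs 2³ = 8 runs. The standard 4-run orthogonal array for three two-level factors (often called L4) needs only 4 runs, and it still exercises every pair of values for every pair of parameters. The parameter names and values below are invented for illustration, and the helper handles only this 3 × 2-level case.

```python
from itertools import combinations, product
from typing import Dict, List, Sequence, Tuple

# L4 orthogonal array: 4 runs, 3 two-level columns, strength 2. Every pair of
# columns contains each of (0,0), (0,1), (1,0), (1,1) exactly once.
L4: List[Tuple[int, int, int]] = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]

Params = Sequence[Tuple[str, Sequence[str]]]


def select_tests(parameters: Params) -> List[Dict[str, str]]:
    """Map each L4 row to a concrete test configuration."""
    if len(parameters) != 3 or any(len(levels) != 2 for _, levels in parameters):
        raise ValueError("this sketch only handles three two-level parameters")
    return [
        {name: levels[row[col]] for col, (name, levels) in enumerate(parameters)}
        for row in L4
    ]


def covers_all_pairs(tests: List[Dict[str, str]], parameters: Params) -> bool:
    """Check that every value pair of every parameter pair occurs in some test."""
    for (n1, levels1), (n2, levels2) in combinations(parameters, 2):
        seen = {(test[n1], test[n2]) for test in tests}
        if seen != set(product(levels1, levels2)):
            return False
    return True


params = [("browser", ["Firefox", "Chrome"]), ("os", ["Linux", "Windows"]), ("locale", ["en", "de"])]
tests = select_tests(params)  # 4 configurations instead of 8
```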

A boiler or steam generator is a device used to create steam by applying heat energy to water. The definitions are somewhat flexible, but older steam generators were commonly termed boilers and worked at low to medium pressure; at higher pressures, it is more usual to speak of a steam generator.

In mathematics, an orthogonal array is a "table" (array) whose entries come from a fixed finite set of symbols, arranged in such a way that there is an integer t so that for every selection of t columns of the table, all ordered t-tuples of the symbols, formed by taking the entries in each row restricted to these columns, appear the same number of times. The number t is called the strength of the orthogonal array. Here is a simple example of an orthogonal array with symbol set {1,2} and strength 2:
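
The defining property can be checked mechanically. For every choice of t columns, count how often each ordered t-tuple of symbols appears, and require all counts to be equal and non-zero. The checker below is a sketch of that definition. The 4 × 3 array it is tested on is a well-known strength-2 array over {1, 2}, used here as an assumed illustration. The function name is hypothetical.

```python
from collections import Counter
from itertools import combinations, product
from typing import Hashable, Sequence


def has_strength(rows: Sequence[Sequence[Hashable]], symbols: Sequence[Hashable], t: int) -> bool:
    """True if every t columns contain each ordered t-tuple of symbols equally often."""
    num_cols = len(rows[0])
    for cols in combinations(range(num_cols), t):
        counts = Counter(tuple(row[c] for c in cols) for row in rows)
        tuple_counts = [counts[tup] for tup in product(symbols, repeat=t)]
        # All tuples must appear, the same number of times, and no foreign tuples may occur.
        if min(tuple_counts) == 0 or len(set(tuple_counts)) != 1:
            return False
        if sum(tuple_counts) != len(rows):
            return False
    return True


candidate = [
    [1, 1, 1],
    [1, 2, 2],
    [2, 1, 2],
    [2, 2, 1],
]
```

Here `has_strength(candidate, [1, 2], 2)` holds, because every pair of columns shows each of (1,1), (1,2), (2,1), (2,2) exactly once. Strength 3 fails, because 4 rows cannot contain all 8 triples.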

Log-linear analysis is a technique used in statistics to examine the relationship between more than two categorical variables. The technique is used for both hypothesis testing and model building. In both these uses, models are tested to find the most parsimonious model that best accounts for the variance in the observed frequencies.
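
A log-linear model describes the logarithm of each expected cell count as a sum of effect terms. The sketch below fits the simplest such model for three categorical variables A, B and C, namely mutual independence, log μᵢⱼₖ = λ + λᵢᴬ + λⱼᴮ + λₖᶜ. It assumes a table stored as nested lists. Under that model the fitted counts have the closed form nᵢ.. · n.ⱼ. · n..ₖ / n², so no iterative fitting is needed. Fit is measured with the likelihood-ratio statistic G² = 2 Σ O ln(O / E) and its degrees of freedom. Richer models with interaction terms, and the comparison between nested models used to find the most parsimonious one, are beyond this sketch.

```python
import math
from typing import List, Tuple

Table3 = List[List[List[float]]]


def fit_mutual_independence(table: Table3) -> Tuple[Table3, float, int]:
    """Fit log(mu_ijk) = lambda + lambda_i^A + lambda_j^B + lambda_k^C; return fitted counts, G^2, df."""
    I, J, K = len(table), len(table[0]), len(table[0][0])
    cells = [(i, j, k) for i in range(I) for j in range(J) for k in range(K)]
    n = sum(table[i][j][k] for i, j, k in cells)
    # One-way margins n_i.., n_.j., n_..k.
    a = [sum(table[i][j][k] for j in range(J) for k in range(K)) for i in range(I)]
    b = [sum(table[i][j][k] for i in range(I) for k in range(K)) for j in range(J)]
    c = [sum(table[i][j][k] for i in range(I) for j in range(J)) for k in range(K)]
    # Closed-form maximum-likelihood fitted counts under mutual independence.
    fitted = [[[a[i] * b[j] * c[k] / n**2 for k in range(K)] for j in range(J)] for i in range(I)]
    # Likelihood-ratio statistic; empty cells contribute zero.
    g2 = 2.0 * sum(
        table[i][j][k] * math.log(table[i][j][k] / fitted[i][j][k])
        for i, j, k in cells
        if table[i][j][k] > 0
    )
    df = I * J * K - 1 - (I - 1) - (J - 1) - (K - 1)
    return fitted, g2, df


uniform = [[[10, 10], [10, 10]], [[10, 10], [10, 10]]]         # no association at all
associated = [[[30, 5], [5, 5]], [[5, 5], [5, 30]]]            # strong association
```

A G² near zero (as for `uniform`) means the independence model already accounts for the observed frequencies. A large G² relative to its degrees of freedom (as for `associated`) suggests interaction terms are needed.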