Related Research Articles

In computer science, algorithmic efficiency is a property of an algorithm which relates to the amount of computational resources used by the algorithm. An algorithm must be analyzed to determine its resource usage, and the efficiency of an algorithm can be measured based on the usage of different resources. Algorithmic efficiency can be thought of as analogous to engineering productivity for a repeating or continuous process.

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

In computer science and particularly in compiler design, loop nest optimization (LNO) is an optimization technique that applies a set of loop transformations for the purpose of locality optimization or parallelization or another loop overhead reduction of the loop nests. One classical usage is to reduce memory access latency or the cache bandwidth necessary due to cache reuse for some common linear algebra algorithms.

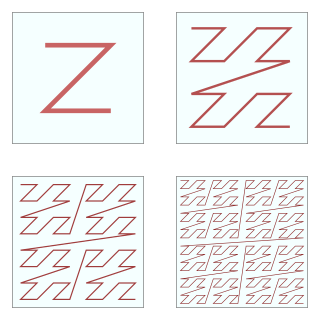

In mathematical analysis and computer science, functions which are Z-order, Lebesgue curve, Morton space-filling curve, Morton order or Morton code map multidimensional data to one dimension while preserving locality of the data points. It is named in France after Henri Lebesgue, who studied it in 1904, and named in the United States after Guy Macdonald Morton, who first applied the order to file sequencing in 1966. The z-value of a point in multidimensions is simply calculated by interleaving the binary representations of its coordinate values. Once the data are sorted into this ordering, any one-dimensional data structure can be used, such as simple one dimensional arrays, binary search trees, B-trees, skip lists or hash tables. The resulting ordering can equivalently be described as the order one would get from a depth-first traversal of a quadtree or octree.

In compiler theory, loop optimization is the process of increasing execution speed and reducing the overheads associated with loops. It plays an important role in improving cache performance and making effective use of parallel processing capabilities. Most execution time of a scientific program is spent on loops; as such, many compiler optimization techniques have been developed to make them faster.

In compiler theory, loop interchange is the process of exchanging the order of two iteration variables used by a nested loop. The variable used in the inner loop switches to the outer loop, and vice versa. It is often done to ensure that the elements of a multi-dimensional array are accessed in the order in which they are present in memory, improving locality of reference.

Automatic parallelization, also auto parallelization, or autoparallelization refers to converting sequential code into multi-threaded and/or vectorized code in order to use multiple processors simultaneously in a shared-memory multiprocessor (SMP) machine. Fully automatic parallelization of sequential programs is a challenge because it requires complex program analysis and the best approach may depend upon parameter values that are not known at compilation time.

In computer science, stream processing is a programming paradigm which views streams, or sequences of events in time, as the central input and output objects of computation. Stream processing encompasses dataflow programming, reactive programming, and distributed data processing. Stream processing systems aim to expose parallel processing for data streams and rely on streaming algorithms for efficient implementation. The software stack for these systems includes components such as programming models and query languages, for expressing computation; stream management systems, for distribution and scheduling; and hardware components for acceleration including floating-point units, graphics processing units, and field-programmable gate arrays.

The polyhedral model is a mathematical framework for programs that perform large numbers of operations -- too large to be explicitly enumerated -- thereby requiring a compact representation. Nested loop programs are the typical, but not the only example, and the most common use of the model is for loop nest optimization in program optimization. The polyhedral method treats each loop iteration within nested loops as lattice points inside mathematical objects called polyhedra, performs affine transformations or more general non-affine transformations such as tiling on the polytopes, and then converts the transformed polytopes into equivalent, but optimized, loop nests through polyhedra scanning.

Data parallelism is parallelization across multiple processors in parallel computing environments. It focuses on distributing the data across different nodes, which operate on the data in parallel. It can be applied on regular data structures like arrays and matrices by working on each element in parallel. It contrasts to task parallelism as another form of parallelism.

Loop-level parallelism is a form of parallelism in software programming that is concerned with extracting parallel tasks from loops. The opportunity for loop-level parallelism often arises in computing programs where data is stored in random access data structures. Where a sequential program will iterate over the data structure and operate on indices one at a time, a program exploiting loop-level parallelism will use multiple threads or processes which operate on some or all of the indices at the same time. Such parallelism provides a speedup to overall execution time of the program, typically in line with Amdahl's law.

Iterative Stencil Loops (ISLs) are a class of numerical data processing solution which update array elements according to some fixed pattern, called a stencil. They are most commonly found in computer simulations, e.g. for computational fluid dynamics in the context of scientific and engineering applications. Other notable examples include solving partial differential equations, the Jacobi kernel, the Gauss–Seidel method, image processing and cellular automata. The regular structure of the arrays sets stencil techniques apart from other modeling methods such as the Finite element method. Most finite difference codes which operate on regular grids can be formulated as ISLs.

Use of the polyhedral model within a compiler requires software to represent the objects of this framework and perform operations upon them.

Because matrix multiplication is such a central operation in many numerical algorithms, much work has been invested in making matrix multiplication algorithms efficient. Applications of matrix multiplication in computational problems are found in many fields including scientific computing and pattern recognition and in seemingly unrelated problems such as counting the paths through a graph. Many different algorithms have been designed for multiplying matrices on different types of hardware, including parallel and distributed systems, where the computational work is spread over multiple processors.

Software is said to exhibit scalable parallelism if it can make use of additional processors to solve larger problems, i.e. this term refers to software for which Gustafson's law holds. Consider a program whose execution time is dominated by one or more loops, each of that updates every element of an array --- for example, the following finite difference heat equation stencil calculation:

for t := 0 to T dofor i := 1 to N-1 do new(i) := * .25 // explicit forward-difference with R = 0.25 endfor i := 1 to N-1 do A(i) := new(i) endend

Communication-avoiding algorithms minimize movement of data within a memory hierarchy for improving its running-time and energy consumption. These minimize the total of two costs : arithmetic and communication. Communication, in this context refers to moving data, either between levels of memory or between multiple processors over a network. It is much more expensive than arithmetic.

In computing, an array of structures (AoS), structure of arrays (SoA) or array of structures of arrays (AoSoA) are contrasting ways to arrange a sequence of records in memory, with regard to interleaving, and are of interest in SIMD and SIMT programming.

In computing, a memory access pattern or IO access pattern is the pattern with which a system or program reads and writes memory on secondary storage. These patterns differ in the level of locality of reference and drastically affect cache performance, and also have implications for the approach to parallelism and distribution of workload in shared memory systems. Further, cache coherency issues can affect multiprocessor performance, which means that certain memory access patterns place a ceiling on parallelism.

The Roofline model is an intuitive visual performance model used to provide performance estimates of a given compute kernel or application running on multi-core, many-core, or accelerator processor architectures, by showing inherent hardware limitations, and potential benefit and priority of optimizations. By combining locality, bandwidth, and different parallelization paradigms into a single performance figure, the model can be an effective alternative to assess the quality of attained performance instead of using simple percent-of-peak estimates, as it provides insights on both the implementation and inherent performance limitations.

DOPIPE parallelism is a method to perform loop-level parallelism by pipelining the statements in a loop. Pipelined parallelism may exist at different levels of abstraction like loops, functions and algorithmic stages. The extent of parallelism depends upon the programmers' ability to make best use of this concept. It also depends upon factors like identifying and separating the independent tasks and executing them parallelly.

References

- 1 2 3 David Wonnacott. Achieving Scalable Locality with Time Skewing. International Journal of Parallel Programming 30.3 (2002)

- 1 2 3 David Wonnacott. Using Time Skewing to eliminate idle time due to memory bandwidth and network limitations. International Parallel and Distributed Processing Symposium 2000

- 1 2 Yonghong Song and Zhiyuan Li. New tiling techniques to improve cache temporal locality. PLDI '99

- 1 2 3 Sriram Krishnamoorthy and Muthu Baskaran and Uday Bondhugula and J. Ramanujam and Atanas Rountev and P. Sadayappan. Effective automatic parallelization of stencil computations. PLDI '07