Knowledge representation and reasoning is a field of artificial intelligence (AI) dedicated to representing information about the world in a form that a computer system can use to solve complex tasks, such as diagnosing a medical condition or having a natural-language dialog. Knowledge representation incorporates findings from psychology about how humans solve problems and represent knowledge, in order to design formalisms that make complex systems easier to design and build. Knowledge representation and reasoning also incorporates findings from logic to automate various kinds of reasoning.

Natural language processing (NLP) is a subfield of computer science and especially artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Typically data is collected in text corpora, using either rule-based, statistical or neural-based approaches in machine learning and deep learning.

The Semantic Web, sometimes known as Web 3.0, is an extension of the World Wide Web through standards set by the World Wide Web Consortium (W3C). The goal of the Semantic Web is to make Internet data machine-readable.

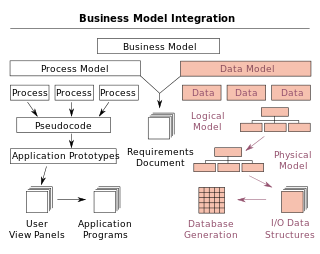

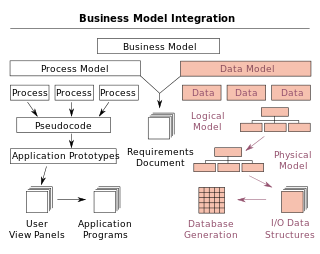

A data model is an abstract model that organizes elements of data and standardizes how they relate to one another and to the properties of real-world entities. For instance, a data model may specify that the data element representing a car be composed of a number of other elements which, in turn, represent the color and size of the car and define its owner.

Natural language understanding (NLU) or natural language interpretation (NLI) is a subset of natural language processing in artificial intelligence that deals with machine reading comprehension. NLU has been considered an AI-hard problem.

Business intelligence (BI) consists of strategies, methodologies, and technologies used by enterprises for data analysis and management of business information. Common functions of BI technologies include reporting, online analytical processing, analytics, dashboard development, data mining, process mining, complex event processing, business performance management, benchmarking, text mining, predictive analytics, and prescriptive analytics.

A management information system (MIS) is an information system used for decision-making, and for the coordination, control, analysis, and visualization of information in an organization. The study of the management information systems involves people, processes and technology in an organizational context. In other words, it serves, as the functions of controlling, planning, decision making in the management level setting.

A decision support system (DSS) is an information system that supports business or organizational decision-making activities. DSSs serve the management, operations and planning levels of an organization and help people make decisions about problems that may be rapidly changing and not easily specified in advance—i.e., unstructured and semi-structured decision problems. Decision support systems can be either fully computerized or human-powered, or a combination of both.

Analytics is the systematic computational analysis of data or statistics. It is used for the discovery, interpretation, and communication of meaningful patterns in data, which also falls under and directly relates to the umbrella term, data science. Analytics also entails applying data patterns toward effective decision-making. It can be valuable in areas rich with recorded information; analytics relies on the simultaneous application of statistics, computer programming, and operations research to quantify performance.

Enterprise integration is a technical field of enterprise architecture, which is focused on the study of topics such as system interconnection, electronic data interchange, product data exchange and distributed computing environments.

Frames are an artificial intelligence data structure used to divide knowledge into substructures by representing "stereotyped situations".

BasisTech is a software company specializing in applying artificial intelligence techniques to understanding documents and unstructured data written in different languages. It has headquarters in Somerville, Massachusetts with a subsidiary office in Tokyo. Its legal name is BasisTech LLC.

The concept of the Social Semantic Web subsumes developments in which social interactions on the Web lead to the creation of explicit and semantically rich knowledge representations. The Social Semantic Web can be seen as a Web of collective knowledge systems, which are able to provide useful information based on human contributions and which get better as more people participate. The Social Semantic Web combines technologies, strategies and methodologies from the Semantic Web, social software and the Web 2.0.

Master data management (MDM) is a discipline in which business and information technology collaborate to ensure the uniformity, accuracy, stewardship, semantic consistency, and accountability of the enterprise's official shared master data assets.

A semantic data model (SDM) is a high-level semantics-based database description and structuring formalism for databases. This database model is designed to capture more of the meaning of an application environment than is possible with contemporary database models. An SDM specification describes a database in terms of the kinds of entities that exist in the application environment, the classifications and groupings of those entities, and the structural interconnections among them. SDM provides a collection of high-level modeling primitives to capture the semantics of an application environment. By accommodating derived information in a database structural specification, SDM allows the same information to be viewed in several ways; this makes it possible to directly accommodate the variety of needs and processing requirements typically present in database applications. The design of the present SDM is based on our experience in using a preliminary version of it. SDM is designed to enhance the effectiveness and usability of database systems. An SDM database description can serve as a formal specification and documentation tool for a database; it can provide a basis for supporting a variety of powerful user interface facilities, it can serve as a conceptual database model in the database design process; and, it can be used as the database model for a new kind of database management system.

BORO is an approach to developing ontological or semantic models for large complex operational applications that consists of a top ontology as well as a process for constructing the ontology. It was originally developed as a method for mining ontologies from multiple legacy systems – as the first stage in an architectural transformation or software modernization. It has also been used to enable semantic interoperability between legacy systems. It is described in detail in. It is the analysis method used in the development and maintenance of the U.S. Department of Defense Architecture Framework (DoDAF) Meta Model (DM2), where a data modeling working group of over 350 members was able to systematically resolve a broad spectrum of knowledge representation issues.

Data virtualization is an approach to data management that allows an application to retrieve and manipulate data without requiring technical details about the data, such as how it is formatted at source, or where it is physically located, and can provide a single customer view of the overall data.

Knowledge extraction is the creation of knowledge from structured and unstructured sources. The resulting knowledge needs to be in a machine-readable and machine-interpretable format and must represent knowledge in a manner that facilitates inferencing. Although it is methodically similar to information extraction (NLP) and ETL, the main criterion is that the extraction result goes beyond the creation of structured information or the transformation into a relational schema. It requires either the reuse of existing formal knowledge or the generation of a schema based on the source data.

The following outline is provided as an overview of and topical guide to natural-language processing:

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence (AI), its subdisciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.