Related Research Articles

In computer science, a microkernel is the near-minimum amount of software that can provide the mechanisms needed to implement an operating system (OS). These mechanisms include low-level address space management, thread management, and inter-process communication (IPC).

Mach is an operating system kernel developed at Carnegie Mellon University by Richard Rashid and Avie Tevanian to support operating system research, primarily distributed and parallel computing. Mach is often considered one of the earliest examples of a microkernel. However, not all versions of Mach are microkernels. Mach's derivatives are the basis of the operating system kernel in GNU Hurd and of Apple's XNU kernel used in macOS, iOS, iPadOS, tvOS, and watchOS.

In distributed computing, a remote procedure call (RPC) is when a computer program causes a procedure (subroutine) to execute in a different address space, which is written as if it were a normal (local) procedure call, without the programmer explicitly writing the details for the remote interaction. That is, the programmer writes essentially the same code whether the subroutine is local to the executing program, or remote. This is a form of client–server interaction, typically implemented via a request–response message passing system. In the object-oriented programming paradigm, RPCs are represented by remote method invocation (RMI). The RPC model implies a level of location transparency, namely that calling procedures are largely the same whether they are local or remote, but usually, they are not identical, so local calls can be distinguished from remote calls. Remote calls are usually orders of magnitude slower and less reliable than local calls, so distinguishing them is important.

The trusted computing base (TCB) of a computer system is the set of all hardware, firmware, and/or software components that are critical to its security, in the sense that bugs or vulnerabilities occurring inside the TCB might jeopardize the security properties of the entire system. By contrast, parts of a computer system that lie outside the TCB must not be able to misbehave in a way that would leak any more privileges than are granted to them in accordance to the system's security policy.

In computer security, an access-control list (ACL) is a list of permissions associated with a system resource. An ACL specifies which users or system processes are granted access to resources, as well as what operations are allowed on given resources. Each entry in a typical ACL specifies a subject and an operation. For instance,

In computer systems security, role-based access control (RBAC) or role-based security is an approach to restricting system access to authorized users, and to implementing mandatory access control (MAC) or discretionary access control (DAC).

Exokernel is an operating system kernel developed by the MIT Parallel and Distributed Operating Systems group, and also a class of similar operating systems.

L4 is a family of second-generation microkernels, used to implement a variety of types of operating systems (OS), though mostly for Unix-like, Portable Operating System Interface (POSIX) compliant types.

Extremely Reliable Operating System (EROS) is an operating system developed starting in 1991 at the University of Pennsylvania, and then Johns Hopkins University, and The EROS Group, LLC. Features include automatic data and process persistence, some preliminary real-time support, and capability-based security. EROS is purely a research operating system, and was never deployed in real world use. As of 2005, development stopped in favor of a successor system, CapROS.

Regnecentralen (RC) was the first Danish computer company, founded on 12 October 1955. Through the 1950s and 1960s, they designed a series of computers, originally for their own use, and later to be sold commercially. Descendants of these systems sold well into the 1980s. They also developed a series of high-speed paper tape machines, and produced Data General Nova machines under license.

Per Brinch Hansen was a Danish-American computer scientist known for his work in operating systems, concurrent programming and parallel and distributed computing.

K42 is a discontinued open-source research operating system (OS) for cache-coherent 64-bit multiprocessor systems. It was developed primarily at IBM Thomas J. Watson Research Center in collaboration with the University of Toronto and University of New Mexico. The main focus of this OS is to address performance and scalability issues of system software on large-scale, shared memory, non-uniform memory access (NUMA) multiprocessing computers.

A hypervisor, also known as a virtual machine monitor (VMM) or virtualizer, is a type of computer software, firmware or hardware that creates and runs virtual machines. A computer on which a hypervisor runs one or more virtual machines is called a host machine, and each virtual machine is called a guest machine. The hypervisor presents the guest operating systems with a virtual operating platform and manages the execution of the guest operating systems. Unlike an emulator, the guest executes most instructions on the native hardware. Multiple instances of a variety of operating systems may share the virtualized hardware resources: for example, Linux, Windows, and macOS instances can all run on a single physical x86 machine. This contrasts with operating-system–level virtualization, where all instances must share a single kernel, though the guest operating systems can differ in user space, such as different Linux distributions with the same kernel.

Jochen Liedtke was a German computer scientist, noted for his work on microkernel operating systems, especially in creating the L4 microkernel family.

The RC 4000 Multiprogramming System is a discontinued operating system developed for the RC-4000 minicomputer in 1969. For clarity, this article mostly uses the term Monitor.

In computer science, capability-based addressing is a scheme used by some computers to control access to memory as an efficient implementation of capability-based security. Under a capability-based addressing scheme, pointers are replaced by protected objects which specify both a location in memory, along with access rights which define the set of operations which can be carried out on the memory location. Capabilities can only be created or modified through the use of privileged instructions which may be executed only by either the kernel or some other privileged process authorised to do so. Thus, a kernel can limit application code and other subsystems access to the minimum necessary portions of memory, without the need to use separate address spaces and therefore require a context switch when an access occurs.

Hydra is an early, discontinued, capability-based, object-oriented microkernel designed to support a wide range of possible operating systems to run on it. Hydra was created as part of the C.mmp project at Carnegie Mellon University in 1971.

A kernel is a computer program at the core of a computer's operating system that always has complete control over everything in the system. The kernel is also responsible for preventing and mitigating conflicts between different processes. It is the portion of the operating system code that is always resident in memory and facilitates interactions between hardware and software components. A full kernel controls all hardware resources via device drivers, arbitrates conflicts between processes concerning such resources, and optimizes the utilization of common resources e.g. CPU & cache usage, file systems, and network sockets. On most systems, the kernel is one of the first programs loaded on startup. It handles the rest of startup as well as memory, peripherals, and input/output (I/O) requests from software, translating them into data-processing instructions for the central processing unit.

A distributed operating system is system software over a collection of independent software, networked, communicating, and physically separate computational nodes. They handle jobs which are serviced by multiple CPUs. Each individual node holds a specific software subset of the global aggregate operating system. Each subset is a composite of two distinct service provisioners. The first is a ubiquitous minimal kernel, or microkernel, that directly controls that node's hardware. Second is a higher-level collection of system management components that coordinate the node's individual and collaborative activities. These components abstract microkernel functions and support user applications.

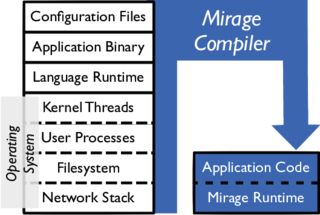

A unikernel is a type of computer program that is statically linked with the operating system code on which it depends. Unikernels are built with a specialized compiler that identifies the operating system services that a program uses and links it with one or more library operating systems that provide them. Such a program requires no separate operating system and can run instead as the guest of a hypervisor.

References

- Per Brinch Hansen (2001). "The evolution of operating systems" (PDF). Retrieved 2006-10-24. included in book: Per Brinch Hansen, ed. (2001) [2001]. "1" (PDF). Classic operating systems: from batch processing to distributed systems. New York: Springer-Verlag. pp. 1–36. ISBN 978-0-387-95113-3. (p. 18)

- Wulf, W.; E. Cohen; W. Corwin; A. Jones; R. Levin; C. Pierson; F. Pollack (June 1974). "HYDRA: the kernel of a multiprocessor operating system". Communications of the ACM. 17 (6): 337–345. doi: 10.1145/355616.364017 . ISSN 0001-0782. S2CID 8011765.

- Hansen, Per Brinch (April 1970). "The nucleus of a Multiprogramming System". Communications of the ACM. 13 (4): 238–241. CiteSeerX 10.1.1.105.4204 . doi:10.1145/362258.362278. ISSN 0001-0782. S2CID 9414037. (pp. 238–241)

- Levin, R.; E. Cohen; W. Corwin; F. Pollack; W. Wulf (1975). "Policy/Mechanism separation in Hydra". Proceedings of the fifth symposium on Operating systems principles - SOSP '75. Vol. 9. pp. 132–140. doi:10.1145/800213.806531. ISBN 9781450378635. S2CID 10524544.

- Chervenak et al. The data grid [ permanent dead link ] Journal of Network and Computer Applications, Volume 23, Issue 3, July 2000, Pages 187-200

- Artsy, Yeshayahu, and Livny, Miron, An Approach to the Design of Fully Open Computing Systems (University of Wisconsin / Madison, March 1987) Computer Sciences Technical Report #689.