The visual cortex of the brain is the area of the cerebral cortex that processes visual information. It is located in the occipital lobe. Sensory input originating from the eyes travels through the lateral geniculate nucleus in the thalamus and then reaches the visual cortex. The area of the visual cortex that receives the sensory input from the lateral geniculate nucleus is the primary visual cortex, also known as visual area 1 (V1), Brodmann area 17, or the striate cortex. The extrastriate areas consist of visual areas 2, 3, 4, and 5.

The lateral geniculate nucleus is a relay center in the thalamus for the visual pathway. It is a small, ovoid, ventral projection of the thalamus where the thalamus connects with the optic nerve. There are two LGNs, one on the left and another on the right side of the thalamus. In humans, both LGNs have six layers of neurons alternating with optic fibers.

The auditory system is the sensory system for the sense of hearing. It includes both the sensory organs and the auditory parts of the sensory system.

The receptive field, or sensory space, is a delimited medium where some physiological stimuli can evoke a sensory neuronal response in specific organisms.

The auditory cortex is the part of the temporal lobe that processes auditory information in humans and many other vertebrates. It is a part of the auditory system and performs basic and higher functions in hearing, possibly including a role in language switching. It is located bilaterally, roughly at the upper sides of the temporal lobes – in humans, curving down and onto the medial surface, on the superior temporal plane, within the lateral sulcus and comprising parts of the transverse temporal gyri, and the superior temporal gyrus, including the planum polare and planum temporale.

The inferior colliculus (IC) is the principal midbrain nucleus of the auditory pathway and receives input from several peripheral brainstem nuclei in the auditory pathway, as well as inputs from the auditory cortex. The inferior colliculus has three subdivisions: the central nucleus, a dorsal cortex by which it is surrounded, and an external cortex which is located laterally. Its bimodal neurons are implicated in auditory-somatosensory interaction, receiving projections from somatosensory nuclei. This multisensory integration may underlie a filtering of self-effected sounds from vocalization, chewing, or respiration activities.

Scale-space theory is a framework for multi-scale signal representation developed by the computer vision, image processing and signal processing communities with complementary motivations from physics and biological vision. It is a formal theory for handling image structures at different scales, by representing an image as a one-parameter family of smoothed images, the scale-space representation, parametrized by the size of the smoothing kernel used for suppressing fine-scale structures. The parameter t in this family is referred to as the scale parameter, with the interpretation that image structures of spatial size smaller than about √t have largely been smoothed away in the scale-space level at scale t.
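As a rough illustration of a one-parameter family of smoothed signals, the sketch below builds a small scale-space for a 1-D signal using only the Python standard library. It assumes the common Gaussian formulation in which the scale parameter t is the variance of the smoothing kernel (standard deviation √t). The function names, the truncation of the kernel at four standard deviations, and the border handling are choices made for this example, not part of the theory.

```python
import math

def gaussian_kernel(t, radius=None):
    """Sampled 1-D Gaussian with variance t, normalized to sum to 1."""
    sigma = math.sqrt(t)
    if radius is None:
        radius = max(1, int(math.ceil(4 * sigma)))  # assumed truncation point
    weights = [math.exp(-(x * x) / (2.0 * t)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def smooth(signal, t):
    """One scale-space level: convolve the signal with a Gaussian of variance t."""
    if t <= 0:
        return list(signal)  # scale 0 is the original signal
    kernel = gaussian_kernel(t)
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # replicate border samples
            acc += w * signal[j]
        out.append(acc)
    return out

def scale_space(signal, scales):
    """Map each scale t to the signal smoothed at that scale."""
    return {t: smooth(signal, t) for t in scales}

# Test signal: a wide plateau (21 samples) and a narrow spike (1 sample).
signal = [0.0] * 101
for i in range(40, 61):
    signal[i] = 1.0
signal[10] = 1.0

levels = scale_space(signal, [0, 1, 16])
print("spike height by scale:  ", [round(levels[t][10], 3) for t in (0, 1, 16)])
print("plateau height by scale:", [round(levels[t][50], 3) for t in (0, 1, 16)])
```

At t = 16 (√t = 4 samples) the one-sample spike is strongly attenuated, while the centre of the 21-sample plateau is almost unchanged. This matches the interpretation that structures much smaller than √t are suppressed.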

Volley theory states that groups of neurons of the auditory system respond to a sound by firing action potentials slightly out of phase with one another so that when combined, a greater frequency of sound can be encoded and sent to the brain to be analyzed. The theory was proposed by Ernest Wever and Charles Bray in 1930 as a supplement to the frequency theory of hearing. It was later discovered that this only occurs in response to sounds that are about 500 Hz to 5000 Hz.

Sensory neuroscience is a subfield of neuroscience which explores the anatomy and physiology of neurons that are part of sensory systems such as vision, hearing, and olfaction. Neurons in sensory regions of the brain respond to stimuli by firing one or more nerve impulses following stimulus presentation. How is information about the outside world encoded by the rate, timing, and pattern of action potentials? This so-called neural code is currently poorly understood and sensory neuroscience plays an important role in the attempt to decipher it. Looking at early sensory processing is advantageous since brain regions that are "higher up" contain neurons which encode more abstract representations. However, the hope is that there are unifying principles which govern how the brain encodes and processes information. Studying sensory systems is an important stepping stone in our understanding of brain function in general.

In neurobiology, lateral inhibition is the capacity of an excited neuron to reduce the activity of its neighbors. Lateral inhibition disables the spreading of action potentials from excited neurons to neighboring neurons in the lateral direction. This creates a contrast in stimulation that allows increased sensory perception. It is also referred to as lateral antagonism and occurs primarily in visual processes, but also in tactile, auditory, and even olfactory processing. Cells that utilize lateral inhibition appear primarily in the cerebral cortex and thalamus and make up lateral inhibitory networks (LINs). Artificial lateral inhibition has been incorporated into artificial sensory systems, such as vision chips, hearing systems, and optical mice. An often under-appreciated point is that although lateral inhibition is visualised in a spatial sense, it is also thought to exist in what is known as "lateral inhibition across abstract dimensions." This refers to lateral inhibition between neurons that are not adjacent in a spatial sense, but in terms of modality of stimulus. This phenomenon is thought to aid in colour discrimination.
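The contrast-enhancing effect can be seen in a toy, purely feedforward sketch in which each unit's output is its own input minus a fraction of the mean input to its two immediate neighbors. The function name, the `strength` value, and the nearest-neighbor wiring are assumptions made for illustration. Biological lateral inhibitory networks are generally recurrent and more complex.

```python
def lateral_inhibition(inputs, strength=0.4):
    """Subtract a fraction of the mean neighbor input from each unit's input."""
    n = len(inputs)
    out = []
    for i in range(n):
        left = inputs[max(i - 1, 0)]        # edge units reuse their own value
        right = inputs[min(i + 1, n - 1)]
        out.append(inputs[i] - strength * (left + right) / 2.0)
    return out

# A step in stimulus intensity: five weakly and five strongly driven units.
step = [1.0] * 5 + [3.0] * 5
response = lateral_inhibition(step)
print([round(r, 2) for r in response])
```

Away from the step, the responses settle at about 0.6 and 1.8. The unit just below the step drops to about 0.2 and the unit just above it rises to about 2.2, so the boundary is exaggerated relative to the uniform regions on either side.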

Neural coding is a neuroscience field concerned with characterising the hypothetical relationship between the stimulus and the individual or ensemble neuronal responses and the relationship among the electrical activity of the neurons in the ensemble. Based on the theory that sensory and other information is represented in the brain by networks of neurons, it is thought that neurons can encode both digital and analog information.

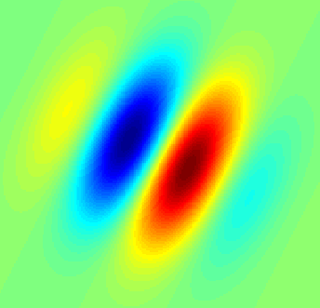

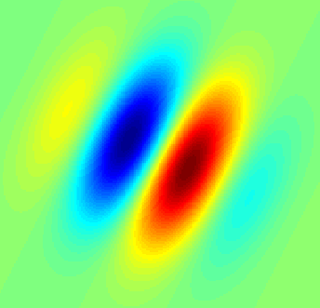

A simple cell in the primary visual cortex is a cell that responds primarily to oriented edges and gratings. These cells were discovered by Torsten Wiesel and David Hubel in the late 1950s.

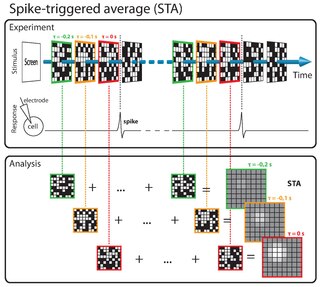

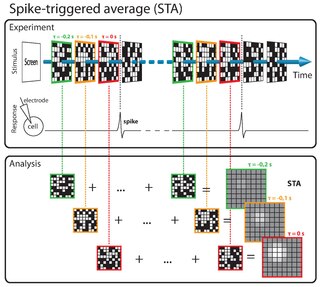

The spike-triggered average (STA) is a tool for characterizing the response properties of a neuron using the spikes emitted in response to a time-varying stimulus. The STA provides an estimate of a neuron's linear receptive field. It is a useful technique for the analysis of electrophysiological data.
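A minimal sketch of the computation, assuming a discretized stimulus and a spike-count train of the same length, is given below. The function name, the window length, and the toy neuron (which fires whenever the stimulus two samples earlier exceeded a threshold) are hypothetical and serve only to show that the STA recovers the lag at which the stimulus drives spiking.

```python
import random

def spike_triggered_average(stimulus, spikes, window):
    """Average the `window` stimulus samples ending at each spike time.

    Index k of the result corresponds to a lag of (window - 1 - k) samples
    before the spike. Bins with more than one spike are weighted by count.
    """
    sta = [0.0] * window
    n_spikes = 0
    for t in range(window - 1, len(stimulus)):
        count = spikes[t]
        if count == 0:
            continue
        segment = stimulus[t - window + 1 : t + 1]
        for k in range(window):
            sta[k] += count * segment[k]
        n_spikes += count
    if n_spikes == 0:
        raise ValueError("no spikes inside the analysis range")
    return [value / n_spikes for value in sta]

# Gaussian white-noise stimulus (seeded for reproducibility).
rng = random.Random(0)
stimulus = [rng.gauss(0.0, 1.0) for _ in range(20000)]

# Toy neuron: spikes at time t if the stimulus at time t - 2 exceeded 1.0.
spikes = [0, 0] + [1 if s > 1.0 else 0 for s in stimulus[:-2]]

window = 5
sta = spike_triggered_average(stimulus, spikes, window)
print([round(v, 2) for v in sta])
```

With this toy neuron the STA is close to zero at every lag except a lag of two samples (index `window - 3`), where it is clearly positive.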

Computational auditory scene analysis (CASA) is the study of auditory scene analysis by computational means. In essence, CASA systems are "machine listening" systems that aim to separate mixtures of sound sources in the same way that human listeners do. CASA differs from the field of blind signal separation in that it is based on the mechanisms of the human auditory system, and thus uses no more than two microphone recordings of an acoustic environment. It is related to the cocktail party problem.

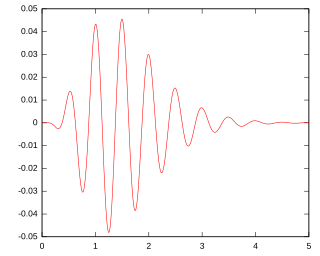

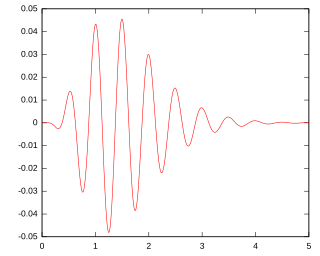

A gammatone filter is a linear filter described by an impulse response that is the product of a gamma distribution and sinusoidal tone. It is a widely used model of auditory filters in the auditory system.
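The impulse response is commonly written as g(t) = a · t^(n−1) · exp(−2πbt) · cos(2πft + φ) for t ≥ 0, where n is the filter order, b the bandwidth parameter, f the centre frequency, a the amplitude, and φ the phase. The sketch below samples this expression directly. The function name, the sampling rate, and the example parameter values are assumptions for illustration, and no particular rule for choosing b from f is implied.

```python
import math

def gammatone_impulse_response(fc, bandwidth, order=4, fs=16000,
                               duration=0.025, amplitude=1.0, phase=0.0):
    """Sample a * t^(n-1) * exp(-2*pi*b*t) * cos(2*pi*fc*t + phase) at rate fs."""
    n_samples = int(round(duration * fs))
    response = []
    for i in range(n_samples):
        t = i / fs
        # Gamma-shaped envelope: rises as t^(n-1), then decays exponentially.
        envelope = amplitude * t ** (order - 1) * math.exp(-2.0 * math.pi * bandwidth * t)
        # Sinusoidal carrier at the centre frequency.
        response.append(envelope * math.cos(2.0 * math.pi * fc * t + phase))
    return response

# Example values (assumed): 1 kHz centre frequency, 125 Hz bandwidth parameter.
ir = gammatone_impulse_response(fc=1000.0, bandwidth=125.0)
peak_index = max(range(len(ir)), key=lambda i: abs(ir[i]))
print("samples:", len(ir), "peak at sample", peak_index)
```

The envelope peaks at t = (n − 1) / (2πb), which is about 3.8 ms for these values, so the largest sample falls roughly 60 samples into the response before the exponential decay takes over.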

The linear-nonlinear-Poisson (LNP) cascade model is a simplified functional model of neural spike responses. It has been successfully used to describe the response characteristics of neurons in early sensory pathways, especially the visual system. The LNP model is generally implicit when using reverse correlation or the spike-triggered average to characterize neural responses with white-noise stimuli.
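The three stages of the cascade can be sketched as follows: a linear filter applied to the recent stimulus history, a pointwise nonlinearity that converts the filter output into a firing rate, and Poisson spike generation in each time bin. The exponential nonlinearity, the kernel values, the gain, and the bin width are assumptions chosen for this example, and the helper names are hypothetical.

```python
import math
import random

def poisson_sample(mean, rng):
    """Draw one Poisson count with the given mean (Knuth's method, small means)."""
    limit = math.exp(-mean)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count

def lnp_simulate(stimulus, kernel, dt=0.001, gain=20.0, rng=None):
    """Return (rates in spikes/s, spike counts per bin) for an LNP neuron."""
    rng = rng or random.Random()
    window = len(kernel)
    rates, spikes = [], []
    for t in range(len(stimulus)):
        # L: project the recent stimulus history onto the linear filter.
        drive = 0.0
        for k in range(window):
            idx = t - window + 1 + k
            if idx >= 0:
                drive += kernel[k] * stimulus[idx]
        # N: pointwise nonlinearity (exponential, an assumed choice).
        rate = gain * math.exp(drive)
        # P: Poisson spike count for a bin of width dt.
        rates.append(rate)
        spikes.append(poisson_sample(rate * dt, rng))
    return rates, spikes

rng = random.Random(1)
stimulus = [rng.gauss(0.0, 1.0) for _ in range(5000)]  # white-noise stimulus
kernel = [0.2, 0.5, 0.3]                               # hypothetical linear filter
rates, spikes = lnp_simulate(stimulus, kernel, rng=rng)
print("total spikes:", sum(spikes), "mean rate (Hz):", round(sum(rates) / len(rates), 1))
```

Because the spikes depend on the stimulus only through the filtered signal, averaging the stimulus segments that precede spikes under a white-noise stimulus gives an estimate of the filter's shape, up to scale.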

The neuronal encoding of sound is the representation of auditory sensation and perception in the nervous system.

Conspecific song preference is the ability songbirds require to distinguish conspecific song from heterospecific song in order for females to choose an appropriate mate, and for juvenile males to choose an appropriate song tutor during vocal learning. Researchers studying the swamp sparrow have demonstrated that young birds are born with this ability, because juvenile males raised in acoustic isolation and tutored with artificial recordings choose to learn only songs that contain their own species' syllables. Studies conducted at later life stages indicate that conspecific song preference is further refined and strengthened throughout development as a function of social experience. The selective response properties of neurons in the songbird auditory pathway have been proposed as the mechanism responsible for both the innate and acquired components of this preference.

Sensory maps and brain development is a concept in neuroethology that links the development of the brain over an animal’s lifetime with the spatial organization and patterning of an animal’s sensory processing. Sensory maps are the representations of sense organs as organized maps in the brain, and they are the fundamental organization of sensory processing. Sensory maps are not always close to an exact topographic projection of the senses. The fact that the brain is organized into sensory maps has wide implications for processing; for example, lateral inhibition and coding for space are byproducts of mapping. The developmental process of an organism guides sensory map formation, although the details are not yet known. The development of sensory maps requires learning, long-term potentiation, experience-dependent plasticity, and innate characteristics. There is significant evidence for experience-dependent development and maintenance of sensory maps, and there is growing evidence on the molecular, synaptic and computational bases of experience-dependent development.

Temporal envelope (ENV) and temporal fine structure (TFS) are changes in the amplitude and frequency of sound perceived by humans over time. These temporal changes are responsible for several aspects of auditory perception, including loudness, pitch and timbre perception and spatial hearing.