In physics, a Langevin equation is a stochastic differential equation describing how a system evolves when subjected to a combination of deterministic and fluctuating ("random") forces. The dependent variables in a Langevin equation typically are collective (macroscopic) variables changing only slowly in comparison to the other (microscopic) variables of the system. The fast (microscopic) variables are responsible for the stochastic nature of the Langevin equation. One application is to Brownian motion, which models the fluctuating motion of a small particle in a fluid.
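
As an illustration of the Brownian-motion case, the following minimal sketch integrates a Langevin equation for the velocity of a particle, dv = -γ v dt + sqrt(2 γ kT/m) dW, with the Euler-Maruyama scheme. The function names, parameter values, and the choice of integrator are assumptions made for this example, not part of the text above. In equilibrium the velocity variance should approach kT/m.

```python
import math
import random


def simulate_langevin_velocity(gamma=1.0, kT_over_m=1.0, dt=0.01,
                               n_steps=200_000, seed=0):
    """Euler-Maruyama integration of dv = -gamma*v*dt + sqrt(2*gamma*kT/m)*dW.

    All parameter names and defaults are illustrative assumptions.
    """
    rng = random.Random(seed)
    # Standard deviation of the random kick accumulated over one time step.
    noise_amplitude = math.sqrt(2.0 * gamma * kT_over_m * dt)
    v = 0.0
    velocities = []
    for _ in range(n_steps):
        # Deterministic friction plus a fluctuating force from the fast variables.
        v += -gamma * v * dt + noise_amplitude * rng.gauss(0.0, 1.0)
        velocities.append(v)
    return velocities


def variance(samples):
    """Population variance of a list of numbers."""
    mean = sum(samples) / len(samples)
    return sum((s - mean) ** 2 for s in samples) / len(samples)


if __name__ == "__main__":
    vs = simulate_langevin_velocity()
    print("sample variance of v:", variance(vs), "(expected close to kT/m = 1)")
```

The friction term plays the role of the deterministic force and the Gaussian kicks stand in for the fast microscopic variables.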

In theoretical physics, the term renormalization group (RG) refers to a formal apparatus that allows systematic investigation of the changes of a physical system as viewed at different scales. In particle physics, it reflects the changes in the underlying force laws as the energy scale at which physical processes occur varies, energy/momentum and resolution distance scales being effectively conjugate under the uncertainty principle.

Global optimization is a branch of applied mathematics and numerical analysis that attempts to find the global minima or maxima of a function or a set of functions on a given set. It is usually described as a minimization problem because the maximization of a real-valued function $g(x)$ is equivalent to the minimization of the function $f(x) := -g(x)$.
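
The equivalence between maximizing a function and minimizing its negative can be sketched with a brute-force grid search. The test function `g`, the interval, and the helper names below are hypothetical choices for illustration; practical global optimizers use far more efficient strategies than an exhaustive grid.

```python
import math


def grid_minimize(f, lower, upper, n_points=10_001):
    """Return the grid point in [lower, upper] with the smallest f value."""
    step = (upper - lower) / (n_points - 1)
    grid = [lower + i * step for i in range(n_points)]
    return min(grid, key=f)


def grid_maximize(g, lower, upper, n_points=10_001):
    """Maximize g by minimizing its negative."""
    return grid_minimize(lambda x: -g(x), lower, upper, n_points)


def g(x):
    """Hypothetical test function with a local and a global maximum on [0, 4]."""
    return math.sin(3.0 * x) + 0.5 * x


if __name__ == "__main__":
    x_star = grid_maximize(g, 0.0, 4.0)
    print("global maximizer on [0, 4]:", x_star, "value:", g(x_star))
```

On this interval `g` has a local maximum near x = 0.58 and a higher one near x = 2.67, so a purely local method started near the first peak would miss the global solution.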

In quantum mechanics, information theory, and Fourier analysis, the entropic uncertainty or Hirschman uncertainty is defined as the sum of the temporal and spectral Shannon entropies. It turns out that Heisenberg's uncertainty principle can be expressed as a lower bound on the sum of these entropies. This is stronger than the usual statement of the uncertainty principle in terms of the product of standard deviations.

Stochastic gradient descent is an iterative method for optimizing an objective function with suitable smoothness properties. It can be regarded as a stochastic approximation of gradient descent optimization, since it replaces the actual gradient by an estimate thereof. Especially in high-dimensional optimization problems this reduces the very high computational burden, achieving faster iterations in exchange for a lower convergence rate.
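
A minimal sketch of the idea, assuming a least-squares fit of a line y = w x + b: each step uses the gradient of the loss on a single randomly chosen data point instead of the gradient over the full data set. The function name, learning rate, and data are illustrative assumptions.

```python
import random


def sgd_linear_fit(xs, ys, learning_rate=0.05, n_steps=5000, seed=0):
    """Fit y = w*x + b by stochastic gradient descent on the squared error.

    Each update uses one randomly drawn sample, so a step costs O(1)
    instead of O(len(xs)) for the full gradient.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(n_steps):
        i = rng.randrange(len(xs))
        error = w * xs[i] + b - ys[i]
        # Gradient of 0.5 * error**2 with respect to w and b for this sample.
        w -= learning_rate * error * xs[i]
        b -= learning_rate * error
    return w, b


if __name__ == "__main__":
    xs = [i / 10 - 1 for i in range(21)]   # points in [-1, 1]
    ys = [2.0 * x + 1.0 for x in xs]       # noise-free line, slope 2, intercept 1
    print(sgd_linear_fit(xs, ys))
```

Because the data here are noise-free, the single-sample gradients all vanish at the same point and the iterates settle on the exact line; with noisy data a decaying learning rate is usually needed.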

In probability theory and mathematical physics, a random matrix is a matrix-valued random variable—that is, a matrix in which some or all of its entries are sampled randomly from a probability distribution. Random matrix theory (RMT) is the study of properties of random matrices, often as they become large. RMT provides techniques like mean-field theory, diagrammatic methods, the cavity method, or the replica method to compute quantities like traces, spectral densities, or scalar products between eigenvectors. Many physical phenomena, such as the spectrum of nuclei of heavy atoms, the thermal conductivity of a lattice, or the emergence of quantum chaos, can be modeled mathematically as problems concerning large, random matrices.

In mathematics, a symplectic integrator (SI) is a numerical integration scheme for Hamiltonian systems. Symplectic integrators form the subclass of geometric integrators which, by definition, are canonical transformations. They are widely used in nonlinear dynamics, molecular dynamics, discrete element methods, accelerator physics, plasma physics, quantum physics, and celestial mechanics.
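
One widely used symplectic scheme is the leapfrog (velocity Verlet) method. The sketch below applies it to a unit-mass harmonic oscillator, H = p²/2 + q²/2, and contrasts it with the non-symplectic explicit Euler method; the system, step size, and function names are assumptions chosen for illustration.

```python
def harmonic_force(q):
    """Force -dV/dq for the potential V(q) = q**2 / 2."""
    return -q


def leapfrog(q, p, dt, n_steps, force=harmonic_force):
    """Leapfrog (kick-drift-kick) integration of dq/dt = p, dp/dt = force(q)."""
    for _ in range(n_steps):
        p += 0.5 * dt * force(q)   # half kick
        q += dt * p                # full drift
        p += 0.5 * dt * force(q)   # half kick
    return q, p


def explicit_euler(q, p, dt, n_steps, force=harmonic_force):
    """Non-symplectic reference scheme: both updates use the old state."""
    for _ in range(n_steps):
        q, p = q + dt * p, p + dt * force(q)
    return q, p


def energy(q, p):
    """Hamiltonian of the unit-mass, unit-frequency harmonic oscillator."""
    return 0.5 * p * p + 0.5 * q * q


if __name__ == "__main__":
    print("initial energy:", energy(1.0, 0.0))
    print("leapfrog after 10000 steps:", energy(*leapfrog(1.0, 0.0, 0.1, 10_000)))
    print("Euler after 1000 steps:", energy(*explicit_euler(1.0, 0.0, 0.1, 1000)))
```

The leapfrog energy oscillates within a narrow band around its initial value, while the explicit Euler energy grows without bound, which is the practical reason symplectic schemes are preferred for long Hamiltonian simulations.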

In applied mathematics, highly optimized tolerance (HOT) is a method of generating power law behavior in systems by including a global optimization principle. It was developed by Jean M. Carlson and John Doyle in the early 2000s. For some systems that display a characteristic scale, a global optimization term could potentially be added that would then yield power law behavior. It has been used to generate and describe internet-like graphs and forest fire models, and may also apply to biological systems.

The Gross–Pitaevskii equation describes the ground state of a quantum system of identical bosons using the Hartree–Fock approximation and the pseudopotential interaction model.

Stochastic optimization (SO) methods are optimization methods that generate and use random variables. For stochastic problems, the random variables appear in the formulation of the optimization problem itself, which involves random objective functions or random constraints. Stochastic optimization methods also include methods with random iterates. Some stochastic optimization methods use random iterates to solve stochastic problems, combining both meanings of stochastic optimization. Stochastic optimization methods generalize deterministic methods for deterministic problems.

The Wang and Landau algorithm, proposed by Fugao Wang and David P. Landau, is a Monte Carlo method designed to estimate the density of states of a system. The method performs a non-Markovian random walk to build the density of states by quickly visiting the entire available energy spectrum. The Wang and Landau algorithm is an important method to obtain the density of states required to perform a multicanonical simulation.

In applied mathematics, the numerical sign problem is the problem of numerically evaluating the integral of a highly oscillatory function of a large number of variables. Numerical methods fail because of the near-cancellation of the positive and negative contributions to the integral. Each has to be integrated to very high precision in order for their difference to be obtained with useful accuracy.
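
The cancellation can be seen even in a one-dimensional toy case, which is only an assumed stand-in for the many-variable integrals where the problem is severe. The sketch below estimates the average of cos(k x) for a standard normal x by plain Monte Carlo; the exact value is exp(-k²/2), which becomes far smaller than the statistical error as k grows. The function names and parameters are hypothetical.

```python
import math
import random


def estimate_oscillatory_mean(k, n_samples=10_000, seed=0):
    """Monte Carlo estimate of E[cos(k*x)] for x ~ N(0, 1).

    Returns the sample mean and its estimated standard error.
    """
    rng = random.Random(seed)
    values = [math.cos(k * rng.gauss(0.0, 1.0)) for _ in range(n_samples)]
    mean = sum(values) / n_samples
    var = sum((v - mean) ** 2 for v in values) / (n_samples - 1)
    return mean, math.sqrt(var / n_samples)


def exact_oscillatory_mean(k):
    """Closed form E[cos(k*x)] = exp(-k**2 / 2) for a standard normal x."""
    return math.exp(-0.5 * k * k)


if __name__ == "__main__":
    for k in (0.5, 6.0):
        mean, err = estimate_oscillatory_mean(k)
        print(f"k={k}: estimate={mean:.3e} +/- {err:.1e}, "
              f"exact={exact_oscillatory_mean(k):.3e}")
```

For small k the estimate is accurate, but for k = 6 the positive and negative samples nearly cancel and the standard error exceeds the exact answer by several orders of magnitude; reducing it to the size of the signal would require an impractical number of samples.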

A polymer field theory is a statistical field theory describing the statistical behavior of a neutral or charged polymer system. It can be derived by transforming the partition function from its standard many-dimensional integral representation over the particle degrees of freedom into a functional integral representation over an auxiliary field function, using either the Hubbard–Stratonovich transformation or the delta-functional transformation. Computer simulations based on polymer field theories have been shown to deliver useful results, for example to calculate the structures and properties of polymer solutions, polymer melts and thermoplastics.

The Tracy–Widom distribution is a probability distribution from random matrix theory introduced by Craig Tracy and Harold Widom. It is the distribution of the normalized largest eigenvalue of a random Hermitian matrix. The distribution is defined as a Fredholm determinant.

The Swendsen–Wang algorithm is the first non-local or cluster algorithm for Monte Carlo simulation of large systems near criticality. It was introduced by Robert Swendsen and Jian-Sheng Wang in 1987 at Carnegie Mellon.
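
A minimal sketch of one cluster update, assuming the ferromagnetic Ising model on an L × L square lattice with periodic boundaries: bonds between equal neighboring spins are activated with probability 1 - exp(-2βJ), and every resulting cluster is assigned a new random spin value. The choice of model, the union-find bookkeeping, and all names are assumptions made for this example.

```python
import math
import random


def find(parent, i):
    """Union-find root lookup with path halving."""
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i


def swendsen_wang_sweep(spins, L, beta, rng, J=1.0):
    """One Swendsen-Wang update of an L x L periodic Ising lattice, in place.

    spins is a flat list of +1/-1 values indexed as y * L + x.
    """
    p_bond = 1.0 - math.exp(-2.0 * beta * J)
    parent = list(range(L * L))
    for y in range(L):
        for x in range(L):
            i = y * L + x
            right = y * L + (x + 1) % L
            down = ((y + 1) % L) * L + x
            for j in (right, down):
                # Activate a bond only between aligned neighbors.
                if spins[i] == spins[j] and rng.random() < p_bond:
                    ri, rj = find(parent, i), find(parent, j)
                    if ri != rj:
                        parent[ri] = rj
    # Give every cluster an independent random orientation.
    new_value = {}
    for i in range(L * L):
        root = find(parent, i)
        if root not in new_value:
            new_value[root] = rng.choice((-1, 1))
        spins[i] = new_value[root]


def magnetization(spins):
    """Absolute magnetization per spin."""
    return abs(sum(spins)) / len(spins)


if __name__ == "__main__":
    rng = random.Random(0)
    L = 8
    spins = [rng.choice((-1, 1)) for _ in range(L * L)]
    for _ in range(100):
        swendsen_wang_sweep(spins, L, beta=1.0, rng=rng)
    print("|m| at beta = 1.0:", magnetization(spins))
```

Flipping whole clusters at once, rather than single spins, is what makes the update non-local.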

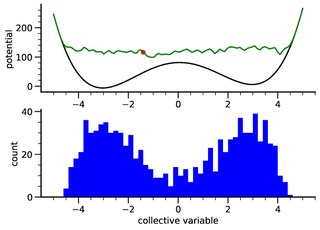

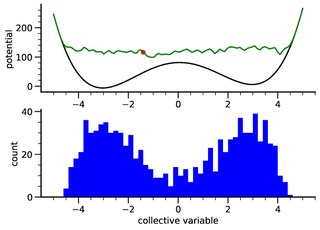

Metadynamics (MTD) is a computer simulation method in computational physics, chemistry and biology. It is used to estimate the free energy and other state functions of a system in which ergodicity is hindered by the form of the system's energy landscape. It was first suggested by Alessandro Laio and Michele Parrinello in 2002 and is usually applied within molecular dynamics simulations. MTD closely resembles a number of newer methods such as adaptively biased molecular dynamics, adaptive reaction coordinate forces and local elevation umbrella sampling. More recently, both the original and well-tempered metadynamics were derived in the context of importance sampling and shown to be a special case of the adaptive biasing potential setting. MTD is related to Wang–Landau sampling.

The phase-space formulation of quantum mechanics places the position and momentum variables on equal footing in phase space. In contrast, the Schrödinger picture uses the position or momentum representations. The two key features of the phase-space formulation are that the quantum state is described by a quasiprobability distribution and operator multiplication is replaced by a star product.

In statistics and physics, the multicanonical ensemble is a Markov chain Monte Carlo sampling technique that uses the Metropolis–Hastings algorithm to compute integrals where the integrand has a rough landscape with multiple local minima. It samples states according to the inverse of the density of states, which has to be known a priori or be computed using other techniques like the Wang and Landau algorithm. Multicanonical sampling is an important technique for spin systems like the Ising model or spin glasses.

In computational solid state physics, continuous-time quantum Monte Carlo (CT-QMC) is a family of stochastic algorithms for solving the Anderson impurity model at finite temperature. These methods first expand the full partition function as a series of Feynman diagrams, employ Wick's theorem to group diagrams into determinants, and finally use Markov chain Monte Carlo to stochastically sum up the resulting series.

Quantum optimization algorithms are quantum algorithms that are used to solve optimization problems. Mathematical optimization deals with finding the best solution to a problem from a set of possible solutions. Most often, the optimization problem is formulated as a minimization problem, where one tries to minimize an error which depends on the solution: the optimal solution has the minimal error. Different optimization techniques are applied in various fields such as mechanics, economics and engineering, and as the complexity and amount of data involved rise, more efficient ways of solving optimization problems are needed. Quantum computing may allow problems which are not practically feasible on classical computers to be solved, or suggest a considerable speedup with respect to the best known classical algorithm.