Sulston score exposition

The Sulston score is rooted in the concepts of Bernoulli and binomial processes, as follows. Consider two clones, and , having and measured fragment lengths, respectively, where . That is, clone has at least as many fragments as clone , but usually more. The Sulston score is the probability that at least fragment lengths on clone will be matched by any combination of lengths on . Intuitively, we see that, at most, there can be matches. Thus, for a given comparison between two clones, one can measure the statistical significance of a match of fragments, i.e. how likely it is that this match occurred simply as a result of random chance. Very low values would indicate a significant match that is highly unlikely to have arisen by pure chance, while higher values would suggest that the given match could be just a coincidence.

Derivation of the Sulston Score One of the basic assumptions is that fragments are uniformly distributed on a gel, i.e. a fragment has an equal likelihood of appearing anywhere on the gel. Since gel position is an indicator of fragment length, this assumption is equivalent to presuming that the fragment lengths are uniformly distributed. The measured location of any fragment , has an associated error tolerance of , so that its true location is only known to lie within the segment . In what follows, let us refer to individual fragment lengths simply as lengths. Consider a specific length on clone and a specific length on clone . These two lengths are arbitrarily selected from their respective sets and . We assume that the gel location of fragment has been determined and we want the probability of the event that the location of fragment will match that of . Geometrically, will be declared to match if it falls inside the window of size around . Since fragment could occur anywhere in the gel of length , we have . The probability that does not match is simply the complement, i.e. , since it must either match or not match.

Now, let us expand this to compute the probability that no length on clone matches the single particular length on clone . This is simply the intersection of all individual trials where the event occurs, i.e.. This can be restated verbally as: length 1 on clone does not match length on clone and length 2 does not match length and length 3 does not match, etc. Since each of these trials is assumed to be independent, the probability is simply

Of course, the actual event of interest is the complement: i.e. there is not "no matches". In other words, the probability of one or more matches is . Formally, is the probability that at least one band on clone matches band on clone .

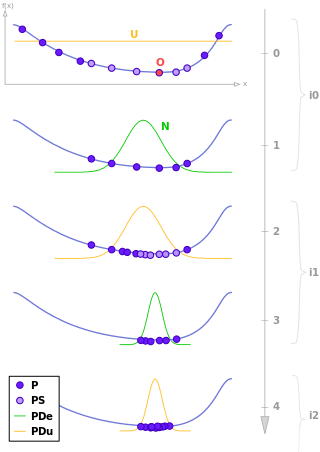

This event is taken as a Bernoulli trial having a "success" (matching) probability of for band . However, we want to describe the process over all the bands on clone . Since is constant, the number of matches is distributed binomially. Given observed matches, the Sulston score is simply the probability of obtaining at least matches by chance according to

where are binomial coefficients.