In mathematics, Jensen's inequality, named after the Danish mathematician Johan Jensen, relates the value of a convex function of an integral to the integral of the convex function. It was proven by Jensen in 1906. Given its generality, the inequality appears in many forms depending on the context, some of which are presented below. In its simplest form the inequality states that the convex transformation of a mean is less than or equal to the mean applied after convex transformation; it is a simple corollary that the opposite is true of concave transformations.
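The simplest finite form can be checked numerically. The sketch below assumes equal weights on a small sample; the helper names `mean` and `jensen_gap` are illustrative and do not come from any library.

```python
import math

def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)

def jensen_gap(f, values):
    """Return mean(f(x)) - f(mean(x)).

    Jensen's inequality says this is >= 0 when f is convex
    and <= 0 when f is concave.
    """
    return mean([f(x) for x in values]) - f(mean(values))

# exp is convex on the whole real line, so the gap should be nonnegative.
convex_gap = jensen_gap(math.exp, [-2.0, -0.5, 0.0, 1.0, 3.5])

# log1p(x) = log(1 + x) is concave for x > -1, so the gap should be nonpositive.
concave_gap = jensen_gap(math.log1p, [0.0, 1.0, 4.0])

print(convex_gap >= 0, concave_gap <= 0)
```

A numerical check like this illustrates the statement on one sample only; it is not a proof.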

In mathematical analysis, a function of bounded variation, also known as a BV function, is a real-valued function whose total variation is bounded (finite): the graph of a function having this property is well behaved in a precise sense. For a continuous function of a single variable, being of bounded variation means that the distance along the direction of the y-axis, neglecting the contribution of motion along the x-axis, traveled by a point moving along the graph has a finite value. For a continuous function of several variables, the meaning of the definition is the same, except that the continuous path to be considered cannot be the whole graph of the given function, but can be every intersection of the graph itself with a hyperplane parallel to a fixed x-axis and to the y-axis.
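For a function of one variable, the "distance traveled along the y-axis" can be approximated by summing absolute increments over a fine partition. The sketch below assumes a uniform partition; the function name `variation_on_partition` is hypothetical. Any such sum is a lower bound on the total variation, which is the supremum over all partitions.

```python
import math

def variation_on_partition(f, a, b, n=1000):
    """Sum of |f(x_{i+1}) - f(x_i)| over a uniform partition of [a, b].

    This is a lower bound on the total variation of f on [a, b].
    """
    xs = [a + (b - a) * i / n for i in range(n + 1)]
    return sum(abs(f(xs[i + 1]) - f(xs[i])) for i in range(n))

# sin rises by 1, falls by 2, then rises by 1 on [0, 2*pi],
# so its total variation there is 4.
tv_sin = variation_on_partition(math.sin, 0.0, 2.0 * math.pi)
print(tv_sin)
```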

In mathematics, a Hausdorff measure is a type of outer measure, named for Felix Hausdorff, that assigns a number in $[0, \infty]$ to each set in $\mathbb{R}^n$ or, more generally, in any metric space. The zero-dimensional Hausdorff measure is the number of points in the set if the set is finite, or $\infty$ if the set is infinite. The one-dimensional Hausdorff measure of a simple curve in $\mathbb{R}^n$ is equal to the length of the curve. Likewise, the two-dimensional Hausdorff measure of a measurable subset of $\mathbb{R}^2$ is proportional to the area of the set. Thus, the concept of the Hausdorff measure generalizes counting, length, and area. It also generalizes volume. In fact, there are $d$-dimensional Hausdorff measures for any $d \geq 0$, which is not necessarily an integer. These measures are fundamental in geometric measure theory. They appear naturally in harmonic analysis or potential theory.

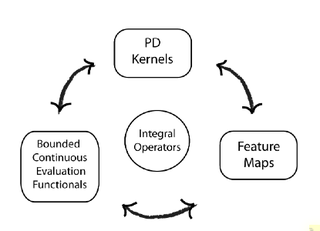

In functional analysis, a reproducing kernel Hilbert space (RKHS) is a Hilbert space of functions in which point evaluation is a continuous linear functional. Roughly speaking, this means that if two functions $f$ and $g$ in the RKHS are close in norm, i.e., $\|f - g\|$ is small, then $f$ and $g$ are also pointwise close, i.e., $|f(x) - g(x)|$ is small for all $x$. The reverse need not be true.

In mathematics and mathematical optimization, the convex conjugate of a function is a generalization of the Legendre transformation which applies to non-convex functions. It is also known as Legendre–Fenchel transformation or Fenchel transformation. It is used to transform an optimization problem into its corresponding dual problem, which can often be simpler to solve.
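As a rough illustration, the standard definition $f^*(y) = \sup_x \,(xy - f(x))$ can be approximated in one dimension by maximizing over a grid. The sketch below assumes a bounded grid on $[-5, 5]$, so it is only meaningful when the supremum is attained inside that interval; `conjugate_on_grid` is a hypothetical helper name.

```python
def conjugate_on_grid(f, xs, y):
    """Approximate f*(y) = sup_x (x*y - f(x)) by maximizing over the grid xs."""
    return max(x * y - f(x) for x in xs)

# Uniform grid on [-5, 5] with step 0.01 (an assumption of this sketch).
xs = [i / 100.0 for i in range(-500, 501)]

def half_square(x):
    """f(x) = x^2 / 2, whose convex conjugate is f*(y) = y^2 / 2."""
    return 0.5 * x * x

for y in (-2.0, 0.0, 1.5):
    # Print the grid approximation next to the closed-form value.
    print(y, conjugate_on_grid(half_square, xs, y), 0.5 * y * y)
```

The function $x^2/2$ is its own conjugate, which makes it a convenient sanity check for any numerical scheme of this kind.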

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets. Whereas many classes of convex optimization problems admit polynomial-time algorithms, mathematical optimization is in general NP-hard.

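In mathematical analysis, a Banach limit is a continuous linear functional $\phi : \ell^\infty \to \mathbb{C}$ defined on the Banach space $\ell^\infty$ of all bounded complex-valued sequences such that for all sequences $x = (x_n)$, $y = (y_n)$ in $\ell^\infty$, and complex numbers $\alpha$:

- $\phi(\alpha x + y) = \alpha \phi(x) + \phi(y)$ (linearity);

- if $x_n \geq 0$ for all $n \in \mathbb{N}$, then $\phi(x) \geq 0$ (positivity);

- $\phi(x) = \phi(Sx)$, where $S$ is the shift operator defined by $(Sx)_n = x_{n+1}$ (shift-invariance);

- if $x$ is a convergent sequence, then $\phi(x) = \lim x$.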

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. The solution to the dual problem provides a lower bound to the solution of the primal (minimization) problem. In general, however, the optimal values of the primal and dual problems need not be equal; their difference is called the duality gap. For convex optimization problems, the duality gap is zero under a constraint qualification condition.
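A small worked case makes the lower-bound property concrete. The sketch below uses a toy problem chosen for illustration (it is not taken from the text above): minimize $x^2$ subject to $x \geq 1$, whose optimal value is 1 at $x = 1$. The Lagrangian is $x^2 + \lambda(1 - x)$ with $\lambda \geq 0$, and minimizing over $x$ gives the dual function $g(\lambda) = \lambda - \lambda^2/4$.

```python
def dual_function(lam):
    """g(lam) = min over x of x^2 + lam * (1 - x), attained at x = lam / 2."""
    x = lam / 2.0
    return x * x + lam * (1.0 - x)

# Primal problem: minimize x^2 subject to x >= 1; the optimum is 1 at x = 1.
primal_optimum = 1.0

# Evaluate the dual function on a grid of multipliers lam in [0, 5].
lams = [i / 10.0 for i in range(0, 51)]
dual_values = [dual_function(lam) for lam in lams]

# Weak duality: every dual value is a lower bound on the primal optimum.
best_dual = max(dual_values)
duality_gap = primal_optimum - best_dual
print(best_dual, duality_gap)
```

The toy problem is convex and strictly feasible, so the best dual value matches the primal optimum and the gap is zero, as the last sentence of the paragraph predicts.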

In mathematics, in the field of functional analysis, a Minkowski functional is a function that recovers a notion of distance on a linear space.

In linear algebra, a convex cone is a subset of a vector space over an ordered field that is closed under linear combinations with positive coefficients.

The Palais–Smale compactness condition, named after Richard Palais and Stephen Smale, is a hypothesis for some theorems of the calculus of variations. It is useful for guaranteeing the existence of certain kinds of critical points, in particular saddle points. The Palais–Smale condition is a condition on the functional that one is trying to extremize.

In mathematics, the theory of optimal stopping or early stopping is concerned with the problem of choosing a time to take a particular action, in order to maximise an expected reward or minimise an expected cost. Optimal stopping problems can be found in areas of statistics, economics, and mathematical finance. A key example of an optimal stopping problem is the secretary problem. Optimal stopping problems can often be written in the form of a Bellman equation, and are therefore often solved using dynamic programming.
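The secretary problem can be explored by simulation. The sketch below does not solve a Bellman equation; it assumes the classical "skip roughly n/e candidates, then take the first one better than all seen so far" rule and estimates its success rate by Monte Carlo. The function names, the candidate count, and the seed are all illustrative choices.

```python
import math
import random

def secretary_trial(n, skip, rng):
    """Return True if the skip-then-pick rule selects the best of n candidates."""
    ranks = list(range(n))          # larger number means a better candidate
    rng.shuffle(ranks)              # candidates arrive in random order
    # Best candidate seen during the observation phase (-1 if nothing is skipped).
    threshold = max(ranks[:skip]) if skip > 0 else -1
    for rank in ranks[skip:]:
        if rank > threshold:
            # Stop at the first candidate who beats everyone observed so far.
            return rank == n - 1
    # No later candidate beat the threshold, so the best one was skipped.
    return False

def success_rate(n, skip, trials, seed=0):
    """Estimate the probability of picking the best candidate."""
    rng = random.Random(seed)
    wins = sum(secretary_trial(n, skip, rng) for _ in range(trials))
    return wins / trials

n = 50
skip = round(n / math.e)            # about 18 candidates are only observed
rate = success_rate(n, skip, trials=20000)
print(skip, rate)
```

With these settings the estimate should land close to 1/e, roughly 0.37, whereas stopping at the very first candidate (`skip=0`) succeeds only about 1 time in n.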

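A second-order cone program (SOCP) is a convex optimization problem of the form

$$\text{minimize } f^T x \quad \text{subject to } \|A_i x + b_i\|_2 \leq c_i^T x + d_i,\ i = 1, \dots, m, \quad Fx = g,$$

where $x \in \mathbb{R}^n$ is the optimization variable and $\|\cdot\|_2$ is the Euclidean norm.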

The Titchmarsh convolution theorem is named after Edward Charles Titchmarsh, a British mathematician. The theorem describes the properties of the support of the convolution of two functions.

In functional analysis, the dual norm is a measure of the "size" of each continuous linear functional defined on a normed vector space.

In mathematical analysis, and especially in real and harmonic analysis, a Birnbaum–Orlicz space is a type of function space which generalizes the Lp spaces. Like the Lp spaces, Birnbaum–Orlicz spaces are Banach spaces. The spaces are named for Władysław Orlicz and Zygmunt William Birnbaum, who first defined them in 1931.

In mathematics, the Pettis integral or Gelfand–Pettis integral, named after Israel M. Gelfand and Billy James Pettis, extends the definition of the Lebesgue integral to vector-valued functions on a measure space, by exploiting duality. The integral was introduced by Gelfand for the case when the measure space is an interval with Lebesgue measure. The integral is also called the weak integral in contrast to the Bochner integral, which is the strong integral.

In mathematics, the Thurston boundary of the Teichmüller space of a surface is obtained as the boundary of its closure in the projective space of functionals on simple closed curves on the surface. It can be interpreted as the space of projective measured foliations on the surface.