Related Research Articles

The raven paradox, also known as Hempel's paradox, Hempel's ravens, or rarely the paradox of indoor ornithology, is a paradox arising from the question of what constitutes evidence for the truth of a statement. Observing objects that are neither black nor ravens may formally increase the likelihood that all ravens are black even though, intuitively, these observations are unrelated.

Bayes' theorem gives a mathematical rule for inverting conditional probabilities, allowing us to find the probability of a cause given its effect. For example, if the risk of developing health problems is known to increase with age, Bayes' theorem allows the risk to an individual of a known age to be assessed more accurately by conditioning on their age, rather than assuming that the individual is typical of the population as a whole. By Bayes' law, both the prevalence of a disease in a given population and the error rate of an infectious disease test must be taken into account to interpret a positive test result correctly and avoid the base-rate fallacy.
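The diagnostic-test case can be made concrete with a short calculation. The sketch below applies Bayes' theorem to a positive test result; the function name and the prevalence, sensitivity, and specificity figures are hypothetical values chosen for illustration, not data from any study.

```python
def posterior_given_positive(prevalence, sensitivity, specificity):
    """Return P(disease | positive test) using Bayes' theorem."""
    # P(positive and disease)
    true_positive = sensitivity * prevalence
    # P(positive and no disease)
    false_positive = (1.0 - specificity) * (1.0 - prevalence)
    return true_positive / (true_positive + false_positive)


# Hypothetical numbers: 1% prevalence, 99% sensitivity, 95% specificity.
p = posterior_given_positive(prevalence=0.01, sensitivity=0.99, specificity=0.95)
print(f"P(disease | positive) = {p:.3f}")
```

Under these assumed figures the posterior is only about one in six, because false positives from the large healthy population outnumber true positives. Ignoring the prevalence term is exactly the base-rate fallacy.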

Bayesian inference is a method of statistical inference in which Bayes' theorem is used to update the probability for a hypothesis as more evidence or information becomes available. Fundamentally, Bayesian inference uses prior knowledge, in the form of a prior distribution, to estimate posterior probabilities. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability".

Prediction markets, also known as betting markets, information markets, decision markets, idea futures or event derivatives, are open markets that enable the prediction of specific outcomes using financial incentives. They are exchange-traded markets established for trading bets on the outcome of various events. The market prices can indicate what the crowd thinks the probability of the event is. A typical prediction market contract is set up to trade between 0 and 100%. The most common form of a prediction market is a binary option market, which will expire at the price of 0 or 100%. Prediction markets can be thought of as belonging to the more general concept of crowdsourcing, which is specially designed to aggregate information on particular topics of interest. The main purpose of prediction markets is eliciting and aggregating beliefs about an unknown future outcome. Traders with different beliefs trade contracts whose payoffs depend on the unknown future outcome, and the market prices of the contracts are taken as the aggregated belief.

The posterior probability is a type of conditional probability that results from updating the prior probability with information summarized by the likelihood via an application of Bayes' rule. From an epistemological perspective, the posterior probability contains everything there is to know about an uncertain proposition, given prior knowledge and a mathematical model describing the observations available at a particular time. After the arrival of new information, the current posterior probability may serve as the prior in another round of Bayesian updating.
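The reuse of a posterior as the next prior can be sketched with a small discrete example. The code below is a minimal illustration, assuming three hypothetical candidate values for a coin's probability of heads and a made-up sequence of flips; `update` and all the numbers are illustrative names and values.

```python
def update(prior, likelihoods):
    """One round of Bayes' rule over a finite set of hypotheses."""
    unnormalized = [p * l for p, l in zip(prior, likelihoods)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


# Hypothetical hypotheses: the coin lands heads with one of these probabilities.
biases = [0.25, 0.5, 0.75]
belief = [1 / 3, 1 / 3, 1 / 3]  # uniform prior over the three hypotheses

# Each flip's posterior becomes the prior for the next flip.
for flip in "HHT":
    likelihoods = [b if flip == "H" else 1 - b for b in biases]
    belief = update(belief, likelihoods)

print([round(b, 2) for b in belief])
```

Updating one observation at a time gives the same final posterior as a single update on all three flips at once, which is why sequential updating is convenient for data that arrive over time.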

Inductive reasoning is any of various methods of reasoning in which broad generalizations or principles are derived from a body of observations. It contrasts with deductive reasoning: the conclusion of a deductive argument is certain provided the premises are correct, whereas the truth of the conclusion of an inductive argument is at best probable, based upon the evidence given.

A prior probability distribution of an uncertain quantity, often simply called the prior, is its assumed probability distribution before some evidence is taken into account. For example, the prior could be the probability distribution representing the relative proportions of voters who will vote for a particular politician in a future election. The unknown quantity may be a parameter of the model or a latent variable rather than an observable variable.

The base rate fallacy, also called base rate neglect or base rate bias, is a type of fallacy in which people tend to ignore the base rate in favor of the individuating information. For example, if someone hears that a friend is very shy and quiet, they might think the friend is more likely to be a librarian than a salesperson, even though there are far more salespeople than librarians overall, which makes it more likely that their friend is actually a salesperson. Base rate neglect is a specific form of the more general extension neglect.

In probability theory, the rule of succession is a formula introduced in the 18th century by Pierre-Simon Laplace in the course of treating the sunrise problem. The formula is still used, particularly to estimate underlying probabilities when there are few observations or events that have not been observed to occur at all in (finite) sample data.
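In its usual form, the rule estimates the probability of success on the next trial as (s + 1) / (n + 2) after s successes in n trials. The sketch below evaluates it with exact fractions; the function name is illustrative.

```python
from fractions import Fraction


def rule_of_succession(successes, trials):
    """Laplace's estimate of the probability that the next trial succeeds."""
    if not 0 <= successes <= trials:
        raise ValueError("successes must lie between 0 and trials")
    return Fraction(successes + 1, trials + 2)


print(rule_of_succession(0, 0))    # no data at all
print(rule_of_succession(10, 10))  # ten successes in ten trials
print(rule_of_succession(0, 5))    # event never observed in five trials
```

The estimate never reaches 0 or 1 for finite data, which is what makes it useful when an event has not yet been observed in a small sample.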

In Bayesian probability theory, if, given a likelihood function $p(x \mid \theta)$, the posterior distribution $p(\theta \mid x)$ is in the same probability distribution family as the prior probability distribution $p(\theta)$, the prior and posterior are then called conjugate distributions with respect to that likelihood function, and the prior is called a conjugate prior for the likelihood function $p(x \mid \theta)$.
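A standard instance is the Beta prior with a binomial likelihood: a Beta(a, b) prior combined with k successes in n trials gives a Beta(a + k, b + n - k) posterior, so the update reduces to adding counts. The sketch below assumes that pairing; the function names and the data are hypothetical.

```python
from fractions import Fraction


def beta_binomial_update(a, b, successes, trials):
    """Posterior Beta parameters after observing binomial data."""
    return a + successes, b + (trials - successes)


def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return Fraction(a, a + b)


# Hypothetical data: uniform Beta(1, 1) prior, then 7 successes in 10 trials.
a_post, b_post = beta_binomial_update(1, 1, successes=7, trials=10)
print(a_post, b_post, beta_mean(a_post, b_post))
```

Because the posterior stays in the Beta family, no numerical integration is needed, which is the practical appeal of conjugate priors.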

Statistics, when used in a misleading fashion, can trick the casual observer into believing something other than what the data shows. That is, a misuse of statistics occurs when a statistical argument asserts a falsehood. In some cases, the misuse may be accidental. In others, it is purposeful and for the gain of the perpetrator. When the statistical reason involved is false or misapplied, this constitutes a statistical fallacy.

The Wisdom of Crowds: Why the Many Are Smarter Than the Few and How Collective Wisdom Shapes Business, Economies, Societies and Nations, published in 2004, is a book written by James Surowiecki about the aggregation of information in groups, resulting in decisions that, he argues, are often better than could have been made by any single member of the group. The book presents numerous case studies and anecdotes to illustrate its argument, and touches on several fields, primarily economics and psychology.

Condorcet's jury theorem is a political science theorem about the relative probability of a given group of individuals arriving at a correct decision. The theorem was first expressed by the Marquis de Condorcet in his 1785 work Essay on the Application of Analysis to the Probability of Majority Decisions.
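In its simplest form, the theorem assumes an odd number n of voters who each independently pick the correct option with the same probability p. The probability that the majority is correct is then a binomial tail sum, which the sketch below computes; the function name and the value p = 0.6 are illustrative assumptions.

```python
from math import comb


def majority_correct(n, p):
    """P(more than half of n independent voters are correct), n odd."""
    if n % 2 == 0:
        raise ValueError("n must be odd to avoid ties")
    smallest_majority = n // 2 + 1
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(smallest_majority, n + 1)
    )


# Hypothetical individual competence of 0.6 for every voter.
for n in (1, 3, 11, 101):
    print(n, round(majority_correct(n, 0.6), 4))
```

With p above one half the majority becomes more reliable as the group grows, and with p below one half it becomes less reliable. Both conclusions depend on the independence assumption.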

In social science research, social-desirability bias is a type of response bias that is the tendency of survey respondents to answer questions in a manner that will be viewed favorably by others. It can take the form of over-reporting "good behavior" or under-reporting "bad", or undesirable behavior. The tendency poses a serious problem with conducting research with self-reports. This bias interferes with the interpretation of average tendencies as well as individual differences.

The minimum intelligent signal test, or MIST, is a variation of the Turing test proposed by Chris McKinstry in which only boolean answers may be given to questions. The purpose of such a test is to provide a quantitative statistical measure of humanness, which may subsequently be used to optimize the performance of artificial intelligence systems intended to imitate human responses.

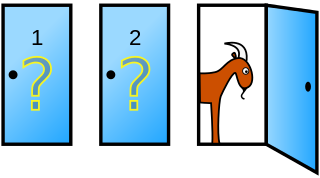

The Monty Hall problem is a brain teaser, in the form of a probability puzzle, based nominally on the American television game show Let's Make a Deal and named after its original host, Monty Hall. The problem was originally posed in a letter by Steve Selvin to The American Statistician in 1975. It became famous as a question from reader Craig F. Whitaker's letter quoted in Marilyn vos Savant's "Ask Marilyn" column in Parade magazine in 1990:

Suppose you're on a game show, and you're given the choice of three doors: Behind one door is a car; behind the others, goats. You pick a door, say No. 1, and the host, who knows what's behind the doors, opens another door, say No. 3, which has a goat. He then says to you, "Do you want to pick door No. 2?" Is it to your advantage to switch your choice?
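Under the standard assumptions (the car is equally likely to be behind any door, and the host always opens an unchosen door hiding a goat), the answer can be checked by enumerating every combination of car position and first pick. The sketch below does this exactly; the function name is illustrative.

```python
from fractions import Fraction
from itertools import product


def monty_hall_probabilities():
    """Exact win probabilities for staying and for switching, with 3 doors."""
    doors = range(3)
    cases = list(product(doors, repeat=2))  # (car position, first pick)
    stay_wins = switch_wins = 0
    for car, pick in cases:
        # The host opens a door that is neither the pick nor the car.
        opened = next(d for d in doors if d != pick and d != car)
        # Switching means taking the one remaining closed door.
        switched = next(d for d in doors if d != pick and d != opened)
        stay_wins += pick == car
        switch_wins += switched == car
    return Fraction(stay_wins, len(cases)), Fraction(switch_wins, len(cases))


stay, switch = monty_hall_probabilities()
print("stay:", stay, "switch:", switch)
```

When the first pick is the car, the host has two goat doors to choose from. The code always takes the lowest-numbered one, which does not affect the totals because switching loses in that case either way.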

The wisdom of the crowd is the collective opinion of a diverse and independent group of individuals rather than that of a single expert. This process, while not new to the Information Age, has been pushed into the mainstream spotlight by social information sites such as Quora, Reddit, Stack Exchange, Wikipedia, Yahoo! Answers, and other web resources which rely on collective human knowledge. An explanation for this phenomenon is that there is idiosyncratic noise associated with each individual judgment, and taking the average over a large number of responses will go some way toward canceling the effect of this noise.
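The noise-cancelling explanation can be illustrated with a small simulation. The sketch below assumes each individual's estimate is the true value plus independent, zero-mean Gaussian noise; the true value, noise level, crowd size, and random seed are all hypothetical.

```python
import random
from statistics import mean

rng = random.Random(0)  # fixed seed so the run is repeatable

TRUE_VALUE = 100.0  # hypothetical quantity being estimated
NOISE_SD = 20.0     # hypothetical spread of individual errors
CROWD_SIZE = 1000

# Each estimate is the truth plus idiosyncratic, independent noise.
estimates = [TRUE_VALUE + rng.gauss(0.0, NOISE_SD) for _ in range(CROWD_SIZE)]

crowd_error = abs(mean(estimates) - TRUE_VALUE)
typical_individual_error = mean(abs(e - TRUE_VALUE) for e in estimates)

print(f"crowd error: {crowd_error:.2f}")
print(f"typical individual error: {typical_individual_error:.2f}")
```

The averaging benefit relies on the errors being independent and unbiased. A bias shared by the whole crowd would survive averaging untouched.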

Social information seeking is a field of research that involves studying situations, motivations, and methods for people seeking and sharing information in participatory online social sites, such as Yahoo! Answers, Answerbag, WikiAnswers and Twitter as well as building systems for supporting such activities. Highly related topics involve traditional and virtual reference services, information retrieval, information extraction, and knowledge representation.

Drazen Prelec is a professor of management science and economics in the MIT Sloan School of Management, and holds appointments in the Department of Economics and in the Department of Brain and Cognitive Sciences at MIT as well. He is a pioneer in the field of neuroeconomics.

Cultural consensus theory is an approach to information pooling that supports a framework for the measurement and evaluation of beliefs as cultural, that is, shared to some extent by a group of individuals. Cultural consensus models guide the aggregation of responses from individuals to estimate (1) the culturally appropriate answers to a series of related questions and (2) individual competence in answering those questions. The theory is applicable when there is sufficient agreement across people to assume that a single set of answers exists. The agreement between pairs of individuals is used to estimate individual cultural competence. Answers are estimated by weighting responses of individuals by their competence and then combining responses.

References

- Akst, Daniel (February 16, 2017). "The Wisdom of Even Wiser Crowds". The Wall Street Journal. Retrieved 16 May 2018.

- Dizikes, Peter (January 25, 2017). "Better wisdom from crowds". MIT News. Retrieved 16 May 2018.

- Prelec, Dražen; Seung, H. Sebastian; McCoy, John (2017). "A solution to the single-question crowd wisdom problem". Nature. 541 (7638): 532–535. Bibcode:2017Natur.541..532P. doi:10.1038/nature21054. ISSN 1476-4687. PMID 28128245. S2CID 4452604.

- Hosseini, Hadi; Mandal, Debmalya; Shah, Nisarg; Shi, Kevin (2021). "Surprisingly Popular Voting Recovers Rankings, Surprisingly!". Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence. pp. 245–251. arXiv:2105.09386. doi:10.24963/ijcai.2021/35. ISBN 978-0-9992411-9-6.