Related Research Articles

In mathematics, a linear map is a mapping between two vector spaces that preserves the operations of vector addition and scalar multiplication. The same names and the same definition are also used for the more general case of modules over a ring; see Module homomorphism.

In linear algebra, the rank of a matrix A is the dimension of the vector space generated by its columns. This corresponds to the maximal number of linearly independent columns of A. This, in turn, is identical to the dimension of the vector space spanned by its rows. Rank is thus a measure of the "nondegenerateness" of the system of linear equations and linear transformation encoded by A. There are multiple equivalent definitions of rank. A matrix's rank is one of its most fundamental characteristics.
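The equality of column rank and row rank can be checked on a small example. The following is a minimal sketch, assuming exact rational arithmetic and plain Gaussian elimination; the helper names `rank` and `transpose` and the sample matrix are illustrative choices, not taken from the source.

```python
from fractions import Fraction


def rank(matrix):
    """Return the rank of a matrix (a list of rows) by exact Gaussian elimination."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    if not rows:
        return 0
    n_cols = len(rows[0])
    pivot_row = 0
    for col in range(n_cols):
        # Look for a row at or below pivot_row with a nonzero entry in this column.
        pivot = next(
            (r for r in range(pivot_row, len(rows)) if rows[r][col] != 0), None
        )
        if pivot is None:
            continue  # no pivot in this column
        rows[pivot_row], rows[pivot] = rows[pivot], rows[pivot_row]
        # Clear the entries below the pivot.
        for r in range(pivot_row + 1, len(rows)):
            factor = rows[r][col] / rows[pivot_row][col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], rows[pivot_row])]
        pivot_row += 1
        if pivot_row == len(rows):
            break
    return pivot_row  # number of pivots found


def transpose(matrix):
    """Swap rows and columns."""
    return [list(col) for col in zip(*matrix)]


# Illustrative matrix: the second row is twice the first.
A = [[1, 2, 3],
     [2, 4, 6],
     [1, 0, 1]]

print(rank(A), rank(transpose(A)))  # 2 2
```

Computing the rank of the transpose gives the row rank, so the two printed numbers agreeing is one instance of the statement that column rank equals row rank.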

In mathematics, a tensor is an algebraic object that describes a (multilinear) relationship between sets of algebraic objects related to a vector space. Objects that tensors may map between include vectors and scalars, and even other tensors. There are many types of tensors, including scalars and vectors, dual vectors, multilinear maps between vector spaces, and even some operations such as the dot product. Tensors are defined independent of any basis, although they are often referred to by their components in a basis related to a particular coordinate system.

In mathematics, a symmetric matrix $M$ with real entries is positive-definite if the real number $x^{\mathsf{T}} M x$ is positive for every nonzero real column vector $x$, where $x^{\mathsf{T}}$ is the transpose of $x$. More generally, a Hermitian matrix $M$ is positive-definite if the real number $z^{*} M z$ is positive for every nonzero complex column vector $z$, where $z^{*}$ denotes the conjugate transpose of $z$.

In linear algebra, the column space of a matrix A is the span of its column vectors. The column space of a matrix is the image or range of the corresponding matrix transformation.

The moment of inertia, otherwise known as the mass moment of inertia, angular mass, second moment of mass, or most accurately, rotational inertia, of a rigid body is a quantity that determines the torque needed for a desired angular acceleration about a rotational axis, akin to how mass determines the force needed for a desired acceleration. It depends on the body's mass distribution and the axis chosen, with larger moments requiring more torque to change the body's rate of rotation.

In vector calculus, the Jacobian matrix of a vector-valued function in several variables is the matrix of all its first-order partial derivatives. When this matrix is square, that is, when the function takes the same number of variables as input as the number of vector components of its output, its determinant is referred to as the Jacobian determinant. Both the matrix and the determinant are often referred to simply as the Jacobian in literature.
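As a worked example (the choice of function is illustrative, not taken from the source), consider the polar-coordinate map $f(r, \theta) = (r\cos\theta,\ r\sin\theta)$. It takes two variables and has two output components, so its Jacobian matrix is square and the Jacobian determinant is defined:

$$
J_f(r, \theta) =
\begin{bmatrix}
\dfrac{\partial (r\cos\theta)}{\partial r} & \dfrac{\partial (r\cos\theta)}{\partial \theta} \\
\dfrac{\partial (r\sin\theta)}{\partial r} & \dfrac{\partial (r\sin\theta)}{\partial \theta}
\end{bmatrix}
=
\begin{bmatrix}
\cos\theta & -r\sin\theta \\
\sin\theta & r\cos\theta
\end{bmatrix},
\qquad
\det J_f(r, \theta) = r\cos^2\theta + r\sin^2\theta = r.
$$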

James Joseph Sylvester was an English mathematician. He made fundamental contributions to matrix theory, invariant theory, number theory, partition theory, and combinatorics. He played a leadership role in American mathematics in the later half of the 19th century as a professor at the Johns Hopkins University and as founder of the American Journal of Mathematics. At his death, he was a professor at Oxford University.

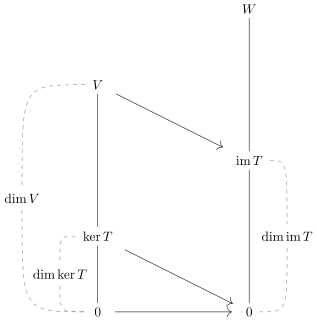

The rank–nullity theorem is a theorem in linear algebra, which asserts that the dimension of the domain of a linear map is the sum of its rank and its nullity.
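A small worked instance may help; the matrix below is an illustrative choice, not taken from the source. Consider the linear map $\mathbb{R}^3 \to \mathbb{R}^2$ given by

$$
A = \begin{bmatrix} 1 & 2 & 3 \\ 2 & 4 & 6 \end{bmatrix}.
$$

The second row is twice the first, so the rank is 1. The kernel consists of the solutions of $x + 2y + 3z = 0$, which is spanned by $(-2, 1, 0)$ and $(-3, 0, 1)$, so the nullity is 2. The dimension of the domain is then recovered as

$$
\operatorname{rank}(A) + \operatorname{nullity}(A) = 1 + 2 = 3 = \dim \mathbb{R}^3 .
$$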

In mathematics, the kernel of a linear map, also known as the null space or nullspace, is the linear subspace of the domain of the map which is mapped to the zero vector. That is, given a linear map L : V → W between two vector spaces V and W, the kernel of L is the vector space of all elements v of V such that L(v) = 0, where 0 denotes the zero vector in W, or more symbolically:

$$\ker(L) = \{\, v \in V \mid L(v) = \mathbf{0} \,\}.$$

Sylvester's law of inertia is a theorem in matrix algebra about certain properties of the coefficient matrix of a real quadratic form that remain invariant under a change of basis. Namely, if A is the symmetric matrix that defines the quadratic form, and S is any invertible matrix such that $D = SAS^{\mathsf{T}}$ is diagonal, then the number of negative elements in the diagonal of D is always the same, for all such S; and the same goes for the number of positive elements.
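To see the invariance on a concrete case (the matrices below are illustrative choices, not taken from the source), take the symmetric matrix $A$ and two different invertible matrices $S_1$ and $S_2$, each diagonalizing $A$ by congruence:

$$
A = \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix},
\qquad
S_1 = \begin{bmatrix} 1 & 1 \\ 1 & -1 \end{bmatrix},
\qquad
S_2 = \begin{bmatrix} \tfrac12 & \tfrac12 \\ 1 & -1 \end{bmatrix},
$$

$$
S_1 A S_1^{\mathsf{T}} = \begin{bmatrix} 2 & 0 \\ 0 & -2 \end{bmatrix},
\qquad
S_2 A S_2^{\mathsf{T}} = \begin{bmatrix} \tfrac12 & 0 \\ 0 & -2 \end{bmatrix}.
$$

The diagonal entries differ, but in both cases there is exactly one positive and one negative entry, as the law of inertia predicts.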

In mathematics, a matrix norm is a vector norm in a vector space whose elements (vectors) are matrices.

In mathematics, a Bézout matrix is a special square matrix associated with two polynomials, introduced by James Joseph Sylvester (1853) and Arthur Cayley (1857) and named after Étienne Bézout. Bézoutian may also refer to the determinant of this matrix, which is equal to the resultant of the two polynomials. Bézout matrices are sometimes used to test the stability of a given polynomial.

In mathematics, a Sylvester matrix is a matrix associated to two univariate polynomials with coefficients in a field or a commutative ring. The entries of the Sylvester matrix of two polynomials are coefficients of the polynomials. The determinant of the Sylvester matrix of two polynomials is their resultant, which is zero when the two polynomials have a common root or a non-constant common divisor.
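The link between the Sylvester matrix and common roots can be sketched in a few lines. This is a minimal illustration under stated assumptions: polynomials are coefficient lists with the highest degree first and nonzero leading coefficients, the copies of the first polynomial are placed above those of the second (one common convention), and the function names `sylvester_matrix`, `determinant` and `resultant` are hypothetical helpers, not part of any library.

```python
from fractions import Fraction


def sylvester_matrix(f, g):
    """Build the Sylvester matrix of f and g.

    Polynomials are coefficient lists, highest degree first,
    e.g. x^2 - 3x + 2 is [1, -3, 2].
    """
    m, n = len(f) - 1, len(g) - 1  # degrees of f and g
    size = m + n
    rows = []
    for i in range(n):  # n shifted copies of the coefficients of f
        rows.append([0] * i + list(f) + [0] * (size - m - 1 - i))
    for i in range(m):  # m shifted copies of the coefficients of g
        rows.append([0] * i + list(g) + [0] * (size - n - 1 - i))
    return rows


def determinant(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    det = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # singular matrix
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return det


def resultant(f, g):
    """Resultant of f and g as the determinant of their Sylvester matrix."""
    return determinant(sylvester_matrix(f, g))


f = [1, -3, 2]                 # x^2 - 3x + 2 = (x - 1)(x - 2)
print(resultant(f, [1, -1]))   # x - 1 shares the root 1 with f: prints 0
print(resultant(f, [1, -3]))   # x - 3 shares no root with f: prints 2
```

The first call returns zero because the two polynomials share the root 1; the second returns a nonzero value because they have no common root.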

Semidefinite programming (SDP) is a subfield of convex optimization concerned with the optimization of a linear objective function over the intersection of the cone of positive semidefinite matrices with an affine space, i.e., a spectrahedron.

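In graph theory, a branch of mathematics, the rank of an undirected graph has two unrelated definitions. Let n equal the number of vertices of the graph. In the matrix theory of graphs, the rank r is the rank of the adjacency matrix of the graph. In the matroid theory of graphs, the rank is n − c, where c is the number of connected components of the graph.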

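The nullity of a graph in the mathematical subject of graph theory can mean either of two unrelated numbers. If the graph has n vertices and m edges, then in the matrix theory of graphs the nullity is n − r, where r is the rank of the adjacency matrix, and in the matroid theory of graphs the nullity is m − n + c, where c is the number of connected components of the graph.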

In mathematics, a matrix is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns. For example, the dimension of the matrix below is 2 × 3, because there are two rows and three columns:

$$\begin{bmatrix} 1 & 9 & -13 \\ 20 & 5 & -6 \end{bmatrix}$$

Sylvester's theorem or the Sylvester theorem may refer to any of several theorems named after James Joseph Sylvester:

References

- Dicks, Warren; Sontag, Eduardo D. (1978), "Sylvester domains", Journal of Pure and Applied Algebra, 13 (3): 243–275, doi:10.1016/0022-4049(78)90011-7, ISSN 0022-4049, MR 0509164

- Sylvester, James Joseph (1884), "On involutants and other allied species of invariants to matrix systems", Johns Hopkins University Circulars, III: 9–12, 34–35. Reprinted in collected papers, volume IV, paper 15