In number theory, integer factorization is the decomposition, of a positive integer into a product of integers. If the factors are further restricted to be prime numbers, the process is called prime factorization, and includes the test whether the given integer is prime.

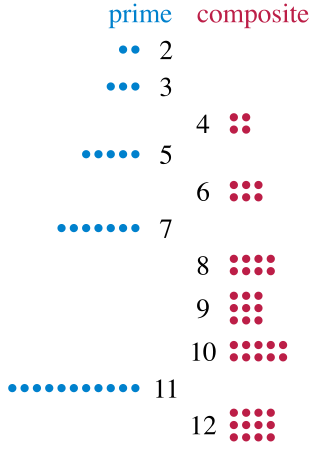

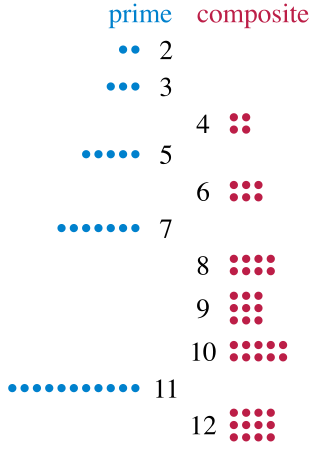

A prime number is a natural number greater than 1 that is not a product of two smaller natural numbers. A natural number greater than 1 that is not prime is called a composite number. For example, 5 is prime because the only ways of writing it as a product, 1 × 5 or 5 × 1, involve 5 itself. However, 4 is composite because it is a product (2 × 2) in which both numbers are smaller than 4. Primes are central in number theory because of the fundamental theorem of arithmetic: every natural number greater than 1 is either a prime itself or can be factorized as a product of primes that is unique up to their order.

RSA (Rivest–Shamir–Adleman) is a public-key cryptosystem, one of the oldest, that is widely used for secure data transmission. The acronym "RSA" comes from the surnames of Ron Rivest, Adi Shamir and Leonard Adleman, who publicly described the algorithm in 1977. An equivalent system was developed secretly in 1973 at Government Communications Headquarters (GCHQ) by the English mathematician Clifford Cocks. That system was declassified in 1997.

In mathematics, the sieve of Eratosthenes is an ancient algorithm for finding all prime numbers up to any given limit.

In number theory, the general number field sieve (GNFS) is the most efficient classical algorithm known for factoring integers larger than 10100. Heuristically, its complexity for factoring an integer n (consisting of ⌊log2n⌋ + 1 bits) is of the form

In mathematics, for given real numbers a and b, the logarithm logb a is a number x such that bx = a. Analogously, in any group G, powers bk can be defined for all integers k, and the discrete logarithm logb a is an integer k such that bk = a. In number theory, the more commonly used term is index: we can write x = indra (mod m) (read "the index of a to the base r modulo m") for rx ≡ a (mod m) if r is a primitive root of m and gcd(a,m) = 1.

The AKS primality test is a deterministic primality-proving algorithm created and published by Manindra Agrawal, Neeraj Kayal, and Nitin Saxena, computer scientists at the Indian Institute of Technology Kanpur, on August 6, 2002, in an article titled "PRIMES is in P". The algorithm was the first one which is able to determine in polynomial time, whether a given number is prime or composite and this without relying on mathematical conjectures such as the generalized Riemann hypothesis. The proof is also notable for not relying on the field of analysis. In 2006 the authors received both the Gödel Prize and Fulkerson Prize for their work.

Trial division is the most laborious but easiest to understand of the integer factorization algorithms. The essential idea behind trial division tests to see if an integer n, the integer to be factored, can be divided by each number in turn that is less than n. For example, for the integer n = 12, the only numbers that divide it are 1, 2, 3, 4, 6, 12. Selecting only the largest powers of primes in this list gives that 12 = 3 × 4 = 3 × 22.

Pollard's p − 1 algorithm is a number theoretic integer factorization algorithm, invented by John Pollard in 1974. It is a special-purpose algorithm, meaning that it is only suitable for integers with specific types of factors; it is the simplest example of an algebraic-group factorisation algorithm.

The quadratic sieve algorithm (QS) is an integer factorization algorithm and, in practice, the second fastest method known. It is still the fastest for integers under 100 decimal digits or so, and is considerably simpler than the number field sieve. It is a general-purpose factorization algorithm, meaning that its running time depends solely on the size of the integer to be factored, and not on special structure or properties. It was invented by Carl Pomerance in 1981 as an improvement to Schroeppel's linear sieve.

In number theory, a branch of mathematics, the special number field sieve (SNFS) is a special-purpose integer factorization algorithm. The general number field sieve (GNFS) was derived from it.

In cryptography and number theory, TWIRL is a hypothetical hardware device designed to speed up the sieving step of the general number field sieve integer factorization algorithm. During the sieving step, the algorithm searches for numbers with a certain mathematical relationship. In distributed factoring projects, this is the step that is parallelized to a large number of processors.

In number theory, an n-smooth (or n-friable) number is an integer whose prime factors are all less than or equal to n. For example, a 7-smooth number is a number whose every prime factor is at most 7, so 49 = 72 and 15750 = 2 × 32 × 53 × 7 are both 7-smooth, while 11 and 702 = 2 × 33 × 13 are not 7-smooth. The term seems to have been coined by Leonard Adleman. Smooth numbers are especially important in cryptography, which relies on factorization of integers. The 2-smooth numbers are just the powers of 2, while 5-smooth numbers are known as regular numbers.

In computational number theory, the index calculus algorithm is a probabilistic algorithm for computing discrete logarithms. Dedicated to the discrete logarithm in where is a prime, index calculus leads to a family of algorithms adapted to finite fields and to some families of elliptic curves. The algorithm collects relations among the discrete logarithms of small primes, computes them by a linear algebra procedure and finally expresses the desired discrete logarithm with respect to the discrete logarithms of small primes.

In mathematics, the sieve of Atkin is a modern algorithm for finding all prime numbers up to a specified integer. Compared with the ancient sieve of Eratosthenes, which marks off multiples of primes, the sieve of Atkin does some preliminary work and then marks off multiples of squares of primes, thus achieving a better theoretical asymptotic complexity. It was created in 2003 by A. O. L. Atkin and Daniel J. Bernstein.

In mathematics, the rational sieve is a general algorithm for factoring integers into prime factors. It is a special case of the general number field sieve. While it is less efficient than the general algorithm, it is conceptually simpler. It serves as a helpful first step in understanding how the general number field sieve works.

Integer factorization is the process of determining which prime numbers divide a given positive integer. Doing this quickly has applications in cryptography. The difficulty depends on both the size and form of the number and its prime factors; it is currently very difficult to factorize large semiprimes.

In mathematics the Function Field Sieve is one of the most efficient algorithms to solve the Discrete Logarithm Problem (DLP) in a finite field. It has heuristic subexponential complexity. Leonard Adleman developed it in 1994 and then elaborated it together with M. D. Huang in 1999. Previous work includes the work of D. Coppersmith about the DLP in fields of characteristic two.

Knapsack cryptosystems are cryptosystems whose security is based on the hardness of solving the knapsack problem. They remain quite unpopular because simple versions of these algorithms have been broken for several decades. However, that type of cryptosystem is a good candidate for post-quantum cryptography.

In mathematics, the sieve of Pritchard is an algorithm for finding all prime numbers up to a specified bound. Like the ancient sieve of Eratosthenes, it has a simple conceptual basis in number theory. It is especially suited to quick hand computation for small bounds.