Test environment management (TEM) is a function in a software delivery process which aids the software testing cycle by providing a validated, stable and usable test environment to execute the test scenarios or replicate bugs.

As with a scientific experiment, repeatability and control of variables are essential in testing. A key component of this control is managing the environment in which testing takes place. This environment includes the underlying hardware and software that support the software under test: servers, operating systems, communications tools, databases, cloud ecosystems and browsers.
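One way to picture this kind of control is a check that compares the environment a test runs in against a recorded baseline. The following is a minimal sketch only: the function names `capture_environment` and `find_drift` and the choice of recorded facts are assumptions made for illustration, not part of any particular TEM tool.

```python
import platform
import sys


def capture_environment():
    """Collect a few facts about the machine the tests run on (illustrative subset)."""
    return {
        "os": platform.system(),
        "machine": platform.machine(),
        "python": f"{sys.version_info.major}.{sys.version_info.minor}",
    }


def find_drift(expected, actual):
    """Return {key: (expected_value, actual_value)} for every value that differs."""
    return {
        key: (want, actual.get(key))
        for key, want in expected.items()
        if actual.get(key) != want
    }


# Record a baseline once, then compare later runs against it.
baseline = capture_environment()
drift = find_drift(baseline, capture_environment())
print(drift)  # an empty dict when nothing has changed
```

A real environment baseline would usually cover far more (database versions, installed packages, network endpoints), but the comparison step stays the same.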

In early testing stages, little or no formal management of environments is required. For example, programmers typically perform their testing within standardised IDEs, which provide control by default. At later stages, however, test execution tends to span multiple technologies and development streams and typically involves multiple testers or teams of testers. In these circumstances, individual testers cannot reasonably be expected to exercise control over the technical landscape. This is where the need for a formal test environment management function arises.

The activities under the TEM function include:

Many teams use spreadsheets rather than dedicated tools for the first two areas when the volume of data is small. For larger volumes, specialized tools are recommended.

Software testing is the act of examining the artifacts and the behavior of the software under test by validation and verification. Software testing can also provide an objective, independent view of the software to allow the business to appreciate and understand the risks of software implementation. Test techniques include, but are not necessarily limited to:

In software engineering, version control is a class of systems responsible for managing changes to computer programs, documents, large web sites, or other collections of information. Version control is a component of software configuration management.

In software testing, test automation is the use of software separate from the software being tested to control the execution of tests and the comparison of actual outcomes with predicted outcomes. Test automation can automate some repetitive but necessary tasks in a formalized testing process already in place, or perform additional testing that would be difficult to do manually. Test automation is critical for continuous delivery and continuous testing.
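The core loop described here, running the software and comparing actual outcomes with predicted ones, can be sketched in a few lines. This is a simplified illustration: `add` is a hypothetical stand-in for the software under test, and `run_cases` is an assumed helper name rather than the API of any real test framework.

```python
def add(a, b):
    """Hypothetical stand-in for the software under test."""
    return a + b


# Each case pairs the input arguments with the predicted outcome.
CASES = [((1, 2), 3), ((-1, 1), 0), ((0, 0), 0)]


def run_cases(func, cases):
    """Run every case and return a list of (args, expected, actual, passed) tuples."""
    results = []
    for args, expected in cases:
        actual = func(*args)
        results.append((args, expected, actual, actual == expected))
    return results


results = run_cases(add, CASES)
failures = [r for r in results if not r[3]]
print(f"{len(results) - len(failures)} passed, {len(failures)} failed")
```

In practice a framework such as `unittest` or pytest handles discovery, reporting and isolation, but the comparison of actual against expected results is the same idea.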

Reliability engineering is a sub-discipline of systems engineering that emphasizes the ability of equipment to function without failure. Reliability describes the ability of a system or component to function under stated conditions for a specified period of time. Reliability is closely related to availability, which is typically described as the ability of a component or system to function at a specified moment or interval of time.

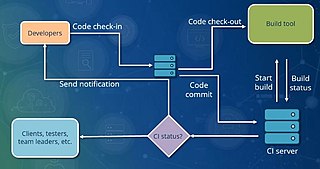

In software engineering, continuous integration (CI) is the practice of merging all developers' working copies to a shared mainline several times a day. Nowadays it is typically implemented in such a way that it triggers an automated build with testing. Grady Booch first proposed the term CI in his 1991 method, although he did not advocate integrating several times a day. Extreme programming (XP) adopted the concept of CI and did advocate integrating more than once per day – perhaps as many as tens of times per day.

In business and accounting, information technology controls are specific activities performed by persons or systems designed to ensure that business objectives are met. They are a subset of an enterprise's internal control. IT control objectives relate to the confidentiality, integrity, and availability of data and the overall management of the IT function of the business enterprise. IT controls are often described in two categories: IT general controls (ITGC) and IT application controls. ITGC include controls over the Information Technology (IT) environment, computer operations, access to programs and data, program development and program changes. IT application controls refer to transaction processing controls, sometimes called "input-processing-output" controls. Information technology controls have been given increased prominence in corporations listed in the United States by the Sarbanes-Oxley Act. The COBIT Framework is a widely used framework promulgated by the IT Governance Institute, which defines a variety of ITGC and application control objectives and recommended evaluation approaches. IT departments in organizations are often led by a chief information officer (CIO), who is responsible for ensuring effective information technology controls are utilized.

Computer-aided production engineering (CAPE) is a relatively new and significant branch of engineering. Global manufacturing has changed the environment in which goods are produced. Meanwhile, the rapid development of electronics and communication technologies has required design and manufacturing to keep pace.

An issue tracking system is a computer software package that manages and maintains lists of issues. Issue tracking systems are generally used in collaborative settings, especially in large or distributed collaborations, but can also be employed by individuals as part of a time management or personal productivity regimen. These systems often encompass resource allocation, time accounting, priority management, and oversight workflow in addition to implementing a centralized issue registry.

Game testing, a subset of game development, is a software testing process for quality control of video games. The primary function of game testing is the discovery and documentation of software defects. Interactive entertainment software testing is a highly technical field requiring computing expertise, analytic competence, critical evaluation skills, and endurance. In recent years the field of game testing has come under fire for being extremely strenuous and unrewarding, both financially and emotionally.

Database security concerns the use of a broad range of information security controls to protect databases against compromises of their confidentiality, integrity and availability. It involves various types or categories of controls, such as technical, procedural/administrative and physical.

WET Web Tester is a web testing tool that drives an IE browser directly, so the automated testing done is equivalent to how a user would drive the web pages. The tool allows a user to perform all the operations required for testing web applications – like automatically clicking a link, entering text in a text field, clicking a button, etc. One may also perform various checks as a part of the testing process by using Checkpoints. The latest version of WET is 1.0.0.

Software asset management (SAM) is a business practice that involves managing and optimizing the purchase, deployment, maintenance, utilization, and disposal of software applications within an organization. According to ITIL, SAM is defined as “…all of the infrastructure and processes necessary for the effective management, control, and protection of the software assets…throughout all stages of their lifecycle.” Fundamentally intended to be part of an organization's information technology business strategy, the goals of SAM are to reduce information technology (IT) costs and limit business and legal risk related to the ownership and use of software, while maximizing IT responsiveness and end-user productivity. SAM is particularly important for large corporations regarding redistribution of licenses and managing legal risks associated with software ownership and expiration. SAM technologies track license expiration, thus allowing the company to function ethically and within software compliance regulations. This can be important for both eliminating legal costs associated with license agreement violations and as part of a company's reputation management strategy. Both are important forms of risk management and are critical for large corporations' long-term business strategies.

In software engineering, graphical user interface testing is the process of testing a product's graphical user interface (GUI) to ensure it meets its specifications. This is normally done through the use of a variety of test cases.

Azure DevOps Server is a Microsoft product that provides version control, reporting, requirements management, project management, automated builds, testing and release management capabilities. It covers the entire application lifecycle and enables DevOps capabilities. Azure DevOps can be used as a back-end to numerous integrated development environments (IDEs) but is tailored for Microsoft Visual Studio and Eclipse on all platforms.

AnthillPro is a software tool originally developed and released as one of the first continuous integration servers. AnthillPro automates the process of building code into software projects and testing it to verify that project quality has been maintained. Software developers are able to identify bugs and errors earlier by using AnthillPro to track, collate, and test changes in real time to a collectively maintained body of computer code.

Production support covers the practices and disciplines of supporting the IT systems and applications that are currently being used by end users. A production support person or team is responsible for monitoring the production servers and scheduled jobs, managing incidents, and receiving incidents and requests from end users, analyzing them and either responding to the end user with a solution or escalating them to other IT teams. These teams may include developers, system engineers and database administrators.

A test strategy is an outline that describes the testing approach of the software development cycle. The purpose of a test strategy is to provide a rational deduction from organizational, high-level objectives to actual test activities to meet those objectives from a quality assurance perspective. The creation and documentation of a test strategy should be done in a systematic way to ensure that all objectives are fully covered and understood by all stakeholders. It should also frequently be reviewed, challenged and updated as the organization and the product evolve over time. Furthermore, a test strategy should also aim to align different stakeholders of quality assurance in terms of terminology, test and integration levels, roles and responsibilities, traceability, planning of resources, etc.

In software engineering, service virtualization or service virtualisation is a method to emulate the behavior of specific components in heterogeneous component-based applications such as API-driven applications, cloud-based applications and service-oriented architectures. It is used to provide software development and QA/testing teams access to dependent system components that are needed to exercise an application under test (AUT), but are unavailable or difficult to access for development and testing purposes. With the behavior of the dependent components "virtualized", testing and development can proceed without accessing the actual live components. Service virtualization is recognized by vendors, industry analysts, and industry publications as being different from mocking.
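As a rough illustration of the idea, the sketch below starts a tiny local HTTP server that stands in for an unavailable dependency, so that a client under test can call it over the network as it would call the real component. Everything here is assumed for the example: the "inventory" service, the `FakeInventoryHandler` and `start_virtual_service` names, and the response shape are hypothetical, and commercial service virtualization tools do considerably more (recording traffic, modelling latency, stateful behavior).

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeInventoryHandler(BaseHTTPRequestHandler):
    """Answers every GET with a canned JSON reply (hypothetical inventory service)."""

    def do_GET(self):
        body = json.dumps({"path": self.path, "in_stock": True}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Keep the example quiet; the default implementation logs to stderr.
        pass


def start_virtual_service():
    """Start the stand-in service on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), FakeInventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


server = start_virtual_service()
url = f"http://127.0.0.1:{server.server_port}/items/42"

# Bypass any configured proxy so the request goes straight to localhost.
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
with opener.open(url) as response:
    reply = json.loads(response.read())

server.shutdown()
server.server_close()
print(reply)
```

Because the stand-in is reached over HTTP rather than injected into the code, the application under test needs no changes beyond pointing its configuration at the local address.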

This article discusses a set of tactics useful in software testing. It is intended as a comprehensive list of tactical approaches to software quality assurance (more widely and colloquially known as quality assurance) and the general application of the test method.

In IT operations, software performance management is the subset of tools and processes that deals with the collection, monitoring, and analysis of performance metrics. These metrics can indicate to IT staff whether a system component is up and running (available), or whether the component is behaving in an abnormal way that would impair its ability to function correctly, much as a doctor may measure pulse, respiration, and temperature to assess how the human body is "operating". This type of monitoring originated with computer network components, but has since expanded to other components such as servers and storage devices, as well as groups of components organized to deliver specific services (business service management).