Analysis of variance (ANOVA) is a collection of statistical models and their associated estimation procedures used to analyze the differences among group means in a sample. ANOVA was developed by statistician and evolutionary biologist Ronald Fisher. In the ANOVA setting, the observed variance in a particular variable is partitioned into components attributable to different sources of variation. In its simplest form, ANOVA provides a statistical test of whether the population means of several groups are equal, and therefore generalizes the t-test to more than two groups. This makes it useful for testing three or more group means for statistically significant differences. It is conceptually similar to running multiple two-sample t-tests, but because it uses a single overall test rather than many pairwise ones, it is more conservative and results in fewer type I errors, which suits it to a wide range of practical problems.
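As a rough illustration of the variance partitioning described above, the following sketch computes the F statistic for the simplest case, a one-way design. The function name `one_way_anova_f` and the sample data are hypothetical and chosen only for illustration. The sketch assumes every group is non-empty and that the within-group variation is not zero.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric samples.

    Hypothetical helper for illustration; assumes non-empty groups and
    non-zero within-group variation.
    """
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of each observation around its own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)

    # F is the ratio of the two mean squares.
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Made-up data: three groups of three observations each.
f_stat, df_b, df_w = one_way_anova_f([[1, 2, 3], [2, 3, 4], [3, 4, 5]])
print(f_stat, df_b, df_w)
```

A large F relative to the F distribution with the returned degrees of freedom suggests that at least one group mean differs. Obtaining a p-value would require an F-distribution routine, which this sketch leaves out.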

Sociotechnical systems (STS) in organizational development is an approach to complex organizational work design that recognizes the interaction between people and technology in workplaces. The term also refers to the interaction between society's complex infrastructures and human behaviour. In this sense, society itself, and most of its substructures, are complex sociotechnical systems. The term sociotechnical systems was coined by Eric Trist, Ken Bamforth and Fred Emery, in the World War II era, based on their work with workers in English coal mines at the Tavistock Institute in London.

Usability is the ease of use and learnability of a human-made object such as a tool or device. In software engineering, usability is the degree to which software can be used by specified consumers to achieve quantified objectives with effectiveness, efficiency, and satisfaction in a quantified context of use.

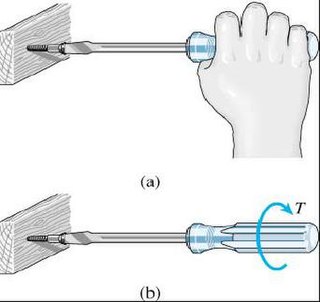

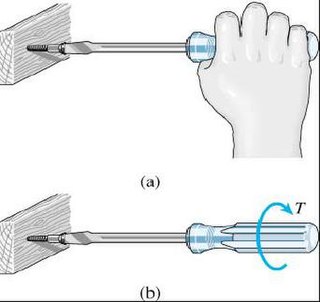

Interaction design, often abbreviated as IxD, is "the practice of designing interactive digital products, environments, systems, and services." Beyond the digital aspect, interaction design is also useful when creating physical (non-digital) products, exploring how a user might interact with them. Common topics of interaction design include design, human–computer interaction, and software development. While interaction design has an interest in form, its main area of focus rests on behavior. Rather than analyzing how things are, interaction design synthesizes and imagines things as they could be. This element of interaction design is what characterizes IxD as a design field as opposed to a science or engineering field.

The International Nuclear and Radiological Event Scale (INES) was introduced in 1990 by the International Atomic Energy Agency (IAEA) in order to enable prompt communication of safety-significant information in case of nuclear accidents.

Kim Vicente is an inactive professor of Mechanical and Industrial Engineering at the University of Toronto. He was previously a researcher, teacher, and author in the field of human factors. He is best known for his two books: The Human Factor and Cognitive Work Analysis.

S.T.A.L.K.E.R.: Shadow of Chernobyl is a first-person shooter survival horror video game developed by Ukrainian game developer GSC Game World and published by THQ. The game is set in an alternative reality, where a second nuclear disaster occurs at the Chernobyl Nuclear Power Plant Exclusion Zone in the near future and causes strange changes in the area around it. The game has a non-linear storyline and features gameplay elements such as trading and two-way communication with NPCs. The game includes role-playing and first-person shooter elements. In S.T.A.L.K.E.R., the player assumes the identity of an amnesiac "Stalker" dubbed "The Marked One", an illegal explorer and artifact scavenger in "The Zone". "The Zone" is the location of an alternate reality version of the Zone of alienation surrounding the Chernobyl Power Plant after a fictitious second meltdown, which further contaminated the surrounding area with radiation, and caused strange otherworldly changes in local fauna, flora, and the laws of physics. "Stalker" in the context of the video game refers to the older meaning of the word as a tracker and hunter of game or guide.

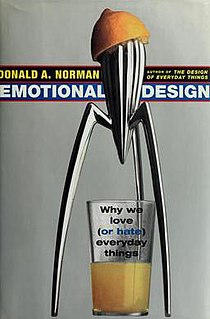

Emotional Design is both the title of a book by Donald Norman and of the concept it represents.

An error-tolerant design is one that does not unduly penalize user or human errors. It is the human equivalent of fault-tolerant design, which allows equipment to continue functioning in the presence of hardware faults, such as a "limp-in" mode for an automobile electronics unit that would be employed if something like the oxygen sensor failed.

In statistics, a full factorial experiment is an experiment whose design consists of two or more factors, each with discrete possible values or "levels", and whose experimental units take on all possible combinations of these levels across all such factors. A full factorial design may also be called a fully crossed design. Such an experiment allows the investigator to study the effect of each factor on the response variable, as well as the effects of interactions between factors on the response variable.
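To make "all possible combinations of these levels" concrete, the sketch below enumerates the runs of a full factorial design using the standard library's `itertools.product`. The function name `full_factorial` and the factor names and levels are hypothetical, chosen only for illustration.

```python
from itertools import product

def full_factorial(factors):
    """Return every combination of levels as a list of dicts.

    `factors` maps a factor name to its list of levels.
    Hypothetical helper for illustration.
    """
    names = list(factors)
    level_lists = [factors[name] for name in names]
    # product() yields one tuple per combination of levels.
    return [dict(zip(names, combo)) for combo in product(*level_lists)]

# Made-up example: one factor with 2 levels, one with 3 levels.
runs = full_factorial({
    "temperature": ["low", "high"],
    "pressure": [1, 2, 3],
})
for run in runs:
    print(run)
```

The number of runs is the product of the level counts, here 2 × 3 = 6. That count grows quickly as factors are added.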

The Chernobyl disaster, also referred to as the Chernobyl accident, was a catastrophic nuclear accident. It occurred on 25–26 April 1986 in the No. 4 light water graphite moderated reactor at the Chernobyl Nuclear Power Plant near the now-abandoned town of Pripyat, in northern Ukrainian Soviet Socialist Republic, Soviet Union, approximately 104 km (65 mi) north of Kiev.

An incident is an event that could lead to loss of, or disruption to, an organization's operations, services or functions. Incident management (IcM) is a term describing the activities of an organization to identify, analyze, and correct hazards to prevent a future re-occurrence. These incidents within a structured organization are normally dealt with by either an incident response team (IRT), an incident management team (IMT), or Incident Command System (ICS). Without effective incident management, an incident can disrupt business operations, information security, IT systems, employees, customers, or other vital business functions.

The 1986 Chernobyl disaster triggered the release of substantial amounts of radioactivity into the atmosphere in the form of both particulate and gaseous radioisotopes. It is one of the most significant unintentional releases of radioactivity into the environment to the present day.

Nuclear safety is defined by the International Atomic Energy Agency (IAEA) as "The achievement of proper operating conditions, prevention of accidents or mitigation of accident consequences, resulting in protection of workers, the public and the environment from undue radiation hazards". The IAEA defines nuclear security as "The prevention and detection of and response to, theft, sabotage, unauthorized access, illegal transfer or other malicious acts involving nuclear material, other radioactive substances or their associated facilities".

A system accident is an "unanticipated interaction of multiple failures" in a complex system. This complexity can be either technological or organizational, and is frequently both. A system accident can be very easy to see in hindsight, but difficult in foresight because there are too many different action pathways to seriously consider all of them.

Normal Accidents: Living with High-Risk Technologies is a 1984 book by Yale sociologist Charles Perrow, which provides a detailed analysis of complex systems from a sociological perspective. It was the first to "propose a framework for characterizing complex technological systems such as air traffic, marine traffic, chemical plants, dams, and especially nuclear power plants according to their riskiness". Perrow argues that multiple and unexpected failures are built into society's complex and tightly coupled systems. Such accidents are unavoidable and cannot be designed around.

The following is a glossary of terms. It is not intended to be all-inclusive.

Human–computer interaction (HCI) researches the design and use of computer technology, focused on the interfaces between people (users) and computers. Researchers in the field of HCI both observe the ways in which humans interact with computers and design technologies that let humans interact with computers in novel ways.

As a field of research, human–computer interaction is situated at the intersection of computer science, behavioral sciences, design, media studies, and several other fields of study. The term was popularized by Stuart K. Card, Allen Newell, and Thomas P. Moran in their seminal 1983 book, The Psychology of Human–Computer Interaction, although the authors first used the term in 1980 and the first known use was in 1975. The term connotes that, unlike other tools with only limited uses, a computer has many uses and this takes place as an open-ended dialog between the user and the computer. The notion of dialog likens human–computer interaction to human-to-human interaction, an analogy which is crucial to theoretical considerations in the field.

Human factors are the physical or cognitive properties of individuals, or social behavior specific to humans, that influence the functioning of technological systems as well as human-environment equilibria. The safety of underwater diving operations can be improved by reducing the frequency of human error and the consequences when it does occur. Human error can be defined as an individual's deviation from acceptable or desirable practice which culminates in undesirable or unexpected results.

Dive safety is primarily a function of four factors: the environment, equipment, individual diver performance and dive team performance. The water is a harsh and alien environment which can impose severe physical and psychological stress on a diver. The remaining factors must be controlled and coordinated so the diver can overcome the stresses imposed by the underwater environment and work safely. Diving equipment is crucial because it provides life support to the diver, but the majority of dive accidents are caused by individual diver panic and an associated degradation of the individual diver's performance. - M.A. Blumenberg, 1996

Human factors and ergonomics is the application of psychological and physiological principles to the design of products, processes, and systems. The goal of human factors is to reduce human error, increase productivity, and enhance safety and comfort with a specific focus on the interaction between the human and the thing of interest.