In computing, a database is an organized collection of data stored and accessed electronically from a computer system. Where databases are more complex they are often developed using formal design and modeling techniques.

A relational database is a digital database based on the relational model of data, as proposed by E. F. Codd in 1970. A system used to maintain relational databases is a relational database management system (RDBMS). Many relational database systems have an option of using the SQL for querying and maintaining the database.

SQL is a domain-specific language used in programming and designed for managing data held in a relational database management system (RDBMS), or for stream processing in a relational data stream management system (RDSMS). It is particularly useful in handling structured data, i.e. data incorporating relations among entities and variables. SQL offers two main advantages over older read–write APIs such as ISAM or VSAM. Firstly, it introduced the concept of accessing many records with one single command. Secondly, it eliminates the need to specify how to reach a record, e.g. with or without an index.

An object–relational database (ORD), or object–relational database management system (ORDBMS), is a database management system (DBMS) similar to a relational database, but with an object-oriented database model: objects, classes and inheritance are directly supported in database schemas and in the query language. In addition, just as with pure relational systems, it supports extension of the data model with custom data types and methods.

Db2 is a family of data management products, including database servers, developed by IBM. They initially supported the relational model, but were extended to support object–relational features and non-relational structures like JSON and XML. The brand name was originally styled as DB/2, then DB2 until 2017 and finally changed to its present form.

Oracle Rdb is a relational database management system for the OpenVMS operating system. It was originally released by Digital Equipment Corporation (DEC) in 1984 as VAX Rdb/VMS.

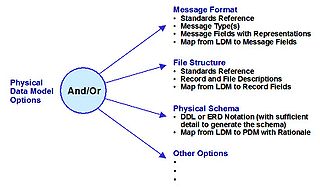

A physical data model is a representation of a data design as implemented, or intended to be implemented, in a database management system. In the lifecycle of a project it typically derives from a logical data model, though it may be reverse-engineered from a given database implementation. A complete physical data model will include all the database artifacts required to create relationships between tables or to achieve performance goals, such as indexes, constraint definitions, linking tables, partitioned tables or clusters. Analysts can usually use a physical data model to calculate storage estimates; it may include specific storage allocation details for a given database system.

ArcSDE is a server-software sub-system that aims to enable the usage of Relational Database Management Systems for spatial data. The spatial data may then be used as part of a geodatabase.

In database computing, Oracle Real Application Clusters (RAC) — an option for the Oracle Database software produced by Oracle Corporation and introduced in 2001 with Oracle9i — provides software for clustering and high availability in Oracle database environments. Oracle Corporation includes RAC with the Enterprise Edition, provided the nodes are clustered using Oracle Clusterware.

Virtuoso Universal Server is a middleware and database engine hybrid that combines the functionality of a traditional relational database management system (RDBMS), object–relational database (ORDBMS), virtual database, RDF, XML, free-text, web application server and file server functionality in a single system. Rather than have dedicated servers for each of the aforementioned functionality realms, Virtuoso is an "universal server"; it enables a single multithreaded server process that implements multiple protocols. The free and open source edition of Virtuoso Universal Server is also known as OpenLink Virtuoso. The software has been developed by OpenLink Software with Kingsley Uyi Idehen and Orri Erling as the chief software architects.

In computing, the term data warehouse appliance (DWA) was coined by Foster Hinshaw for a computer architecture for data warehouses (DW) specifically marketed for big data analysis and discovery that is simple to use and high performance for the workload. A DWA includes an integrated set of servers, storage, operating systems, and databases.

ALTIBASE is a hybrid database, relational open source database management system manufactured by Altibase Corporation. The software comes with a hybrid architecture which allows it to access both memory-resident and disk-resident tables using single interface. It supports both synchronous and asynchronous replication and offers real-time ACID compliance. Support is also offered for a variety of SQL standards and programming languages. Other important capabilities include data import and export, data encryption for security, multiple data access command sets, materialized view and temporary tables, and others.

Exasol is an analytics database management software company. Its product is called Exasol, an in-memory, column-oriented, relational database management system

Michael Ralph Stonebraker is a computer scientist specializing in database systems. Through a series of academic prototypes and commercial startups, Stonebraker's research and products are central to many relational databases. He is also the founder of many database companies, including Ingres Corporation, Illustra, Paradigm4, StreamBase Systems, Tamr, Vertica and VoltDB, and served as chief technical officer of Informix. For his contributions to database research, Stonebraker received the 2014 Turing Award, often described as "the Nobel Prize for computing."

VoltDB is an in-memory database designed by Michael Stonebraker, Sam Madden, and Daniel Abadi.

The following is provided as an overview of and topical guide to databases:

Array database management systems provide database services specifically for arrays, that is: homogeneous collections of data items, sitting on a regular grid of one, two, or more dimensions. Often arrays are used to represent sensor, simulation, image, or statistics data. Such arrays tend to be Big Data, with single objects frequently ranging into Terabyte and soon Petabyte sizes; for example, today's earth and space observation archives typically grow by Terabytes a day. Array databases aim at offering flexible, scalable storage and retrieval on this information category.

JEUS is a Korean Web application server which is developed by TmaxSoft. JEUS provides the web application server component of TmaxSoft's middleware-tier framework solution. It has been widely adopted in Korea where it holds the largest (42.1%) share of the market.

The following outline is provided as an overview of and topical guide to MySQL:

Database scalability is the ability of a database to handle changing demands by adding/removing resources. Databases have adopted a host of techniques to cope.