Uses

Trace identities are frequently used in the invariant theory of matrices to find the generators and relations of the ring of invariants, and therefore are useful in answering questions similar to that posed by Hilbert's fourteenth problem.

In mathematics, a trace identity is any equation involving the trace of a matrix.

Trace identities are invariant under simultaneous conjugation.
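As a minimal sketch of this invariance, the following pure-Python check takes the familiar identity tr(AB) = tr(BA) and confirms that the common value does not change when A and B are both conjugated by the same invertible matrix P. The 2 × 2 matrices and the helper names (`matmul`, `trace`, `inverse_2x2`, `conjugate`) are illustrative assumptions, not taken from any source.

```python
from fractions import Fraction

def matmul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def trace(X):
    """Sum of the entries on the main diagonal."""
    return sum(X[i][i] for i in range(len(X)))

def inverse_2x2(P):
    """Exact inverse of an invertible 2 x 2 matrix."""
    (a, b), (c, d) = P
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

def conjugate(P, X):
    """Return P X P^(-1) for a 2 x 2 matrix X."""
    return matmul(matmul(P, X), inverse_2x2(P))

# Hypothetical example matrices, chosen only for illustration.
A = [[1, 2], [3, 4]]
B = [[0, 1], [5, -2]]
P = [[2, 1], [1, 1]]

tr_ab = trace(matmul(A, B))
tr_ba = trace(matmul(B, A))
# Conjugate A and B simultaneously by the same P.
tr_conj = trace(matmul(conjugate(P, A), conjugate(P, B)))

print(tr_ab, tr_ba, tr_conj)
```

All three printed values agree, because the factors P^(-1) P cancel inside the product and the trace is unchanged by the remaining outer conjugation.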

In mathematics, the determinant is a scalar value that is a certain function of the entries of a square matrix. The determinant of a matrix A is commonly denoted det(A), det A, or |A|. Its value characterizes some properties of the matrix and of the linear map that the matrix represents in a given basis. In particular, the determinant is nonzero if and only if the matrix is invertible and the corresponding linear map is an isomorphism. The determinant of a product of matrices is the product of their determinants.

In mathematical physics and mathematics, the Pauli matrices are a set of three 2 × 2 complex matrices that are Hermitian, involutory and unitary. Usually indicated by the Greek letter sigma, they are occasionally denoted by tau when used in connection with isospin symmetries.
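The three properties named above can be checked directly. The sketch below writes the Pauli matrices in their usual convention and tests each one for being Hermitian, involutory and unitary; the helper names `matmul` and `dagger` are assumptions introduced for this illustration.

```python
def matmul(X, Y):
    """Multiply two 2 x 2 matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def dagger(X):
    """Conjugate transpose of a 2 x 2 matrix."""
    return [[X[j][i].conjugate() for j in range(2)] for i in range(2)]

I2 = [[1, 0], [0, 1]]
sigma_x = [[0, 1], [1, 0]]
sigma_y = [[0, -1j], [1j, 0]]
sigma_z = [[1, 0], [0, -1]]

results = {}
for name, s in [("x", sigma_x), ("y", sigma_y), ("z", sigma_z)]:
    results[name] = {
        "hermitian": dagger(s) == s,               # s equals its conjugate transpose
        "involutory": matmul(s, s) == I2,          # s squared is the identity
        "unitary": matmul(dagger(s), s) == I2,     # conjugate transpose is the inverse
        "traceless": s[0][0] + s[1][1] == 0,       # each Pauli matrix has trace zero
    }

print(results)
```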

In linear algebra, the trace of a square matrix A, denoted tr(A), is defined to be the sum of elements on the main diagonal of A. The trace is only defined for a square matrix.

In mathematics, a square matrix is a matrix with the same number of rows and columns. An n-by-n matrix is known as a square matrix of order n. Any two square matrices of the same order can be added and multiplied.

In linear algebra, the Cayley–Hamilton theorem states that every square matrix over a commutative ring satisfies its own characteristic equation.
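For a 2 × 2 matrix the characteristic polynomial is λ² − tr(A)λ + det(A), so the theorem reduces to the trace identity A² − tr(A)A + det(A)I = 0. The following sketch checks this for one integer matrix; the matrix and the variable names are illustrative assumptions.

```python
def matmul(X, Y):
    """Multiply two 2 x 2 matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

A = [[1, 2], [3, 4]]                      # hypothetical example matrix
I2 = [[1, 0], [0, 1]]

tr = A[0][0] + A[1][1]                    # trace of A
det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
A2 = matmul(A, A)

# Evaluate the characteristic polynomial at A itself.
residual = [[A2[i][j] - tr * A[i][j] + det * I2[i][j] for j in range(2)]
            for i in range(2)]

print(residual)
```

The residual is the zero matrix, which is what the theorem predicts for any 2 × 2 matrix over a commutative ring.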

In mathematics, specifically functional analysis, a trace-class operator is a linear operator for which a trace may be defined, such that the trace is a finite number independent of the choice of basis used to compute the trace. This trace of trace-class operators generalizes the trace of matrices studied in linear algebra. All trace-class operators are compact operators.

In linear algebra, the adjugate of a square matrix A is the transpose of its cofactor matrix and is denoted by adj(A). It is also occasionally known as the adjunct matrix, or "adjoint", though the latter term today normally refers to a different concept, the adjoint operator, which for a matrix is the conjugate transpose.
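A short sketch of the definition, assuming small integer matrices and a plain Laplace expansion for the determinant (adequate for illustration, not for large matrices). It also checks the standard relation A · adj(A) = det(A) · I. The function names are assumptions made for this example.

```python
def minor(M, i, j):
    """Matrix M with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(M) if k != i]

def det(M):
    """Determinant by Laplace expansion along the first row."""
    if not M:
        return 1  # determinant of the empty matrix
    return sum((-1) ** j * M[0][j] * det(minor(M, 0, j)) for j in range(len(M)))

def adjugate(M):
    """Transpose of the cofactor matrix: entry (i, j) is the (j, i) cofactor."""
    n = len(M)
    return [[(-1) ** (i + j) * det(minor(M, j, i)) for j in range(n)]
            for i in range(n)]

def matmul(X, Y):
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

A = [[2, 0, 1], [1, 3, -1], [0, 5, 4]]    # hypothetical example matrix
d = det(A)
product = matmul(A, adjugate(A))

print(d, product)
```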

In linear algebra, the permanent of a square matrix is a function of the matrix similar to the determinant. The permanent, as well as the determinant, is a polynomial in the entries of the matrix. Both are special cases of a more general function of a matrix called the immanant.
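The similarity to the determinant is easiest to see when both are written as sums over permutations: the permanent uses the same products of entries but omits the sign of each permutation. The sketch below is a direct, factorial-time illustration of that definition, and its function names are assumptions.

```python
from itertools import permutations

def permanent(M):
    """Sum over all permutations of the products of selected entries."""
    n = len(M)
    total = 0
    for sigma in permutations(range(n)):
        term = 1
        for i in range(n):
            term *= M[i][sigma[i]]
        total += term
    return total

def determinant(M):
    """Same sum as the permanent, with each term weighted by the permutation sign."""
    n = len(M)
    total = 0
    for sigma in permutations(range(n)):
        inversions = sum(1 for i in range(n) for j in range(i + 1, n)
                         if sigma[i] > sigma[j])
        term = -1 if inversions % 2 else 1
        for i in range(n):
            term *= M[i][sigma[i]]
        total += term
    return total

M = [[1, 2], [3, 4]]                      # hypothetical example matrix
ones = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]

print(permanent(M), determinant(M))
print(permanent(ones), determinant(ones))
```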

In linear algebra, an n-by-n square matrix A is called invertible if there exists an n-by-n square matrix B such that AB = BA = I, where I denotes the n-by-n identity matrix.

In mathematics, the conjugate transpose, also known as the Hermitian transpose, of an m × n complex matrix A is an n × m matrix obtained by transposing A and applying complex conjugation to each entry. There are several notations, such as A^H or A^*, A′, or A^†.

In linear algebra, the characteristic polynomial of a square matrix is a polynomial which is invariant under matrix similarity and has the eigenvalues as roots. It has the determinant and the trace of the matrix among its coefficients. The characteristic polynomial of an endomorphism of a finite-dimensional vector space is the characteristic polynomial of the matrix of that endomorphism with respect to any basis. The characteristic equation, also known as the determinantal equation, is the equation obtained by equating the characteristic polynomial to zero.

In mathematics, the determinant of an m×m skew-symmetric matrix can always be written as the square of a polynomial in the matrix entries, a polynomial with integer coefficients that only depends on m. When m is odd, the polynomial is zero. When m is even, it is a nonzero polynomial of degree m/2, and is unique up to multiplication by ±1. The convention on skew-symmetric tridiagonal matrices, given below in the examples, then determines one specific polynomial, called the Pfaffian polynomial. The value of this polynomial, when applied to the entries of a skew-symmetric matrix, is called the Pfaffian of that matrix. The term Pfaffian was introduced by Cayley, who indirectly named them after Johann Friedrich Pfaff.
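For m = 4 the Pfaffian has the closed form a12·a34 − a13·a24 + a14·a23, and its square equals the determinant. The sketch below checks this on one integer skew-symmetric matrix; the entries and the function names are illustrative assumptions, and the determinant uses a simple Laplace expansion.

```python
def minor(M, i, j):
    """Matrix M with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(M) if k != i]

def det(M):
    """Determinant by Laplace expansion along the first row."""
    if not M:
        return 1
    return sum((-1) ** j * M[0][j] * det(minor(M, 0, j)) for j in range(len(M)))

def pfaffian_4x4(M):
    """Pfaffian of a 4 x 4 skew-symmetric matrix (indices are 0-based here)."""
    return M[0][1] * M[2][3] - M[0][2] * M[1][3] + M[0][3] * M[1][2]

# Hypothetical skew-symmetric example: S[i][j] == -S[j][i].
S = [[0, 1, 2, 3],
     [-1, 0, 4, 5],
     [-2, -4, 0, 6],
     [-3, -5, -6, 0]]

pf = pfaffian_4x4(S)
d = det(S)

print(pf, d)
```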

In matrix calculus, Jacobi's formula expresses the derivative of the determinant of a matrix A in terms of the adjugate of A and the derivative of A.
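In its usual form the formula reads d/dt det A(t) = tr(adj(A(t)) · dA/dt). The sketch below evaluates both sides for a hypothetical 2 × 2 matrix function A(t), comparing the trace expression with a central finite difference of the determinant. The function A(t), the evaluation point and the step size are all assumptions made for illustration.

```python
def A(t):
    """Hypothetical matrix-valued function of t."""
    return [[1 + t, 2 * t], [t * t, 3]]

def dA(t):
    """Entrywise derivative of A(t), worked out by hand."""
    return [[1, 2], [2 * t, 0]]

def det2(M):
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

def adj2(M):
    """Adjugate of a 2 x 2 matrix."""
    return [[M[1][1], -M[0][1]], [-M[1][0], M[0][0]]]

def trace_of_product(X, Y):
    """tr(XY) computed without forming the full product."""
    return sum(X[i][k] * Y[k][i] for i in range(2) for k in range(2))

t0 = 1
jacobi = trace_of_product(adj2(A(t0)), dA(t0))

# Central finite difference of det A(t) at t0, for comparison.
h = 1e-6
numeric = (det2(A(t0 + h)) - det2(A(t0 - h))) / (2 * h)

print(jacobi, numeric)
```

Here det A(t) = 3 + 3t − 2t³, so the exact derivative at t = 1 is 3 − 6 = −3, which matches the trace expression.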

In physics, the von Neumann entropy, named after John von Neumann, is an extension of the concept of Gibbs entropy from classical statistical mechanics to quantum statistical mechanics. For a quantum-mechanical system described by a density matrix ρ, the von Neumann entropy is S = −tr(ρ ln ρ), where tr denotes the trace and ln denotes the matrix logarithm.

In mathematics, Sylvester’s criterion is a necessary and sufficient criterion to determine whether a Hermitian matrix is positive-definite.
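Concretely, the criterion says that a Hermitian matrix is positive-definite exactly when all of its leading principal minors are positive. The sketch below applies it to real symmetric integer matrices, a special case of Hermitian matrices; the function names and example matrices are assumptions, and the determinant uses a simple Laplace expansion.

```python
def minor(M, i, j):
    """Matrix M with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(M) if k != i]

def det(M):
    """Determinant by Laplace expansion along the first row."""
    if not M:
        return 1
    return sum((-1) ** j * M[0][j] * det(minor(M, 0, j)) for j in range(len(M)))

def leading_principal_minors(M):
    """Determinants of the upper-left k x k blocks for k = 1, ..., n."""
    n = len(M)
    return [det([row[:k] for row in M[:k]]) for k in range(1, n + 1)]

def is_positive_definite(M):
    """Sylvester's criterion; assumes M is real symmetric."""
    return all(m > 0 for m in leading_principal_minors(M))

T = [[2, -1, 0], [-1, 2, -1], [0, -1, 2]]   # hypothetical example
N = [[1, 2], [2, 1]]                        # hypothetical example

print(leading_principal_minors(T), is_positive_definite(T))
print(leading_principal_minors(N), is_positive_definite(N))
```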

In linear algebra, the computation of the permanent of a matrix is a problem that is thought to be more difficult than the computation of the determinant of a matrix despite the apparent similarity of the definitions.

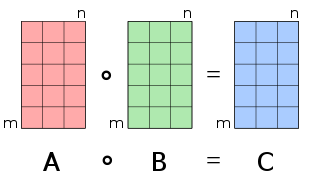

In mathematics, the Hadamard product is a binary operation that takes in two matrices of the same dimensions and returns a matrix of the multiplied corresponding elements. This operation can be thought of as a "naive matrix multiplication" and is different from the matrix product. It is attributed to, and named after, either French mathematician Jacques Hadamard or German mathematician Issai Schur.
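The difference from the ordinary matrix product is visible on a small example. The following sketch computes both for the same pair of 2 × 2 matrices; the function names and the matrices are illustrative assumptions.

```python
def hadamard(X, Y):
    """Entrywise product of two matrices with identical dimensions."""
    if len(X) != len(Y) or any(len(r) != len(s) for r, s in zip(X, Y)):
        raise ValueError("matrices must have the same dimensions")
    return [[x * y for x, y in zip(r, s)] for r, s in zip(X, Y)]

def matmul(X, Y):
    """Ordinary matrix product, shown for comparison."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]

entrywise = hadamard(A, B)
product = matmul(A, B)

print(entrywise)
print(product)
```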

In mathematics, there are many kinds of inequalities involving matrices and linear operators on Hilbert spaces. This article covers some important operator inequalities connected with traces of matrices.

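In mathematics, Fischer's inequality gives an upper bound for the determinant of a positive-semidefinite matrix whose entries are complex numbers in terms of the determinants of its principal diagonal blocks. Suppose A, C are respectively p×p, q×q positive-semidefinite complex matrices and B is a p×q complex matrix. Let M be the (p+q)×(p+q) block matrix with first block row (A, B) and second block row (B*, C), where B* is the conjugate transpose of B. If M is positive-semidefinite, then det(M) ≤ det(A) det(C).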

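In mathematics, the Hamiltonian cycle polynomial of an n×n-matrix is a polynomial in its entries, defined as the sum, over all permutations σ of {1, ..., n} that consist of exactly one cycle, of the products a(1, σ(1)) · a(2, σ(2)) ··· a(n, σ(n)) of the corresponding matrix entries.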

Rowen, Louis Halle (2008), Graduate Algebra: Noncommutative View, Graduate Studies in Mathematics, vol. 2, American Mathematical Society, p. 412, ISBN 9780821841532.