In mathematics, a series is, roughly speaking, the operation of adding infinitely many quantities, one after the other, to a given starting quantity. The study of series is a major part of calculus and its generalization, mathematical analysis. Series are used in most areas of mathematics, even for studying finite structures through generating functions. In addition to their ubiquity in mathematics, infinite series are also widely used in other quantitative disciplines such as physics, computer science, statistics and finance.

In mathematics, the Taylor series or Taylor expansion of a function is an infinite sum of terms that are expressed in terms of the function's derivatives at a single point. For most common functions, the function and the sum of its Taylor series are equal near this point. Taylor series are named after Brook Taylor, who introduced them in 1715. A Taylor series is also called a Maclaurin series when 0 is the point where the derivatives are considered, after Colin Maclaurin, who made extensive use of this special case of Taylor series in the mid-18th century.

A finite difference is a mathematical expression of the form f (x + b) − f (x + a). If a finite difference is divided by b − a, one gets a difference quotient. The approximation of derivatives by finite differences plays a central role in finite difference methods for the numerical solution of differential equations, especially boundary value problems.

In calculus, Taylor's theorem gives an approximation of a -times differentiable function around a given point by a polynomial of degree , called the -th-order Taylor polynomial. For a smooth function, the Taylor polynomial is the truncation at the order of the Taylor series of the function. The first-order Taylor polynomial is the linear approximation of the function, and the second-order Taylor polynomial is often referred to as the quadratic approximation. There are several versions of Taylor's theorem, some giving explicit estimates of the approximation error of the function by its Taylor polynomial.

In mathematics, a generating function is a way of encoding an infinite sequence of numbers by treating them as the coefficients of a formal power series. This series is called the generating function of the sequence. Unlike an ordinary series, the formal power series is not required to converge: in fact, the generating function is not actually regarded as a function, and the "variable" remains an indeterminate. Generating functions were first introduced by Abraham de Moivre in 1730, in order to solve the general linear recurrence problem. One can generalize to formal power series in more than one indeterminate, to encode information about infinite multi-dimensional arrays of numbers.

In analysis, numerical integration comprises a broad family of algorithms for calculating the numerical value of a definite integral, and by extension, the term is also sometimes used to describe the numerical solution of differential equations. This article focuses on calculation of definite integrals.

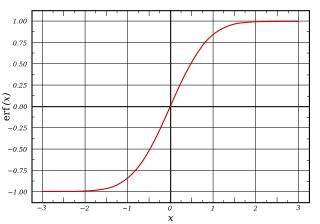

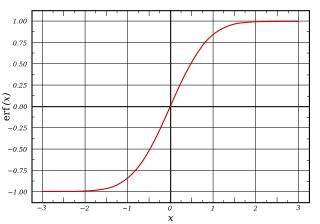

In mathematics, the error function, often denoted by erf, is a complex function of a complex variable defined as:

In numerical analysis, the Runge–Kutta methods are a family of implicit and explicit iterative methods, which include the Euler method, used in temporal discretization for the approximate solutions of simultaneous nonlinear equations. These methods were developed around 1900 by the German mathematicians Carl Runge and Wilhelm Kutta.

A Gabriel's horn is a type of geometric figure that has infinite surface area but finite volume. The name refers to the Christian tradition where the archangel Gabriel blows the horn to announce Judgment Day. The properties of this figure were first studied by Italian physicist and mathematician Evangelista Torricelli in the 17th century.

In algebra, a group ring is a free module and at the same time a ring, constructed in a natural way from any given ring and any given group. As a free module, its ring of scalars is the given ring, and its basis is the set of elements of the given group. As a ring, its addition law is that of the free module and its multiplication extends "by linearity" the given group law on the basis. Less formally, a group ring is a generalization of a given group, by attaching to each element of the group a "weighting factor" from a given ring.

In signal processing, a finite impulse response (FIR) filter is a filter whose impulse response is of finite duration, because it settles to zero in finite time. This is in contrast to infinite impulse response (IIR) filters, which may have internal feedback and may continue to respond indefinitely.

The Basel problem is a problem in mathematical analysis with relevance to number theory, concerning an infinite sum of inverse squares. It was first posed by Pietro Mengoli in 1650 and solved by Leonhard Euler in 1734, and read on 5 December 1735 in The Saint Petersburg Academy of Sciences. Since the problem had withstood the attacks of the leading mathematicians of the day, Euler's solution brought him immediate fame when he was twenty-eight. Euler generalised the problem considerably, and his ideas were taken up more than a century later by Bernhard Riemann in his seminal 1859 paper "On the Number of Primes Less Than a Given Magnitude", in which he defined his zeta function and proved its basic properties. The problem is named after Basel, hometown of Euler as well as of the Bernoulli family who unsuccessfully attacked the problem.

The Voigt profile is a probability distribution given by a convolution of a Cauchy-Lorentz distribution and a Gaussian distribution. It is often used in analyzing data from spectroscopy or diffraction.

In mathematics, the Leibniz formula for π, named after Gottfried Wilhelm Leibniz, states that

In continuum mechanics, the finite strain theory—also called large strain theory, or large deformation theory—deals with deformations in which strains and/or rotations are large enough to invalidate assumptions inherent in infinitesimal strain theory. In this case, the undeformed and deformed configurations of the continuum are significantly different, requiring a clear distinction between them. This is commonly the case with elastomers, plastically-deforming materials and other fluids and biological soft tissue.

The Engel expansion of a positive real number x is the unique non-decreasing sequence of positive integers such that

In mathematics and computational science, the Euler method is a first-order numerical procedure for solving ordinary differential equations (ODEs) with a given initial value. It is the most basic explicit method for numerical integration of ordinary differential equations and is the simplest Runge–Kutta method. The Euler method is named after Leonhard Euler, who first proposed it in his book Institutionum calculi integralis.

In numerical analysis, finite-difference methods (FDM) are a class of numerical techniques for solving differential equations by approximating derivatives with finite differences. Both the spatial domain and time interval are discretized, or broken into a finite number of steps, and the value of the solution at these discrete points is approximated by solving algebraic equations containing finite differences and values from nearby points.

In applied mathematics, discontinuous Galerkin methods (DG methods) form a class of numerical methods for solving differential equations. They combine features of the finite element and the finite volume framework and have been successfully applied to hyperbolic, elliptic, parabolic and mixed form problems arising from a wide range of applications. DG methods have in particular received considerable interest for problems with a dominant first-order part, e.g. in electrodynamics, fluid mechanics and plasma physics.

In calculus, the differential represents the principal part of the change in a function with respect to changes in the independent variable. The differential is defined by