Issues of heterogeneity in duration models can take on different forms. On the one hand, unobserved heterogeneity can play a crucial role when it comes to different sampling methods, such as stock or flow sampling. [1] On the other hand, duration models have also been extended to allow for different subpopulations, with a strong link to mixture models. Many of these models impose the assumptions that heterogeneity is independent of the observed covariates, it has a distribution that depends on a finite number of parameters only, and it enters the hazard function multiplicatively. [2]

The stock of a corporation is all of the shares into which ownership of the corporation is divided. In American English, the shares are commonly known as "stocks." A single share of the stock represents fractional ownership of the corporation in proportion to the total number of shares. This typically entitles the stockholder to that fraction of the company's earnings, proceeds from liquidation of assets, or voting power, often dividing these up in proportion to the amount of money each stockholder has invested. Not all stock is necessarily equal, as certain classes of stock may be issued for example without voting rights, with enhanced voting rights, or with a certain priority to receive profits or liquidation proceeds before or after other classes of shareholders.

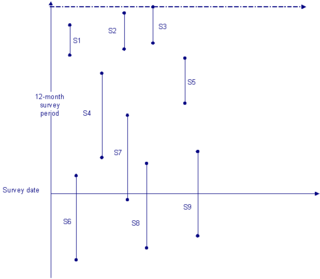

Flow sampling, in contrast to stock sampling, means we collect observations that enter the particular state of interest during a particular interval. When dealing with duration data, the data sampling method has a direct impact on subsequent analyses and inference. An example in demography would be sampling the number of people that die within a given time frame ; a popular example in economics would be the number of people leaving unemployment within a given time frame. Researchers imposing similar assumptions but using different sampling methods, can reach fundamentally different conclusions if the joint distribution across the flow and stock samples differ.

In probability theory, two events are independent, statistically independent, or stochastically independent if the occurrence of one does not affect the probability of occurrence of the other. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other.

One can define the conditional hazard as the hazard function conditional on the observed covariates and the unobserved heterogeneity. [3] In the general case, the cumulative distribution function of ti* associated with the conditional hazard is given by F(t|xi , vi ; θ). Under the first assumption above, the unobserved component can be integrated out and we obtain the cumulative distribution on the observed covariates only, i.e.

In probability theory and statistics, the cumulative distribution function (CDF) of a real-valued random variable , or just distribution function of , evaluated at , is the probability that will take a value less than or equal to .

G(t ∨ xi ; θ , ρ) = ∫ F (t ∨ xi, ν ; θ ) h ( ν ; ρ ) dν [4]

where the additional parameter ρ parameterizes the density of the unobserved component v. Now, the different estimation methods for stock or flow sampling data are available to estimate the relevant parameters.

A specific example is described by Lancaster. Assume that the conditional hazard is given by

λ(t ; xi , vi ) = vi exp (x [5] β) α t α-1

where x is a vector of observed characteristics, v is the unobserved heterogeneity part, and a normalization (often E[vi] = 1) needs to be imposed. It then follows that the average hazard is given by exp(x'β) αtα-1. More generally, it can be shown that as long as the hazard function exhibits proportional properties of the form λ ( t ; xi, vi ) = vi κ (xi ) λ0 (t), one can identify both the covariate function κ(.) and the hazard function λ(.). [6]

In statistics and applications of statistics, normalization can have a range of meanings. In the simplest cases, normalization of ratings means adjusting values measured on different scales to a notionally common scale, often prior to averaging. In more complicated cases, normalization may refer to more sophisticated adjustments where the intention is to bring the entire probability distributions of adjusted values into alignment. In the case of normalization of scores in educational assessment, there may be an intention to align distributions to a normal distribution. A different approach to normalization of probability distributions is quantile normalization, where the quantiles of the different measures are brought into alignment.

Recent examples provide a nonparametric approaches to estimating the baseline hazard and the distribution of the unobserved heterogeneity under fairly weak assumptions. [7] In grouped data, the strict exogeneity assumptions for time-varying covariates are hard to relax. Parametric forms can be imposed for the distribution of the unobserved heterogeneity, [8] even though semiparametric methods that do not specify such parametric forms for the unobserved heterogeneity are available. [9]

Grouped data are data formed by aggregating individual observations of a variable into groups, so that a frequency distribution of these groups serves as a convenient means of summarizing or analyzing the data.

In mathematics, a parametric equation defines a group of quantities as functions of one or more independent variables called parameters. Parametric equations are commonly used to express the coordinates of the points that make up a geometric object such as a curve or surface, in which case the equations are collectively called a parametric representation or parameterization of the object.