Related Research Articles

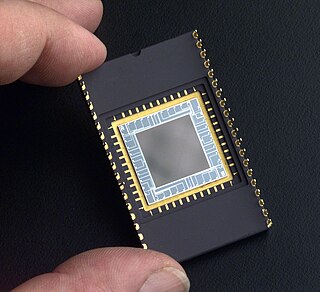

A charge-coupled device (CCD) is an integrated circuit containing an array of linked, or coupled, capacitors. Under the control of an external circuit, each capacitor can transfer its electric charge to a neighboring capacitor. CCD sensors are a major technology used in digital imaging.
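The neighbor-to-neighbor transfer described above can be illustrated with a minimal sketch. It assumes a single row of capacitors read out through one output node at the right-hand end; the function name `ccd_readout` and the list-of-numbers charge model are hypothetical simplifications, not a description of any particular device.

```python
def ccd_readout(charges):
    """Shift charge packets one capacitor at a time toward the output node.

    `charges` is a list of charge values, one per capacitor (assumed model).
    Returns the packets in the order they arrive at the output.
    """
    wells = list(charges)
    output = []
    for _ in range(len(wells)):
        # The capacitor next to the output node hands its charge to the readout.
        output.append(wells[-1])
        # Every remaining capacitor transfers its charge to its right neighbor;
        # the leftmost capacitor is left empty.
        wells = [0] + wells[:-1]
    return output


row = [5, 0, 12, 7]
print(ccd_readout(row))  # [7, 12, 0, 5]
```

The packet closest to the output arrives first, so the row is read out in reverse spatial order in this simplified model.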

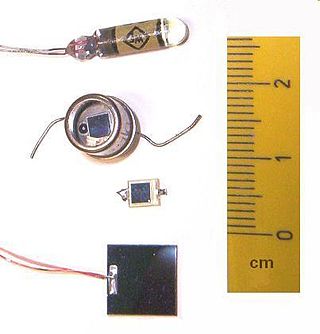

A photodiode is a semiconductor diode sensitive to photon radiation, such as visible light, infrared or ultraviolet radiation, X-rays and gamma rays. It produces an electrical current when it absorbs photons. This can be used for detection and measurement applications, or for the generation of electrical power in solar cells. Photodiodes are used in a wide range of applications throughout the electromagnetic spectrum from visible light photocells to gamma ray spectrometers.

Digital image processing is the use of a digital computer to process digital images through an algorithm. As a subcategory or field of digital signal processing, digital image processing has many advantages over analog image processing. It allows a much wider range of algorithms to be applied to the input data and can avoid problems such as the build-up of noise and distortion during processing. Since images are defined over two dimensions, digital image processing may be modeled in the form of multidimensional systems. The generation and development of digital image processing are mainly affected by three factors: first, the development of computers; second, the development of mathematics; third, the increased demand for a wide range of applications in environment, agriculture, military, industry and medical science.

A sensor is a device that produces an output signal for the purpose of detecting a physical phenomenon.

Neuromorphic computing is an approach to computing that is inspired by the structure and function of the human brain. A neuromorphic computer or chip is any device that uses physical artificial neurons to do computations. In recent times, the term neuromorphic has been used to describe analog, digital, mixed-mode analog/digital VLSI, and software systems that implement models of neural systems. Recent work has also found ways to mimic the human nervous system through liquid solutions of chemical systems.

Carver Andress Mead is an American scientist and engineer. He currently holds the position of Gordon and Betty Moore Professor Emeritus of Engineering and Applied Science at the California Institute of Technology (Caltech), having taught there for over 40 years.

Optical flow or optic flow is the pattern of apparent motion of objects, surfaces, and edges in a visual scene caused by the relative motion between an observer and a scene. Optical flow can also be defined as the distribution of apparent velocities of movement of brightness patterns in an image.
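As a rough illustration of estimating the apparent velocity of a brightness pattern, the sketch below works on a one-dimensional signal. It assumes brightness constancy (the pattern only shifts between the two samples) and solves the gradient constraint in the least-squares sense, in the spirit of gradient-based methods such as Lucas-Kanade. The function name `flow_1d` and the 1D setting are assumptions made for brevity.

```python
def flow_1d(prev, curr):
    """Estimate a single velocity (pixels per frame) for a 1D brightness signal.

    Assumes brightness constancy: ix * v + it = 0 at every interior pixel,
    solved for v by least squares over the whole signal.
    """
    num = 0.0
    den = 0.0
    for i in range(1, len(prev) - 1):
        ix = (prev[i + 1] - prev[i - 1]) / 2.0  # spatial gradient (central difference)
        it = curr[i] - prev[i]                  # temporal gradient between frames
        num += -ix * it
        den += ix * ix
    # A flat signal has no gradient, so the velocity cannot be determined.
    return num / den if den else 0.0


prev = [0, 1, 2, 3, 4, 5]    # brightness ramp
curr = [-1, 0, 1, 2, 3, 4]   # same ramp shifted one pixel to the right
print(flow_1d(prev, curr))   # 1.0
```

A positive result means the pattern moved toward higher indices. The estimate is only reliable for small shifts and textured signals.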

A single-photon avalanche diode (SPAD), also called a Geiger-mode avalanche photodiode, is a solid-state photodetector within the same family as photodiodes and avalanche photodiodes (APDs), while also being fundamentally linked with basic diode behaviours. As with photodiodes and APDs, a SPAD is based around a semiconductor p-n junction that can be illuminated with ionizing radiation such as gamma rays, X-rays, beta and alpha particles, along with a wide portion of the electromagnetic spectrum from ultraviolet (UV) through the visible wavelengths and into the infrared (IR).

A mixed-signal integrated circuit is any integrated circuit that has both analog circuits and digital circuits on a single semiconductor die. Their usage has grown dramatically with the increased use of cell phones, telecommunications, portable electronics, and automobiles with electronics and digital sensors.

Photodetectors, also called photosensors, are sensors of light or other electromagnetic radiation. There is a wide variety of photodetectors, which may be classified by mechanism of detection, such as photoelectric or photochemical effects, or by various performance metrics, such as spectral response. Semiconductor-based photodetectors typically use a p–n junction that converts photons into charge. The absorbed photons create electron–hole pairs in the depletion region. Photodiodes and phototransistors are examples of photodetectors. Solar cells convert some of the light energy absorbed into electrical energy.

In computer science and machine learning, cellular neural networks (CNN) or cellular nonlinear networks (CNN) are a parallel computing paradigm similar to neural networks, with the difference that communication is allowed between neighbouring units only. Typical applications include image processing, analyzing 3D surfaces, solving partial differential equations, reducing non-visual problems to geometric maps, modelling biological vision and other sensory-motor organs.

An image sensor or imager is a sensor that detects and conveys information used to form an image. It does so by converting the variable attenuation of light waves into signals, small bursts of current that convey the information. The waves can be light or other electromagnetic radiation. Image sensors are used in electronic imaging devices of both analog and digital types, which include digital cameras, camera modules, camera phones, optical mouse devices, medical imaging equipment, night vision equipment such as thermal imaging devices, radar, sonar, and others. As technology changes, electronic and digital imaging tends to replace chemical and analog imaging.

An active-pixel sensor (APS) is an image sensor, invented by Peter J.W. Noble in 1968, in which each pixel sensor unit cell has a photodetector and one or more active transistors. In a metal–oxide–semiconductor (MOS) active-pixel sensor, MOS field-effect transistors (MOSFETs) are used as amplifiers. There are different types of APS, including the early NMOS APS and the now much more common complementary MOS (CMOS) APS, also known as the CMOS sensor. CMOS sensors are used in digital camera technologies such as cell phone cameras, web cameras, most modern digital pocket cameras, most digital single-lens reflex cameras (DSLRs), mirrorless interchangeable-lens cameras (MILCs), and lensless imaging for cells.

Richard "Dick" Francis Lyon is an American inventor, scientist, and engineer. He is one of the two people who independently invented the first optical mouse devices in 1980. He has worked in signal processing and was a co-founder of Foveon, Inc., a digital camera and image sensor company.

Michelle Anne Mahowald was an American computational neuroscientist in the emerging field of neuromorphic engineering. In 1996 she was inducted into the Women in Technology International Hall of Fame for her development of the Silicon Eye and other computational systems. She died by suicide at age 33.

Kwabena Adu Boahen is a Ghanaian-born Professor of Bioengineering and Electrical Engineering at Stanford University. He previously taught at the University of Pennsylvania.

The Rice University Department of Electrical and Computer Engineering is one of nine academic departments at the George R. Brown School of Engineering at Rice University. Ashutosh Sabharwal is the Department Chair. Originally the Rice Department of Electrical Engineering, it was renamed in 1984 to Electrical and Computer Engineering.

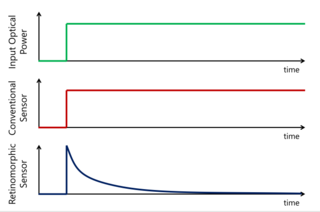

An event camera, also known as a neuromorphic camera, silicon retina or dynamic vision sensor, is an imaging sensor that responds to local changes in brightness. Event cameras do not capture images using a shutter as conventional (frame) cameras do. Instead, each pixel inside an event camera operates independently and asynchronously, reporting changes in brightness as they occur, and staying silent otherwise.
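The per-pixel behaviour described above can be approximated in software. The sketch below is a simplified simulation, not a model of any specific sensor: it assumes brightness is sampled as a sequence of frames (real event pixels are asynchronous), and that a pixel fires when its log-brightness has changed by at least a fixed `threshold` since its last event. The names `generate_events` and `threshold` and the `(t, x, y, polarity)` tuple layout are hypothetical.

```python
import math


def generate_events(frames, threshold=0.2):
    """Emit (t, x, y, polarity) tuples for pixels whose brightness changed.

    `frames` is a list of 2D lists of positive brightness values (assumed input).
    Each pixel compares its current log-brightness with the level stored at its
    own last event and stays silent if the change is below `threshold`.
    """
    height, width = len(frames[0]), len(frames[0][0])
    eps = 1e-6  # avoids log(0) for completely dark pixels
    # Per-pixel reference level, initialised from the first frame.
    ref = [[math.log(frames[0][y][x] + eps) for x in range(width)]
           for y in range(height)]
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for y in range(height):
            for x in range(width):
                level = math.log(frame[y][x] + eps)
                diff = level - ref[y][x]
                if abs(diff) >= threshold:
                    # Polarity +1 for brighter, -1 for darker.
                    events.append((t, x, y, 1 if diff > 0 else -1))
                    ref[y][x] = level  # only the firing pixel updates its reference
    return events


frames = [
    [[1.0, 1.0]],
    [[1.0, 2.0]],  # right pixel gets brighter
    [[1.0, 2.0]],  # nothing changes, so no events
    [[1.0, 0.5]],  # right pixel gets darker
]
print(generate_events(frames))  # [(1, 1, 0, 1), (3, 1, 0, -1)]
```

The left pixel never changes and therefore produces no output at all, which is the main difference from a frame camera that would report it in every frame.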

Retinomorphic sensors are a type of event-driven optical sensor that produce a signal in response to changes in light intensity, rather than to light intensity itself. This is in contrast to conventional optical sensors such as charge-coupled device (CCD) or complementary metal–oxide–semiconductor (CMOS) based sensors, which output a signal that increases with increasing light intensity. Because they respond only to movement, retinomorphic sensors could enable faster tracking of moving objects than conventional image sensors, and have potential applications in autonomous vehicles, robotics, and neuromorphic engineering.

References

- Analog VLSI and Neural Systems, by Carver Mead, 1989

- Vision Chips: Implementing Vision Algorithms With Analog VLSI Circuits, Ed. by Koch and Li, IEEE, 1995

- Analog VLSI Circuits for the Perception of Visual Motion, by Alan Stocker, Wiley and Sons, 2006

- Vision Chips, by Alireza Moini, Kluwer Academic Publishers, 2000

- E. Funatsu, K. Hara, T. Toyoda, J. Ohta & K. Kyuma, Variable-sensitivity photodetector of pn-np structure for optical neural networks, Japanese Journal of Applied Physics, Part 2 (Letters), Vol. 33, No. 1B, pp. L113-L115, January 1994.