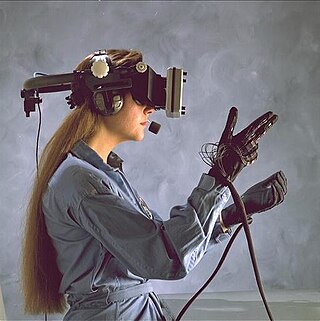

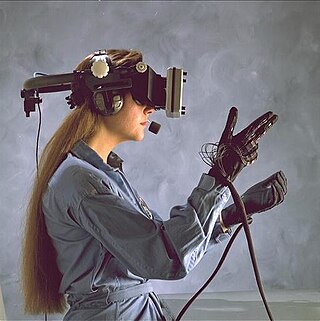

Virtual reality (VR) is a simulated experience that employs pose tracking and 3D near-eye displays to give the user an immersive feel of a virtual world. Applications of virtual reality include entertainment, education and business. Other distinct types of VR-style technology include augmented reality and mixed reality, sometimes referred to as extended reality or XR, although definitions are currently changing due to the nascence of the industry.

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making process. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls and process controls. The design considerations applicable when creating user interfaces are related to, or involve such disciplines as, ergonomics and psychology.

Augmented reality (AR) is an interactive experience that combines the real world and computer-generated content. The content can span multiple sensory modalities, including visual, auditory, haptic, somatosensory and olfactory. AR can be defined as a system that incorporates three basic features: a combination of real and virtual worlds, real-time interaction, and accurate 3D registration of virtual and real objects. The overlaid sensory information can be constructive (adding to the natural environment) or destructive (masking part of it). This experience is seamlessly interwoven with the physical world such that it is perceived as an immersive aspect of the real environment. In this way, augmented reality alters one's ongoing perception of a real-world environment, whereas virtual reality completely replaces the user's real-world environment with a simulated one.
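To make "3D registration" concrete, here is a minimal sketch of how a virtual point anchored in the world could be mapped to a pixel on the display so that it stays attached to the same real-world location as the camera moves. It assumes an idealized pinhole camera with no lens distortion and no rotation relative to the world; the names `PinholeCamera`, `world_to_camera`, and `project_point` are hypothetical and not taken from any particular AR framework.

```python
from dataclasses import dataclass


@dataclass
class PinholeCamera:
    """Idealized camera model (an assumption for illustration)."""
    focal_px: float  # focal length in pixels, assumed equal in x and y
    cx: float        # principal point x, in pixels
    cy: float        # principal point y, in pixels


def world_to_camera(point_world, camera_position):
    """Express a world point in the camera frame.

    Assumes the camera axes are aligned with the world axes (translation
    only); a real tracker would also apply the camera's rotation.
    """
    return tuple(p - c for p, c in zip(point_world, camera_position))


def project_point(camera, point_cam):
    """Project a camera-frame point (x right, y down, z forward) to pixels.

    Returns None when the point lies behind the camera.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    u = camera.focal_px * x / z + camera.cx
    v = camera.focal_px * y / z + camera.cy
    return (u, v)


camera = PinholeCamera(focal_px=800.0, cx=320.0, cy=240.0)
anchor = (0.5, 0.0, 2.0)  # virtual object fixed 2 m ahead, 0.5 m to the right

# The same world anchor lands on different pixels as the tracked camera moves.
pixel_at_origin = project_point(camera, world_to_camera(anchor, (0.0, 0.0, 0.0)))
pixel_after_move = project_point(camera, world_to_camera(anchor, (0.5, 0.0, 0.0)))
```

Re-running the projection every frame with the latest tracked camera pose is what keeps the overlay visually fixed to the real scene in this simplified model.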

Haptic technology is technology that can create an experience of touch by applying forces, vibrations, or motions to the user. These technologies can be used to create virtual objects in a computer simulation, to control virtual objects, and to enhance remote control of machines and devices (telerobotics). Haptic devices may incorporate tactile sensors that measure forces exerted by the user on the interface. The word haptic, from the Greek: ἁπτικός (haptikos), means "tactile, pertaining to the sense of touch". Simple haptic devices are common in the form of game controllers, joysticks, and steering wheels.
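As an illustration of how applied forces can make a virtual object feel solid, the sketch below uses a simple penalty (spring) model for a virtual wall: once the user's probe passes the wall surface, a force proportional to the penetration depth pushes it back out. This is one common textbook approach rather than a method described above, and the function name `wall_force`, the one-dimensional setup, and the stiffness value are all assumptions chosen for illustration.

```python
def wall_force(probe_x, wall_x=0.0, stiffness=500.0):
    """Return the force in newtons along +x that pushes a probe out of a wall.

    The wall is assumed to occupy the region x < wall_x. Positions are in
    metres and stiffness is in N/m (hypothetical value).
    """
    penetration = wall_x - probe_x
    if penetration <= 0:
        return 0.0  # probe is in free space: no force is rendered
    return stiffness * penetration  # Hooke's law: force grows with depth


free_space = wall_force(0.01)   # probe 1 cm in front of the wall
in_contact = wall_force(-0.002) # probe 2 mm inside the wall
```

In a real device this calculation would run in a fast control loop, with the probe position read from the device's sensors and the resulting force sent to its actuators.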

Telepresence refers to a set of technologies that allow a person to feel as if they were present, or to give the appearance or effect of being present via telerobotics, at a place other than their true location.

Computer-mediated reality refers to the ability to add information to, subtract information from, or otherwise manipulate one's perception of reality through the use of a wearable computer or hand-held device such as a smartphone.

Mixed reality (MR) is a term used to describe the merging of a real-world environment and a computer-generated one. Physical and virtual objects may co-exist in mixed reality environments and interact in real time.

A tangible user interface (TUI) is a user interface in which a person interacts with digital information through the physical environment. The initial name was Graspable User Interface, which is no longer used. The purpose of TUI development is to empower collaboration, learning, and design by giving physical forms to digital information, thus taking advantage of the human ability to grasp and manipulate physical objects and materials.

Sensory substitution is a change of the characteristics of one sensory modality into stimuli of another sensory modality.

Enactive interfaces are interactive systems that allow organization and transmission of knowledge obtained through action. Examples are interfaces that couple a human with a machine to do things usually done unaided, such as shaping a three-dimensional object using multiple modality interactions with a database, or using interactive video to allow a student to visually engage with mathematical concepts. Enactive interface design can be approached through the idea of raising awareness of affordances, that is, optimization of the awareness of possible actions available to someone using the enactive interface. This optimization involves visibility, affordance, and feedback.

A virtual fixture is an overlay of augmented sensory information upon a user's perception of a real environment in order to improve human performance in both direct and remotely manipulated tasks. Developed in the early 1990s by Louis Rosenberg at the U.S. Air Force Research Laboratory (AFRL), Virtual Fixtures was a pioneering platform in virtual reality and augmented reality technologies.

Immersion into virtual reality (VR) is a perception of being physically present in a non-physical world. The perception is created by surrounding the user of the VR system in images, sound or other stimuli that provide an engrossing total environment.

A projection augmented model (PA model) is an element sometimes employed in virtual reality systems. It consists of a physical three-dimensional model onto which a computer image is projected to create a realistic-looking object. Importantly, the physical model has the same geometric shape as the object that the PA model depicts.

Haptic perception means literally the ability "to grasp something". Perception in this case is achieved through the active exploration of surfaces and objects by a moving subject, as opposed to passive contact by a static subject during tactile perception.

In computing, 3D interaction is a form of human-machine interaction where users are able to move and perform interaction in 3D space. Both human and machine process information where the physical position of elements in the 3D space is relevant.

Affective haptics is an emerging area of research that focuses on the study and design of devices and systems that can elicit, enhance, or influence the emotional state of a human by means of the sense of touch. The research field originated with papers by Dzmitry Tsetserukou and Alena Neviarouskaya on affective haptics and on a real-time communication system with rich emotional and haptic channels. Driven by the motivation to enhance social interactivity and the emotionally immersive experience of users of real-time messaging and of virtual and augmented reality, they proposed the idea of reinforcing (intensifying) one's own feelings and reproducing (simulating) the emotions felt by the partner. Four basic haptic (tactile) channels governing our emotions can be distinguished:

- physiological changes

- physical stimulation

- social touch

- emotional haptic design.

Haptic memory is the form of sensory memory specific to touch stimuli. Haptic memory is used regularly when assessing the necessary forces for gripping and interacting with familiar objects. It may also influence one's interactions with novel objects of an apparently similar size and density. Similar to visual iconic memory, traces of haptically acquired information are short-lived and prone to decay after approximately two seconds. Haptic memory is best for stimuli applied to areas of the skin that are more sensitive to touch. Haptics involves at least two subsystems: cutaneous, or everything skin related, and kinesthetic, or joint angle and the relative location of the body. Haptics generally involves active, manual examination and is quite capable of processing physical traits of objects and surfaces.

A peripheral head-mounted display (PHMD) is a visual display mounted to the user's head that sits in the periphery of the user's field of view (FOV), that is, in peripheral vision. The actual position of the mounting is considered irrelevant as long as the display does not cover the entire FOV. While a PHMD provides an additional, always-available visual output channel, it does not limit the user in performing real-world tasks.

Ken Hinckley is an American computer scientist and inventor. He is a senior principal research manager at Microsoft Research. He is known for his research in human-computer interaction, specifically on sensing techniques, pen computing, and cross-device interaction.

Vincent Hayward was an engineer specializing in touch and haptics. He was a professor at Sorbonne University, Institute of Intelligent Systems and Robotics (ISIR), where from 2008 he led a team dedicated to the study of haptic perception and the creation of tactile stimulation devices. In 2020, he was elected to the French Academy of Sciences.