Transcription in the linguistic sense is the systematic representation of spoken language in written form. The source can either be utterances or preexisting text in another writing system.

Text mining, text data mining (TDM) or text analytics is the process of deriving high-quality information from text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources." Written resources may include websites, books, emails, reviews, and articles. High-quality information is typically obtained by identifying patterns and trends through means such as statistical pattern learning. According to Hotho et al. (2005), there are three different perspectives on text mining: information extraction, data mining, and knowledge discovery in databases (KDD). Text mining usually involves structuring the input text, deriving patterns within the structured data, and finally evaluating and interpreting the output. 'High quality' in text mining usually refers to some combination of relevance, novelty, and interest. Typical text mining tasks include text categorization, text clustering, concept/entity extraction, production of granular taxonomies, sentiment analysis, document summarization, and entity relation modeling.
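The three stages named above (structuring the input, deriving patterns, and interpreting the output) can be illustrated with a minimal Python sketch. The stop-word list, the sample reviews, and the function names are all assumptions made for illustration; real text mining systems typically use far richer preprocessing and statistical models.

```python
import re
from collections import Counter

# Hypothetical stop-word list, kept tiny for illustration only.
STOP_WORDS = {"the", "a", "is", "and", "of", "was", "but"}


def tokenize(text):
    """Structure raw text as a list of lowercase word tokens."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def document_frequency(documents):
    """Derive a simple pattern: how many documents contain each term."""
    counts = Counter()
    for doc in documents:
        # A set ensures each term is counted once per document.
        counts.update(set(tokenize(doc)))
    return counts


# Invented sample data standing in for "written resources".
reviews = [
    "The battery life is great and the screen is great",
    "Great screen but the battery was weak",
    "The delivery was slow",
]

df = document_frequency(reviews)

# Interpretation step: keep terms that recur across at least two documents.
frequent = sorted(term for term, n in df.items() if n >= 2)
print(frequent)
```

Document frequency is only one of many possible patterns; it is used here because it needs nothing beyond the standard library.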

Content analysis is the study of documents and communication artifacts, which might be texts of various formats, pictures, audio or video. Social scientists use content analysis to examine patterns in communication in a replicable and systematic manner. One of the key advantages of using content analysis to analyse social phenomena is its non-invasive nature, in contrast to simulating social experiences or collecting survey answers.

Analytics is the systematic computational analysis of data or statistics. It is used for the discovery, interpretation, and communication of meaningful patterns in data. It also entails applying data patterns toward effective decision-making. It can be valuable in areas rich with recorded information; analytics relies on the simultaneous application of statistics, computer programming, and operations research to quantify performance.

Data management comprises all disciplines related to handling data as a valuable resource. It is the practice of managing an organization’s data so it can be analyzed for decision making.

The Biocomplexity Institute of Virginia Tech is a research organization specializing in bioinformatics, computational biology, and systems biology. The institute has more than 250 personnel, including over 50 tenured and research faculty. Research at the institute involves collaboration in diverse disciplines such as mathematics, computer science, biology, plant pathology, biochemistry, systems biology, statistics, economics, synthetic biology and medicine. The institute develops -omic and bioinformatic tools and databases that can be applied to the study of human, animal and plant diseases as well as the discovery of new vaccine, drug and diagnostic targets.

Lev Manovich is an artist, an author and a theorist of digital culture. He is a Distinguished Professor at the Graduate Center of the City University of New York. Manovich played a key role in creating four new research fields: new media studies (1991-), software studies (2001-), cultural analytics (2007-) and AI aesthetics (2018-). Manovich's current research focuses on generative media, AI culture, digital art, and media theory.

A business analyst (BA) is a person who processes, interprets and documents business processes, products, services and software through analysis of data. The role of a business analyst is to ensure business efficiency increases through their knowledge of both IT and business function.

Web analytics is the measurement, collection, analysis, and reporting of web data to understand and optimize web usage. Web analytics is not just a process for measuring web traffic but can also be used as a tool for business and market research and to assess and improve website effectiveness. Web analytics applications can also help companies measure the results of traditional print or broadcast advertising campaigns. It can be used to estimate how traffic to a website changes after launching a new advertising campaign. Web analytics provides information about the number of visitors to a website and the number of page views, and can be used to create user behavior profiles. It helps gauge traffic and popularity trends, which is useful for market research.
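As a rough illustration of the two basic measures mentioned above, the number of visitors and the number of page views, the following Python sketch aggregates a handful of hit records. The record layout (a visitor identifier paired with a page path) and the function name `summarize` are hypothetical; real analytics tools define visitors and sessions in more elaborate ways.

```python
from collections import Counter

# Hypothetical hit records: (visitor_id, page_path).
hits = [
    ("v1", "/home"),
    ("v2", "/home"),
    ("v1", "/pricing"),
    ("v3", "/home"),
    ("v1", "/home"),
]


def summarize(records):
    """Return total views, per-page view counts, and distinct visitor count."""
    page_views = Counter(path for _, path in records)
    unique_visitors = len({visitor for visitor, _ in records})
    return {
        "total_views": len(records),
        "page_views": dict(page_views),
        "unique_visitors": unique_visitors,
    }


summary = summarize(hits)
print(summary)
```

Running the same summary on records from before and after a campaign launch would give a simple estimate of how traffic changed.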

Unstructured data is information that either does not have a pre-defined data model or is not organized in a pre-defined manner. Unstructured information is typically text-heavy, but may contain data such as dates, numbers, and facts as well. This results in irregularities and ambiguities that make it difficult to understand using traditional programs as compared to data stored in fielded form in databases or annotated in documents.
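The following Python sketch shows one simple way dates and numbers embedded in free text might be pulled into fielded form. The sample note, the two patterns, and the function name `extract_fields` are assumptions for illustration; the patterns only recognize ISO-style dates and dollar amounts, so other formats would be missed, which reflects the irregularity described above.

```python
import re

# Assumed formats: ISO dates (YYYY-MM-DD) and dollar amounts such as $1200.50.
ISO_DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
AMOUNT = re.compile(r"\$\d+(?:\.\d{2})?")

# Invented free-text note standing in for unstructured data.
note = (
    "Met the supplier on 2021-03-15; they quoted $1200.50 "
    "and promised delivery by 2021-04-01."
)


def extract_fields(text):
    """Collect the dates and amounts found in a piece of free text."""
    return {
        "dates": ISO_DATE.findall(text),
        "amounts": AMOUNT.findall(text),
    }


fields = extract_fields(note)
print(fields)
```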

Digital humanities (DH) is an area of scholarly activity at the intersection of computing or digital technologies and the disciplines of the humanities. It includes the systematic use of digital resources in the humanities, as well as the analysis of their application. DH can be defined as new ways of doing scholarship that involve collaborative, transdisciplinary, and computationally engaged research, teaching, and publishing. It brings digital tools and methods to the study of the humanities with the recognition that the printed word is no longer the main medium for knowledge production and distribution.

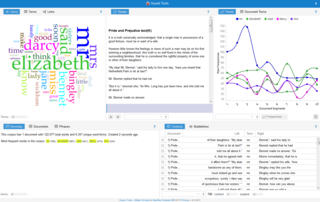

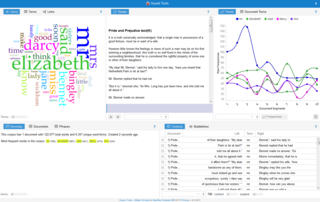

TAPoR is a gateway that highlights tools and code snippets usable for textual criticism of all types. The project is housed at the University of Alberta, and is currently led by Geoffrey Rockwell, Stéfan Sinclair, Kirsten C. Uszkalo, and Milena Radzikowska. Users of the portal explore tools to use in their research, and can rate, review, and comment on tools, browse curated lists of recommended tools, and add tags to tools. Tool pages on TAPoR consist of a short description, authorial information, a screenshot of the tool, tags, suggested related tools, and user ratings and comments. Code snippet pages also contain an excerpt of code and a link to the full code's location online.

The Applied Research in Patacriticism (ARP) was a digital humanities lab based at the University of Virginia founded and run by Jerome McGann and Johanna Drucker. ARP's open-source tools include Juxta, IVANHOE, and Collex. Collex is the social software and faceted browsing backbone of the NINES federation. ARP was funded by the Mellon Foundation.

Digital history is the use of digital media to further historical analysis, presentation, and research. It is a branch of the digital humanities and an extension of quantitative history, cliometrics, and computing. Digital history commonly takes one of two forms: digital public history, concerned primarily with engaging online audiences with historical content, and digital research methods, which further academic research. Digital history outputs include digital archives, online presentations, data visualizations, interactive maps, timelines, audio files, and virtual worlds that make history more accessible to the user. Recent digital history projects focus on creativity, collaboration, and technical innovation, as well as text mining, corpus linguistics, network analysis, 3D modeling, and big data analysis. By utilizing these resources, the user can rapidly develop new analyses that can link to, extend, and bring to life existing histories.

Cultural analytics refers to the use of computational, visualization, and big data methods for the exploration of contemporary and historical cultures. While digital humanities research has focused on text data, cultural analytics has a particular focus on massive cultural data sets of visual material – both digitized visual artifacts and contemporary visual and interactive media. Taking on the challenge of how to best explore large collections of rich cultural content, cultural analytics researchers developed new methods and intuitive visual techniques that rely on high-resolution visualization and digital image processing. These methods are used to address existing research questions in the humanities, to explore new questions, and to develop new theoretical concepts that fit the mega-scale of digital culture in the early 21st century.

Computer-assisted qualitative data analysis software (CAQDAS) offers tools that assist with qualitative research such as transcription analysis, coding and text interpretation, recursive abstraction, content analysis, discourse analysis, grounded theory methodology, etc.

Computational musicology is an interdisciplinary research area between musicology and computer science. Computational musicology includes any disciplines that use computation in order to study music. It includes sub-disciplines such as mathematical music theory, computer music, systematic musicology, music information retrieval, digital musicology, sound and music computing, and music informatics. As this area of research is defined by the tools that it uses and its subject matter, research in computational musicology intersects with both the humanities and the sciences. The use of computers to study and analyze music generally began in the 1960s, although musicians have been using computers to assist them in the composition of music since the 1950s. Today, computational musicology encompasses a wide range of research topics dealing with the multiple ways music can be represented.

The Maryland Institute for Technology in the Humanities (MITH) is an international research center that works with humanities in the 21st century. A collaboration among the University of Maryland College of Arts and Humanities, Libraries, and Office of Information Technology, MITH cultivates research agendas clustered around digital tools, text mining and visualization, and the creation and preservation of electronic literature, digital games and virtual worlds.

The Index Thomisticus was a digital humanities project begun in the 1940s that created a concordance to 179 texts centering around Thomas Aquinas. Led by Roberto Busa, the project indexed 10,631,980 words over the course of 34 years, initially onto punched cards. It is considered a pioneering project in the field of digital humanities.
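A concordance lists each word of a text together with the places where it occurs, usually with some surrounding context. The Python sketch below builds a small keyword-in-context index of that kind. It is only an illustration of the general idea, not a reconstruction of the project's actual method; the sample sentence, the context width, and the function name `build_concordance` are invented for the example.

```python
import re
from collections import defaultdict


def build_concordance(text, width=2):
    """Map each word to its occurrences, shown with `width` words of context."""
    words = re.findall(r"[a-z]+", text.lower())
    index = defaultdict(list)
    for i, word in enumerate(words):
        left = words[max(0, i - width):i]
        right = words[i + 1:i + 1 + width]
        # The keyword is upper-cased so it stands out within its context.
        index[word].append(" ".join(left + [word.upper()] + right))
    return dict(index)


# Invented sample sentence, not taken from the indexed texts.
sample = "the end of man is the good and the good is the end"
concordance = build_concordance(sample)

for line in concordance["good"]:
    print(line)
```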